Scaling Microservices with an Event Stream

In Building Microservices, Sam Newman explains why services choreography may be a more appropriate integration model for supporting complex business processes across domains.

In this article, I would like to stress the challenges of using a point-to-point integration model, and present services choreography as the foundation of a more extensible microservices architecture, in which services are highly cohesive and loosely coupled.

Services orchestration from the trenches

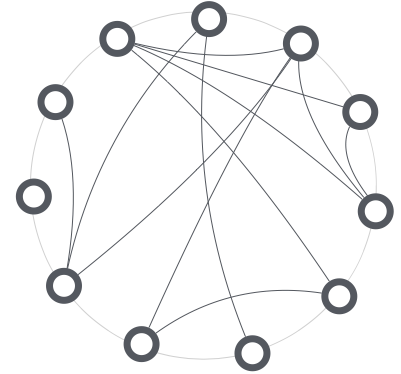

Last year I participated in a microservices project where code changes became harder and harder because we were making asynchronous point-to-point calls between services. The dependency graph of the eleven microservices we released to production is illustrated in the diagram below. Each node represents a service, and each edge represents a direct HTTP call from one service to another.

Dependency graph in a real world orchestrated microservice project

This approach introduced a great deal of complexity when implementing business transactions involving several services, so we struggled to continuously deliver changes in an efficient and safe manner.

When a business transaction spanned several services, handling a failure in any participating service required careful consideration: was the failing service essential to the transaction? If it was, the transaction had to abort and return a meaningful error to the consumer. If, on the other hand, the system could recover from the failure, we let the transaction succeed.

Our testing strategy required dependent services to be stubbed so that we could contract-test [1] each service in isolation from the others. Each service had a stub, with its own code repository and GoCD pipelines. Stubbing every service dramatically increased the number of services to manage, and we had to create dedicated environments in which each service depended only on stubs for contract testing.

For some of our most complex services, we also relied on the browser-based tests of the client application to validate their integration and to make sure we had not introduced unforeseen side effects when executing a transaction against real (not stubbed) services. Tight coupling between services prevented us from releasing them independently of one another. We ended up with a “monolithic” release process in which all services were deployed simultaneously, alongside the client application.

Services Choreography with an Event Stream

Some of the issues we faced with point-to-point integration could have been avoided by decoupling services and narrowing the boundaries of each business transaction. A model in which an upstream service publishes events and downstream services subscribe to those events provides the required level of isolation. The diagram below shows a number of microservices integrated through an event stream.

Dependency graph illustrating the concept of a fully choreographed set of microservices
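To make the publish/subscribe model concrete, here is a minimal in-memory sketch of an event stream offering `publish`, `subscribe` and `history` operations, in the spirit of the calls used in this article's snippets. The `EventStore` class is hypothetical: it is not the API of the jspubsub repository or of any particular broker. Unlike the article's snippets, handlers here receive the whole event (including a generated `id`), and delivery is synchronous for simplicity, whereas a real event stream delivers asynchronously and persists events durably.

```javascript
// A minimal, in-memory event stream (hypothetical EventStore class).
// Publishers append events; subscribers register a handler per event type.
// Delivery is synchronous to keep the sketch simple.
class EventStore {
  constructor() {
    this.log = [];         // append-only history of every published event
    this.subscribers = {}; // event type -> array of handler functions
    this.nextId = 1;       // monotonically increasing event ID
  }

  // Append an event to the log and notify every subscriber of its type.
  publish(type, data) {
    const event = { id: this.nextId++, type, data };
    this.log.push(event);
    for (const handler of this.subscribers[type] || []) {
      handler(event);
    }
    return event;
  }

  // Register a handler for future events of the given type.
  subscribe(type, handler) {
    (this.subscribers[type] = this.subscribers[type] || []).push(handler);
  }

  // Replay every past event of the given type, in publication order.
  history(type, handler) {
    this.log.filter((e) => e.type === type).forEach((e) => handler(e));
  }
}

// Usage: the publisher knows nothing about who consumes its events.
const store = new EventStore();
const welcomed = [];
store.subscribe('customer_created', (e) => welcomed.push(e.data.name));
store.publish('customer_created', { name: 'Ada' });
console.log(welcomed); // [ 'Ada' ]
```

The publisher only appends to the log; adding a subscriber never requires touching publishing code, which is the isolation property the diagram illustrates.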

To explain the idea, let’s reuse Sam’s example of a workflow at MusicCorp [2], in which three services participate in the customer creation process. In an orchestrated approach, when a new customer registers, the customer service implements the entire customer creation workflow: it saves the new customer's details and calls the downstream services so that the customer receives a welcome email and gets her first membership status points.
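For contrast, here is a rough sketch of the orchestrated version of this workflow, where the customer service calls each downstream service itself and must decide what each failure means. The `emailService` and `loyaltyService` clients are hypothetical stand-ins for direct HTTP calls, and treating status points as essential and the welcome email as non-essential is an assumption made purely for illustration.

```javascript
// Orchestration sketch: the customer service drives the whole workflow.
// emailService and loyaltyService are hypothetical clients standing in for
// direct HTTP calls to the downstream services.
function createCustomer(customer, customers, emailService, loyaltyService) {
  customers.push(customer);

  // Assumption: status points are essential, so a failure aborts the
  // transaction and a meaningful error is returned to the consumer.
  try {
    loyaltyService.addStatusPoints(customer);
  } catch (err) {
    customers.pop(); // roll back the local change
    return { ok: false, error: 'could not award status points: ' + err.message };
  }

  // Assumption: the welcome email is non-essential, so its failure is
  // tolerated and the transaction still succeeds.
  try {
    emailService.sendWelcomeEmail(customer);
  } catch (err) {
    console.warn('welcome email failed, continuing:', err.message);
  }
  return { ok: true };
}

// Usage: the email service is down, but the customer is still created.
const customers = [];
const result = createCustomer(
  { name: 'Ada' },
  customers,
  { sendWelcomeEmail() { throw new Error('SMTP down'); } },
  { addStatusPoints() {} }
);
console.log(result.ok, customers.length); // true 1
```

Every new participant in the workflow means another call, another failure policy, and another stub to test against in this style.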

In an event-based approach, the responsibility for what happens after a new customer is created shifts to the downstream services. The JavaScript code snippet below shows how a customer service [3] can publish a customer_created event:

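```javascript
// Customer service: store the new customer, then announce it on the event stream.
// `app` is an Express app with JSON body parsing; `customers` and `publish`
// are defined elsewhere in the service.
app.post('/', function(req, res) {
  customers.push(req.body);
  var event = { type: 'customer_created', data: req.body };
  publish(event);
  res.sendStatus(200);
});
```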

The boundaries of the customer creation transaction are confined to the customer service, whose responsibility is simply to add the new customer and let the world know about it. Any downstream service, such as the email service below, can then subscribe to the stream and be notified asynchronously whenever such an event is published.

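```javascript
// Email service: once listening, subscribe to customer_created events and
// send a welcome email for each new customer. `http`, `eventStore` and
// `sendWelcomeEmail` are defined elsewhere in the service.
http.listen(3001, function() {
  eventStore.subscribe('customer_created', function(customer) {
    sendWelcomeEmail(customer);
  });
  console.log('email service listening on *:3001');
});
```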
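Adding another participant to the workflow, such as the loyalty service, requires no change to the customer service. The sketch below uses Node's built-in `EventEmitter` as an in-process stand-in for the event stream; the loyalty handler, its 100-point welcome bonus, and the in-memory `statusPoints` map are hypothetical.

```javascript
const { EventEmitter } = require('events');

// In-process stand-in for the event stream; a real deployment would use a
// broker shared by separately deployed services.
const stream = new EventEmitter();

// Email service: subscribes to customer_created.
const sentEmails = [];
stream.on('customer_created', (customer) => {
  sentEmails.push(customer.email); // stand-in for sendWelcomeEmail(customer)
});

// Loyalty service: added later, subscribes to the same event without any
// change to the customer service. The 100-point bonus is hypothetical.
const statusPoints = new Map();
stream.on('customer_created', (customer) => {
  statusPoints.set(customer.email, 100);
});

// Customer service: publishes the event and knows nothing about subscribers.
stream.emit('customer_created', { email: 'ada@example.com' });

console.log(sentEmails.length, statusPoints.get('ada@example.com')); // 1 100
```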

Scale with errors in mind

Handling endpoint failures, which has to be carefully handcrafted with point-to-point integration, is now pushed to the messaging system. The messaging system itself, and the choreography of services around it, therefore need to be built with these failures in mind.
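One common way for a messaging layer to absorb subscriber failures is to retry delivery a bounded number of times and then park the event for later inspection in a "dead-letter" list. The `deliver` function below is a hypothetical sketch of that idea, not the behaviour of any specific broker; retries are immediate here, whereas real systems usually wait, often with increasing back-off, between attempts.

```javascript
// Deliver an event to a subscriber, retrying on failure. After maxAttempts
// failures the event is moved to a dead-letter list instead of being lost.
function deliver(event, handler, deadLetters, maxAttempts = 3) {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      handler(event);
      return attempt; // number of attempts it took to succeed
    } catch (err) {
      if (attempt === maxAttempts) {
        deadLetters.push({ event, error: err.message });
      }
    }
  }
  return 0; // 0 signals that delivery ultimately failed
}

// Usage: a flaky handler that fails twice, then succeeds.
let calls = 0;
const flaky = () => { if (++calls < 3) throw new Error('temporarily down'); };
const deadLetters = [];
console.log(deliver({ id: 7, type: 'customer_created' }, flaky, deadLetters)); // 3
console.log(deadLetters.length); // 0
```

Because a handler may run more than once, subscribers in this style need to tolerate duplicate deliveries.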

If a service is down for a short period of time, an event store with durable subscriptions will typically re-post the missed events as soon as the subscriber service comes back up. However, if a service is down for a longer period, it must reload the history of past events and check which ones it has missed, as shown in the code snippet below.

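```javascript
// Email service after a long outage: replay the history of customer_created
// events and send a welcome email only to customers who have not received one.
// `http`, `eventStore`, `hasWelcomeEmail` and `sendWelcomeEmail` are defined
// elsewhere in the service.
http.listen(3001, function() {
  eventStore.history('customer_created', function(customer) {
    if (!hasWelcomeEmail(customer)) {
      sendWelcomeEmail(customer);
    }
  });
  console.log('email service listening on *:3001');
});
```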
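One way to implement a check like `hasWelcomeEmail` is to record which events have already been handled, which also makes the handler safe when the same event is delivered twice. This sketch assumes each event carries a unique `id` and that the history is an array in publication order; `catchUp`, `processed` and the `order_placed` event type are hypothetical, and in practice the processed set would live in the email service's own durable storage rather than in memory.

```javascript
// Idempotent catch-up (hypothetical helper): replay past customer_created
// events and skip any whose ID has already been processed. `processed`
// would normally be persisted by the email service; here it is a Set.
function catchUp(history, processed, sendWelcomeEmail) {
  let sent = 0;
  for (const event of history) {
    if (event.type !== 'customer_created') continue;
    if (processed.has(event.id)) continue; // already handled before the outage
    sendWelcomeEmail(event.data);
    processed.add(event.id); // record only after the email was sent
    sent++;
  }
  return sent;
}

const history = [
  { id: 1, type: 'customer_created', data: { email: 'ada@example.com' } },
  { id: 2, type: 'order_placed', data: { orderId: 42 } }, // hypothetical type
  { id: 3, type: 'customer_created', data: { email: 'bob@example.com' } },
];
const processed = new Set([1]); // event 1 was handled before the outage
const outbox = [];
const send = (customer) => outbox.push(customer.email);

console.log(catchUp(history, processed, send)); // 1
console.log(catchUp(history, processed, send)); // 0 (replaying again is harmless)
```

Recording the ID only after the email is sent gives at-least-once behaviour: a crash between the two steps could send a duplicate email, which is usually preferable to silently losing one.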

To track down errors in processing an event, a common pattern is to use the event ID as a correlation ID across all services participating in the workflow for that event. When an event is posted, the upstream service logs it with its unique ID: for instance, the customer service logs the customer_created event with event_id 2987. Each resulting transaction in a downstream service is then logged with a reference to the original event: the loyalty service, for example, logs the add_status_point transaction with transaction_id 2987.0987.
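A sketch of this logging convention, assuming structured log entries and a transaction ID of the form `<event_id>.<suffix>`, as in the 2987.0987 example. The `makeLogger` helper and the log field names are hypothetical, and the article does not say how the suffix is generated, so here it is simply a zero-padded per-service counter.

```javascript
// Correlation-ID logging sketch (hypothetical helper): the upstream service
// logs the event with its ID, and each downstream transaction embeds that
// ID as the prefix of its own transaction_id.
function makeLogger(service, sink) {
  let seq = 0; // per-service counter used for the transaction suffix (assumption)
  return {
    event(type, eventId) {
      sink.push({ service, type, event_id: String(eventId) });
    },
    transaction(type, eventId) {
      seq++;
      const transactionId = `${eventId}.${String(seq).padStart(4, '0')}`;
      sink.push({ service, type, transaction_id: transactionId });
      return transactionId;
    },
  };
}

const logs = [];
makeLogger('customer', logs).event('customer_created', 2987);
const id = makeLogger('loyalty', logs).transaction('add_status_point', 2987);
console.log(id); // 2987.0001
logs.forEach((entry) => console.log(JSON.stringify(entry)));
```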

If each downstream service logs its transaction in relation to the triggering event, then by aggregating the logs of all your microservices it becomes easy to draw a graph of all downstream transactions for an event and to check that they all completed successfully.
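Once logs from all services are collected in one place, grouping transactions by the event ID prefix of their `transaction_id` yields the downstream transactions for each event. This is a minimal sketch using the `event_id`/`transaction_id` convention described above; the log entry shape, the `send_welcome_email` transaction type, the list of expected transaction types, and the sample entries for event 2988 are all invented for illustration.

```javascript
// Group downstream transactions by the event that triggered them, then report
// which expected transaction types are missing for each event.
function missingTransactions(logs, expectedTypes) {
  const byEvent = new Map(); // event_id -> Set of transaction types seen
  for (const entry of logs) {
    if (entry.event_id) {
      if (!byEvent.has(entry.event_id)) byEvent.set(entry.event_id, new Set());
    } else if (entry.transaction_id) {
      const eventId = entry.transaction_id.split('.')[0];
      if (!byEvent.has(eventId)) byEvent.set(eventId, new Set());
      byEvent.get(eventId).add(entry.type);
    }
  }
  const report = {};
  for (const [eventId, seen] of byEvent) {
    const missing = expectedTypes.filter((t) => !seen.has(t));
    if (missing.length > 0) report[eventId] = missing;
  }
  return report;
}

// Sample aggregated logs (invented data).
const logs = [
  { service: 'customer', type: 'customer_created', event_id: '2987' },
  { service: 'loyalty', type: 'add_status_point', transaction_id: '2987.0987' },
  { service: 'customer', type: 'customer_created', event_id: '2988' },
  { service: 'email', type: 'send_welcome_email', transaction_id: '2988.0012' },
  { service: 'loyalty', type: 'add_status_point', transaction_id: '2988.0988' },
];
const report = missingTransactions(logs, ['add_status_point', 'send_welcome_email']);
console.log(JSON.stringify(report)); // {"2987":["send_welcome_email"]}
```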

Beyond services integration

I strongly recommend using events to avoid some of the pitfalls of point-to-point service integration, but events also bring other notable benefits.

One benefit is the ability to build cross-domain data aggregates without leaking one service's domain model into another. In a database-centric application, dedicated views can be used to tailor datasets for presentation purposes. In an event-driven world, it is simply a matter of creating a dedicated service that subscribes to the relevant events, builds the aggregate, and exposes it to its consumers.
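A minimal sketch of such an aggregate service: it subscribes to events from two domains and maintains its own denormalized view, so neither upstream service exposes its internal model. Node's built-in `EventEmitter` stands in for the event stream, and the `status_points_added` event, its fields, and the `customerProfiles` view are hypothetical.

```javascript
const { EventEmitter } = require('events');

// In-process stand-in for the event stream.
const stream = new EventEmitter();

// Aggregate service: builds a customer profile view from two domains'
// events, keeping only the fields its consumers need.
const customerProfiles = new Map(); // customerId -> { name, points }

stream.on('customer_created', ({ id, name }) => {
  customerProfiles.set(id, { name, points: 0 });
});

// Hypothetical loyalty-domain event carrying points awarded to a customer.
stream.on('status_points_added', ({ customerId, points }) => {
  const profile = customerProfiles.get(customerId);
  if (profile) profile.points += points; // ignore events for unknown customers
});

// Exposed to consumers (e.g. behind an HTTP endpoint in a real service).
function getProfile(id) {
  return customerProfiles.get(id);
}

stream.emit('customer_created', { id: 'c1', name: 'Ada' });
stream.emit('status_points_added', { customerId: 'c1', points: 100 });
stream.emit('status_points_added', { customerId: 'c1', points: 20 });
console.log(getProfile('c1')); // { name: 'Ada', points: 120 }
```

The view is shaped for its consumers rather than mirroring either upstream model, and it can be rebuilt at any time by replaying the relevant events.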

Service collaboration through events leads to a much more scalable architecture, with simpler and better-tailored models, which reduces the complexity of your digital assets and improves your ability to collect data for analytics purposes.

1. Martin Fowler, Contract Testing; Ian Robinson, Consumer-Driven Contracts (Addison-Wesley, 2011), p. 250

2. Sam Newman, Building Microservices (O’Reilly Media, 2015), Chapter 4: “Orchestration vs. Choreography”, p. 46

3. All code samples in this article can be found at https://github.com/jdamore/jspubsub

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.