Introduction

Event-driven microservices are popular today. They have been widely adopted for a number of reasons, one being that they promote loose coupling.

But there are also good reasons to use request/response-based communication. For instance, perhaps you are in the process of modernizing your systems and some of them have been migrated to an event-driven architecture while others haven’t been migrated at all. Or perhaps you use a third-party SaaS solution that offers its services via REST APIs over HTTP. In these scenarios it’s not uncommon to integrate event-driven microservices with request/response APIs. This results in new challenges because of the tight coupling that the integration introduces.

In this series of blog posts, we would like to share four lessons we've learned from integrating event-driven microservices with request/response APIs:

Implement idempotent event processing (covered in this post)

Decouple event retrieval from event processing (explored in part two)

Use a circuit breaker to pause event retrieval (covered in part three)

Rate limit event processing (covered in part four)

The challenge: integrating event-driven microservices with request/response APIs

To understand why we should implement idempotent event processing, we first need to take a closer look at what integrating event-driven microservices with request/response APIs involves.

Event-driven communication is an indirect communication style where microservices communicate with each other by producing and consuming events and by exchanging them through a middleware like AWS SQS. Event-driven microservices often implement an event loop to continuously retrieve events from the middleware and process them (this is sometimes referred to as a polling consumer). This is illustrated in the code sample below:

    void eventLoop() {
        // Poll the middleware continuously and process each retrieved event in turn.
        while (true) {
            List<Event> events = retrieveEvents();
            for (Event event : events) {
                processEvent(event);
            }
        }
    }

Listing 1: Simplified Event Loop

Integrating an event-driven microservice with a request/response-based API effectively means sending requests to APIs from within an event loop while the event is being processed. After sending a request to an API, the processing of the event is blocked until the response is received. Only then will the event loop continue with the remaining business logic. This is illustrated in the code sample below:

    void processEvent(Event event) {
        /* ... */
        Request request = createRequest(event);
        // Blocks until the API has responded.
        Response response = sendRequestAndWaitForResponse(request);
        moreBusinessLogic(event, response);
        /* ... */
    }

Listing 2: Simplified Event Processing with an Integration of a Request/Response API

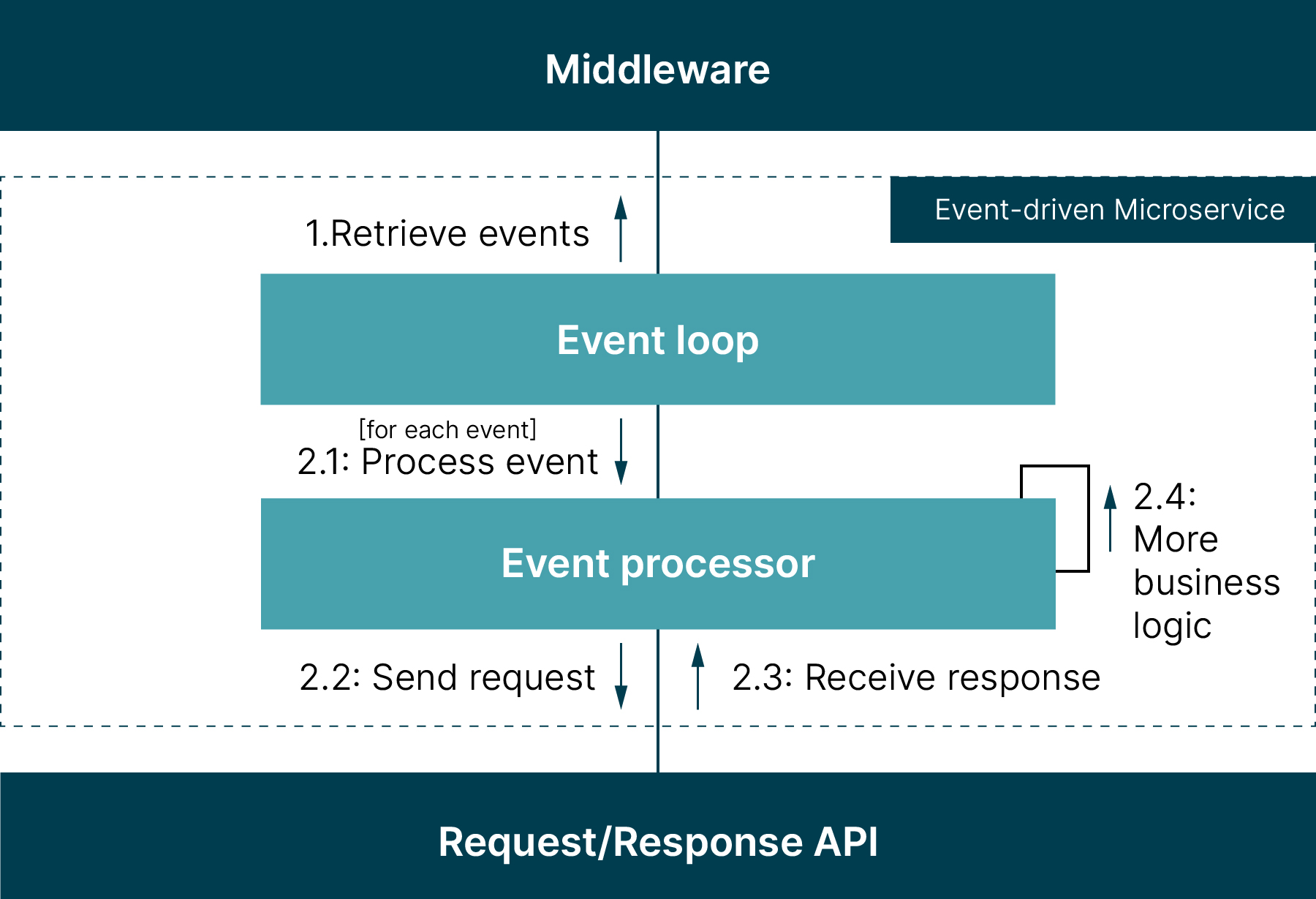

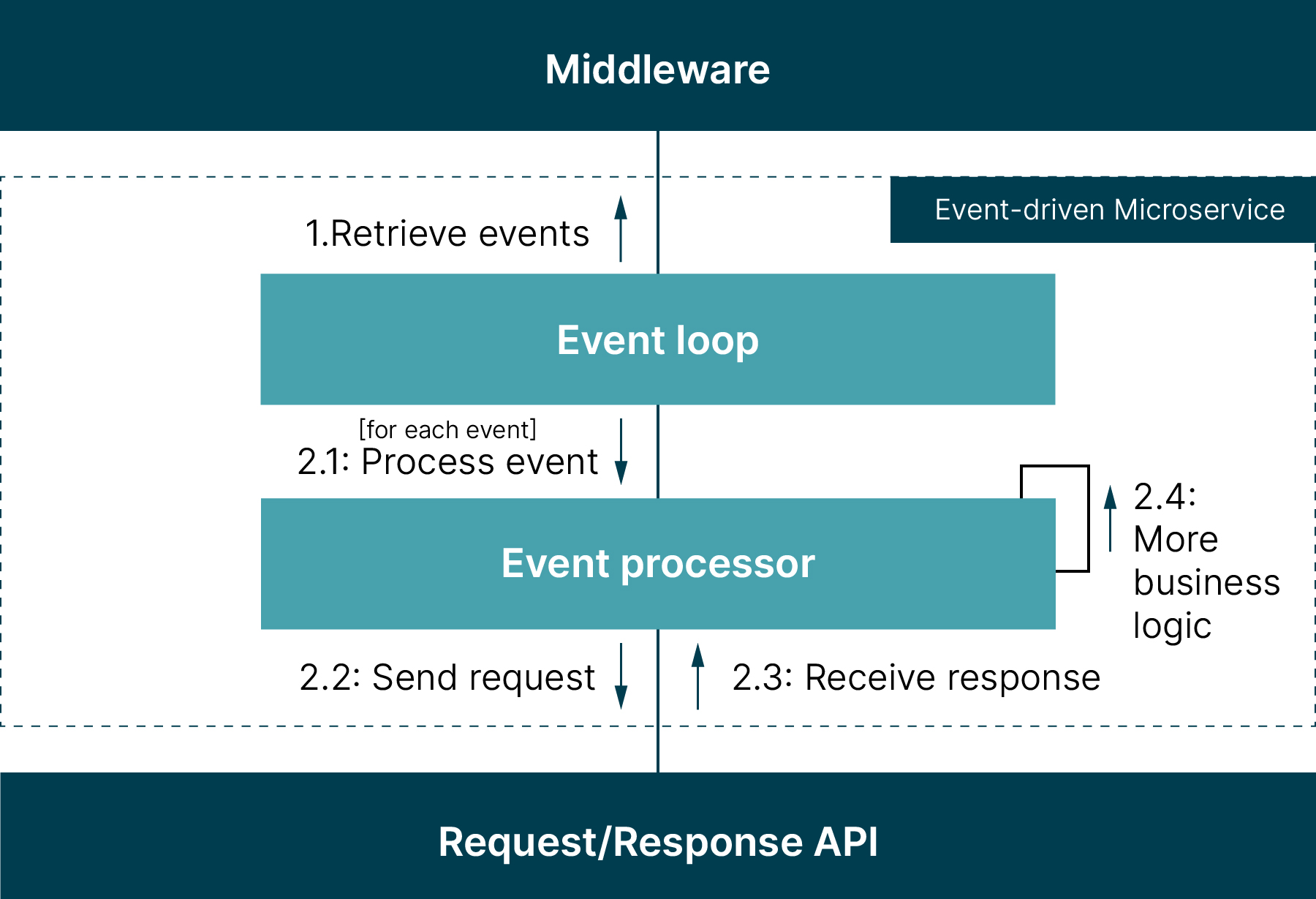

The image below provides another way of looking at this. It depicts the detailed interactions between the middleware, the event-driven microservice and the request/response API.

Figure 1: Interactions between Middleware, Event-driven Microservice and Request/Response API

Integration is challenging because the two communication styles differ: event-driven communication is asynchronous, whereas request/response-based communication is synchronous.

The event-driven microservice retrieves events from the middleware. It processes them in a way that’s decoupled from the microservices that produce the events. It’s also independent of the pace at which the producing microservices emit new events. This means that even if the consuming microservice is temporarily unavailable, the producing microservices can continue to emit events, with the middleware buffering them so they can be retrieved later.
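The buffering behaviour can be illustrated with a minimal Java sketch. It assumes an in-memory `LinkedBlockingQueue` as a stand-in for the middleware; the class `BufferingMiddlewareSketch` and its method names are hypothetical and do not correspond to any real middleware API.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical stand-in for a middleware: events are buffered in memory
// until a consumer retrieves them.
class BufferingMiddlewareSketch {
    private final BlockingQueue<String> buffer = new LinkedBlockingQueue<>();

    // Called by the producing microservice; it never waits for a consumer.
    void produce(String event) {
        buffer.add(event);
    }

    // Called by the consuming microservice whenever it is available again.
    // Returns all buffered events in the order they were produced.
    List<String> retrieveEvents() {
        List<String> events = new ArrayList<>();
        buffer.drainTo(events);
        return events;
    }
}
```

In this sketch the producer can call `produce` any number of times while no consumer is running; the events simply wait in the buffer until `retrieveEvents` is called.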

In contrast, request/response-based communication is direct communication between two microservices. If an upstream microservice sends a request to a request/response API of a downstream microservice, it blocks its own processing and waits until it has received a response from the downstream microservice. This tight coupling means both microservices need to be available. The processing of the downstream microservice is also impacted by the pace at which the upstream microservices send requests.
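A small sketch can make this coupling concrete. All names here (`DownstreamApi`, `UpstreamService`, `DownstreamUnavailableException`) are hypothetical, and a plain method call stands in for an HTTP request; the point is only that the upstream call cannot complete while the downstream service is unavailable.

```java
// Hypothetical exception signalling that the downstream service cannot be reached.
class DownstreamUnavailableException extends RuntimeException {
    DownstreamUnavailableException(String message) {
        super(message);
    }
}

// Hypothetical downstream request/response API.
class DownstreamApi {
    private boolean available = true;

    void setAvailable(boolean available) {
        this.available = available;
    }

    String handle(String request) {
        if (!available) {
            throw new DownstreamUnavailableException("downstream is unavailable");
        }
        return "response to " + request;
    }
}

// Hypothetical upstream microservice that depends directly on the downstream API.
class UpstreamService {
    private final DownstreamApi downstream;

    UpstreamService(DownstreamApi downstream) {
        this.downstream = downstream;
    }

    String process(String input) {
        // The caller waits here until the downstream API returns or fails.
        String response = downstream.handle(input);
        return input + " -> " + response;
    }
}
```

Unlike the buffered case, nothing absorbs the outage here: a failure or slowdown in `DownstreamApi` surfaces immediately in `UpstreamService.process`.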

Lesson learned #1: implement idempotent event processing

When working with event-driven microservices, retries are inevitable; some events will be consumed multiple times. This is because middleware typically provides delivery guarantees such as at-least-once delivery (as AWS SQS does) and redelivers events if processing fails or takes too long (e.g. when the SQS visibility timeout expires).
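To see why a visibility timeout leads to duplicates, consider the following simplified model. It is not the SQS API: `VisibilityTimeoutQueue` is a hypothetical in-memory class, time is passed in explicitly instead of being read from a clock, and message bodies are assumed to be unique.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Optional;

// Simplified, hypothetical model of a queue with a visibility timeout.
class VisibilityTimeoutQueue {
    private final long visibilityTimeoutMillis;
    // Maps each message to the time from which it is visible to consumers.
    private final Map<String, Long> visibleFrom = new LinkedHashMap<>();

    VisibilityTimeoutQueue(long visibilityTimeoutMillis) {
        this.visibilityTimeoutMillis = visibilityTimeoutMillis;
    }

    void send(String message) {
        visibleFrom.put(message, 0L); // visible immediately
    }

    // Returns the first visible message and hides it for the timeout period.
    Optional<String> receive(long nowMillis) {
        for (Map.Entry<String, Long> entry : visibleFrom.entrySet()) {
            if (entry.getValue() <= nowMillis) {
                entry.setValue(nowMillis + visibilityTimeoutMillis);
                return Optional.of(entry.getKey());
            }
        }
        return Optional.empty();
    }

    // Acknowledges successful processing; the message is never delivered again.
    void delete(String message) {
        visibleFrom.remove(message);
    }
}
```

If a consumer receives a message but does not call `delete` before the timeout elapses, for example because processing is slow or has failed, the next `receive` call hands out the same message again. That second delivery is the duplicate the consumer has to tolerate.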

This is why it’s important to account for retries in event processing by making the processing idempotent. In other words, processing an event multiple times should have the same result as processing it once. Duplicates of events that have already been successfully processed can be ignored by implementing the idempotent receiver pattern; this is done by giving events unique identifiers (idempotency keys) that are stored and checked during event processing.
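A minimal sketch of the idempotent receiver pattern might look as follows. It rests on several assumptions: the `Event` record and its `id` field (used as the idempotency key) are hypothetical, processed keys are kept in memory rather than in a durable store, and events are handled by a single consumer thread so the check and the later insert do not race.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical event type: the id doubles as the idempotency key.
record Event(String id, String payload) {}

class IdempotentReceiver {
    // In-memory store of processed keys; a real service would need a durable store.
    private final Set<String> processedKeys = ConcurrentHashMap.newKeySet();
    // Counts how often the business logic actually ran (for illustration only).
    private final AtomicInteger sideEffects = new AtomicInteger();

    // Returns true if the event was processed, false if it was skipped as a duplicate.
    boolean processEvent(Event event) {
        if (processedKeys.contains(event.id())) {
            return false; // already processed successfully: ignore the duplicate
        }
        applyBusinessLogic(event);
        // Record the key only after success, so failed events can be retried.
        processedKeys.add(event.id());
        return true;
    }

    private void applyBusinessLogic(Event event) {
        sideEffects.incrementAndGet(); // placeholder for the real work
    }

    int sideEffectCount() {
        return sideEffects.get();
    }
}
```

Recording the key after the business logic means a crash between the two steps still leads to a retry, which is why the downstream calls made by the business logic also need to tolerate duplicates.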

In our experience, however, it isn’t enough to detect and ignore duplicates of successfully processed events. The request/response APIs should also be idempotent so they can handle duplicate requests. Think about a scenario where a request gets lost because of an unreliable network or where a response never reaches the upstream microservice. In both cases the upstream microservice will send the request again, and if the API isn’t idempotent, the repeated request may fail or produce incorrect results.

Therefore, the best solution is to make request/response APIs idempotent. Some API operations like GET or PUT can be easily made idempotent, while other operations like POST require idempotency keys and implementation patterns like the idempotent receiver. If you can't influence the design of an API to make it idempotent, you should at least account for its non-idempotent behavior in the event processing to avoid failures and incorrect results. How you do that, however, depends largely on the design of the API.
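On the API side, an idempotent POST could be sketched roughly like this. The class `IdempotentCreateApi`, the `create` method and the way the idempotency key is passed are all assumptions made for illustration; a real API would typically receive the key in a request header or field and persist the mapping durably.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical server-side handler for a create (POST) operation.
class IdempotentCreateApi {
    // Remembers which resource was created for which idempotency key.
    private final Map<String, Long> resourceIdByKey = new ConcurrentHashMap<>();
    private final Map<Long, String> resources = new ConcurrentHashMap<>();
    private final AtomicLong nextId = new AtomicLong(1);

    // Creates a resource once per idempotency key; a repeated key returns
    // the identifier of the resource created by the original request.
    long create(String idempotencyKey, String body) {
        return resourceIdByKey.computeIfAbsent(idempotencyKey, key -> {
            long id = nextId.getAndIncrement();
            resources.put(id, body);
            return id;
        });
    }

    int resourceCount() {
        return resources.size();
    }
}
```

With such an API, the event-driven microservice can derive the idempotency key from the event identifier and simply resend the request on every retry, without needing special handling for duplicates.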

The example below clarifies this point. The code integrates a request/response API that responds to duplicate requests with errors. In this context, what matters is distinguishing duplication errors from other kinds of errors.

Consider a POST operation that fails with 422 UNPROCESSABLE_ENTITY errors for duplicate requests, stating that the resource already exists. Conveniently, the error responses contain further information, e.g. the resource identifier, that allows us to get the existing resource and proceed with the business logic.

    void processEvent(Event event) {
        Response response;
        try {
            // Try to create the resource first.
            response = sendRequestAndWaitForResponse(create_Post_Request(event));
        } catch (UnprocessableEntityException ex) {
            // Duplicate request: the resource already exists, so fetch it instead.
            var resourceIdentifier = extractResourceIdentifier(ex.errorDetails());
            response = sendRequestAndWaitForResponse(create_Get_Request(resourceIdentifier));
        }
        moreBusinessLogic(event, response);
        /* ... */
    }

Listing 3: Get resources when creation of new resources fails

Conclusion

The above discussion shows that idempotent request/response APIs are easier to integrate. Nevertheless, non-idempotent request/response APIs can also be integrated in an idempotent manner. How you go about integrating a non-idempotent API ultimately depends on the context and the API design.

Regardless of the API design, it’s important to account for duplicate events and duplicate requests by providing sensible recovery options. In the next post in this series, we’ll cover the next integration challenge and explore an issue related to event retrieval.

You can read the rest of the series here:

Retries are inevitable, and event processing, including the request/response APIs, should be idempotent (Part One).

The response times of request/response APIs impact the performance of event-driven microservices. If response times fluctuate significantly, it makes sense to decouple event retrieval from event processing (Part Two).

Circuit breakers can improve the integration with request/response APIs as they handle periods of unavailability (Part Three).

Request/response APIs may have usage limits, and rate limiting helps you adhere to them (Part Four).

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.