Why AI testing matters

As businesses strive to gain an edge over their competition, many are embracing the idea of infusing core applications with AI capabilities. It’s an alluring idea, but here’s the kicker: few organizations are equipped to manage the complexity this brings.

This point has been brought sharply into focus by Forrester Research in its latest report “It’s Time To Get Really Serious About Testing Your AI”. While AI is increasingly pervasive in enterprise software, Forrester warns that “little consideration is given to testing the resulting AI-infused applications.”

Why does that matter? After all, aren’t these intelligent systems smart enough to correct themselves? Frankly, the answer is “no”. And while Forrester correctly highlights the need for enterprises to introduce more comprehensive testing programs for their AI initiatives, at Thoughtworks — one of the vendors interviewed for this report — we’d go further still.

Effective testing can help unlock more value from AI

We’ve worked with many clients over recent years to maximize their return from AI solutions and learned why testing is an essential part of being able to confidently deploy these systems into production — and when needed, evolve them.

In the two-part report, Forrester analysts explore how to test AI-infused applications (AIIAs) and the technology stack needed for automated and continuous testing of AIIAs. In Part Two, they note:

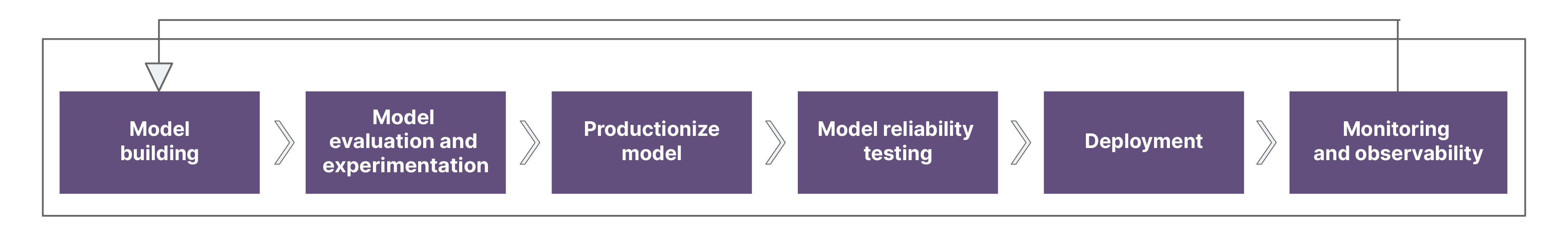

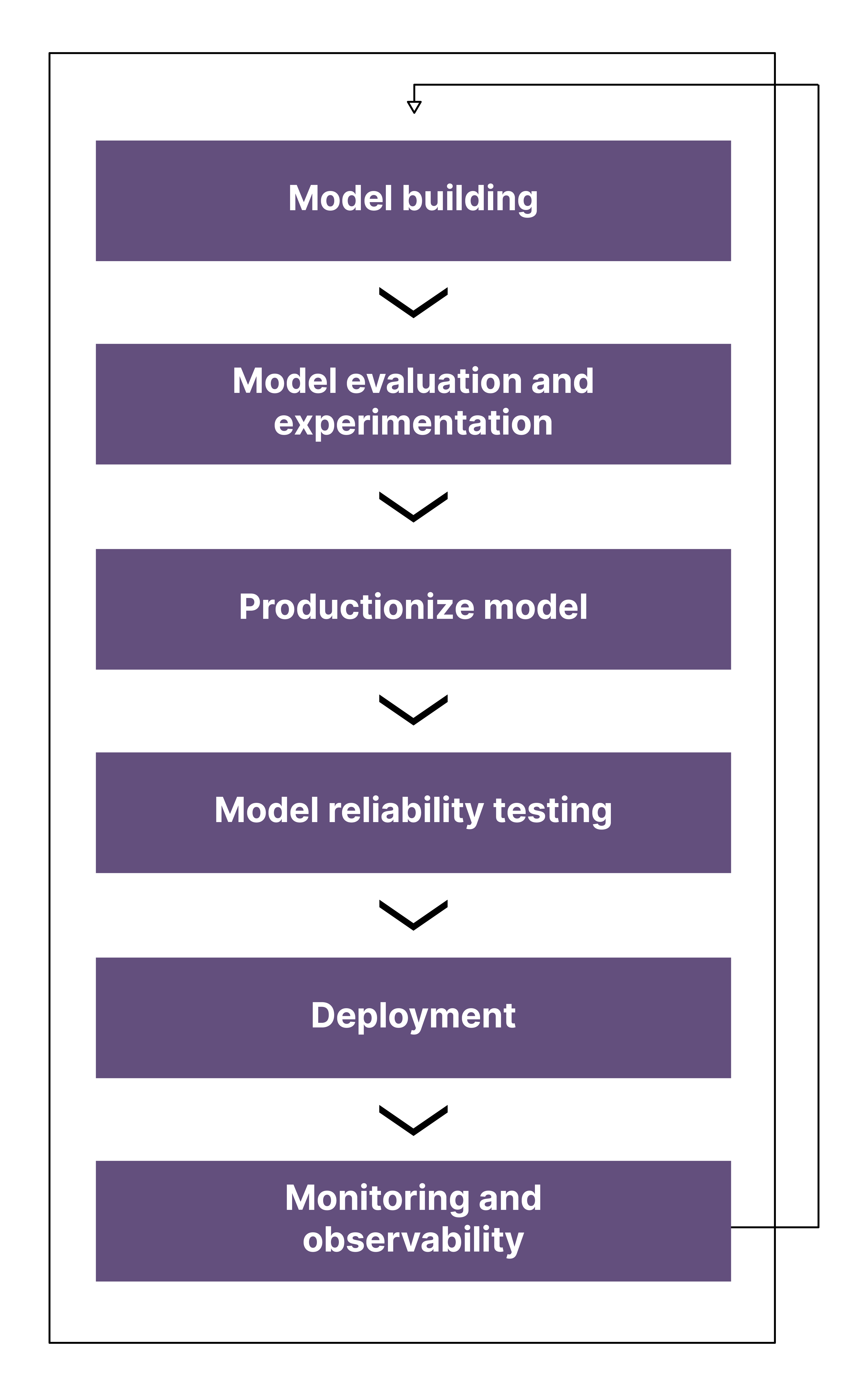

There is no silver bullet for an integrated, automated development, delivery, and testing solution for AIIAs — yet. However, some early solutions are worth piloting: Thoughtworks, one of the founding companies of continuous delivery, has pulled together a continuous delivery process and toolchain called CD4ML, which supports the process needed to build, test, and deploy AIIAs. CD4ML enables you to build data pipelines for sourcing the training and testing data needed by the models, model pipelines, and code pipelines including testing and test automation support.

The good news is that, as with continuous delivery, many elements of the testing strategy you need for AI models can be borrowed from good practices elsewhere: for instance, driving data quality standards across all systems, or using a test pyramid as the foundation for testing in the software development lifecycle.

Spot the signs your AI testing is inadequate

As AI becomes increasingly pervasive in the enterprise tech stack, the risks of inadequate testing grow with it. Generative AI has democratized the technology further and, in so doing, widened the blast radius for potential problems.

And the number of high-profile failures is growing. But you don’t need to wait until you’ve become headline news: there are warning signs that your current AI testing regime needs a rethink.

Maybe you’ve detected a noticeable decline in performance; maybe you’re not seeing the value you once did from your systems. Maybe you’ve rushed headlong into production without evaluating whether your training data matches the scenarios you'll encounter in the real world. Other key signals include: production incidents; constant firefighting; excessive manual testing before anything can be released to production, reducing speed to market; and an inability to refactor safely and often, thereby compounding these issues.

Whatever the situation you’re in, Part Two of the Forrester report has provided guiding principles for testing AI. These include:

Unit-test during development

Test in production

Performance-test models and the end-to-end experience

Unit- and functional-test for bias

Test integrations

We think that’s useful advice, highlighting the need for a diversity of test types, which Forrester notes in Part Two of their report also means coordinating different functions and roles. But before simply asking ‘How will we test this?’, you might want to consider two other questions: Should we do this? And how should we do this? Testing is not something you do to a solution; rather, testability is a key requirement when deciding which problems to go after and how to design solutions for them. Testing also has a cultural dimension: many organizations pay lip service to testing, which makes it difficult to implement the changes needed to prioritize testing and drive real business impact.

Eliminating the unknowns of AI testing

As Forrester points out in Part Two of the report, the use of deep learning, reinforcement learning algorithms and large language models results in opaque and tightly coupled systems that are difficult to test.

These types of AI systems are designed to get smarter over time. A very simple example would be getting better at identifying images that contain dogs in a large, random data set of images. By the time the AI model achieves human-level performance, it may be unclear how the model achieved those results, or you may not always get the same results. Such AI systems are said to lack ‘explainability’ and ‘deterministic behavior’.

But we can still apply proven testing practices, even allowing for issues of explainability or deterministic behavior. A system designed to be testable enables you to identify all the sources of input variation — such as random seeds, prompt construction, LLM temperature and sampling parameters — and the more of these you can hold constant in a test environment, the more you should expect deterministic behavior. In applications for which explainability is a requirement, choose models that are interpretable by design (such as decision trees). For more opaque models, testing is still possible and, in fact, requires an especially systematic approach in order to address edge case failures.
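As a minimal sketch of holding sources of input variation constant, the Python below pins the random seed and the sampling temperature for a toy sampler. The functions `sample_label` and `run_model` are hypothetical stand-ins invented for illustration, not part of any real model API; a real system would pin whatever seeds and sampling parameters its own stack exposes.

```python
import math
import random


def sample_label(scores, temperature, rng):
    """Sample an index from softmax(scores / temperature) using the given RNG."""
    if temperature <= 0:
        # Treat zero temperature as greedy decoding: always pick the top score.
        return max(range(len(scores)), key=lambda i: scores[i])
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    return rng.choices(range(len(scores)), weights=weights, k=1)[0]


def run_model(scores, temperature, seed, n=20):
    """Draw n samples with the only source of randomness pinned to `seed`."""
    rng = random.Random(seed)
    return [sample_label(scores, temperature, rng) for _ in range(n)]


scores = [0.1, 2.0, 0.5]  # illustrative values only

# Same seed and same parameters: the sampled sequence repeats exactly.
assert run_model(scores, 1.0, seed=7) == run_model(scores, 1.0, seed=7)

# Zero temperature removes sampling entirely, so the seed no longer matters.
assert run_model(scores, 0, seed=1) == run_model(scores, 0, seed=2)
```

The design choice here is to pass the random number generator in explicitly rather than rely on global state, which makes every source of variation visible in the function signature and therefore controllable in a test.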

You might start by creating a “model card” that records the fundamental details of how an AI model works, what it’s been tested on, and whether it learns online. This type of foundational documentation raises awareness and prompts critical evaluation of what these systems are doing: what they’ve learned and how, and what prior human decisions guide their behavior. It should also be a ‘living’ artifact: not one created only at the design stage, but one that evolves over time.
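One way to keep a model card ‘living’ is to store it as structured data next to the model and update it whenever the model is re-tested. The sketch below is an assumption about what such a record could look like; the `ModelCard` class, its field names and the example values are all hypothetical and do not follow any particular published schema.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class ModelCard:
    """Hypothetical minimal model card; field names are illustrative."""
    name: str
    how_it_works: str
    learns_online: bool
    tested_on: list = field(default_factory=list)
    human_decisions: list = field(default_factory=list)
    last_updated: date = field(default_factory=date.today)

    def record_test(self, dataset, when=None):
        """Log a dataset the model was evaluated on and refresh the date."""
        self.tested_on.append(dataset)
        self.last_updated = when or date.today()


card = ModelCard(
    name="dog-detector",  # made-up example model
    how_it_works="Image classifier trained on labelled photos",
    learns_online=False,
    human_decisions=["Labels were assigned by human annotators"],
)
card.record_test("holdout-photos", when=date(2024, 1, 2))
```

Because the card is plain data, it can be versioned with the model and checked in a pipeline, for example by failing a release when `tested_on` is empty.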

Now you can test that your AI algorithm is implemented correctly in known cases and also run simulations, sampling the space of inputs expected in production. You won’t get a guarantee that it works in all cases, but you will know if it’s outright wrong and get a sense of the types of failures to expect. You can also test the reproducibility of results and their sensitivity to parameters.
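The three kinds of check described above (known cases, simulation over expected inputs, and reproducibility and sensitivity) might look roughly like this in Python. Everything here is an illustrative assumption: `predict_risk` is a trivial stand-in for a real model, and the uniform range of 0 to 200 is a made-up proxy for the space of production inputs.

```python
import random


def predict_risk(amount, threshold=100.0):
    """Hypothetical stand-in model: flags an amount above the threshold."""
    return amount > threshold


def simulate(model, n, seed, **params):
    """Sample n inputs from an assumed production range and collect predictions."""
    rng = random.Random(seed)
    inputs = [rng.uniform(0.0, 200.0) for _ in range(n)]
    return inputs, [model(x, **params) for x in inputs]


def flip_rate(model, n, seed, base, perturbed):
    """Fraction of sampled inputs whose prediction differs between two settings."""
    _, a = simulate(model, n, seed, **base)
    _, b = simulate(model, n, seed, **perturbed)
    return sum(x != y for x, y in zip(a, b)) / n


# Known cases: the implementation behaves as specified on hand-picked inputs.
assert predict_risk(150.0) is True
assert predict_risk(50.0) is False

# Reproducibility: the same seed yields the same simulated run.
assert simulate(predict_risk, 100, seed=42) == simulate(predict_risk, 100, seed=42)

# Sensitivity: how many predictions change when a parameter is nudged.
rate = flip_rate(predict_risk, 1000, 42, {"threshold": 100.0}, {"threshold": 110.0})
```

A flip rate that is large for a small parameter change is a signal that the model's behavior is fragile in that region and deserves more targeted tests.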

Investing your AI testing effort wisely

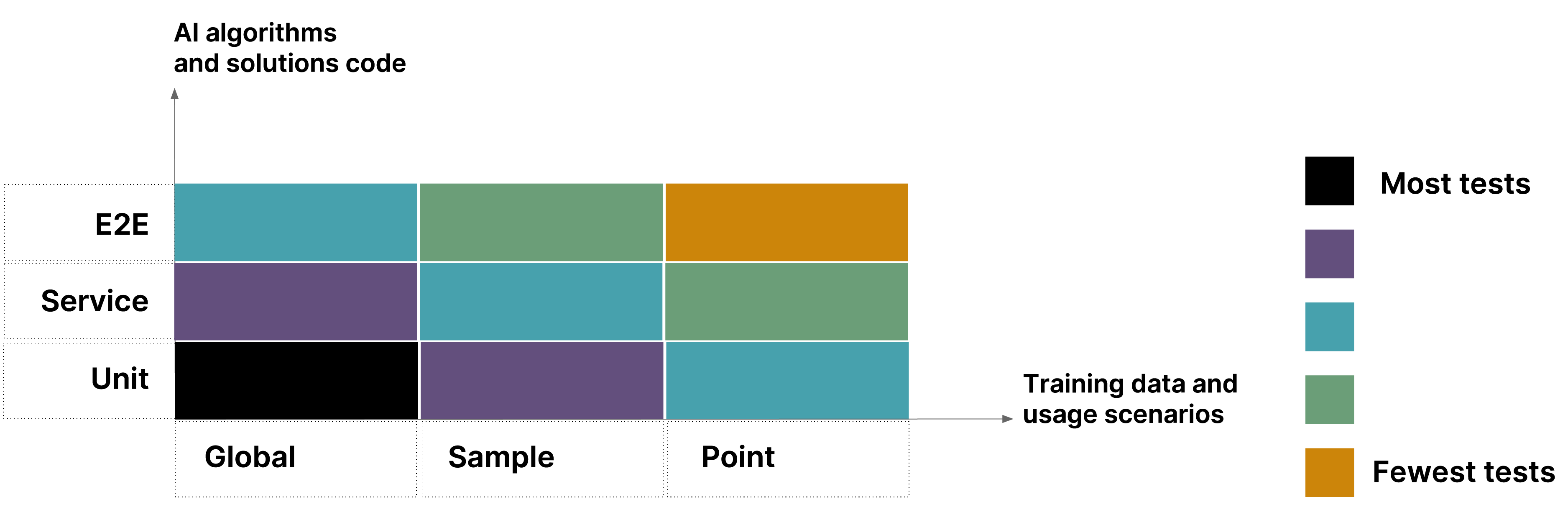

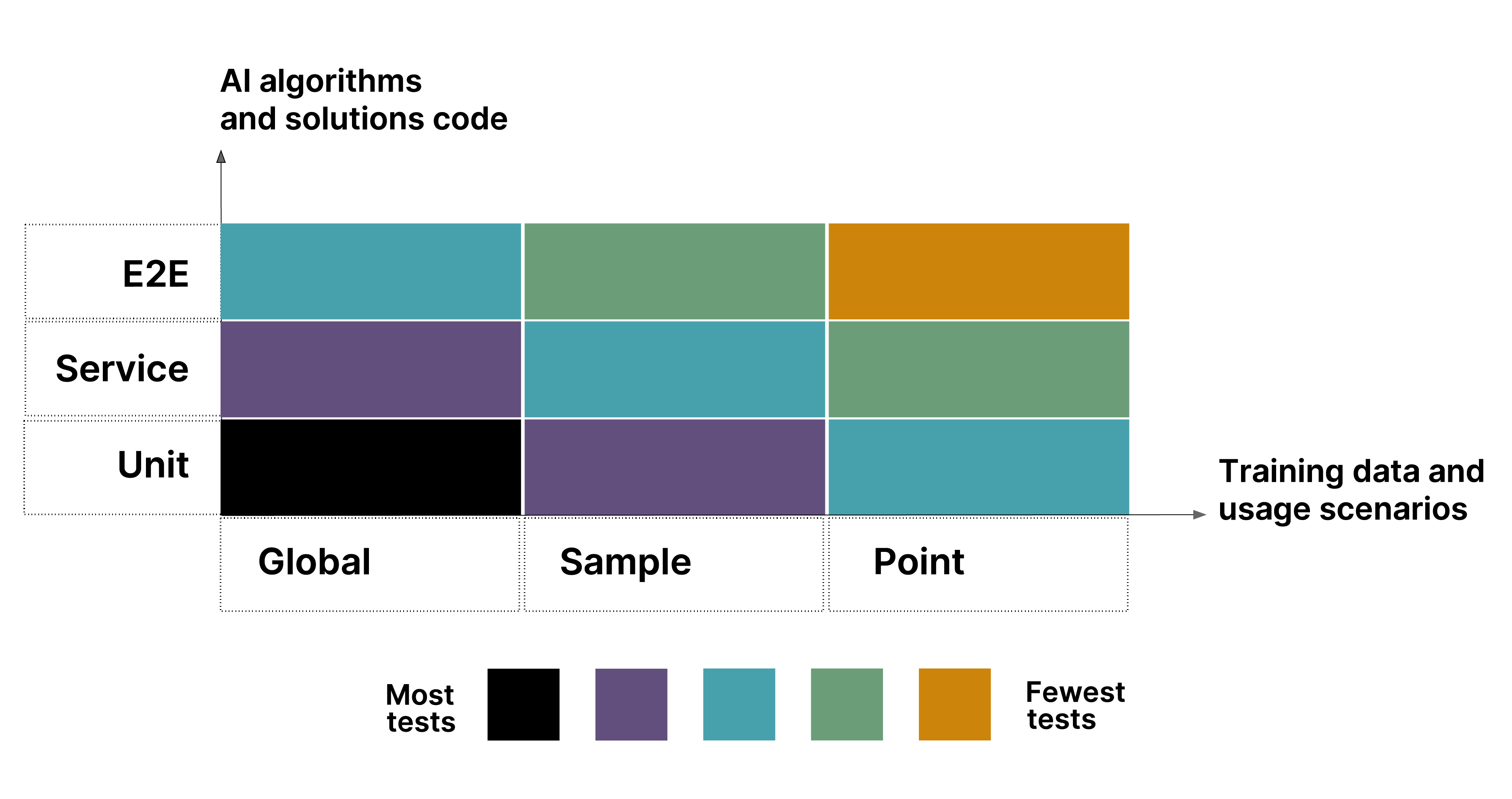

To establish the level and breadth of testing that’s appropriate for your use cases, we think it’s useful to frame this decision in terms of the Test Pyramid: a metaphor that tells us to group tests into buckets of different granularity. You’ll also get an idea of how many tests you should have in each of these groups, so you can establish what tests are appropriate to, say, guard against biased data.
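At the base of the pyramid, a guard against biased outcomes can be as small as a unit test on a fairness metric. The sketch below uses the gap in positive-prediction rates between groups, which is only one of several possible metrics and is chosen here as an assumption; the function names, sample data and 0.2 tolerance are all hypothetical.

```python
def selection_rates(predictions, groups):
    """Positive-prediction rate for each group label."""
    totals, positives = {}, {}
    for pred, grp in zip(predictions, groups):
        totals[grp] = totals.get(grp, 0) + 1
        positives[grp] = positives.get(grp, 0) + (1 if pred else 0)
    return {g: positives[g] / totals[g] for g in totals}


def parity_gap(predictions, groups):
    """Largest difference in positive-prediction rate between any two groups."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Illustrative data: group "a" is selected 3 times in 4, group "b" once in 4.
preds = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

MAX_GAP = 0.2  # hypothetical tolerance; the right value depends on the use case
needs_review = parity_gap(preds, groups) > MAX_GAP
```

Checks like this are cheap enough to run on every build, leaving the slower, coarser-grained tests higher up the pyramid to cover end-to-end behavior.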

That idea of what constitutes ‘good’ data is the final piece of the testing puzzle. At Thoughtworks, we build solid data foundations for AI-enabled applications. Having a good understanding of the data going in allows you to more effectively and efficiently test the AI and isolate issues.

For many of the clients we’ve helped, the first step towards leveraging AI successfully is driving data quality standards by adopting a data product mindset. This reduces time to market for AI initiatives by building quality into the data at the source, and reduces risk through appropriate data governance.
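Building quality in at the source can start with simple automated checks that run before data reaches a model. The following is a minimal sketch under stated assumptions: the `check_quality` function, the record fields and the allowed range are all invented for illustration, and a real data product would define its own rules.

```python
def check_quality(records, required, ranges):
    """Return (row_index, problem) tuples for records that break the rules."""
    problems = []
    seen = set()
    for i, rec in enumerate(records):
        # Rule 1: required fields must be present and not None.
        for name in required:
            if rec.get(name) is None:
                problems.append((i, f"missing {name}"))
        # Rule 2: numeric fields must fall inside their allowed range.
        for name, (lo, hi) in ranges.items():
            value = rec.get(name)
            if value is not None and not (lo <= value <= hi):
                problems.append((i, f"{name} out of range"))
        # Rule 3: exact duplicates of an earlier record are flagged.
        key = tuple(sorted(rec.items()))
        if key in seen:
            problems.append((i, "duplicate record"))
        seen.add(key)
    return problems


records = [
    {"id": 1, "age": 34},
    {"id": 2, "age": None},
    {"id": 3, "age": 210},
    {"id": 1, "age": 34},
]
issues = check_quality(records, required=["id", "age"], ranges={"age": (0, 120)})
```

Reporting the row index alongside each problem makes it easier to isolate whether a downstream failure comes from the data or from the model.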

Neither the Test Pyramid nor the application of product thinking is new to most enterprises. We think that’s a strength. Yes, the complexity and performance of today’s AI systems can be daunting, but given their importance, we think the smart application of tried and tested ideas is the best basis for a practical testing regime. And that, ultimately, will give business leaders greater confidence that they can leverage AI technology effectively.