Reliability under abnormal conditions — Part Two

Testing in production

Given that setting up and maintaining fully production-like environments can get ever more costly and problematic, there are various approaches for using production to gain confidence in how your evolving system will respond under load.

Canary releases

A standard approach, pioneered by the likes of Google and Facebook, for using real production traffic and infrastructure is canary releasing. By testing changes under a very low percentage of traffic, which is then incrementally increased as confidence grows, many categories of performance and usability problems can be caught quickly.

Canary releasing has a few prerequisites and some limitations:

- It requires reasonable levels of traffic to be able to segment in a manner that provides useful feedback. Ideally, you can start with safe internal “dogfood” users

- It demands solid levels of operational maturity so that issues can be spotted and rolled back quickly

- It only really gives you feedback on standard traffic patterns (those seen during the canary release period) and gives you less confidence about how the updated system will operate under abnormal traffic shapes or volumes driven by promotions or seasonal shifts
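The incremental traffic split described above can be sketched in a few lines. This is a minimal illustration, not how any particular router or service mesh implements it: the names `bucket` and `routes_to_canary`, the 10,000-bucket granularity, and the idea of passing dogfood users as a set are all assumptions made for the example. Hashing the user ID keeps each user's assignment stable, so raising the percentage only ever adds users to the canary.

```python
import hashlib
from typing import AbstractSet

BUCKETS = 10_000  # hypothetical granularity: one bucket = 0.01% of users


def bucket(user_id: str) -> int:
    """Map a user ID to a stable bucket in the range [0, BUCKETS)."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % BUCKETS


def routes_to_canary(
    user_id: str,
    canary_percent: float,
    dogfood_users: AbstractSet[str] = frozenset(),
) -> bool:
    """Decide whether a request from this user should hit the canary.

    Internal "dogfood" users always see the canary; everyone else is
    included only if their bucket falls under the current percentage.
    """
    if user_id in dogfood_users:
        return True
    return bucket(user_id) < canary_percent / 100 * BUCKETS


if __name__ == "__main__":
    staff = frozenset({"internal-alice"})
    for percent in (0.0, 1.0, 5.0, 25.0):
        users = [f"user-{i}" for i in range(1000)]
        hits = sum(routes_to_canary(u, percent, staff) for u in users)
        print(f"{percent:>5}% target -> {hits} of {len(users)} users on canary")
```

Starting at 0% with only dogfood users and then stepping the percentage up mirrors the "increase as confidence grows" approach; rolling back is a matter of setting the percentage to zero again.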

Load testing in production

If your production environment is so large or expensive to provision, or your data so hard to recreate, that realistic pre-production testing is impractical, first try to reduce the cost of recreating production. Failing that, there are several approaches to load testing in production. One is to run “synthetic transactions” in large volume to “hammer test” components of your system and see how they respond. Another is to store up asynchronous load and release it in a large batch to simulate heavier traffic. Both require reasonable confidence in your operational ability to manage these surges in load, but they can be a useful tactic for understanding how your system will respond under serious load.

Example 1:

We would hold production traffic in our queues for a certain amount of time, typically 6-8 hours (enough to create the necessary queue depth for a load test), release it, and then monitor application behavior. We built a business impact dashboard to monitor business-facing metrics, and we took the utmost care not to affect business SLAs when doing so. For example, for our order routing system, we would not hold expedited shipping orders, as that would affect the shipping SLAs. We also had to be aware of the downstream implications of the test: essentially, we were load testing the downstream systems as well. In our case, the warehouses would receive a lot of shipment requests at the same time. This would put pressure not only on their software systems but also on their human systems, such as the warehouse workforce.
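The hold-and-release tactic in this example can be sketched as a small in-memory model. Everything here is hypothetical and for illustration only: the `Order` and `HoldingQueue` names, the `expedited` flag, and the `deliver` callback stand in for whatever message broker and routing logic a real system would use.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Deque, List


@dataclass(frozen=True)
class Order:
    order_id: str
    expedited: bool = False


class HoldingQueue:
    """Holds non-expedited orders during a test window, then releases them in one burst."""

    def __init__(self, deliver: Callable[[Order], None]) -> None:
        self._deliver = deliver
        self._held: Deque[Order] = deque()
        self.holding = False

    @property
    def depth(self) -> int:
        """Number of orders currently held back."""
        return len(self._held)

    def start_hold(self) -> None:
        self.holding = True

    def submit(self, order: Order) -> None:
        # Expedited orders bypass the hold so shipping SLAs are not affected.
        if self.holding and not order.expedited:
            self._held.append(order)
        else:
            self._deliver(order)

    def release(self) -> int:
        """Stop holding and deliver everything held, in arrival order."""
        self.holding = False
        released = 0
        while self._held:
            self._deliver(self._held.popleft())
            released += 1
        return released


if __name__ == "__main__":
    delivered: List[str] = []
    queue = HoldingQueue(lambda order: delivered.append(order.order_id))
    queue.start_hold()
    queue.submit(Order("standard-1"))
    queue.submit(Order("rush-1", expedited=True))
    queue.submit(Order("standard-2"))
    print("delivered during hold:", delivered, "| held:", queue.depth)
    print("released in burst:", queue.release())
```

The burst produced by `release` is what reaches the downstream systems, which is why the example above stresses watching business metrics and warning the warehouses before running such a test.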

Chaos engineering

In many ways, the gold standard for resilience testing is 'Chaos Engineering': an approach for verifying, through deliberate fault injection, that your system is resilient to the types of failures that occur in large distributed systems. The underlying concept is to randomly generate the types of failures you feel your system needs to be resilient to and then verify that your important SLOs aren’t impacted by them.

Obviously, embarking on this approach without high levels of operational maturity is fraught with danger, but there are techniques for progressively introducing types of failures and performing non-destructive dry-runs to prepare teams and systems.
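As a rough illustration of random fault injection with a non-destructive dry-run mode, consider the sketch below. It is not modeled on any specific chaos tool; `FaultInjector`, `InjectedFault`, and the `failure_rate` parameter are names invented for this example, and a real experiment would also need to check SLOs while faults are active.

```python
import random
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class InjectedFault(Exception):
    """Raised in place of the real call when a fault is injected."""


class FaultInjector:
    """Wraps calls to a dependency and randomly fails a fraction of them."""

    def __init__(
        self,
        failure_rate: float,
        dry_run: bool = True,
        rng: Optional[random.Random] = None,
    ) -> None:
        if not 0.0 <= failure_rate <= 1.0:
            raise ValueError("failure_rate must be between 0.0 and 1.0")
        self.failure_rate = failure_rate
        self.dry_run = dry_run
        self._rng = rng or random.Random()
        self.would_have_failed = 0  # faults only recorded (dry-run mode)
        self.injected = 0           # faults actually raised

    def call(self, operation: Callable[[], T]) -> T:
        if self._rng.random() < self.failure_rate:
            if self.dry_run:
                # Dry run: count the fault but let the real call proceed.
                self.would_have_failed += 1
            else:
                self.injected += 1
                raise InjectedFault("fault injected by chaos experiment")
        return operation()


if __name__ == "__main__":
    injector = FaultInjector(failure_rate=0.2, dry_run=True, rng=random.Random(7))
    for _ in range(100):
        injector.call(lambda: "ok")
    print("dry run, calls that would have failed:", injector.would_have_failed)
```

Running in dry-run mode first lets a team see how often and where faults would land before switching `dry_run` off, and the failure rate can be raised progressively from there.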

We strongly believe the right way to develop the required operational maturity is to exercise this type of approach from the early stages of system development and evolution so that the observability, monitoring, isolation, and processes for recovery are firmly set in place before a system becomes too large or complex for easy retrofitting.

Mitigating impact in production

Certain categories of problems are still going to make it to production, so focus on two dimensions of lowering the impact of issues:

- Minimize the breadth of impact of issues, mainly through architectural approaches

- Minimize time to recover through monitoring, observability, ops/dev partnership, CD practices

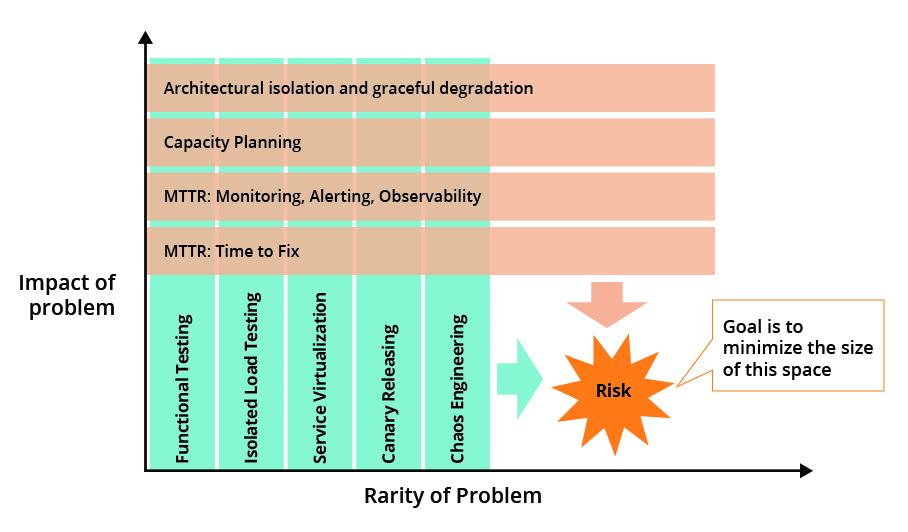

Figure 1: Minimizing impact of problems

Minimizing the blast radius (breadth of impact)

Minimizing the breadth of impact of a problem is largely an architectural concern. There are many patterns and techniques for adding resilience to systems, but most of the advice can be boiled down to having sensible slicing of components inside your system, having a Plan A and a Plan B for how those components interact, and having a way of deciding when you need to invoke Plan B (and Plans C, D, E, etc.). The book “Release It!” has a great exploration of the major patterns (timeouts, bulkheads, circuit-breakers, fail-fast, handshakes, decoupling, etc.) and how to think about capacity planning and monitoring. Tools such as Hystrix can help manage graceful degradation in distributed systems, but don’t remove the need for good design and ongoing rigorous testing.

Shortening time to recovery

If we accept that in a complex system some problems are inevitable, then we need to focus much of our attention on how to rectify them quickly when they do occur. We can break this down into three areas: time to notice, time to diagnose, and time to push a fix. Thoughtworks has been advising teams for a while to focus on mean time to recovery over mean time between failures.
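To make the three-part breakdown concrete, here is a small sketch that computes mean time to recovery and its components from incident records. The `Incident` fields and the `recovery_breakdown` function are hypothetical names, and the sample numbers are invented purely for illustration.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, Sequence


@dataclass(frozen=True)
class Incident:
    minutes_to_notice: float    # failure start -> alert or report
    minutes_to_diagnose: float  # alert -> cause understood
    minutes_to_fix: float       # cause understood -> fix live in production

    @property
    def minutes_to_recover(self) -> float:
        return self.minutes_to_notice + self.minutes_to_diagnose + self.minutes_to_fix


def recovery_breakdown(incidents: Sequence[Incident]) -> Dict[str, float]:
    """Average each phase across incidents, plus the overall mean time to recovery."""
    if not incidents:
        raise ValueError("at least one incident is required")
    return {
        "notice": mean(i.minutes_to_notice for i in incidents),
        "diagnose": mean(i.minutes_to_diagnose for i in incidents),
        "fix": mean(i.minutes_to_fix for i in incidents),
        "mttr": mean(i.minutes_to_recover for i in incidents),
    }


if __name__ == "__main__":
    history = [Incident(10.0, 30.0, 20.0), Incident(20.0, 10.0, 30.0)]
    for phase, minutes in recovery_breakdown(history).items():
        print(f"{phase:>8}: {minutes:.1f} min")
```

Splitting the total this way shows which phase dominates: a long time to notice points at monitoring and alerting, a long time to diagnose at observability, and a long time to fix at delivery lead time.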

Continuous Delivery

Time to push a fix once a problem has been diagnosed is largely about engineering lead time. Thoughtworks has destroyed multiple forests writing about how to optimize the path from feature/defect request to safely pushing a change, so I won’t revisit the principles and practices of Continuous Delivery here.

Monitoring and alerting

Monitoring is a detailed topic, but the core is to gather information that allows you to notice (and be alerted) when part of the system is no longer functioning as expected. Where possible, the focus should be on leading indicators that can alert you before an issue causes noticeable effects. It’s useful to monitor at three different levels:

- Infrastructure: disk, CPU, network, IO

- Application: request rate, latency, errors, saturation

- Business: funnel conversions, progress through standard flows etc.

Including key business metrics and watching for deviations from standard behavior is a particularly useful tool for measuring the negative impact of new features or code changes which may not be impacting the lower level metrics.
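One simple way to watch a business metric for deviations from standard behavior is to compare the current value against a recent baseline. The sketch below uses a basic z-score check; the function name, the threshold of three standard deviations, and the sample conversion rates are assumptions for illustration, and production systems often need something more robust to seasonality.

```python
from statistics import mean, stdev
from typing import Sequence


def deviates_from_baseline(
    baseline: Sequence[float],
    current: float,
    threshold: float = 3.0,
) -> bool:
    """Return True if `current` is more than `threshold` standard deviations
    away from the mean of the baseline samples."""
    if len(baseline) < 2:
        raise ValueError("need at least two baseline samples")
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any change at all is a deviation.
        return current != mu
    return abs(current - mu) / sigma > threshold


if __name__ == "__main__":
    # Hypothetical checkout conversion rates from the same hour on previous days.
    usual_conversion = [0.031, 0.029, 0.030, 0.032, 0.028]
    for observed in (0.030, 0.012):
        flagged = deviates_from_baseline(usual_conversion, observed)
        print(f"conversion {observed:.3f} -> alert: {flagged}")
```

A check like this can fire after a release even when CPU, latency, and error rates all look normal, which is the case the paragraph above describes.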

Observability

“Observability” is a concept that’s gathering traction. It focuses on designing applications so that they emit all the information required to rapidly discover the root cause of not just expected problems (known unknowns), but also the unexpected problems (unknown unknowns) that are typical of the types of issue encountered in complex distributed systems. Tooling and practices in this space are still evolving, but good approaches to structured logging, correlation IDs, and semantic monitoring are good starting points, as is the ability to query your time series logging and monitoring data in a correlated fashion through tools such as Prometheus or Honeycomb.

Starting early to avoid the S-curve

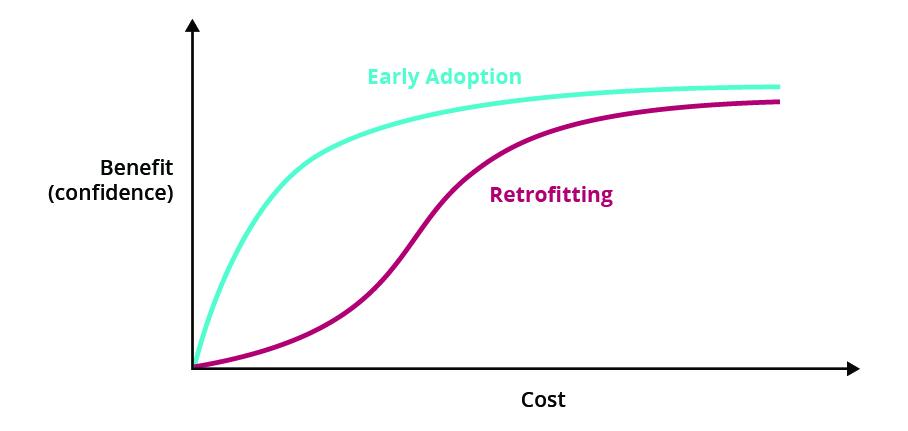

Earlier we talked about the cost-benefit curves of the various tactics you can apply to gain confidence in how your system will operate under abnormal load or unexpected conditions. We drew a nice convex curve to illustrate the trade-offs, but this is based on the assumption that the cost of getting started with a technique is low. The longer you wait to introduce a practice, the greater the ramp-up will be, and the convex curve will turn into more of an S-curve.

Figure 2: Starting practices early and late

For this reason, we recommend starting as many practices (load testing, observability, chaos engineering, etc) as early as possible to avoid the high cost associated with retrofitting a practice or test suite.

Conclusion

Accepting and preparing for unanticipated combinations of problems is a fact of life in modern complex systems. However, we believe the risks posed by these unknown unknowns can be heavily reduced by applying a combination of approaches:

- Stopping predictable problems through pre-production testing, layering together multiple approaches to gain the maximum benefit from the effort applied

- Limiting the impact of problems that occur under standard usage by carefully phasing in releases through incremental roll-outs and providing architectural bulwarks to prevent proliferation of problems

- Preparing to respond to unanticipated problems that happen under rare conditions by developing mature operational abilities to notice, diagnose and fix issues as they arise

- Applying hybrid techniques, such as chaos engineering, to both identify potential issues before they arise and at the same time test out your operational maturity

- Lowering the cost of these approaches by thinking about and applying them from early in the development lifecycle

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.