This blog post is based on "Beyond Moore’s Law: the next golden age of computer architecture," the e4r Thoughtworks-organized online symposium held in February 2022.

For the last 50 years, Moore’s Law has guided the computing industry. It wasn’t originally posited as a law, however; it was an observation made by Gordon Moore, then director of research and development at Fairchild Semiconductor: the number of transistors on a silicon chip doubles at a regular pace, roughly every two years (Moore’s original 1965 estimate was a doubling every year, which he revised in 1975).

This was a significant insight for the computing industry because transistors are fundamental to the processing work at the heart of computational devices. They are the building blocks for storing, processing and transferring bits, which means Moore’s Law has important consequences for qualities of computing such as speed, performance, reliability and energy consumption.
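To make the doubling rule concrete, here is a minimal Python sketch of the arithmetic. It assumes a clean doubling every two years (real process generations never tracked the curve this smoothly), and the starting count of one million transistors is purely hypothetical.

```python
def projected_transistors(n0: float, years: float, doubling_period: float = 2.0) -> float:
    """Transistor count `years` after a starting count `n0`, assuming the
    count doubles once every `doubling_period` years: N = n0 * 2**(years / period)."""
    return n0 * 2 ** (years / doubling_period)


# Hypothetical starting point: a chip with one million transistors.
start = 1_000_000
for years in (2, 10, 20):
    print(f"after {years:2d} years: {projected_transistors(start, years):,.0f}")
# after  2 years: 2,000,000
# after 10 years: 32,000,000
# after 20 years: 1,024,000,000
```

Twenty years at this pace is ten doublings, a factor of 1,024, which is why even a modest stretch in the doubling period adds up quickly.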

The end of Moore’s Law?

For nearly fifty years, Moore’s Law held true across digital electronics and computing, largely thanks to photolithography, the process used to pattern ever-smaller transistors onto silicon. Today, however, transistor features are approaching the size of individual atoms, which poses a serious challenge to existing lithography techniques. As a result, Moore’s Law is decelerating. As Prof. Kale of UIUC suggested at the symposium, the atomic limit will allow at most 100 billion transistors per chip, a level that can be reached by 2025.
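The same arithmetic can be run backwards to ask how much headroom remains before a hard cap such as the 100-billion-transistor limit Prof. Kale described. In this sketch the current count of 25 billion transistors is a hypothetical input, not a figure from the symposium.

```python
import math


def years_to_cap(current: float, cap: float, doubling_period: float = 2.0) -> float:
    """Years until a transistor count reaches `cap`, assuming steady doubling
    every `doubling_period` years."""
    if current >= cap:
        return 0.0
    doublings = math.log2(cap / current)  # how many times the count must double
    return doublings * doubling_period


# Hypothetical: 25 billion transistors today, capped at 100 billion.
print(years_to_cap(25e9, 100e9))  # 4.0
```

Under that assumption only two doublings of headroom remain, which amounts to just a few years at the historical pace.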

What next for silicon chip manufacturing?

The question, then, is what comes next. Is this the end of electronic computing as we know it, or can we invent techniques radically different from today’s? Until we do, how do we meet the ever-increasing demand for affordable, high-performance digital computing systems, and what can we do to mitigate this slowdown?

How can we mitigate the deceleration of Moore’s Law?

There are two broad ways to mitigate, or at least delay, the effects of Moore’s Law fading. One is to redefine the underlying hardware of our systems; the other is to evolve our software architecture. Both approaches will require significant investment of both money and people.

Innovations in hardware

Let’s begin with the most significant hardware challenges along with the new directions in research associated with them.

The practical limit for lithography comes from the extreme miniaturization of electronic components. At near-atomic scale, only a handful of silicon atoms span the critical features of a component. Continuing to advance complementary metal-oxide-semiconductor (CMOS) manufacturing therefore requires new approaches, such as nanosheet field-effect transistors (FETs). Researchers are also looking at advanced packaging and 3D chiplet architectures as the next logical step.

Nanosheet field effect transistor technology will keep Moore's Law alive for many more years.


Chiplets are smaller chips that are fabricated separately and then joined using direct copper-to-copper bonding on a silicon wafer. Advanced packaging allows a higher density of components within a single package, and modern packaging architectures are designed to balance performance, power, area, and cost.

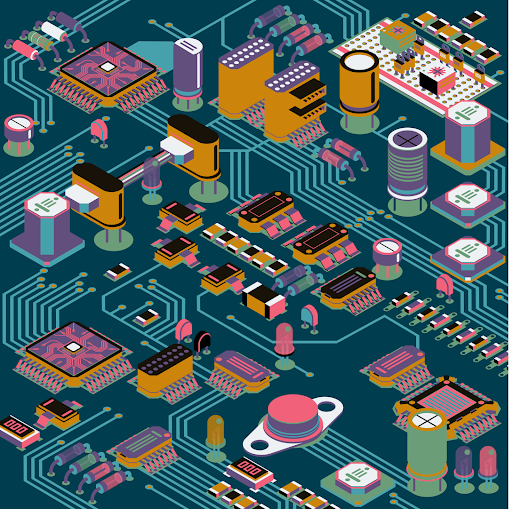
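One commonly cited cost argument for chiplets concerns manufacturing yield: a defect ruins only the small chiplet it lands on rather than a whole large die. The sketch below uses the simple Poisson yield model, assumes each chiplet is tested before assembly, ignores packaging and bonding costs, and plugs in hypothetical area and defect-density numbers.

```python
import math


def poisson_yield(area_cm2: float, defects_per_cm2: float) -> float:
    """Fraction of dies with zero defects under a simple Poisson model: Y = exp(-A * D0)."""
    return math.exp(-area_cm2 * defects_per_cm2)


def silicon_per_good_system(total_area_cm2: float, defects_per_cm2: float, n_chiplets: int = 1) -> float:
    """Wafer area consumed per working system when the design is split into
    n equal chiplets, each tested before assembly (packaging costs ignored)."""
    chiplet_area = total_area_cm2 / n_chiplets
    good_fraction = poisson_yield(chiplet_area, defects_per_cm2)
    return n_chiplets * chiplet_area / good_fraction


# Hypothetical design: 6 cm^2 of logic, 0.2 defects per cm^2.
monolithic = silicon_per_good_system(6.0, 0.2, n_chiplets=1)
four_chiplets = silicon_per_good_system(6.0, 0.2, n_chiplets=4)
print(f"monolithic: {monolithic:.1f} cm^2, four chiplets: {four_chiplets:.1f} cm^2")
```

Under these assumptions the monolithic design consumes more than twice as much silicon per working part. In practice that saving has to be weighed against the extra cost of packaging and die-to-die links, which is the balance modern packaging architectures try to strike.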

Moving towards domain specific architectures

Today we are in the era of multi-core processors, which emerged to handle mixed workloads and to work around the clock-speed limits of single-core processors. “There is an excessive demand for heterogeneous computing architectures,” said Dr Ranjani Narayan, co-founder and CEO of Morphing Machines. The solution, she suggested, is “Software-defined Domain Specific Architecture (DSA).”

Non-CPU devices such as Graphics Processing Units (GPUs), Field Programmable Gate Arrays (FPGAs), and Application Specific Integrated Circuits (ASICs) are examples of DSAs. Runtime-configurable processor architectures are also part of today’s DSA repertoire. One of them, REDEFINE, is a multi-node processor that can be reconfigured at runtime, which makes REDEFINE-based devices resilient to runtime failures. REDEFINE can also switch off individual nodes that aren’t needed, saving power.
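The runtime behaviour described above (remapping work away from failed nodes and switching off idle ones) can be modelled in a few lines. This is a hypothetical toy, not REDEFINE’s actual design or interface; the class and method names are invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    node_id: int
    healthy: bool = True
    powered: bool = True
    tasks: list = field(default_factory=list)


class ReconfigurableFabric:
    """Hypothetical multi-node processor whose task mapping can change at runtime."""

    def __init__(self, n_nodes: int):
        self.nodes = [Node(i) for i in range(n_nodes)]

    def assign(self, task: str) -> int:
        """Map a task onto the least-loaded healthy node, powering it on if needed."""
        healthy = [n for n in self.nodes if n.healthy]
        if not healthy:
            raise RuntimeError("no healthy nodes left")
        target = min(healthy, key=lambda n: len(n.tasks))
        target.powered = True
        target.tasks.append(task)
        return target.node_id

    def mark_failed(self, node_id: int) -> None:
        """Take a failed node out of service and remap its tasks elsewhere."""
        node = self.nodes[node_id]
        node.healthy = False
        orphaned, node.tasks = node.tasks, []
        for task in orphaned:
            self.assign(task)

    def power_gate_idle(self) -> list:
        """Switch off every node with no work; return the IDs of powered-off nodes."""
        for n in self.nodes:
            if not n.tasks:
                n.powered = False
        return [n.node_id for n in self.nodes if not n.powered]


fabric = ReconfigurableFabric(4)
fabric.assign("decode")   # lands on node 0
fabric.assign("filter")   # lands on node 1
fabric.mark_failed(0)     # "decode" is remapped to node 2
print(fabric.power_gate_idle())  # [0, 3]
```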

AI-driven high performance computing

Artificial intelligence (AI) and machine learning require significant compute power, but they are also proving invaluable in unlocking further computational gains. In high performance computing (HPC), for example, AI is moving from being a consumer of HPC to actually driving it. We may be approaching a future in which AI-driven supercomputers and clouds merge into one, with AI penetrating the entire software stack and replacing compute-intensive libraries with learned surrogates.
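As a toy illustration of a “learned surrogate”, the sketch below replaces a costly numerical routine with a cheap model fitted from a handful of its outputs. Real surrogates are typically trained neural networks; here a lookup table with linear interpolation stands in for the trained model, and `expensive_kernel` is a hypothetical placeholder for a compute-intensive library call.

```python
import bisect
import math


def expensive_kernel(x: float, steps: int = 10_000) -> float:
    """Hypothetical stand-in for a costly library call: integrates sin(t) from 0 to x
    with the midpoint rule. (The exact answer is 1 - cos(x).)"""
    h = x / steps
    return h * sum(math.sin((i + 0.5) * h) for i in range(steps))


class TableSurrogate:
    """Cheap surrogate 'trained' by sampling a function on a uniform grid and
    answering queries by linear interpolation between neighbouring samples."""

    def __init__(self, fn, lo: float, hi: float, n_samples: int):
        self.xs = [lo + (hi - lo) * i / (n_samples - 1) for i in range(n_samples)]
        self.ys = [fn(x) for x in self.xs]  # the only calls to the expensive function

    def __call__(self, x: float) -> float:
        # Locate the grid interval containing x (clamped to the table's range).
        i = bisect.bisect_right(self.xs, x) - 1
        i = max(0, min(i, len(self.xs) - 2))
        x0, x1 = self.xs[i], self.xs[i + 1]
        y0, y1 = self.ys[i], self.ys[i + 1]
        return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


surrogate = TableSurrogate(expensive_kernel, 0.0, math.pi, n_samples=33)
x = 1.234
print(expensive_kernel(x), surrogate(x), 1 - math.cos(x))
```

After the one-off sampling cost, each surrogate query is a binary search plus a few arithmetic operations instead of ten thousand sine evaluations, at the price of a small approximation error.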

Global AI Modelling Supercomputers (GAIMSC) are on the horizon. They will revolutionize the supercomputing industry.


HPC is often used for big data analysis. As with AI, processing data at huge scales is expensive and demands serious computational resources. Fortunately, data-centric approaches and data-driven architectures can make data movement more efficient and reduce energy consumption, which means analytics projects can keep pushing the boundaries of innovation even if chip manufacturing cannot. Key architectural concerns for the future include processing data in or near memory and storage; hybrid or compressed memory; low-latency, low-energy data access; and intelligent controllers for security.
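The payoff of processing data near where it is stored can be seen by simply counting bytes. This sketch assumes JSON-serialized size as a rough proxy for interconnect traffic and uses synthetic sensor records; the same filter runs either on the host (after shipping every record) or next to the data (shipping only the matches).

```python
import json

# Synthetic records on a storage node (hypothetical sensor data).
RECORDS = [{"sensor": i % 10, "reading": (i * 37) % 1000} for i in range(10_000)]


def bytes_moved(records) -> int:
    """Rough proxy for interconnect traffic: size of the JSON-encoded records."""
    return len(json.dumps(records).encode("utf-8"))


def host_side_filter(records, threshold):
    """Ship every record to the host, then filter there."""
    shipped = bytes_moved(records)
    return [r for r in records if r["reading"] > threshold], shipped


def near_data_filter(records, threshold):
    """Filter next to the data and ship only the matching records."""
    matches = [r for r in records if r["reading"] > threshold]
    return matches, bytes_moved(matches)


host_result, host_bytes = host_side_filter(RECORDS, 950)
near_result, near_bytes = near_data_filter(RECORDS, 950)
assert host_result == near_result  # same answer either way
print(f"host-side: {host_bytes:,} bytes, near-data: {near_bytes:,} bytes")
```

Both strategies return identical results; the only difference is how much data crosses the interconnect, which is where much of the time and energy goes.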

Teaching photons new tricks

One potential avenue is to combine two technologies and leverage the advantages of both, for example by using photonics alongside digital electronics. “Photons and silicon are old dogs. How can you teach an old dog a new trick?” asked Prof. Selvaraja of IISc Bengaluru at February’s symposium. Bandwidth is the bottleneck in today’s processors. To illustrate the problem, he compared a processor to a skyscraper served by a single lift of average speed: the building can hold plenty, but moving people in and out is slow. In other words, storage isn’t the problem; transport is. In multicore processors, this could be addressed by using optical fibers to connect the cores to memory. Optical wire-bonding can connect logic and memory on a chip with a laser, dramatically improving speed while also reducing latency and noise.
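The lift analogy corresponds closely to the roofline model, a standard way to reason about whether a workload is limited by compute or by memory bandwidth. The processor and kernel numbers below are hypothetical and only illustrate the shape of the argument.

```python
def attainable_gflops(peak_gflops: float, bandwidth_gb_s: float, flops_per_byte: float) -> float:
    """Roofline model: achievable throughput is capped either by peak compute or
    by memory bandwidth multiplied by arithmetic intensity (flops per byte moved)."""
    return min(peak_gflops, bandwidth_gb_s * flops_per_byte)


# Hypothetical processor: 1,000 GFLOP/s peak, 100 GB/s memory bandwidth,
# running a kernel that performs 0.5 flops per byte it loads.
print(attainable_gflops(1000, 100, 0.5))  # 50.0: bandwidth-bound
print(attainable_gflops(2000, 100, 0.5))  # 50.0: doubling compute changes nothing
print(attainable_gflops(1000, 200, 0.5))  # 100.0: doubling bandwidth doubles speed
```

For a kernel like this, adding floors to the skyscraper (more compute) does nothing; a faster lift (more bandwidth, for instance through optical interconnects) is what helps.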

Could we make silicon chips that work like the brain?

Prof. Selvaraja also discussed the functionality of the human brain. He suggested the possibility of mirroring it using a neuromorphic photonics network. Neuromorphic computing is a bottom-up approach to computer architecture. Scientists want to apply the latest neuroscience insights to create chips that function like the human brain. “In the brain, the processing happens inside the storage where the information is kept. Neuromorphic photonic networks can replicate the neuron structure of the brain. This works perfectly for the photons. Photonics can be one of the platforms to implement neuromorphic circuits. The heart of the processor can be a photonics integrated circuit,” he said.
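The idea that the brain processes information where it is stored can be illustrated with the leaky integrate-and-fire (LIF) neuron, a standard model in neuromorphic computing. This is a plain software sketch, not a photonic design, and the leak and threshold values are arbitrary choices.

```python
def lif_neuron(inputs, leak=0.9, threshold=1.0):
    """Discrete-time leaky integrate-and-fire neuron.

    The membrane potential `v` is both the neuron's stored state and the value
    being computed on, so processing happens where the information is kept."""
    v = 0.0
    spikes = []
    for x in inputs:
        v = leak * v + x        # leak a little charge, then integrate the new input
        if v >= threshold:
            spikes.append(1)    # fire a spike...
            v = 0.0             # ...and reset the potential
        else:
            spikes.append(0)
    return spikes


print(lif_neuron([0.3, 0.3, 0.3, 0.3, 0.0, 0.6, 0.6]))  # [0, 0, 0, 1, 0, 0, 1]
```

Weak inputs accumulate until the threshold is crossed; a pause lets the charge decay, so information about timing, not just magnitude, shapes the output.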

Innovations in software

All the techniques we have discussed above are about evolving hardware. But what about software? Let’s review a few important ideas.

OmniX

As we have seen, many of these hardware technologies are still on the horizon and need time to reach the market. Domain-specific architectures, also known as accelerators, are bridging the gap by delivering high performance at lower cost and energy consumption. A challenge remains, however: operating systems run only on CPUs, so all the necessary instructions have to be “passed through” the CPU. Fortunately, there is a solution to this CPU-centric problem: OmniX, an accelerator-centric OS architecture.

OmniX uses smart network interface cards (SmartNICs), programmable network adapters that act as accelerators in their own right and help create secure, flexible, data-centric systems. A single SmartNIC can support up to 100 accelerators performing neural network inference, which can considerably reduce the load on the CPU.
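The difference between the two designs is essentially about who sits on the data path. The toy model below is hypothetical and does not use any OmniX interface: it counts how many request hops touch the CPU when every request is staged through it, versus when a programmable NIC dispatches requests directly to 100 accelerators.

```python
from collections import Counter


def cpu_centric(requests):
    """Every request is staged through the CPU on its way to an accelerator."""
    hops = Counter()
    for _ in requests:
        hops["nic->cpu"] += 1
        hops["cpu->accelerator"] += 1
    return hops


def nic_centric(requests, n_accelerators):
    """A programmable NIC forwards each request straight to an accelerator
    (round-robin here), keeping the CPU off the data path."""
    hops = Counter()
    placement = {}
    for i, req in enumerate(requests):
        placement[req] = i % n_accelerators
        hops["nic->accelerator"] += 1
    return hops, placement


requests = [f"inference-{i}" for i in range(1000)]
cpu_hops = cpu_centric(requests)
nic_hops, placement = nic_centric(requests, n_accelerators=100)
print(cpu_hops["nic->cpu"], nic_hops["nic->cpu"])  # 1000 0
```

Round-robin is the simplest possible dispatch policy; a real system would also account for accelerator load, data locality and isolation.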

Domain specific programming languages

In-memory, accelerator-centric, and neuromorphic processing designs are prime examples of non-traditional computer architectures. Despite their clear benefits, these systems will pose significant challenges to existing computing ecosystems and will likely require new tools and skills to develop and maintain effectively. This is where domain-specific languages (DSLs) could prove useful, by helping to bridge the programmability gap.

The golden era in computer architecture requires major changes in programming to democratize heterogeneous and emerging high-performance computing.


New versions of such languages should provide enough abstraction that programmers aren’t overwhelmed by the intricacies of the underlying domain-specific hardware. Popular DSLs already in use today include Halide (image processing), TensorFlow (machine learning), and Firedrake (finite-element simulation).
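A central idea behind Halide is separating the algorithm (what to compute) from the schedule (the order and grouping in which it is computed), so the schedule can be tuned for each hardware target without touching the algorithm. Halide itself is embedded in C++; this plain-Python sketch only mimics the idea, using a hypothetical 3-tap blur and two hand-written schedules.

```python
def blur_x(img, x, y):
    """Algorithm: WHAT each output pixel is (a 3-tap horizontal box blur)."""
    row = img[y]
    return (row[x - 1] + row[x] + row[x + 1]) / 3


def schedule_row_major(img, fn):
    """Schedule 1: compute outputs one full row at a time."""
    h, w = len(img), len(img[0])
    return [[fn(img, x, y) for x in range(1, w - 1)] for y in range(h)]


def schedule_tiled(img, fn, tile=4):
    """Schedule 2: compute outputs in small square tiles
    (on real hardware, tiling can improve cache locality)."""
    h, w = len(img), len(img[0])
    out = [[0.0] * (w - 2) for _ in range(h)]
    for ty in range(0, h, tile):
        for tx in range(1, w - 1, tile):
            for y in range(ty, min(ty + tile, h)):
                for x in range(tx, min(tx + tile, w - 1)):
                    out[y][x - 1] = fn(img, x, y)
    return out


img = [[(x * y) % 7 for x in range(10)] for y in range(9)]
# Same algorithm, different schedules, identical results.
print(schedule_row_major(img, blur_x) == schedule_tiled(img, blur_x))  # True
```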

Supercomputers

The supercomputers of the future will be limited more by capital and energy budgets than by Moore’s Law. Parallel computing across multi-node clusters has always been a powerful technique for high performance computing, and a lot can still be done with it.

Backed by an adaptive runtime system, Charm++ is a general-purpose parallel programming model for supercomputing applications. This combination yields portable performance and many productivity benefits. The model is object-based, message-driven, and runtime-assisted.
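Charm++ encourages over-decomposition: splitting a problem into many more objects than processors so the runtime can migrate them to balance load. The sketch below shows only a greedy balancing rule in that spirit (heaviest object first, onto the least-loaded processor); it is not Charm++’s API, and the object loads are hypothetical.

```python
import heapq


def greedy_rebalance(object_loads: dict, n_pes: int):
    """Place work objects on processing elements (PEs): heaviest object first,
    always onto the PE with the least load so far."""
    heap = [(0.0, pe) for pe in range(n_pes)]  # (current load, PE id)
    heapq.heapify(heap)
    placement = {}
    for obj, load in sorted(object_loads.items(), key=lambda kv: kv[1], reverse=True):
        pe_load, pe = heapq.heappop(heap)
        placement[obj] = pe
        heapq.heappush(heap, (pe_load + load, pe))
    per_pe = [0.0] * n_pes
    for obj, pe in placement.items():
        per_pe[pe] += object_loads[obj]
    return placement, per_pe


# Hypothetical: 16 objects with uneven measured loads, over-decomposed onto 4 PEs.
loads = {f"obj{i}": 1.0 + (i % 5) for i in range(16)}
placement, per_pe = greedy_rebalance(loads, n_pes=4)
print(per_pe)
```

Because there are many small objects per processor, the runtime has enough freedom to even out the load; with one object per processor, no placement could fix an imbalance.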

Open hardware

One of the most interesting and potentially impactful trends that will shape the future of computing is open hardware. Like open source software, it is about collaborative, democratized hardware development and innovation. One of the key organizations behind this movement is the CHIPS Alliance, part of the Linux Foundation, which serves as a hub for collaboration across academia and industry. Speaking at the symposium, Rob from CHIPS Alliance suggested that the driving force of the movement could be the RISC-V ISA, an open alternative to proprietary ISAs such as x86 and ARM.

Open source EDA (electronic design automation) software, the open IP community, and related business services all face challenges today, but business models are evolving to embrace open source development. Corporations are innovating at higher levels, leveraging open source IP to accelerate time to market. In the coming years, a global collaborative ecosystem could give rise to new strategies for overcoming today’s challenges.
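Part of what makes RISC-V open is that its instruction encodings are published for anyone to implement. As a small illustration, this sketch packs an I-type instruction following the base integer format in the public specification; the helper names are hypothetical, not taken from any toolchain.

```python
def encode_i_type(opcode: int, rd: int, funct3: int, rs1: int, imm: int) -> int:
    """Pack a RISC-V I-type instruction: imm[11:0] | rs1 | funct3 | rd | opcode."""
    if not -2048 <= imm <= 2047:
        raise ValueError("immediate must fit in 12 signed bits")
    return ((imm & 0xFFF) << 20) | (rs1 << 15) | (funct3 << 12) | (rd << 7) | opcode


OP_IMM = 0b0010011  # major opcode for register-immediate ALU instructions
ADDI_FUNCT3 = 0b000


def addi(rd: int, rs1: int, imm: int) -> int:
    """Encode `addi rd, rs1, imm` (rd = rs1 + imm)."""
    return encode_i_type(OP_IMM, rd, ADDI_FUNCT3, rs1, imm)


print(hex(addi(1, 0, 5)))  # 0x500093  (addi x1, x0, 5)
print(hex(addi(0, 0, 0)))  # 0x13      (the canonical nop)
```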

Looking ahead to the future

With all these intriguing research directions, the future of computing beyond Moore’s Law shouldn’t be viewed as gloomy. On the contrary, there are many reasons for optimism: the slowdown has opened a new window of hope and opportunity. The future belongs to “complex systems” and their solutions. High-level domain-specific languages and architectures, open source ecosystems, improved security, and agile chip manufacturing will set the scene for a new golden age of hardware. RISC will remain, and the most rapid architectural improvements will be seen in cost, energy, security, and performance.

The next generation of engineering professionals will need to engage with these concepts and ideas to continue to build high performance computing systems and solve complex and multifaceted real-world problems for people, businesses, and the world.

Thank you to Chhaya Yadav, Harshal Hayatnagarkar and Richard Gall for their invaluable feedback and reviews and to Priyanka Poddar for her illustrations.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.