When ChatGPT was released to the public as a chatbot in November 2022, it made waves, becoming the fastest-growing app of all time. GPT-3.5, the app's base model, allowed its users to experience LLMs (large language models) in an interactive and intuitive way. A language model is a type of machine learning model that predicts the most likely next word given the preceding text, and a large language model is simply a language model with a very large number of parameters. GPT-3.5 has at least 175 billion parameters, while other LLMs, such as Google's LaMDA and PaLM and Meta's LLaMA, have largest versions ranging from 65 billion (LLaMA) to 540 billion (PaLM) parameters.

The main emergent abilities of LLMs include:

Language ability: LLMs can generate fluent and coherent language.

World knowledge: LLMs "remember" vast amounts of knowledge from their training text.

Contextual learning ability: LLMs can learn from input text and extract knowledge without requiring fine-tuning or updating parameters.

Logical reasoning ability: LLMs demonstrate logical reasoning ability, although their reasoning isn’t always correct.

Alignment ability: LLMs can “understand” and follow human-language instructions.

These abilities can be useful for a lot more than idle chat. Prompt engineering is one of the ways in which we can fully leverage the abilities of LLMs.

What is prompt engineering?

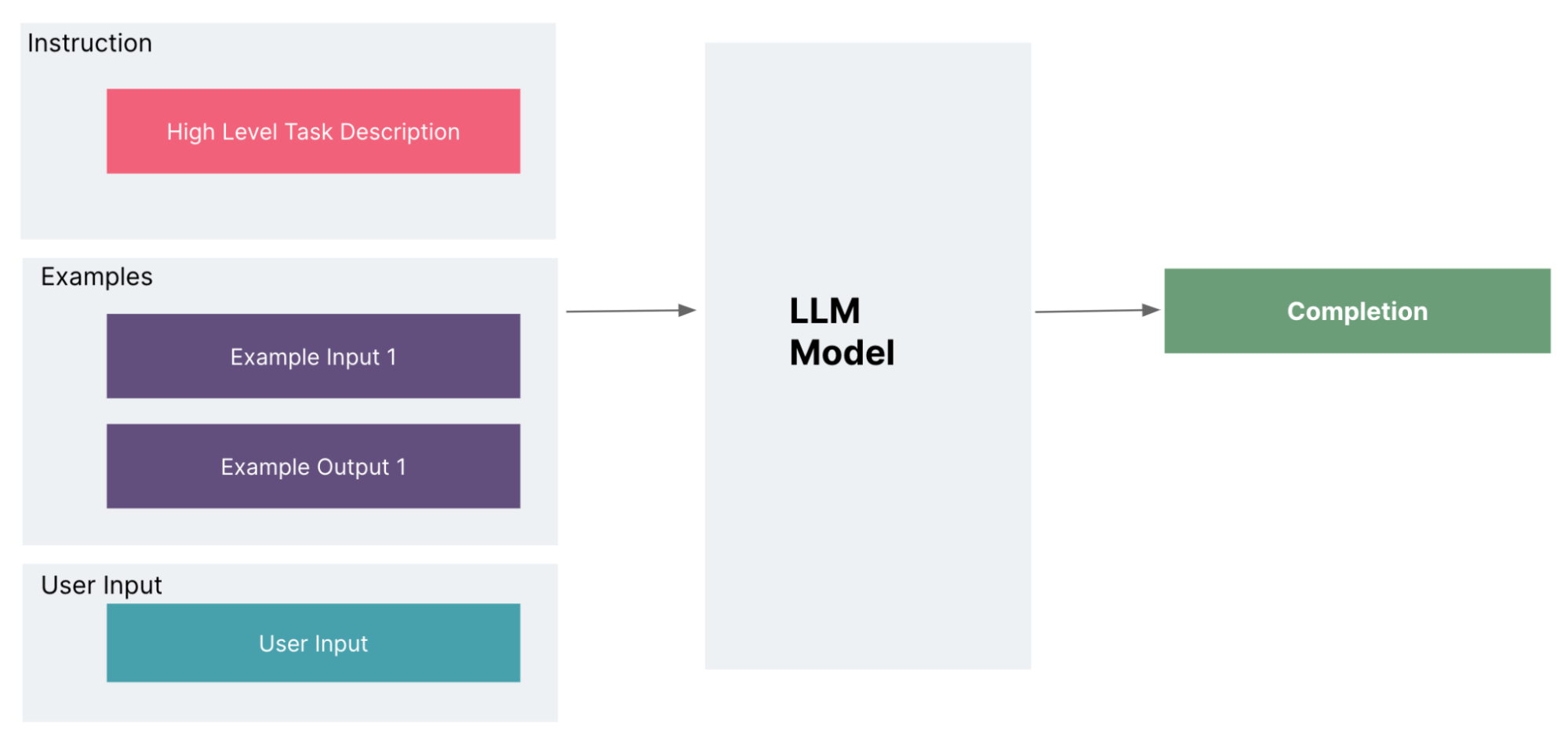

Prompt engineering is about crafting prompts that guide an LLM toward a desired output. It first emerged with the release of GPT-3 in 2020. At that time, a typical prompt included a task description, user input and examples. The examples were needed because of LLMs' weak alignment ability, that is, their limited capacity to follow a given instruction. The following image shows the composition of a typical prompt.

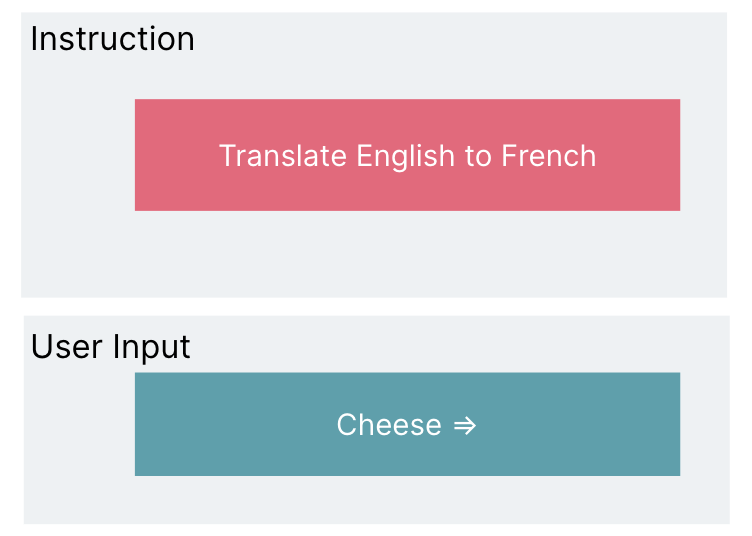

And here is an example taken from the GPT-3 paper.

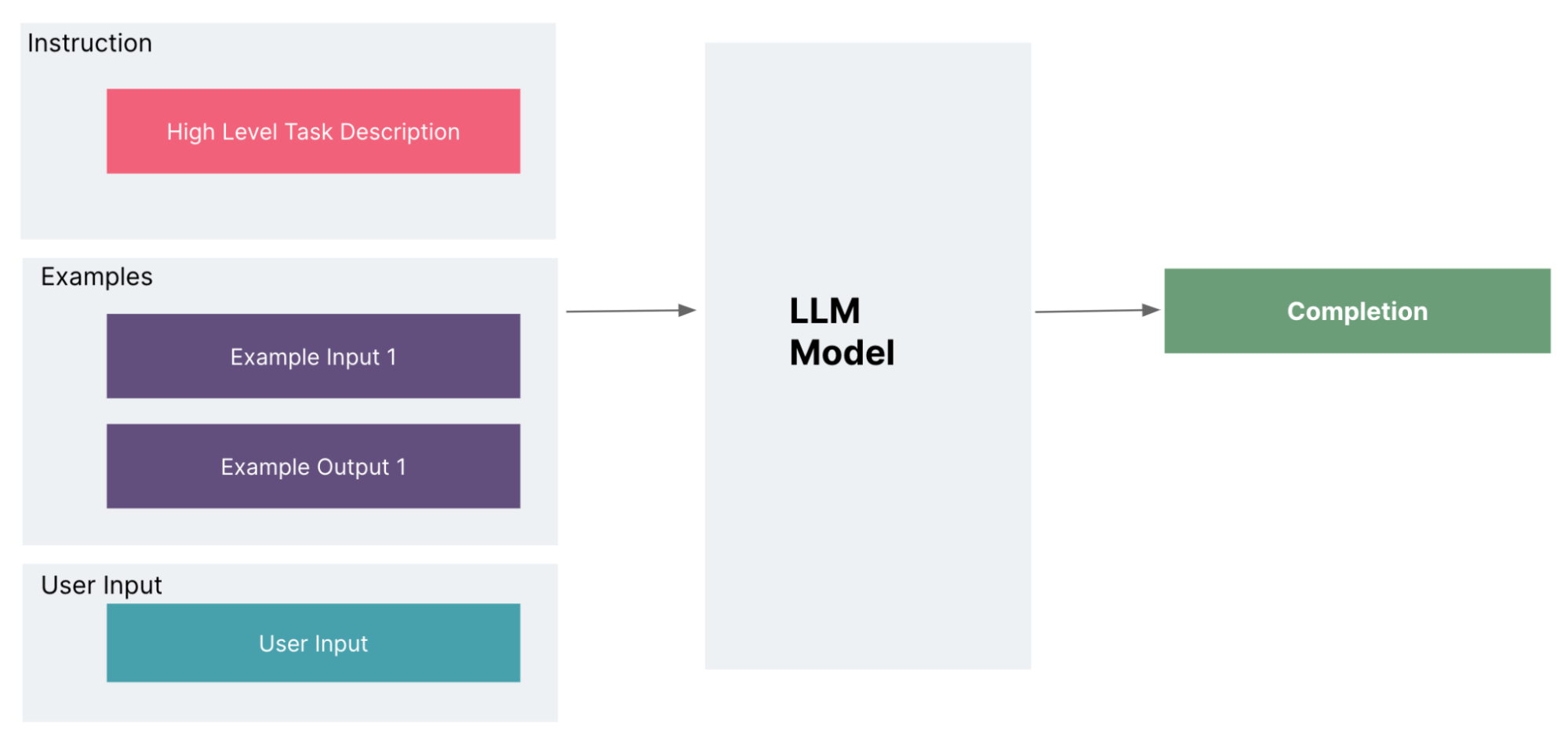

As LLMs' alignment ability improved, examples in prompts often became unnecessary. Clear instructions stated in natural language are now enough, so the prompt above can be converted to the following:

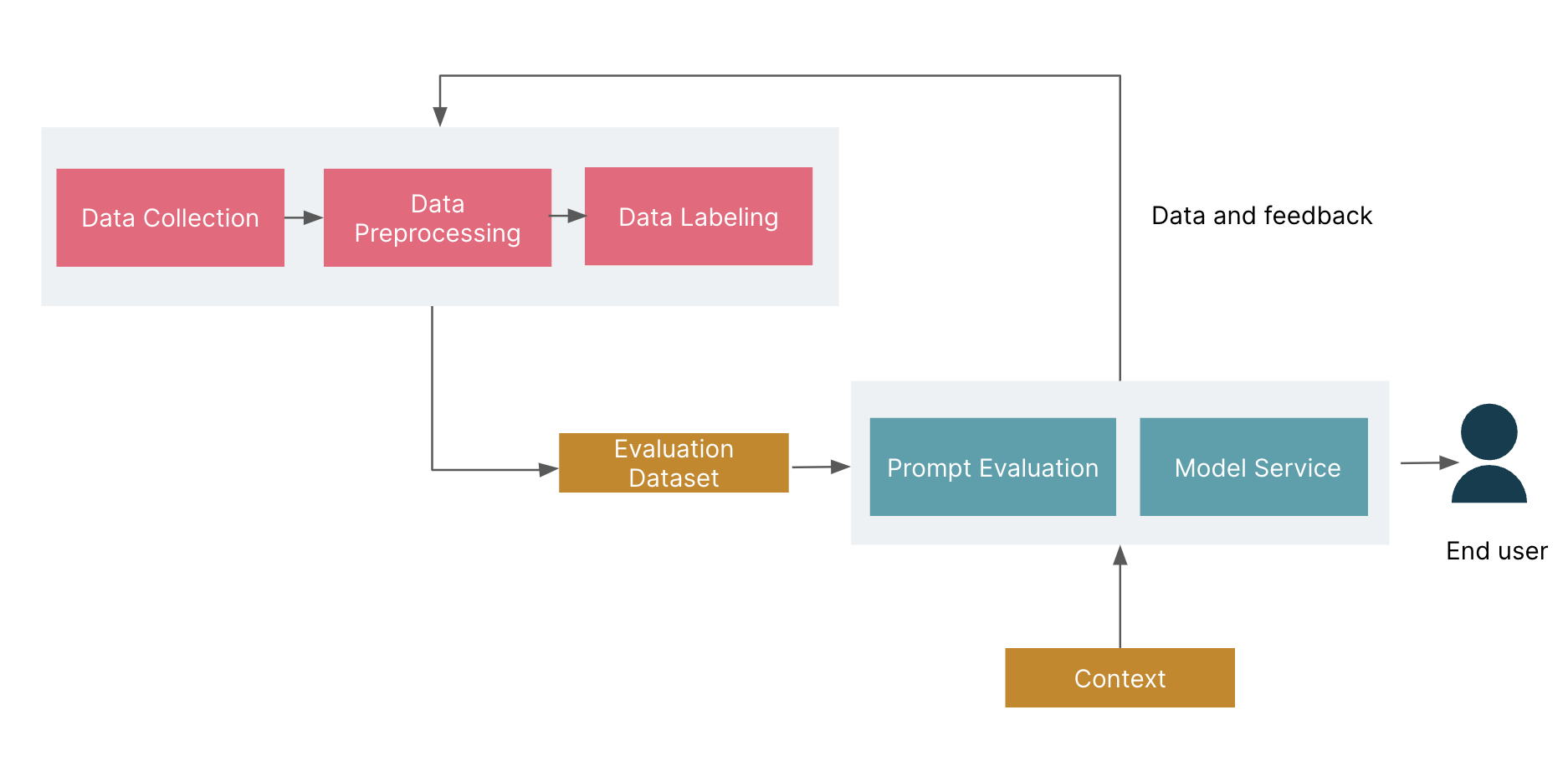
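The two prompt layouts described above can be written down as plain strings. This is a minimal sketch. The helper names, the `=>` separator and the English-to-French translation task are illustrative assumptions, not the exact content of the figures.

```python
def few_shot_prompt(task, examples, query):
    """GPT-3-era layout: task description, then worked examples, then the new input."""
    lines = [task]
    # Each worked example shows the model an input and the output we expect.
    lines += [f"{source} => {target}" for source, target in examples]
    # The final line is left open for the model to complete.
    lines.append(f"{query} =>")
    return "\n".join(lines)


def instruction_prompt(instruction, query):
    """Instruction-only layout: a well-aligned model needs just the request and the input."""
    return f"{instruction}\nText: {query}"


few = few_shot_prompt("Translate English to French:",
                      [("sea otter", "loutre de mer"), ("cheese", "fromage")],
                      "bread")
zero = instruction_prompt("Translate the text below from English to French. "
                          "Reply with the translation only.", "bread")
print(few)
print(zero)
```

The instruction-only version replaces the examples with an explicit description of the expected output. This relies on the model's alignment ability rather than on pattern completion.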

Paradigm Shift: from machine learning engineering to prompt engineering

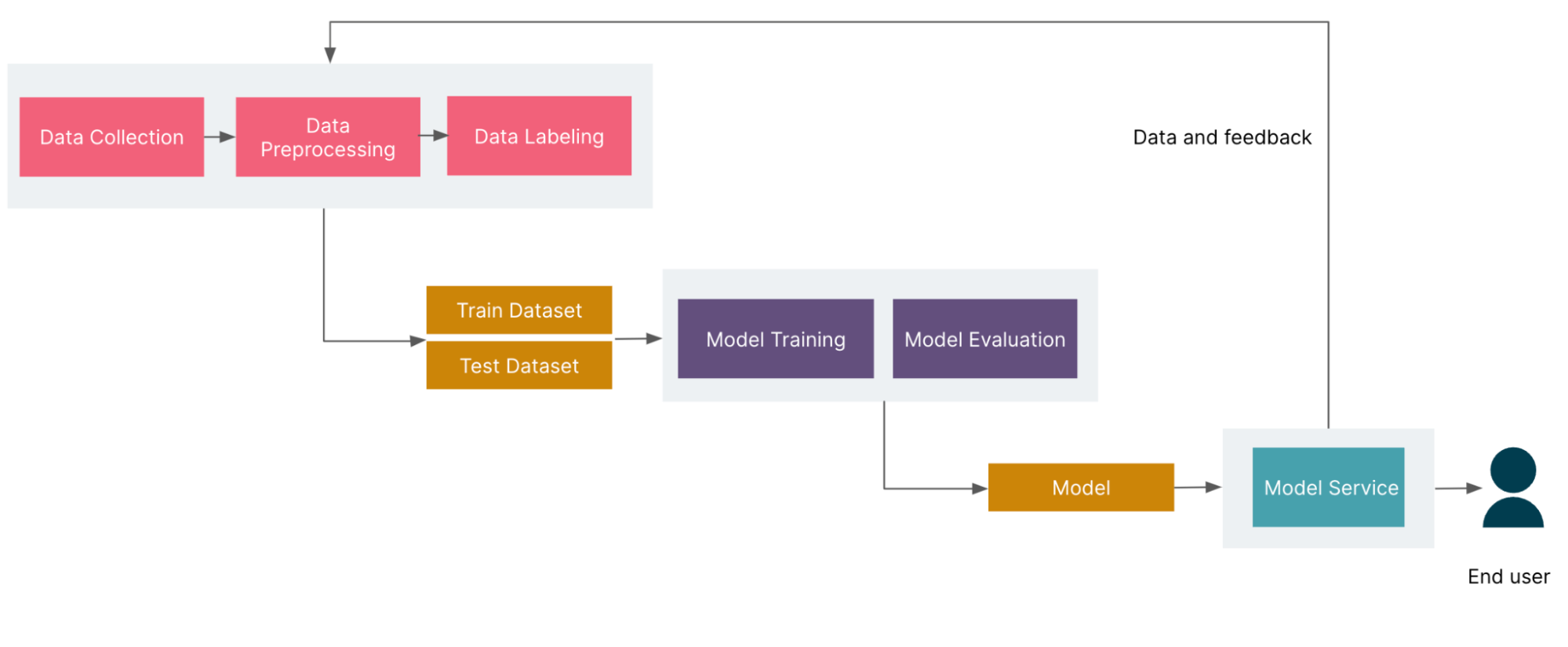

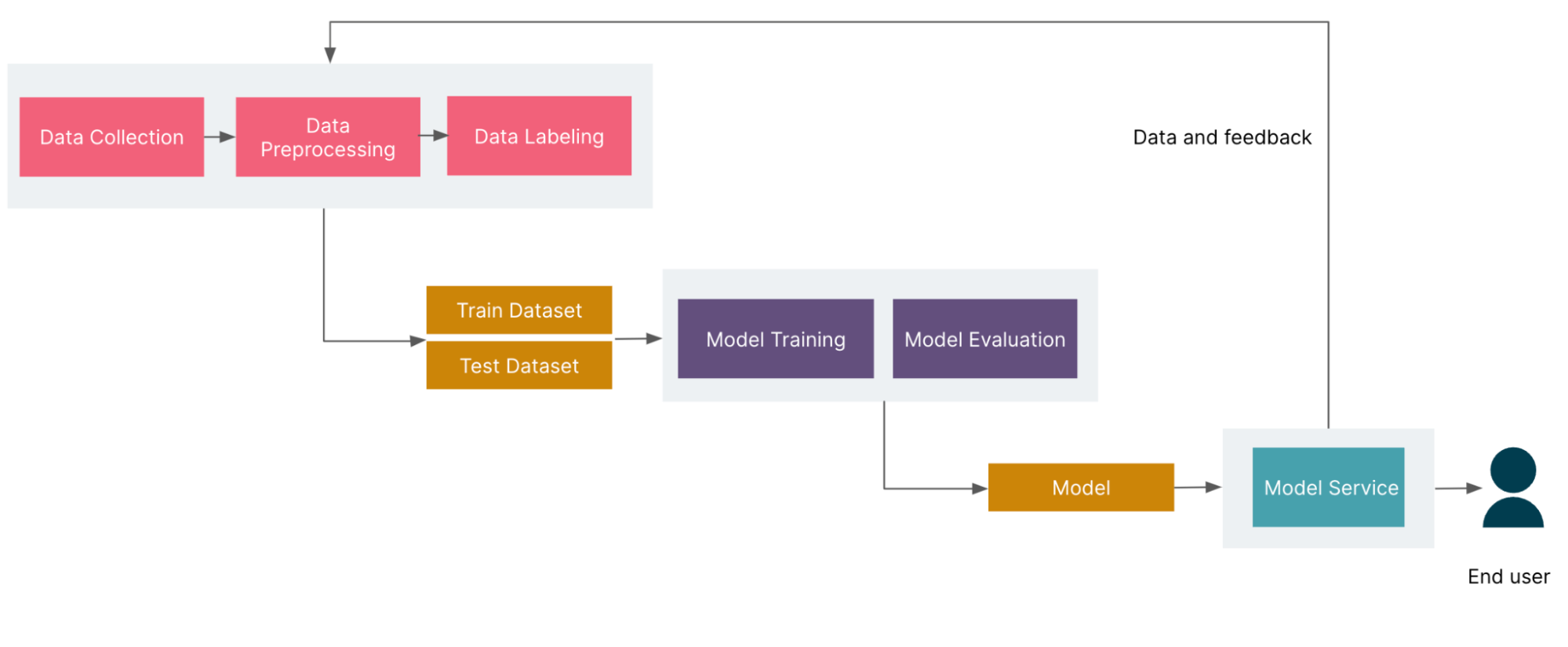

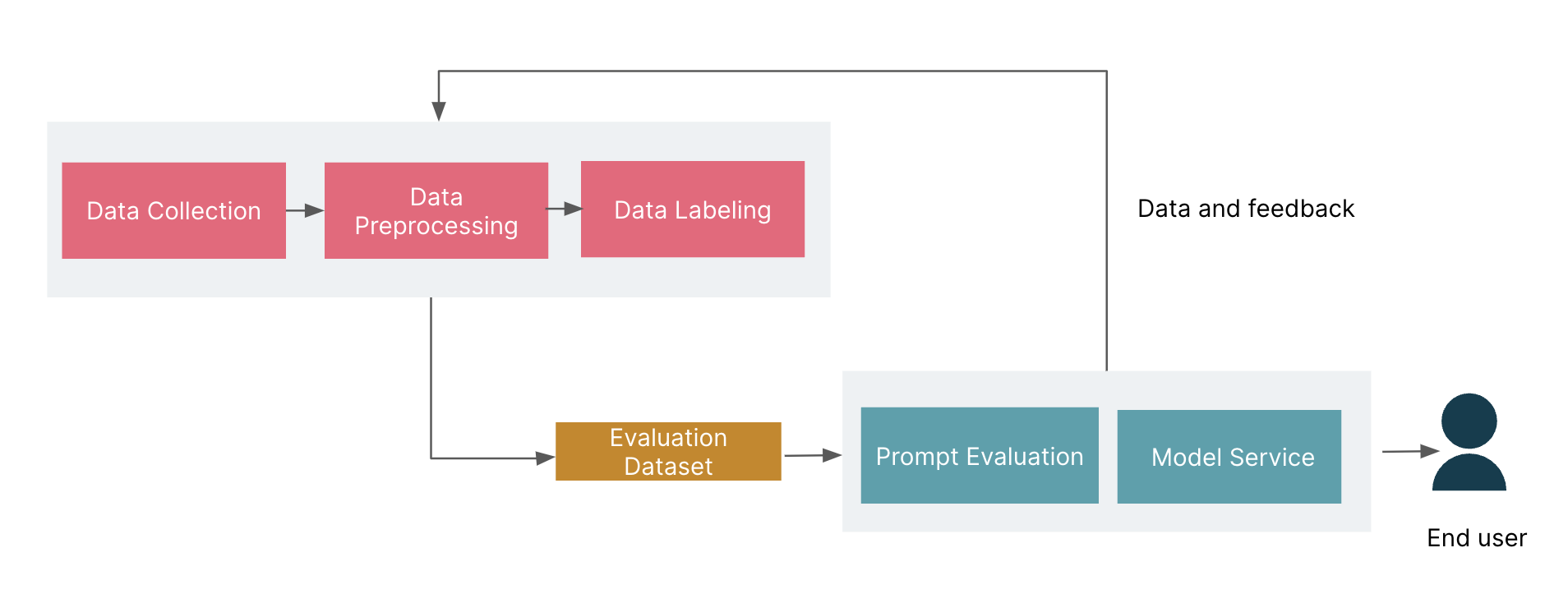

Using LLMs in combination with prompt engineering can change the current practice of machine learning projects. The process of machine learning engineering is shown in the following image:

Fig. The basic workflow of machine learning engineering

The process begins with data collection, where data is manually cleaned and labeled to generate training and testing datasets. The training dataset is used for model training, while the testing dataset is used to evaluate the model's accuracy. Typically, the training dataset accounts for around 90% of the total dataset, while the testing dataset accounts for around 10%.
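The 90/10 split described above can be sketched in a few lines. This assumes the labeled data is a list of (text, label) pairs. The function name `split_dataset` and the toy reviews are hypothetical.

```python
import random


def split_dataset(samples, test_fraction=0.1, seed=42):
    """Shuffle labeled samples, then split them into training and testing sets."""
    shuffled = list(samples)
    # A seeded generator makes the split reproducible between runs.
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


labeled = [(f"review {i}", "positive" if i % 2 else "negative") for i in range(100)]
train_set, test_set = split_dataset(labeled)
print(len(train_set), len(test_set))  # 90 10
```

In practice, scikit-learn's `sklearn.model_selection.train_test_split` with `test_size=0.1` does the same job. It also offers options such as stratification.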

After completing the dataset, the model training stage begins. This stage typically uses AutoML technology to train multiple models and automatically adjust hyperparameters to select the best performing model on the training and testing datasets.
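AutoML tools search over many model types and hyperparameters. The core loop, however, is "train each candidate, score it, keep the best". The toy sketch below makes several assumptions: a 1-D k-nearest-neighbours classifier whose only hyperparameter is `k`, and made-up data. It scores candidates on a separate validation split, a common practice that keeps the test set for the final accuracy estimate.

```python
from collections import Counter


def knn_predict(train, x, k):
    """Label x by majority vote among its k nearest training points (1-D features)."""
    nearest = sorted(train, key=lambda pair: abs(pair[0] - x))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def accuracy(train, data, k):
    """Fraction of (x, label) pairs in data that the k-NN model labels correctly."""
    return sum(knn_predict(train, x, k) == y for x, y in data) / len(data)


def select_k(train, validation, candidates):
    """Try every candidate k and keep the one with the highest validation accuracy."""
    scores = {k: accuracy(train, validation, k) for k in candidates}
    return max(scores, key=scores.get), scores


# Toy data: two clusters, with one noisy "b" point inside the "a" cluster.
train = [(0.0, "a"), (0.1, "a"), (0.2, "a"), (0.3, "b"),
         (1.0, "b"), (1.1, "b"), (1.2, "b")]
validation = [(0.28, "a"), (1.05, "b")]
best_k, scores = select_k(train, validation, candidates=[1, 3, 7])
print(best_k, scores)  # 3 {1: 0.5, 3: 1.0, 7: 0.5}
```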

Once model training is completed, the model needs to be deployed online. During the deployment process, user data and feedback are collected and used to update the dataset for the next iteration of the model.

The main challenges in the iterative process of machine learning projects are:

A lack of training data: models typically require a very large amount of data.

The need for manual intervention to annotate data: once data has been collected, many machine learning projects require people to label it.

Difficulty in predicting model performance: even after completing the first round of training, data scientists and machine learning engineers cannot confidently predict how much additional data is needed to improve a model's accuracy.

Tackling these three main challenges requires large numbers of people and creates long cycles for machine learning projects. It is also difficult to reduce the uncertainty of outcomes in such projects.

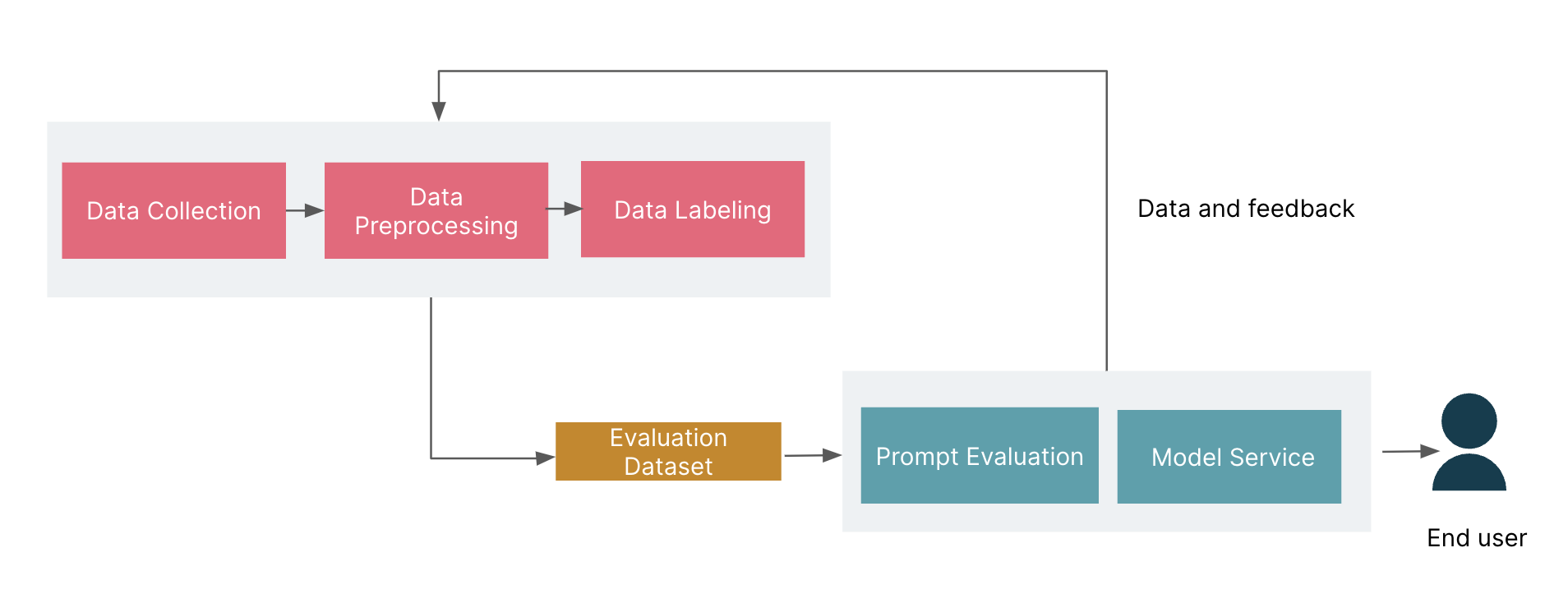

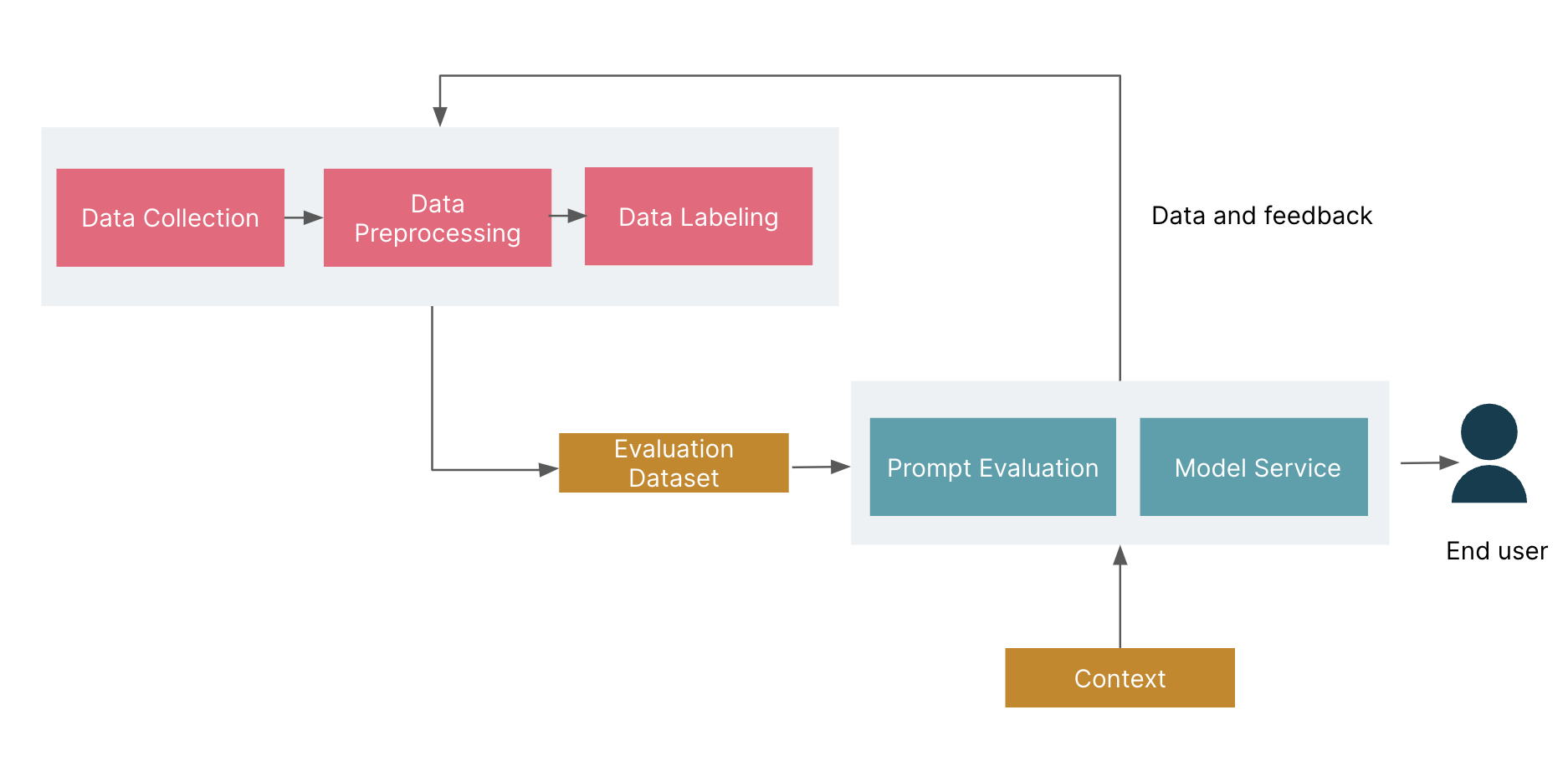

On the other hand, the prompt engineering-centered paradigm provides another possible path for the iteration and development of intelligent products.

Fig. The basic workflow of prompt engineering

The prompt engineering-centered paradigm has three phases:

Collect a small amount of data to construct an evaluation dataset.

Build prompts based on LLMs and evaluate their effectiveness using the evaluation dataset.

Deploy the prompts to production and track user input and feedback.
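Phases 1 and 2 above amount to running a prompt over a small labeled evaluation set and measuring accuracy. This is a minimal sketch, assuming the LLM is any function from prompt text to reply text. `fake_llm` is a hypothetical offline stand-in so the example runs without an API key, and the reviews are made up.

```python
TEMPLATE = ("Classify the sentiment of the review as positive or negative.\n"
            "Review: {input}\nSentiment:")

EVAL_SET = [
    ("The battery life is great", "positive"),
    ("Great screen and fast delivery", "positive"),
    ("It broke after one day", "negative"),
    ("Not great, not terrible", "negative"),
]


def evaluate_prompt(llm, template, eval_set):
    """Fill the template with each input, call the model, and return exact-match accuracy."""
    correct = 0
    for text, expected in eval_set:
        answer = llm(template.format(input=text))
        # Normalize case and surrounding whitespace before comparing to the label.
        correct += answer.strip().lower() == expected
    return correct / len(eval_set)


def fake_llm(prompt):
    """Offline stand-in for a real LLM: answers by keyword, so it gets the last review wrong."""
    review = prompt.split("Review:")[-1].lower()
    return "Positive" if "great" in review else "Negative"


print(evaluate_prompt(fake_llm, TEMPLATE, EVAL_SET))  # 0.75
```

Swapping in a different `TEMPLATE` and rerunning gives a quick way to compare prompt variants before deployment.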

Prompt engineering still requires user feedback and prompt iteration. However, it only needs a small evaluation dataset, which greatly reduces the need for human intervention. In addition, thanks to LLMs' powerful generalization abilities, prompt engineering can deliver good performance from a cold start, which gives engineers more confidence when estimating the expected results. Finally, developing and iterating on intelligent products based on prompt engineering is faster than with machine learning models.

Here are two examples to explain the effectiveness of prompt engineering in replacing machine learning models:

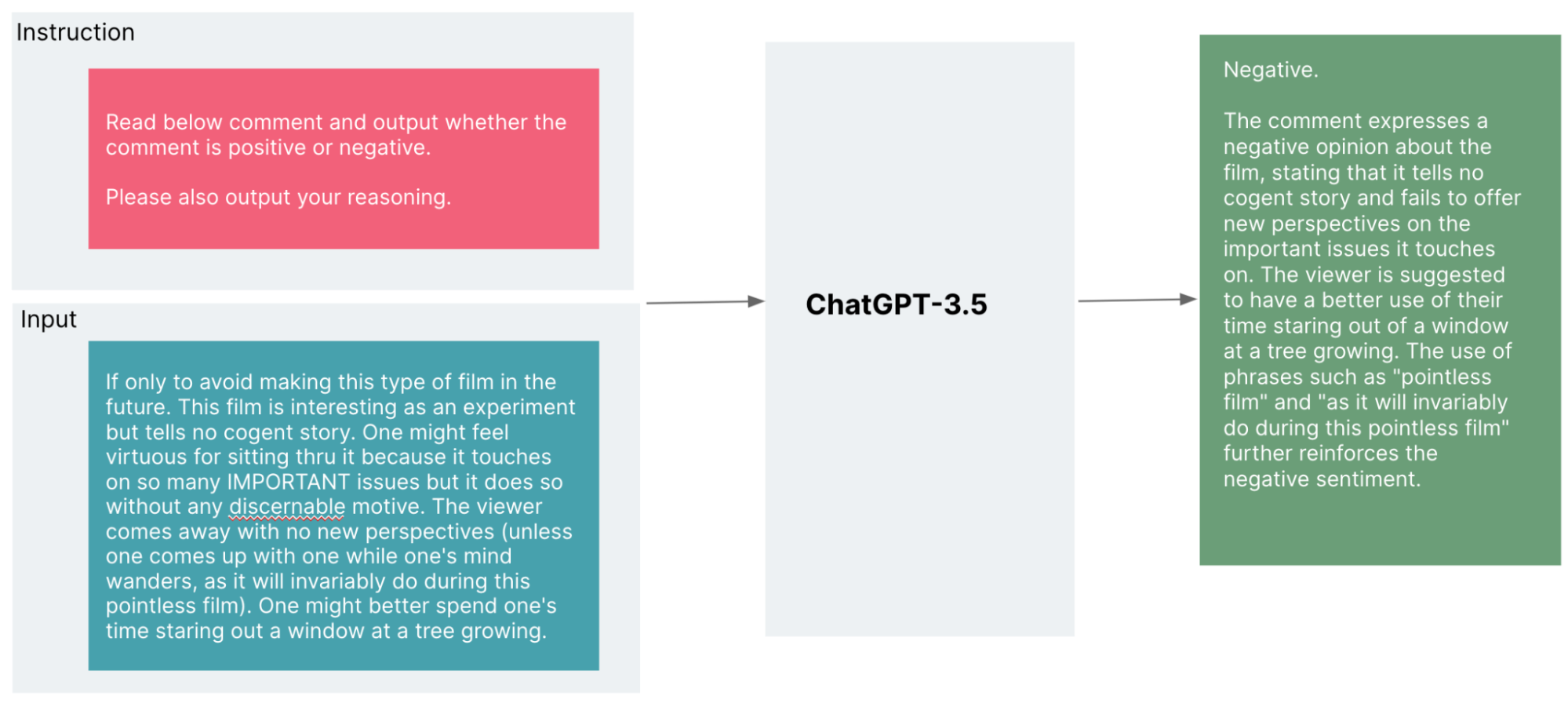

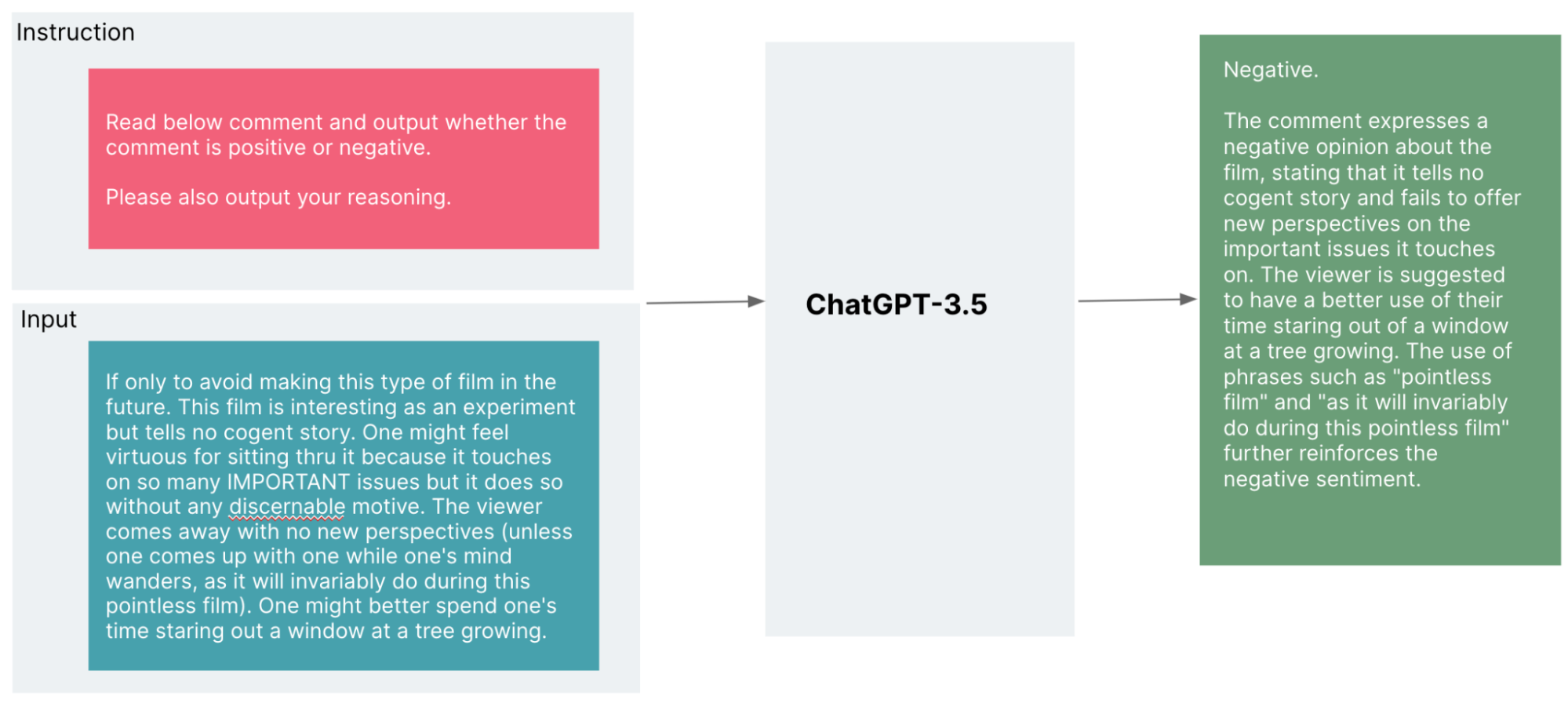

Example 1: Sentiment classification using prompts and LLMs

This task aims to assess the opinion expressed in a user's review and classify it as positive or negative.

With a machine learning model, we need to collect a large training dataset of user reviews and manually label each one, for example as “positive” or “negative”. The labeled dataset can then be used to train a text classification model. However, before data collection and model training, no one can accurately predict how accurate the final model will be.

With prompts and LLMs, by contrast, you only need to prepare a few dozen user reviews and write natural-language instructions to get results quickly. Building an effective text classifier this way costs almost nothing.

Fig. Using the GPT-3.5 API, we can quickly classify user reviews by providing natural-language instructions.
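The call in the figure could look roughly like the following sketch. It assumes the official `openai` Python package (v1 or later) and its Chat Completions endpoint. The instruction wording, the `gpt-3.5-turbo` model name and the helper names are illustrative choices, not taken from the figure. Model names offered by the API also change over time.

```python
LABELS = ("positive", "negative")


def build_messages(review):
    """Chat-format messages asking the model for a one-word sentiment label."""
    instruction = ("Classify the sentiment of the user review as positive or negative. "
                   "Answer with exactly one word: positive or negative.")
    return [
        {"role": "system", "content": instruction},
        {"role": "user", "content": review},
    ]


def parse_label(reply):
    """Map the model's free-text reply onto an expected label, or 'unknown' if it does not match."""
    word = reply.strip().lower().rstrip(".!")
    return word if word in LABELS else "unknown"


def classify(review, model="gpt-3.5-turbo"):
    """Send one review to the Chat Completions API and return the parsed label."""
    # Needs the `openai` package (v1 or later) and an OPENAI_API_KEY environment variable.
    from openai import OpenAI

    client = OpenAI()
    response = client.chat.completions.create(
        model=model,
        messages=build_messages(review),
        temperature=0,  # reduce randomness so repeated runs give consistent labels
    )
    return parse_label(response.choices[0].message.content)
```

The `parse_label` step matters in practice. Models sometimes add punctuation or extra words, and mapping anything unexpected to "unknown" makes those cases easy to count during evaluation.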

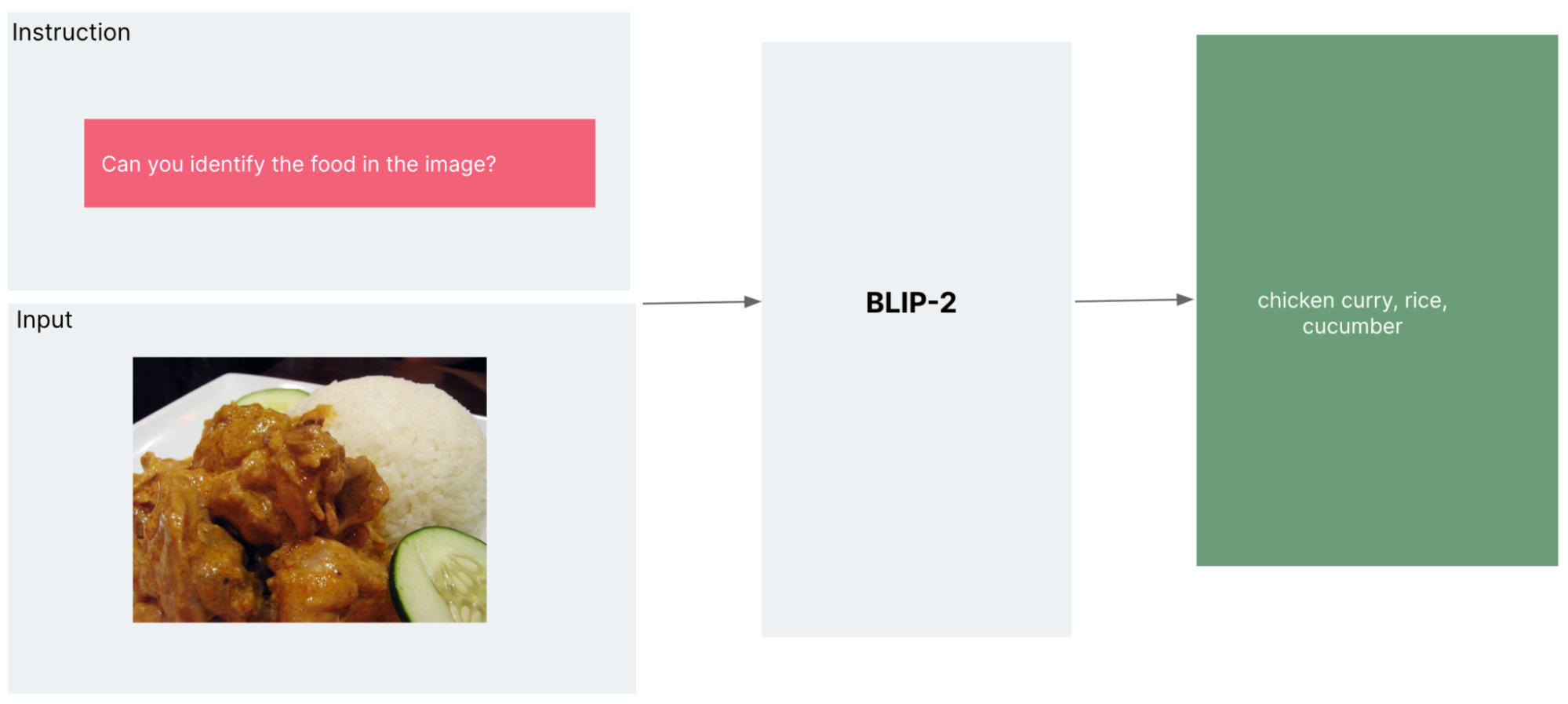

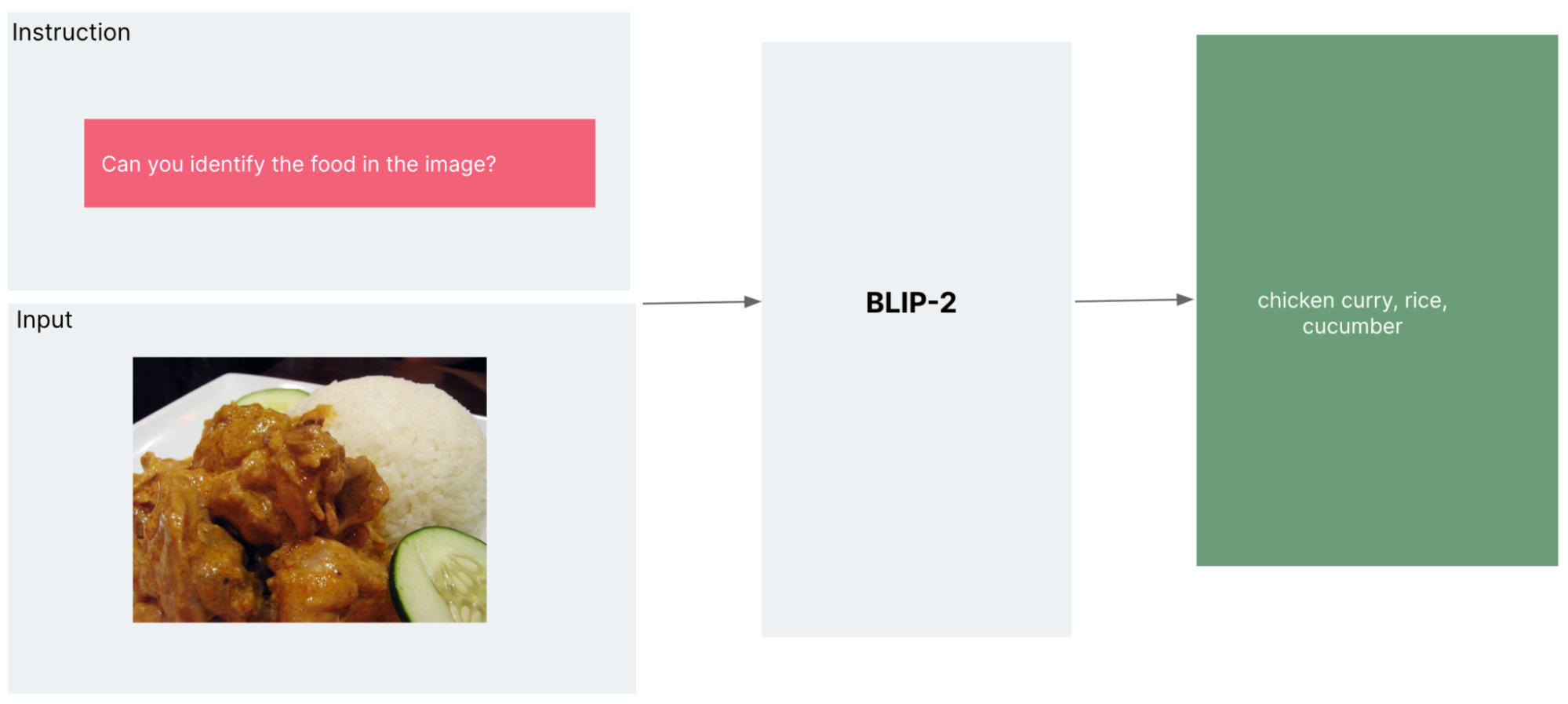

Example 2: Using prompt engineering and a multimodal LLM for image classification

This task aims to classify food photos and determine the type of food represented.

With machine learning-based image classification models, we generally use a pre-trained backbone neural network. However, even with a pre-trained neural network, considering the diversity of food types, we still need to prepare thousands of photos of different types of food and train multiple models using AutoML technology to select the best one.

With the development of multimodal LLMs, we can now also use prompt engineering for image classification tasks. For example, with BLIP-2, inputting a food photo along with the question "Can you identify the food in the image?" is enough to get the correct answer. Here, the question is the prompt to the LLM.

Fig. Using a prompt with the BLIP-2 model to classify food
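The article does not say how BLIP-2 was run. The sketch below assumes the Hugging Face `transformers` implementation and the `Salesforce/blip2-opt-2.7b` checkpoint, with the "Question: ... Answer:" prompt format used in the Hugging Face BLIP-2 examples. The helper names are hypothetical. Depending on the `transformers` version, the decoded text may also echo the prompt.

```python
def build_vqa_prompt(question):
    """Format a question in the 'Question: ... Answer:' style used for OPT-based BLIP-2 checkpoints."""
    return f"Question: {question} Answer:"


def identify_food(image_path, question="Can you identify the food in the image?",
                  model_id="Salesforce/blip2-opt-2.7b"):
    """Ask BLIP-2 a question about one photo and return its decoded answer."""
    # Heavy optional dependencies: transformers, torch, and Pillow. The first call
    # downloads several gigabytes of model weights.
    from PIL import Image
    from transformers import Blip2ForConditionalGeneration, Blip2Processor

    processor = Blip2Processor.from_pretrained(model_id)
    model = Blip2ForConditionalGeneration.from_pretrained(model_id)
    image = Image.open(image_path).convert("RGB")
    inputs = processor(images=image, text=build_vqa_prompt(question), return_tensors="pt")
    generated_ids = model.generate(**inputs, max_new_tokens=20)
    return processor.batch_decode(generated_ids, skip_special_tokens=True)[0].strip()
```

As in the text example, the classification logic lives in the question string. Changing the task means changing the prompt, not retraining a model.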

The above two examples demonstrate that using prompts alone can complete single-sample text classification or image classification tasks. To comprehensively evaluate the effectiveness of prompts, we need to prepare more samples. The good news is that the sample size can be much lower than for training machine learning models. Also, we can adjust the prompt or use multiple prompts to complete a task.
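One possible way to "use multiple prompts to complete a task" is to ask the same question with several prompt wordings and take a majority vote. This is a sketch under that assumption, not necessarily the approach the author had in mind. The templates and `fake_llm` are hypothetical stand-ins so the example runs offline.

```python
from collections import Counter


def ensemble_answer(llm, templates, text):
    """Ask the same question with several prompt templates and return the most common answer."""
    answers = [llm(t.format(input=text)).strip().lower() for t in templates]
    # Counter.most_common orders equal counts by first occurrence, so ties go to the earlier template.
    return Counter(answers).most_common(1)[0][0]


TEMPLATES = [
    "Is the following review positive or negative?\n{input}",
    "Sentiment (positive/negative) of: {input}",
    "Review: {input}\nLabel (positive or negative):",
]


def fake_llm(prompt):
    """Offline stand-in for an LLM whose answer depends on the prompt wording."""
    return "Negative" if prompt.startswith("Sentiment") else "Positive"


print(ensemble_answer(fake_llm, TEMPLATES, "Works as advertised"))  # positive
```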

With the powerful generalization abilities demonstrated by LLMs, the paradigm centered around prompt engineering will dominate the development of intelligent products. Though the paradigm centered around machine learning models will continue to exist, it will be used for specific tasks, like improving LLMs themselves by collecting more data and fine-tuning LLMs.

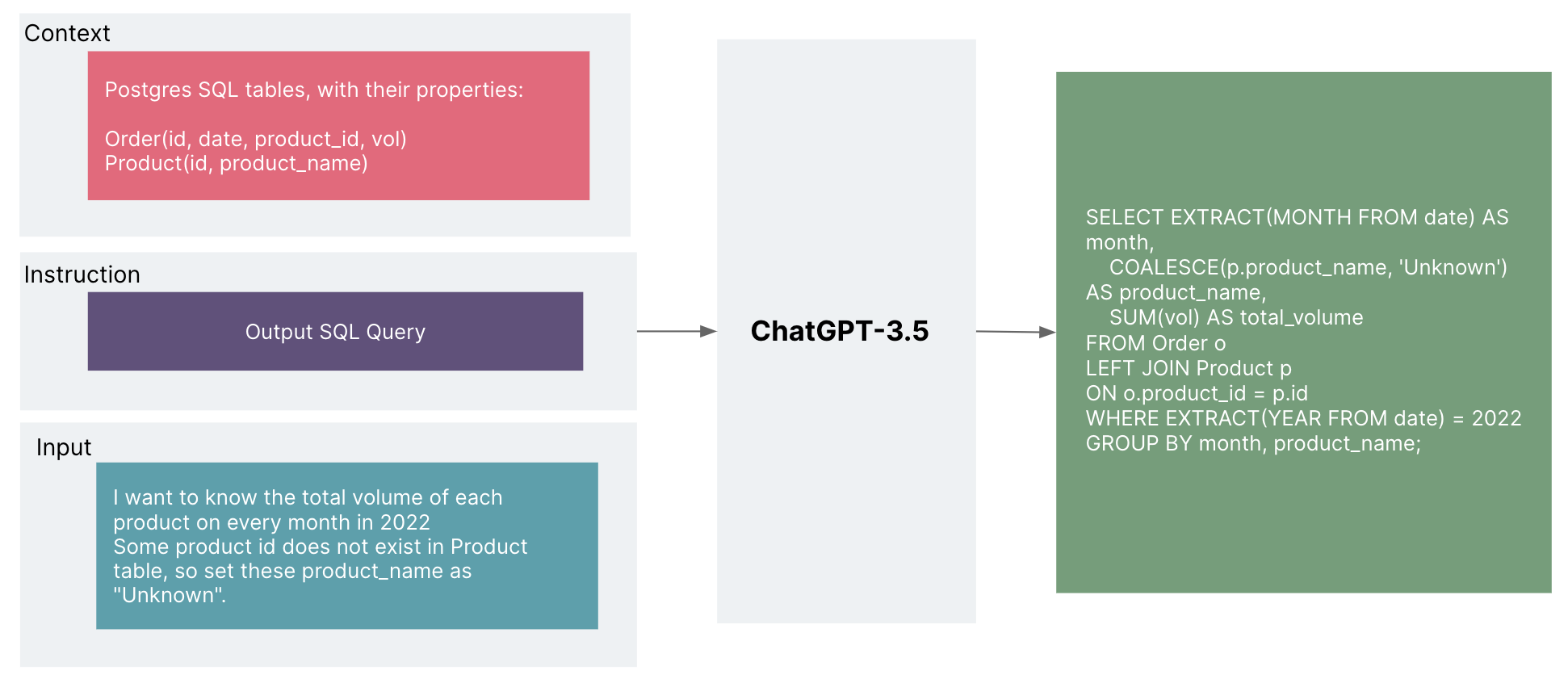

Prompt engineering with context

LLMs' world knowledge derives from the size of their parameters and the scale of the data on which they were trained. This is also why it is costly to train a model on new data so that it acquires new knowledge. When ChatGPT was first launched, its knowledge cutoff was September 2021.

There are two methods for imparting new knowledge to an LLM: one involves fine-tuning the LLM with a dataset containing the updated information, while the other involves incorporating the knowledge as contextual information within prompts.

By integrating task-specific context into prompts, the LLM can not only acquire new knowledge but also effectively apply its capabilities in logical reasoning and instruction following. When context is involved, the workflow of prompt engineering is as follows:

Fig. The workflow of basic prompt engineering with context

For example, when we develop a natural language query and analysis application, the application accepts a user's input, generates a SQL statement based on the attributes of the tables in the database, and then queries the database to return the relevant results.

In this process, including the attributes of tables in the database as part of the prompt can provide the model with knowledge it didn’t previously possess. (This is because the database table schema is not part of its training data.)

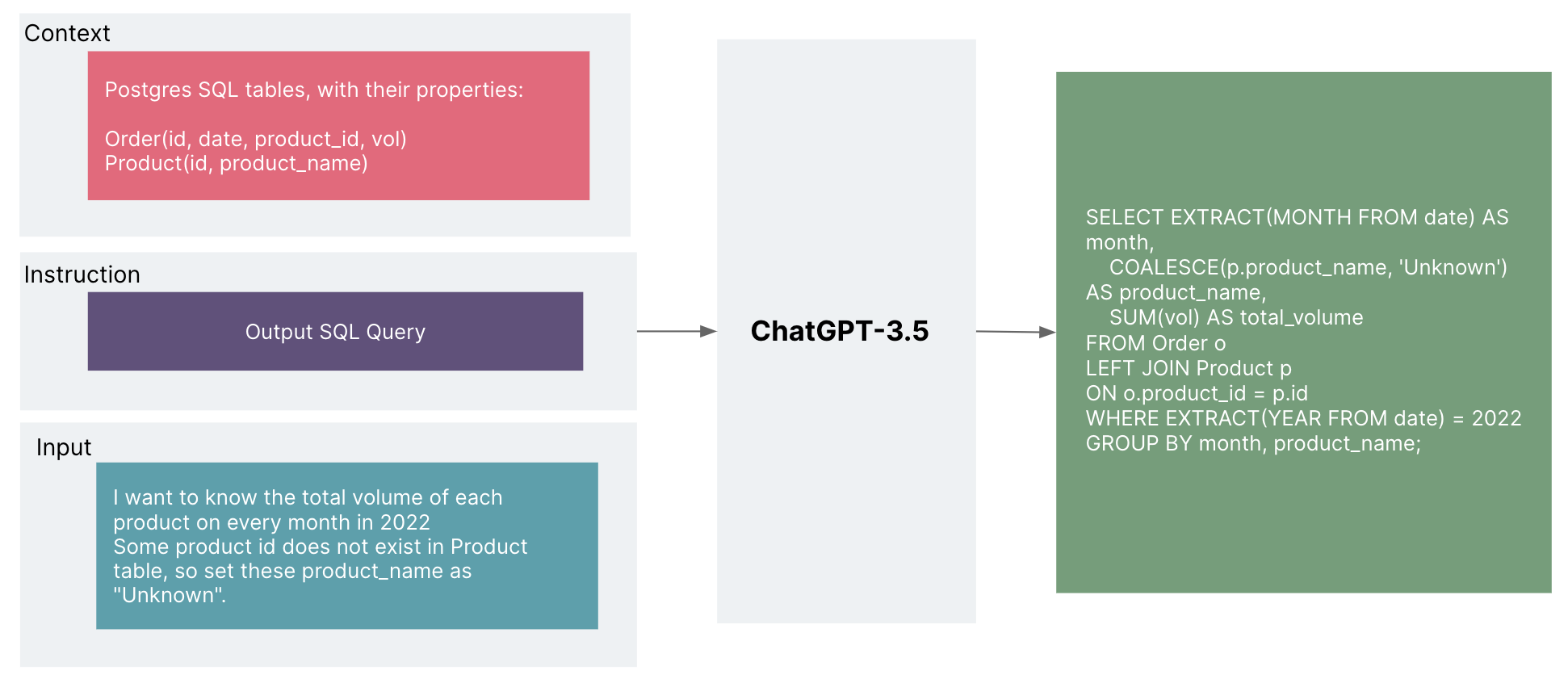

In the following figure, the context provided is a definition of two tables in a database. After assembling the prompt, the LLM generates the correct SQL based on the user's natural language query.

Fig. The prompt with table schemas as context to generate SQL query
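Assembling such a prompt is mostly string formatting. This is a minimal sketch, assuming each table schema is available as a list of column names. The `orders` and `customers` tables, the instruction wording and `build_sql_prompt` are hypothetical, not the tables from the figure.

```python
def build_sql_prompt(schemas, question):
    """Put table definitions in the prompt as context, then ask for a SQL query."""
    schema_text = "\n".join(
        f"Table {table}({', '.join(columns)})" for table, columns in schemas.items()
    )
    return (
        "Given the following database tables:\n"
        f"{schema_text}\n\n"
        "Write a SQL query that answers the question. Return only the SQL.\n"
        f"Question: {question}\n"
        "SQL:"
    )


# Hypothetical schema: the LLM has never seen these tables during training.
SCHEMAS = {
    "orders": ["id", "customer_id", "amount", "created_at"],
    "customers": ["id", "name", "city"],
}
prompt = build_sql_prompt(SCHEMAS, "What is the total order amount per city?")
print(prompt)
```

The SQL the model returns would then be executed against the database to produce the results shown to the user.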

Incorporating context into prompts for an LLM offers advantages such as reducing training costs and enhancing security in certain cases.

For example, when integrating a data platform or warehouse with an LLM to support natural language queries, it is possible to control the LLM's output by including only authorized context in prompts. This approach helps ensure that users can only access content they have permission to view, improving the system's overall security.

On the other hand, if the LLM memorizes the entire database schema through fine-tuning, it could potentially allow users to access content they do not have permission for, as they may be able to elicit unauthorized information from the LLM.
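Including only authorized context can be as simple as filtering the schema list by the user's permissions before the prompt is built. This is a sketch under assumptions. The table names and the `allowed_tables` set stand in for whatever permission system a real platform uses.

```python
def authorized_context(all_schemas, allowed_tables):
    """Build schema context from only the tables this user may query."""
    visible = {t: cols for t, cols in all_schemas.items() if t in allowed_tables}
    return "\n".join(f"Table {t}({', '.join(cols)})" for t, cols in visible.items())


ALL_SCHEMAS = {
    "orders": ["id", "customer_id", "amount"],
    "customers": ["id", "name", "city"],
    "salaries": ["employee_id", "amount"],  # sensitive table (hypothetical)
}
# Hypothetical permission lookup result for an analyst without HR access.
context = authorized_context(ALL_SCHEMAS, allowed_tables={"orders", "customers"})
print(context)
```

Because the model never sees the `salaries` table, it has no knowledge of it to leak. Running the generated SQL under the user's own database permissions is still a prudent second layer, since a user could name a table directly in their question.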

The limitations of prompt engineering

Prompt length is limited for general-purpose LLMs. For example, OpenAI's text-davinci-003 and GPT-3.5-turbo models have a limit of about 4,096 tokens, shared between the prompt and the generated completion. If a smaller LLM is used, the token limit may be as low as 512.

When injecting context into the prompt, it's necessary to define rules for selecting context so that the limit isn't exceeded. OpenAI has also announced a GPT-4 variant with a 32k-token limit, which significantly raises the upper bound on prompt context.
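One simple selection rule reserves room for the instructions and the answer, then greedily adds context chunks in relevance order while they fit. This is a minimal sketch. It assumes the chunks are already sorted by relevance, and it uses a rough "about 4 tokens per 3 English words" estimate instead of a real tokenizer.

```python
import math


def approx_tokens(text):
    """Rough token estimate for English text: about 4 tokens per 3 words (a rule of thumb)."""
    return math.ceil(len(text.split()) * 4 / 3)


def context_budget(limit, prompt_overhead, reserved_for_answer):
    """Tokens left for context after the instructions and the expected answer are accounted for."""
    return limit - prompt_overhead - reserved_for_answer


def pack_context(chunks, budget, count=approx_tokens):
    """Greedily keep chunks (assumed sorted by relevance) whose total estimated size fits the budget."""
    selected, used = [], 0
    for chunk in chunks:
        cost = count(chunk)
        if used + cost > budget:
            continue  # skip this chunk; a shorter one later in the list may still fit
        selected.append(chunk)
        used += cost
    return selected


budget = context_budget(limit=4096, prompt_overhead=200, reserved_for_answer=500)
print(budget)  # 3396
print(pack_context(["a b c", "d e f g h i", "j k"], budget=7))  # ['a b c', 'j k']
```

For exact counts with OpenAI models, a tokenizer such as `tiktoken` can be plugged in. Create `enc = tiktoken.encoding_for_model("gpt-3.5-turbo")` and pass `count=lambda t: len(enc.encode(t))`.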

Conclusion

Due to the significant improvement in the depth and breadth of LLM capabilities, the prompt engineering workflow — which is centered on prompt-based development — may require less data and achieve higher accuracy when solving natural language processing (NLP) and computer vision (CV) problems than a typical machine learning model-based workflow. This would reduce the resources required to develop intelligent products and could accelerate the process of iteration.

By organizing appropriate context in the prompt, we can flexibly use the capabilities of LLMs and build more secure intelligent products.

Finally, collecting production data and feedback is still critical in the prompt engineering workflow: it is an essential way, in fact the only way, to evaluate a prompt's effectiveness. When context cannot meet product requirements, the machine learning model-based workflow can still be used, leveraging new datasets to fine-tune the LLM so that it acquires specific knowledge.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.