Technology strategy

Intelligent Empowerment: The Next Wave of Technology-led Disruption

Applying Continuous Delivery to data science accelerates its impact to your business.

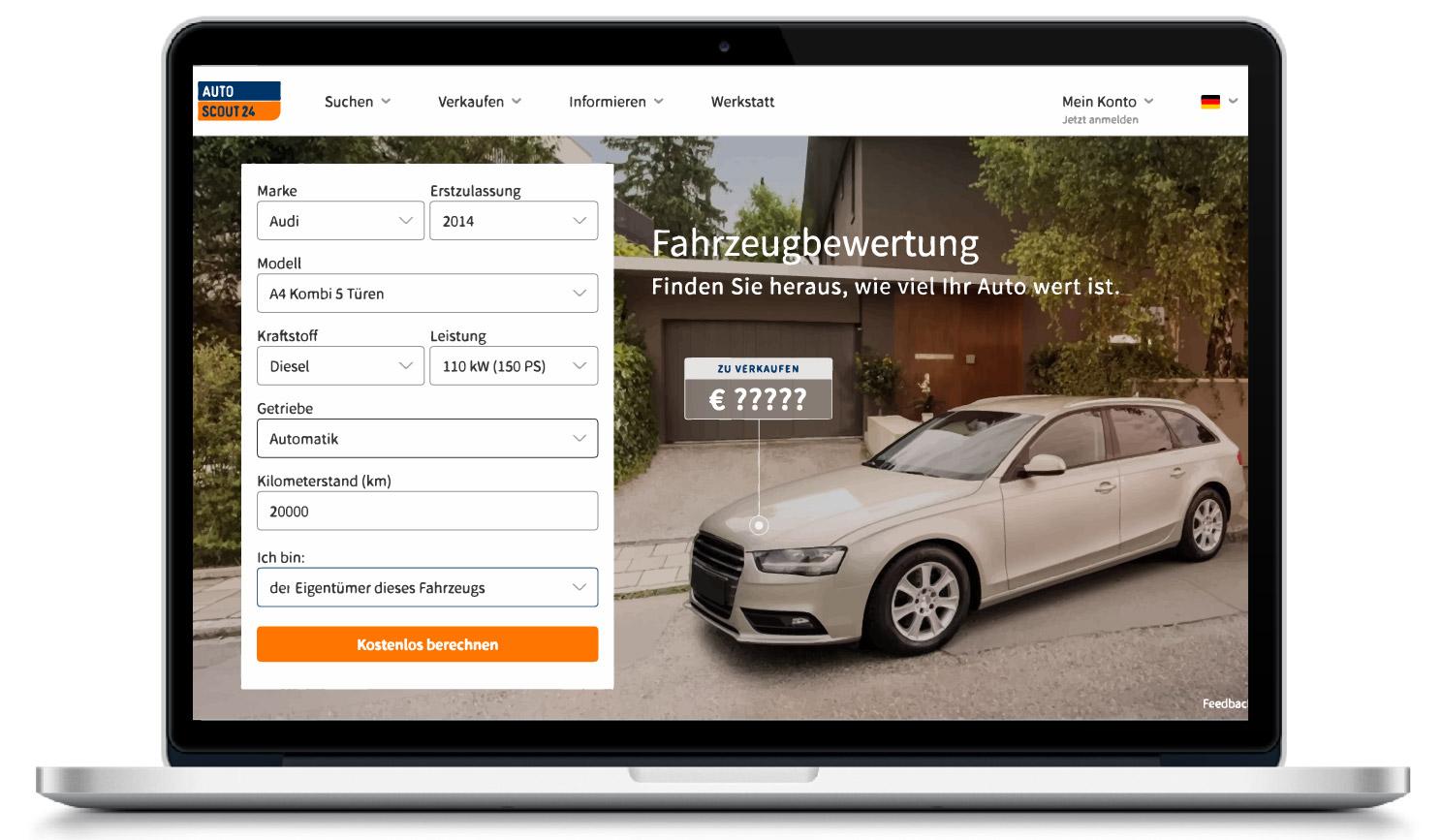

In addition to ongoing improvements, the prediction model needs to be retrained every month at minimum, to accurately reflect the market. To encourage experimentation, and to improve the model further, we found that it helped to automatically validate prediction accuracy prior to deployment.Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.