Continuous delivery is more than just a technical challenge

“Continuous delivery is the ability to get changes of all types — including new features, configuration changes, bug fixes, and experiments — into production, or into the hands of users, safely and quickly in a sustainable way.” Jez Humble

For the past 10 years, I’ve been fortunate enough to work almost exclusively in teams that have aimed for, and been capable of, delivering changes to production software frequently. For me, “frequently” has meant “multiple times per day”. In this article, I will discuss some of what I learned from these experiences.

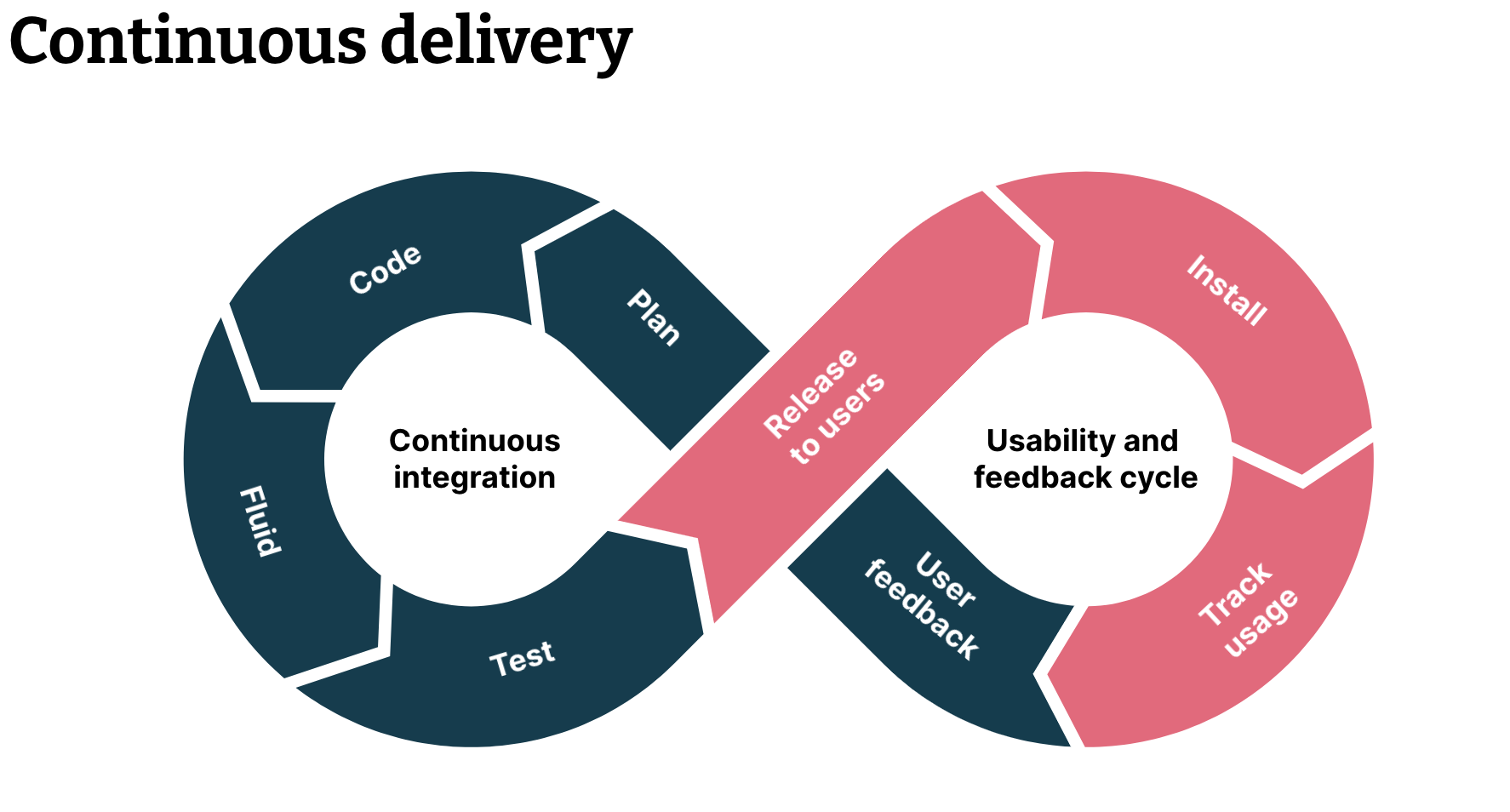

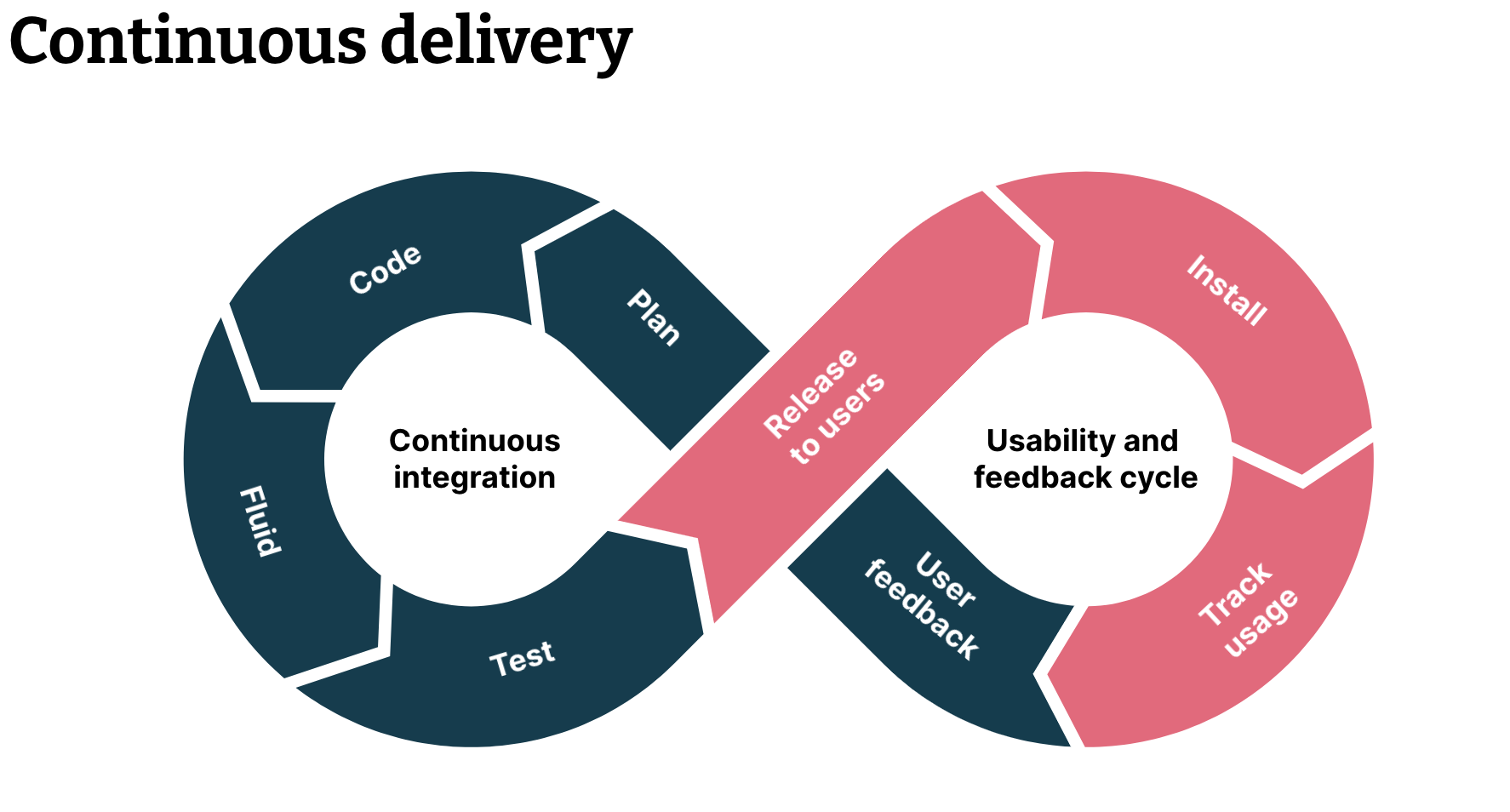

Jez Humble’s definition of continuous delivery, despite being concise, includes many nuances that are easily missed. Too often we see continuous delivery as a purely technical challenge. Of course, doing it well includes plenty of well-executed technical efforts. But for me, the technical aspects are just a tiny part of what well-executed continuous delivery is about.

The full benefits that continuous delivery promises are not realized unless the teams, the business processes, and the organization around it are aligned and capable of taking advantage of the technical capabilities, and often this isn’t the case. This article describes the three components that I view as necessary to reach those benefits in full. Getting there often requires fundamental changes to:

How ideas for functionality are shaped into an incremental but coherent whole

How systems are built and operated

How teams and organizational processes work, and

How business leaders collaborate with the developers

Why aim for continuous delivery?

So if it’s not only technically challenging but also requires a ton of other changes and work, why even aim for continuous delivery?

“Almost any question can be answered cheaply, quickly, and finally, by a test campaign. And that’s the way to answer them – not by arguments around a table.”

That quote sounds like a great argument for continuous delivery. It turns out to be from Claude Hopkins’s “Scientific Advertising,” published in 1923. Testing ideas quickly with real customers isn’t new, but the internet has drastically changed how easy it can be.

An idea is just the first step. It’s vital to remember that it’s the testing that demonstrates whether it will deliver value to your enterprise. So all those lovely workshops and ideation sessions are not what makes your company innovative. Instead, it’s your company’s capability of turning those ideas into something concrete that lands in the hands of your customers. And as we know, most ideas end up not being that great, so experimentation is a crucial part of innovation. And for that, in the world of software, you need continuous delivery.

But it’s not all about business, either. I’ve seen teams spend half of every second sprint getting their software into production. I’ve seen teams stay at work through the night for production deployments because those deployments required downtime. Running your teams like this isn’t just expensive; the mental toll on the developers is considerable. There has been research into how much a hassle-free, safe deployment capability affects the mental health of the developers involved.

How do we get there?

Well-executed continuous delivery enables innovation, experimentation, higher efficiency, and happier customers and developers. For our journey there, we will need:

Improved technical capabilities and higher automation everywhere

Leaner processes with fewer queues and handovers

Business agility, with real learning

Because it’s easy to mistake change for improvement, it’s important to have a way to measure your progress. The book “Accelerate: The Science of Lean Software and DevOps” introduces four key metrics for measuring your success in DevOps:

Delivery lead time — the time it takes to go from a customer making a request to the request being satisfied

Deployment frequency — how often you’re deploying value-add units of work

Mean time to restore service — how long it generally takes to restore a service after unplanned downtime

Change fail rate — the percentage of changes to production that fail
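To make the four metrics concrete, here is a minimal Python sketch that computes rough versions of them from a list of deployment records. The `Deployment` record and its fields are hypothetical. Real data would come from your ticketing, CI/CD and incident tooling, and details such as median versus mean lead time are choices you should make deliberately.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Dict, List, Optional


@dataclass
class Deployment:
    # Hypothetical record shape; adapt it to what your tooling actually stores.
    requested_at: datetime                  # when the request or idea was recorded
    deployed_at: datetime                   # when the change reached production
    failed: bool = False                    # did this change cause a production failure?
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def hours(delta: timedelta) -> float:
    return delta / timedelta(hours=1)


def key_metrics(deployments: List[Deployment], period_days: float) -> Dict[str, float]:
    """Rough versions of the four key metrics over one reporting period."""
    if not deployments or period_days <= 0:
        raise ValueError("need at least one deployment and a positive period")

    failures = [d for d in deployments if d.failed]
    # Restore time is counted from the failing deployment, so time spent
    # noticing the outage is included, not just time spent fixing it.
    restore_times = [
        hours(d.restored_at - d.deployed_at)
        for d in failures
        if d.restored_at is not None
    ]
    return {
        "delivery_lead_time_h": median(hours(d.deployed_at - d.requested_at) for d in deployments),
        "deployments_per_day": len(deployments) / period_days,
        "time_to_restore_h": sum(restore_times) / len(restore_times) if restore_times else 0.0,
        "change_fail_rate": len(failures) / len(deployments),
    }
```

Even a rough calculation like this is useful mainly as a trend over time, not as an absolute score.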

Let’s keep these in mind as we move forward; I will refer to each of them in the sections that follow.

Technical capabilities

Although I emphasized that technical capabilities are just a small part of continuous delivery, they are nevertheless the foundation on which all the other aspects build. You will need “development environment as code”, testability, deployability, and improved live operations. Each of these topics would be worth a book or two of its own, so I will only outline a few of the crucial bits here. When teams say they’re unable to do continuous delivery, these are the bits they most often struggle with.

Development environment as code

Many teams, when asked if they have everything in version control, will answer yes. But when you start setting up your development environment, it turns out that even though the source code is version-controlled, you then need to copy and paste configuration from a wiki page, ask a teammate for the API keys, and so on. If you aim for high automation, this is not acceptable. Everything needs to be version-controlled and trivial to set up. Learn to be highly allergic to manual steps, as they gradually make everything hard to automate. All passwords, test data and configuration information need to be available programmatically.
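As an illustration of configuration that is available programmatically, here is a minimal Python sketch. It loads settings from the environment and fails fast, naming exactly what is missing, so nobody has to hunt through a wiki. The setting names are hypothetical. In practice, the list of required names would itself live in version control, and the actual secret values would come from a secrets manager or a generated local file.

```python
import os
from dataclasses import dataclass
from typing import FrozenSet, Mapping

# Hypothetical names. Keeping this list in version control means adding a
# setting is a reviewed change rather than a wiki edit.
REQUIRED_SETTINGS = ("DATABASE_URL", "PAYMENT_API_KEY")


@dataclass(frozen=True)
class Settings:
    database_url: str
    payment_api_key: str
    feature_flags: FrozenSet[str]


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Build settings from the environment, failing fast if anything is missing."""
    missing = [name for name in REQUIRED_SETTINGS if not env.get(name)]
    if missing:
        raise RuntimeError(f"missing required settings: {', '.join(missing)}")
    # Optional comma-separated list, e.g. FEATURE_FLAGS="new-checkout,beta-search".
    flags = frozenset(f.strip() for f in env.get("FEATURE_FLAGS", "").split(",") if f.strip())
    return Settings(env["DATABASE_URL"], env["PAYMENT_API_KEY"], flags)
```

Passing the environment in as a mapping also makes the loader trivial to test without touching the real process environment.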

Testability

The obvious thing is, of course, that you need automated tests with high coverage. If you aim to go to production multiple times per day, requiring anyone to do manual testing is impractical, so you need high confidence in your tests. Tests need to be repeatable and consistent: too many teams get accustomed to “a couple of integration tests always being red”. High confidence also means that you should catch most bugs in your development environment, which in turn means that developers need to own the tests.

Integration and regression tests should be runnable without an arduous environment setup. I’ve done a good number of external reviews of projects, and it’s almost always the case that I can’t just pull the code and run the tests: “Ah, yes, you need to be on our corporate network.” “Oh, it only works with our test servers.” Each of these will make your continuous delivery journey more challenging, so be mindful of your dependencies from the start.
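One common way to keep tests runnable anywhere is to hide an external dependency behind a small interface and use an in-memory fake in tests. The following Python sketch assumes a hypothetical exchange-rate service that, in production, is only reachable from the corporate network. `ExchangeRates`, `price_in` and `FakeRates` are illustrative names, not taken from any real codebase.

```python
import unittest
from typing import Dict, Protocol


class ExchangeRates(Protocol):
    # What the code under test needs. In production this might be backed by a
    # service that is only reachable from inside the corporate network.
    def rate(self, currency: str) -> float: ...


def price_in(currency: str, amount_eur: float, rates: ExchangeRates) -> float:
    """Convert a euro amount, rounded to cents."""
    return round(amount_eur * rates.rate(currency), 2)


class FakeRates:
    """In-memory stand-in: deterministic, no network, no shared test server."""

    def __init__(self, table: Dict[str, float]) -> None:
        self._table = table

    def rate(self, currency: str) -> float:
        return self._table[currency]


class PriceTest(unittest.TestCase):
    def test_converts_with_given_rate(self) -> None:
        self.assertEqual(price_in("USD", 10.0, FakeRates({"USD": 1.1})), 11.0)
```

Because the fake is deterministic, `python -m unittest` gives the same result on any laptop or CI agent.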

Your level of automated testing plays a big role in improving the fourth key metric, change fail rate. With a high change fail rate, everyone will be discouraged from taking things to production, which undermines the whole process of continuous delivery.

Deployability

In general, you should aim to build modular systems. That is easy to say and much harder to accomplish. Investing time in domain-driven design and in understanding the business domain you work in will help you find ways to split your system that make sense at the business level, with clear interfaces between components.

The smaller the parts you can deploy in isolation, the easier it is to deploy frequently. Here, preferring asynchronous communication (messaging) between your components (services) helps a lot. Messaging-based components usually don’t need to know whether the rest of the components have been deployed yet; they simply send messages and consume them as they arrive.

In general, you should be very cautious about deployment orchestration. If your components must be deployed in a specific order for them to work, they require orchestration. If you can build a component so that it doesn’t depend on other services being available the moment it starts up, you should do that. Otherwise, you’ll soon end up with a complex dance of deployments that forces you to deploy all your components in a specific order, however small your change was.
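Here is a minimal Python sketch of a component that does not require its dependencies at startup. It connects lazily on first use and retries with exponential backoff, so it can be deployed before or after the service it talks to. `LazyDependency` is a hypothetical helper. Many real message-broker and database clients offer their own reconnect options, and you would normally prefer those.

```python
import time
from typing import Callable, Generic, Optional, TypeVar

T = TypeVar("T")


class LazyDependency(Generic[T]):
    """Connect to a dependency on first use instead of at startup."""

    def __init__(self, connect: Callable[[], T], retries: int = 5, base_delay: float = 0.1) -> None:
        if retries < 1:
            raise ValueError("retries must be at least 1")
        self._connect = connect  # not called here, so startup never blocks on it
        self._retries = retries
        self._base_delay = base_delay
        self._client: Optional[T] = None

    @property
    def available(self) -> bool:
        return self._client is not None

    def get(self) -> T:
        if self._client is None:
            for attempt in range(self._retries):
                try:
                    self._client = self._connect()
                    break
                except ConnectionError:
                    if attempt == self._retries - 1:
                        raise  # give up; the caller decides how to degrade
                    # Exponential backoff: base, 2x base, 4x base, ...
                    time.sleep(self._base_delay * 2 ** attempt)
        assert self._client is not None
        return self._client
```

A health endpoint could report `available`, so operators can see that the component is running but degraded instead of watching it crash at startup.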

I’m also a big fan of services owning their data sources. I would much rather have each service cache or duplicate some data than have every service rely on the constant availability of other services to perform even the smallest tasks.
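To illustrate a service owning its data, here is a minimal Python sketch of an order service that keeps its own copy of the customer data it needs. The copy is updated from events published by a customer service. The `CustomerChanged` event and its per-customer `version` field are assumptions. The version check makes duplicate or out-of-order deliveries harmless.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class CustomerChanged:
    # Hypothetical event published by a customer service.
    customer_id: str
    name: str
    version: int  # increases with every change to this customer


class CustomerReadModel:
    """An order service's own copy of the customer data it needs."""

    def __init__(self) -> None:
        self._customers: Dict[str, CustomerChanged] = {}

    def apply(self, event: CustomerChanged) -> None:
        current = self._customers.get(event.customer_id)
        # Keep only the newest version; stale or duplicate events are ignored.
        if current is None or event.version > current.version:
            self._customers[event.customer_id] = event

    def name_of(self, customer_id: str) -> Optional[str]:
        # Answered locally, even if the customer service is down right now.
        customer = self._customers.get(customer_id)
        return customer.name if customer else None
```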

Deployability has some effect on at least the first three of the four key performance indicators mentioned earlier.

Live operations

When doing frequent deployments, the first thing your team needs is high situational awareness. They need to know what exactly is deployed and where. They also need to see the status or health of each of these services.

Infrastructure as code is, if not mandatory, at least highly convenient. Reproducible environments mean you know precisely what your services are running on. You also know whether staging and production are truly identical, and if they differ, exactly how.

Regardless of how good your automated testing is, your production will break from time to time. That’s completely fine if you can quickly roll back to a known working state. Good automation and a deployment artifact repository (for example, a container registry if you run containers in production) usually help a lot. After rolling back, you also need a quick way to find out what went wrong. For this, you absolutely must have aggregated logging and tracing available. Make sure each request carries a unique correlation ID that you can use to find all the logs from every service involved in serving that request.
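Here is a minimal sketch of correlation IDs using only Python’s standard `logging` and `contextvars` modules. The ID for the current request is stored in a context variable, and a logging filter stamps it on every record. Propagating the ID between services is assumed and not shown. You might do that with a custom request header such as `X-Correlation-ID`, or with the W3C Trace Context `traceparent` header if you use distributed tracing.

```python
import contextvars
import logging
import uuid
from typing import Optional

# Holds the correlation ID of the request currently being handled.
correlation_id = contextvars.ContextVar("correlation_id", default="-")


class CorrelationIdFilter(logging.Filter):
    """Attach the current correlation ID to every log record."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.correlation_id = correlation_id.get()
        return True


def start_request(incoming_id: Optional[str] = None) -> str:
    """Reuse the caller's ID if one arrived with the request, else create one."""
    cid = incoming_id or str(uuid.uuid4())
    correlation_id.set(cid)
    return cid


logger = logging.getLogger("orders")  # hypothetical service name
handler = logging.StreamHandler()
handler.addFilter(CorrelationIdFilter())
handler.setFormatter(logging.Formatter("%(asctime)s %(correlation_id)s %(name)s %(message)s"))
logger.addHandler(handler)
logger.setLevel(logging.INFO)
```

In a request handler, you would call `start_request(...)` with the ID from the incoming request and then log as usual, for example `logger.info("order received")`. Every line for that request then carries the same ID, which you can search for in your aggregated logs.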

The above points obviously have a big impact on the “mean time to restore a service” key performance metric. Remember that this metric measures the whole duration of the service outage. So it’s not just how fast you can fix a broken system, but also how long it takes for your team to notice an outage.

Leaner processes

Better user stories

It’s surprisingly easy to push things to production daily and still provide no value for the customers. This is often related to how the team creates user stories and tasks. To give an oversimplified example:

“We need this new UI thing.”

“Let’s split it into tasks. One task for the frontend changes, one for the backend changes, and one for the needed changes to reporting.”

So when is the first time the team delivers value? Even though they can do three deployments, the first time it’s valuable to the end-user is after the team has completed all three of the tasks.

Moving from formal requirements to post-its has been great, but I think it’s now too easy to assume that writing stories or tasks is trivial. It isn’t. Teams should carefully consider how to split a story so that each step already either provides value or proves an assumption. For example, could we build a read-only version of the requested UI first? Would that already provide value, reduce risk, or show us whether customers are interested in this at all?

I also think that many harmful practices like micromanagement, ultra-detailed requirements gathering, estimation and analysis paralysis are all caused by a lack of trust in a team’s delivery capability. The best way to get rid of these practices is to consistently provide value: small stories that bring value combined with continuous delivery.

As the “deployment frequency” key performance metric is a proxy metric for batch size, the above emphasis on reducing the batch size the team works with directly helps in improving the metric.

I have previously written about good user stories for continuous delivery; you can check it out here.

Value-streams, backlogs and empathy

Your organization has a core value-stream. Being lean means doing everything you can to enable this stream to flow efficiently. Unfortunately, we often equate lean with waste elimination. But if it turns out that the bottleneck of your value-stream is a shortage of people in a specific role, hiring more people can be the leanest thing to do.

A great way to lose your best developers is to try to squeeze more out of your development team when it clearly isn’t the bottleneck. Instead, start by gathering people from all the necessary functions and drawing a value-stream together. Rather than trying to map your whole organization, pick a recently finished feature and draw its value-stream from the original idea through to production. See where you spend the most calendar time and where you spend the most value-adding time. The insights are often surprising. For example, changing the frequency of a meeting or improving the availability of a certain person could do more for the value-stream than any technical automation.

You should be extra mindful of any backlogs, roadmap documents, and so on, where things stop and wait. To quote Donald Reinertsen from his excellent book The Principles of Product Development Flow:

“In product development, our greatest waste is not unproductive engineers, but work products sitting idle in process queues.”

Mary Poppendieck went as far as to say she considers backlogs the most harmful thing to come out of Scrum. Each backlog is a delay on your way from idea to production. Treat them as buffers: keep them as short as possible while still giving your team enough to do.

Remember also that each team has multiple clients: the end-user, the operations team (which, in a true DevOps mindset, could be you) and the team downstream from you. You should have empathy for those internal clients. If something makes you work faster but makes the next team twice as slow, it’s not something to be proud of. “Well, it’s an ops problem now” thinking is absolutely not OK.

All of this is clearly measured by the “delivery lead time” key metric. Just make sure you capture data from the very start of your process: you need a record of when an idea or request was created, and a way to associate it with the specific feature that delivered it.

Business agility

Gemba

In the end, having a highly automated way of quickly producing value, innovating and experimenting is of limited importance if the rest of your company still operates according to a “four-year plan”, yearly strategies, or requirements planned at the beginning of a project two years ago.

There’s a saying in Lean: “Going to the Gemba”. It means going where the action is: factory managers should walk the factory floor. In a traditional factory, it was more obvious when work started piling up in front of the wrong machine, but in software it’s still well worth executives’ time to visit, meet developers and testers, and listen.

“Executives have a rosier view of their DevOps progress than the teams they manage.

For nearly every DevOps practice, C-suite respondents were more likely to report that these practices were in frequent use.” - State of DevOps Report 2018

Experimentation-driven... almost

It can feel like a superpower when you find yourself with the technical capability to quickly test a new idea in production and measure its performance against the current implementation. At least that’s how I felt. In some organizations, we ended up using it for absolutely everything. Much more commonly, though, you find that everyone loves the idea of experimentation-driven development until you question the need for their favorite feature: “Well, we know we need that!” Don’t try to force this. Just gradually introduce more experiments and win acceptance for the approach through small wins.

Perishable opportunities

Some business opportunities have a small time window. One feature is only useful if you can create it before the Christmas holidays. Another is only really valuable if you introduce it before any of your competitors do. Is your organization capable of quick decision-making? Could you delegate decision-making power closer to the teams, so that the team running the experiments can also act on the results as often as possible?

The same applies to learning from experiments. Learnings are only useful when applied. How do we use what we learn? How fast can we change after learning? Who learns? Just the team? Other teams? Leadership?

To get the most out of continuous delivery and to turn it into continuous learning, your company needs to address its business agility capabilities. Should it organize or connect differently to enable faster learning and decision-making?

Summary

To be really competitive, a company must be able to innovate. Here, innovation means the ability to turn ideas into products or services. Doing this efficiently is what I believe continuous delivery really aims to facilitate. For this larger feedback cycle (from ideas to products and back to learning and new ideas) to work, a lot more than technology is required. If we work on outsized features, we can’t deliver them continuously. If the processes are slow and heavy, it doesn’t matter how quickly a piece of code moves. If the delivered pieces are too often faulty, the loop grinds to a halt. If we just deliver but do not measure or learn from this rapid delivery, then very soon we are just executing an outdated plan. So go out there and reach beyond the technology: challenge the ways you work, plan and collaborate to really reap the rewards of continuous delivery done right.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.