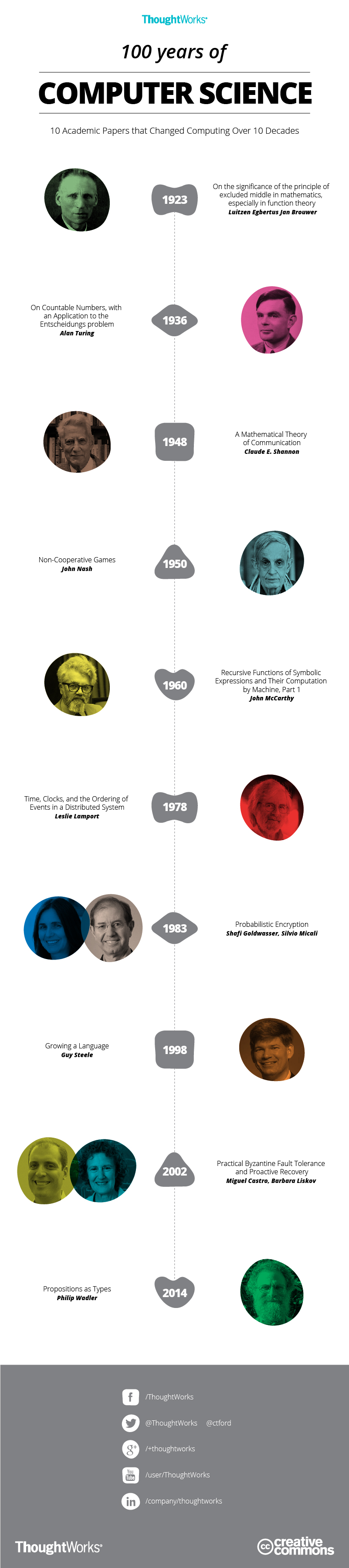

100 Years of Computer Science

This article was originally published in 2014.

Anniversaries are peculiar things. On the one hand, they are artificial, post hoc pinpricks on time's continuum. On the other, they are a place to stand, to reflect and to make sense of what came before. As it is Thoughtworks 25th anniversary, my colleagues suggested that we revisit an article I wrote that makes its own attempt to slice and dice time for easy consumption.

Nothing important ever happened in one day, and even if it did it would be inextricably tangled up in everything else that happened before and afterward. No paper exists in a vacuum, and every contribution contains the echoes of the intellectual conversations in which they arose. But while none of these papers are beginnings in themselves, they were beginnings for me, and perhaps for you too.

So here it is, a century for a quarter century. To paraphrase British statistician George E. P. Box, all anniversaries are wrong; some anniversaries are useful.

Computer science and mathematics papers are challenging but rewarding. I might not always grok the notation or have the background to fully appreciate the conclusions, but they are a good way for me to expand my horizons.

I came to appreciate academic papers relatively late. While a student, I can only specifically remember reading two papers, one of which (by Claude Shannon) appears below. As a professional programmer without a strong theoretical background, papers seemed difficult and unapproachable, and it took me a long time to realise what I'd been missing.

Some colleagues, responding to my new-found enthusiasm, asked me for recommendations on where to start. Inspired by similar lists by Michaels Feathers and Fogus, I've compiled a timeline of papers that (for me) represent something of the spirit of the development of computer science over the past 100 years. In selecting papers, I used the following criteria:

- The paper must have changed the world.

- The paper must have changed my perspective.

- Only one paper is allowed from each decade.

Any list of this sort is inevitably incredibly selective and subjective. If you think I've left something out, perhaps you'd consider publishing your own list of great papers. ;-)

On the significance of the principle of excluded middle in mathematics, especially in function theory (1923)

Intuitionism predates computation theory, but it was vital in its foundation. Luitzen Egbertus Jan Brouwer's original formulation was a critique on the nature of proof, but later work by Arend Heyting incorporated Intuitionism into mathematical logic.

I chose Brouwer's paper because its rejection of the law of the excluded middle emphasises the construction of values. Brouwer does not consider it valid to conclude that if a proposition is not false, that it must be true. Rather, one must construct the truth of a proposition directly.

As a programmer, the distinction between computing a value and merely implying its existence is very pertinent. What's more, the constructivist mathematics that descended from Brouwer's ideas proved vital in the subsequent development of type theory.

On Computable Numbers, with an Application to the Entscheidungsproblem(1936)

Alan Turing is well-known outside of the programming community for his code-breaking efforts during World War Two and his tragic persecution and suicide. This paper is from before the war when he was a young Ph.D. student.

Turing describes a phenomenon I find hard to reconcile with my intuition - uncomputable numbers. I find the idea that there are hard limits to computation itself baffling and intriguing.

Turing was narrowly beaten to publication by Alonzo Church's alternative solution to the famous Entscheidungsproblem, though both Turing machines and Church's lambda calculus have proven to be enduring ways of modeling computation.

A Mathematical Theory of Communication (1948)

I remember being literally shocked when I first read this paper as a university student. Information, which had previously been to me as intangible as humour or beauty, suddenly became precise and quantifiable.

Claude E. Shannon lays the foundations of information theory, which makes modern information and communications technology possible.

Non-Cooperative Games (1950)

This paper isn't about computation, in fact, it isn't strictly a paper at all. John Nash's Ph.D. thesis is 26 pages and has only two references, one of which is to an earlier article by himself. In his thesis, Nash describes a mathematical theory of non-cooperative games, which had a great impact on economics, Cold War strategy and eventually won him the Nobel Prize for Economics.

This is worth reading if only to see where Nash has handwritten the symbols that were unavailable on his typewriter.

Recursive Functions of Symbolic Expressions and Their Computation by Machine, Part I (1960)

It's almost a cliche to include John McCarthy's seminal description of Lisp. This paper breaks down programming to its atoms.

Carl Sagan said that to make an apple pie from scratch, you first have to invent the universe. McCarthy shows that to build computing from scratch, all you have to do is invent S-expressions.

Time, Clocks, and the Ordering of Events in a Distributed System (1978)

Leslie Lamport examines the limits of synchronisation and causality, in a manner reminiscent of Albert Einstein's theories of relativity. Though he wrote before the creation of the internet (the paper references ARPA net), this paper gets to grips with coordination problems that are highly relevant to contemporary distributed systems.

Lamport won the 2013 Turing Prize, and the joke goes that everyone knew he deserved it for years, but we only just achieved a consensus.

Probabilistic Encryption (1983)

Modern encryption typically relies on trapdoor functions that are cheap to compute, but intractably hard to reverse. This allows someone to cheaply encrypt data and be confident that it is impossibly difficult to reverse the process and reveal the data without knowing the key. The RSA asymmetric cryptosystem is a well-known example.

A drawback in the original formulations of these schemes is that they may still permit an attacker to derive partial information about the encrypted message. In this paper, Shafi Goldwasser and Silvio Micali propose and prove a scheme for using randomness to close this loophole, by making encryption non-deterministic.

Growing a Language (1998)

To code is great fun. To write on code can be fun too. In this work, Guy Steele starts with words that have just one sound and then make words that have more sounds - one at a time. He makes the good point that growth is key for code whose might can shift through time, and on the sheer joy that comes when one plays with words.

This was first seen by the world as a talk.

Practical Byzantine Fault Tolerance and Proactive Recovery (2002)

The internet is a scary place. Not only are networks and services subject to unavailability, but they may act peculiarly, maliciously and even attempt to bring down or compromise one another.

Building distributed systems that are tolerant to any and all of these failures is commonly known as the Byzantine Generals Problem after hypothetical armies lead by unreliable generals, who must coordinate to successfully attack a city.

In this paper, Miguel Castro and Barbara Liskov describe an efficient algorithm for preserving the integrity of a system where up to a third of the nodes can fail in arbitrary and malicious ways.

Algorithms for ensuring this kind of integrity are very relevant to today's distributed systems, for example, Bitcoin, which can maintain integrity so long as at least half of the computing power of the Bitcoin network behaves correctly.

Propositions as Types (2014)

Any of Philip Wadler's papers could have made this list. Propositions as Types, still in draft at time of writing, is a new classic. Wadler's references range from the movie Independence Day to Gilbert and Sullivan as he lays bare the exquisite symmetry that is the Curry-Howard correspondence.

The Curry-Howard correspondence charts the uncanny relationship between logical propositions and the types of functional programs. What's more, it validates the importance of Brouwer's Intuitionism as described in the 1923 paper cited at the start of this post, as functions correspond to constructive proofs in the Intuitionist tradition.

This paper has a special place in my heart because Wadler released the latest draft the same morning I gave an introductory talk on the Curry-Howard correspondence. Fortunately, nothing of substance was altered, and I didn’t give the audience outdated information!

In review

I began this article bemoaning the myopia of my industry. Though we have a relatively short history, practitioners are still sadly unfamiliar with it. I read that someone asked Bjarne Stroustrup if he was including lambda expressions in C++ because it was the hip new thing - the lambda calculus dates from the 1930s and is considerably older than C++ itself.

But when I looked back at the background and diversity of the computer scientists whose work I’ve chosen to highlight, I have to wonder at my own myopia. Of the twelve authors mentioned, ten are male, and all are white.

This leads me to conclude that unless I am particularly biased, we are using only a fraction of the talent that is available to us. When I look at how far we have come in computer science over the last hundred years, I cannot even imagine what we might be able to accomplish in the next hundred years if we get this right.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.