Tech leaders adopting AI for software delivery need to consider a number of key questions:

What do I want to achieve by adopting AI for software delivery?

How do I balance innovation and risks?

How does this impact my team, including myself?

How do I measure the impact of AI?

When GenAI became a key trend with the release of ChatGPT, many organizations wanted in, and fast. Bold productivity claims, such as GitHub Copilot’s claim that developers who used the tool could complete coding tasks 55% faster, only increased interest.

However, such optimism often gave way to disappointment. After a year or so, most organizations found that only 20% of their employees were using the tools in their roles, and that they were not getting the value promised.

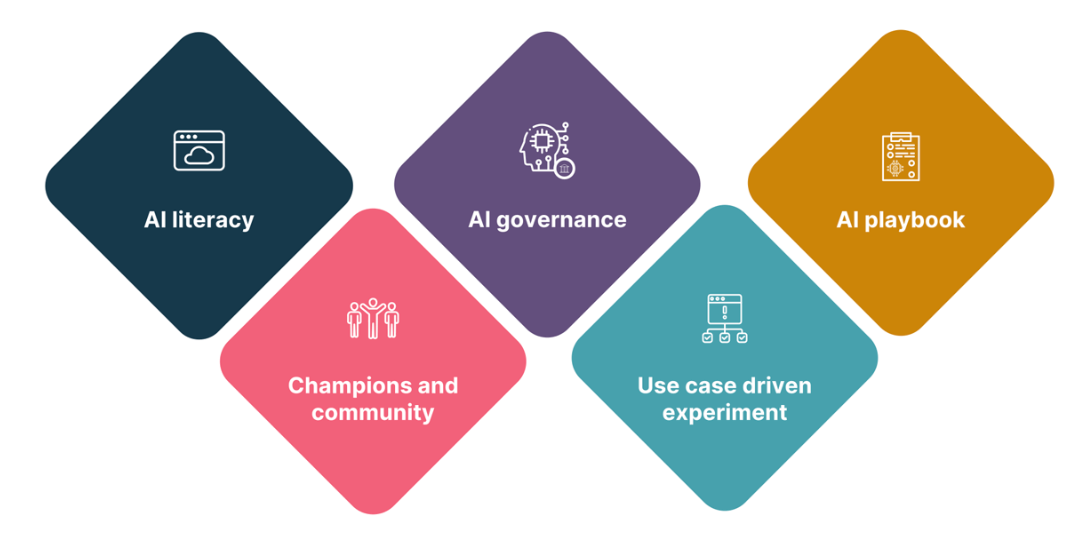

Now it’s time to move away from a tools-only view. With that in mind, we’ve developed a useful framework for adopting AI.

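The framework features five dimensions: skills and capabilities (AI literacy), champions and community, AI governance, use-case driven experimentation and the AI playbook.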

Let’s take a look at each of these in more detail and what they actually involve.

AI literacy

GenAI has had a significant impact on software development, both in the way we build software and in the way we maintain it. But for it to be used effectively, builders (whether they’re developers, business analysts, QAs or experience designers) need to develop AI literacy.

AI literacy includes the following skills:

Prompt engineering. To use generative AI to produce high-quality, maintainable and secure code that aligns with good engineering practices, users need to know how to prompt an LLM effectively.

AI evals. LLMs are non-deterministic and inherently unpredictable. Testing using evaluations (or ‘evals’) is critical if the technology is to be consistently valuable and impactful.

Critical thinking. The ability to think critically and independently, minimising automation bias and anchoring bias.

Architecture thinking. With AI taking over specific tasks, it may be possible for job roles to be raised up a further layer of abstraction. The role developers play, for instance, may move to one that focuses more on leadership and architecture.
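To make the AI evals item above more concrete, here is a minimal sketch of an eval harness. It assumes the model can be wrapped as a plain function from prompt to text; `EvalCase`, `run_evals` and `fake_model` are hypothetical names used only for illustration, and the checks are deliberately simple string tests. Because LLM output can vary between calls, the sketch runs each case several times and reports a pass rate rather than a single pass or fail.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    prompt: str
    check: Callable[[str], bool]  # returns True when the output is acceptable


def run_evals(
    model: Callable[[str], str], cases: list[EvalCase], runs: int = 5
) -> dict[str, float]:
    """Call the model several times per case and return the pass rate per prompt."""
    results = {}
    for case in cases:
        passes = sum(case.check(model(case.prompt)) for _ in range(runs))
        results[case.prompt] = passes / runs
    return results


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in for a real LLM call; it always returns the same code.
    return "def add(a, b):\n    return a + b"


cases = [
    EvalCase("Write a Python function add(a, b)", lambda out: "def add(" in out),
    EvalCase("Write add(a, b) without using eval", lambda out: "eval(" not in out),
]

pass_rates = run_evals(fake_model, cases, runs=3)
```

In practice the checks would be richer (running generated code against tests, for example), and a team would likely set a pass-rate threshold below which a prompt or model change is rejected.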

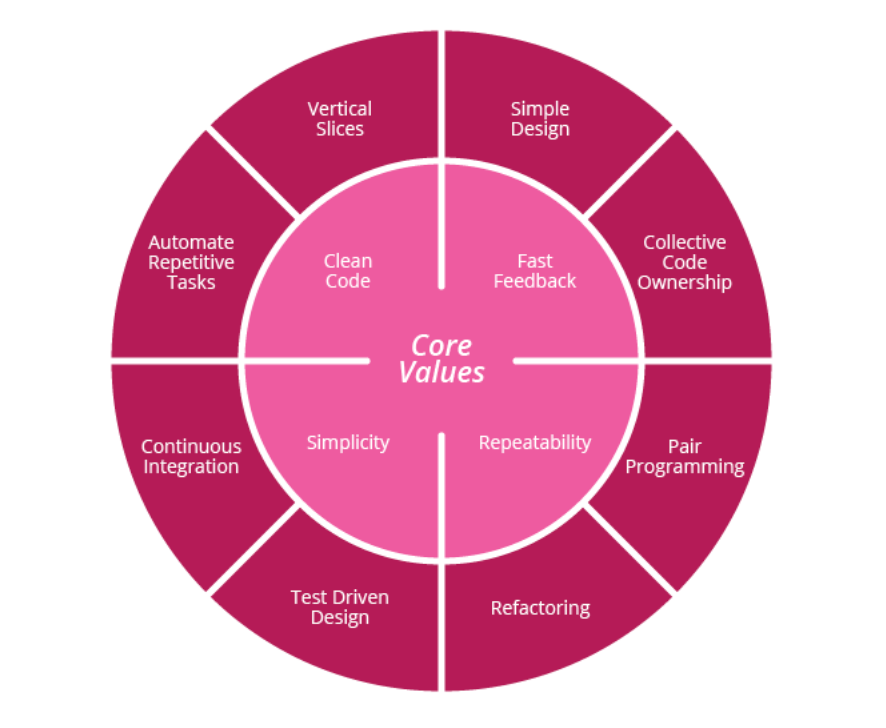

The importance of good engineering practices

With AI working as an amplifier, good engineering practices are becoming even more important, especially things like test-driven design, fast feedback, and the automation of repetitive tasks.

In addition, critical thinking (the ability to analyse, organize, consider counterfactuals and form an opinion) has emerged as the key skill for counteracting the risks of AI hallucination, bias and the creation of ‘AI slop’ in code form.

Champions and community

AI impacts every stakeholder in an organization, not just technology. Adopting AI tooling requires informed input from legal (IP protection), finance (future costs and traceability for auditing), HR (strategic workforce planning), sales (customer reaction is key), business risk and even the Board of Directors. Its impact may reach as far as cyber-insurance risks and government regulation.

Change management needs to be prioritized in order to make sure any major change is sustainable. We believe the best outcomes are achieved when people are genuinely placed at the center of any change initiative, and a coherent narrative for that change is shared with every stakeholder.

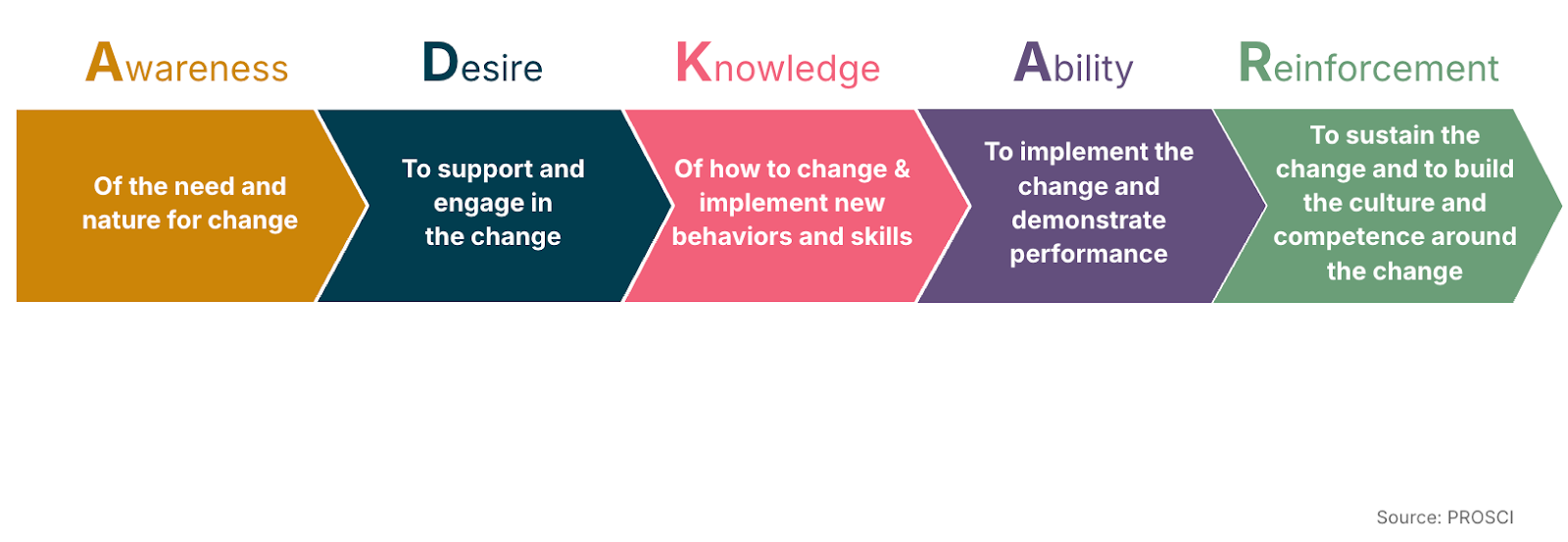

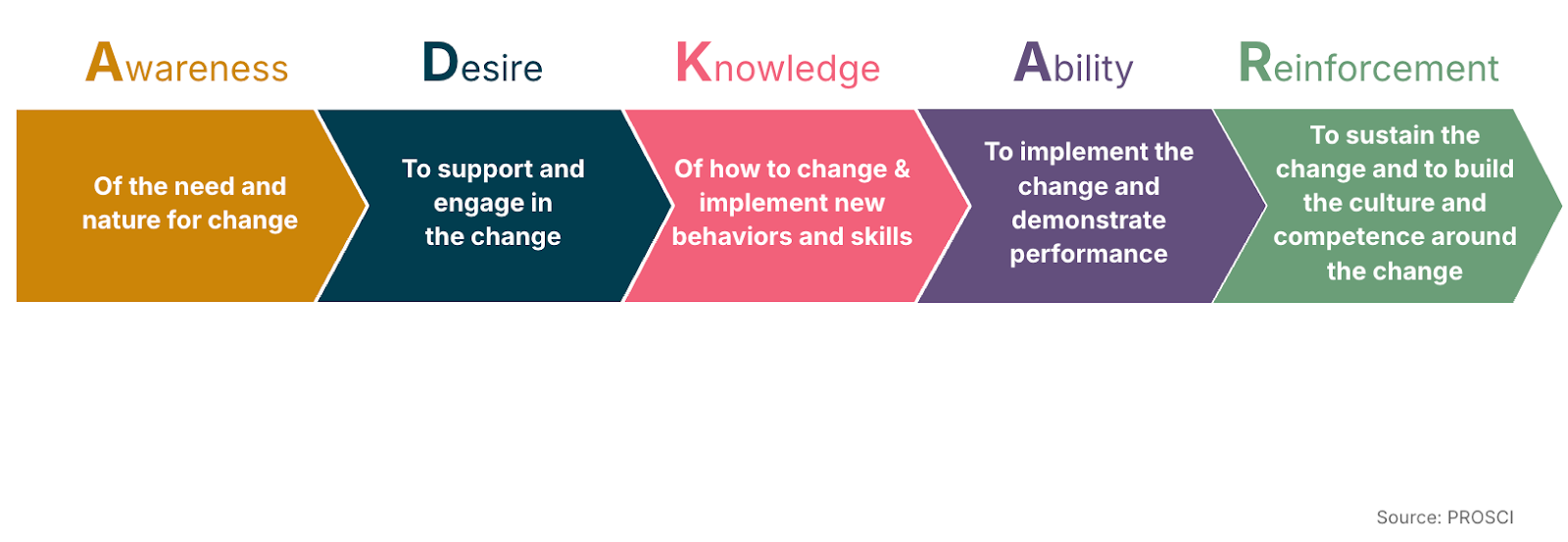

ADKAR (Awareness, Desire, Knowledge, Ability, Reinforcement) is a useful framework for structuring this kind of change.

AI champions play a crucial role here. A good champion does four things:

Learn. As the entire ecosystem is evolving rapidly, it’s important to both grow individually and to encourage and cultivate the wider team.

Experiment. There’s still a lot we don’t know about how software is going to be redefined by AI; driving experimentation is an important way to guide our direction.

Advocate. Be the advocate for AI day-to-day, leading by example and sharing relevant lessons.

Define. AI champions can play a role in defining how we work in future, striking the right balance between people and AI and identifying the new capabilities required.

AI governance

We’re just two years into the LLM revolution, and we’re already seeing some organizations paying off tech debt from earlier decisions such as building their own LLMs. How can we make wise investment decisions in this fast-moving world? How can we be sure we’re striking a balance between innovation and risk? How can we give organizations the confidence to move at the right speed without exposing them to undue risk?

Here are a few ways to implement AI governance in a lightweight way:

An AI innovation lab. This provides a production-like environment where teams can run experiments and test ideas with the right guardrails and governance controls. In addition to providing the environment, organizations should consider applying work-in-progress (WIP) limits to the number of concurrent experiments, so that they don’t disrupt business-as-usual (BAU) activities.

An AI technology radar. Technology radars can be an effective catalyst for architecture conversations, and can act as an information radiator. Creating an AI-specific edition will provide visibility about the tools and techniques you might adopt, try or stop using. This should significantly reduce the risks of using unapproved tools.

An AI ADR (architecture decision record). Architecture decision records can be an effective way to communicate AI-related decisions with internal and external stakeholders. This will reduce the risk of decisions driven by local needs having negative global consequences.
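As a rough illustration of how the radar and the WIP limit described above could work together as a lightweight gate, consider the sketch below. Everything in it is an assumption for illustration: the tool names, the `WIP_LIMIT` value of 3 and the `can_start_experiment` function are hypothetical, and the three rings simply mirror the "adopt, try or stop using" wording above.

```python
from enum import Enum


class Ring(Enum):
    ADOPT = "adopt"
    TRY = "try"
    STOP = "stop using"


# Hypothetical radar entries; real entries would come from your own reviews.
AI_RADAR = {
    "approved-code-assistant": Ring.ADOPT,
    "experimental-agent-framework": Ring.TRY,
    "unvetted-browser-plugin": Ring.STOP,
}

WIP_LIMIT = 3  # assumed cap on concurrent experiments in the innovation lab


def can_start_experiment(tool: str, active_experiments: int) -> tuple[bool, str]:
    """Return (allowed, reason) for a proposed experiment with the given tool."""
    ring = AI_RADAR.get(tool)
    if ring is None:
        return False, f"{tool} is not on the radar; request a review first"
    if ring is Ring.STOP:
        return False, f"{tool} is in the 'stop using' ring"
    if active_experiments >= WIP_LIMIT:
        return False, f"WIP limit of {WIP_LIMIT} reached; finish an experiment first"
    return True, f"{tool} is in the '{ring.value}' ring and capacity is available"


allowed, reason = can_start_experiment("experimental-agent-framework", 1)
```

Returning a reason alongside the decision keeps the gate transparent: a team that is turned down can see whether the next step is a radar review or waiting for capacity.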

Anti-patterns and the importance of use-case driven experiments

It's crucial to avoid these anti-patterns when conducting AI experiments:

Shotgun approach: This is where you try many different tools or models without a clear hypothesis or understanding of what each might offer. This leads to wasted effort and unclear results.

Isolated experiments: Experiments conducted in silos, without sharing learnings across teams or the organization, prevent collective growth and reinforce the "not invented here" syndrome.

Ignoring feedback loops: Failing to collect and act on feedback from users, developers or operational metrics turns experiments into academic exercises rather than drivers of real-world impact.

Premature scaling: Attempting to scale an experimental AI solution too quickly before its value, risks, and operational requirements are fully understood.

Lack of clear success metrics: Starting an experiment without clearly defined metrics for success or failure. This makes it impossible to evaluate the experiment's effectiveness.

Static experimentation: Running a one-off experiment and then stopping. AI adoption requires continuous experimentation and iteration to adapt to evolving technology and business needs.

Focusing only on technical metrics: Over-emphasizing technical performance metrics (e.g., accuracy, latency) while neglecting business value, user experience, and ethical considerations.

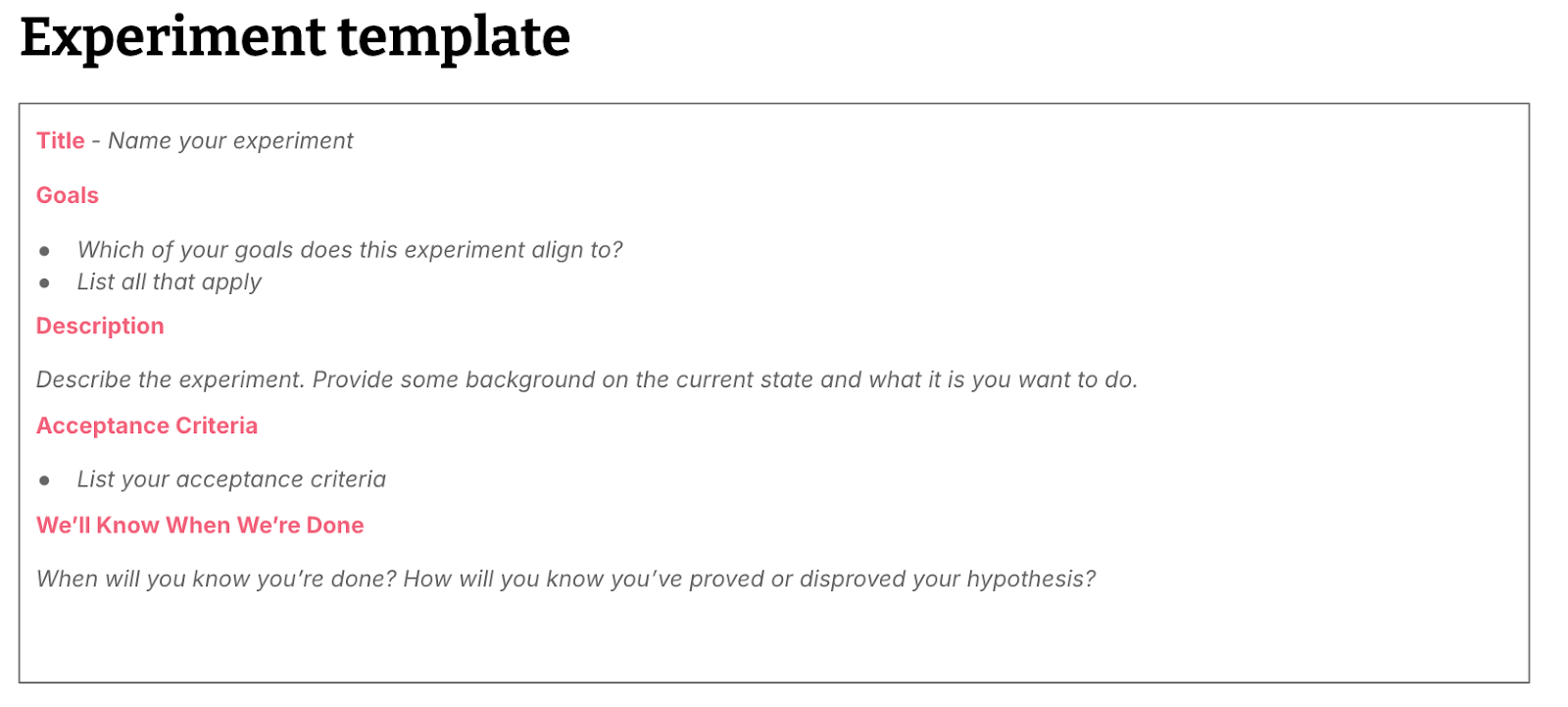

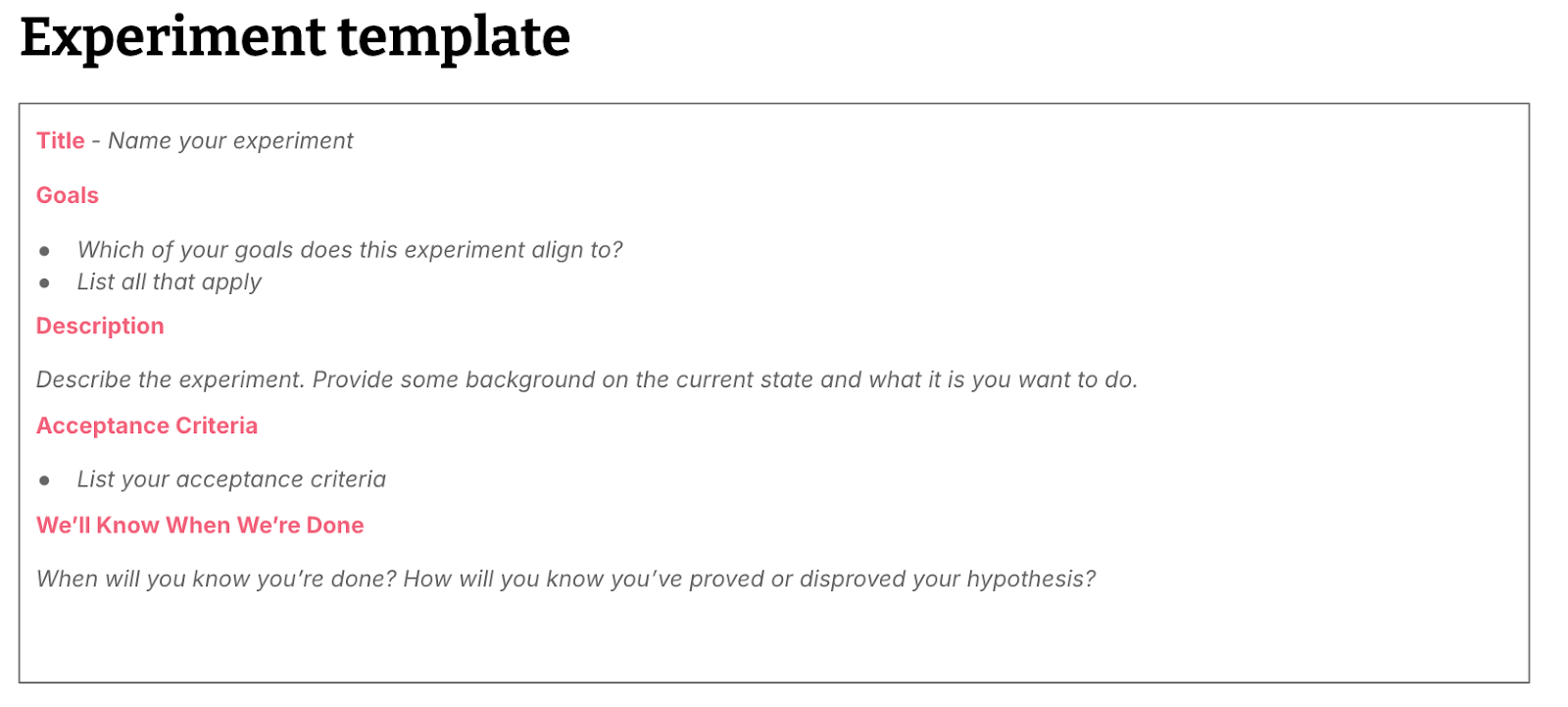

Use-case driven experiments with a clearly defined hypothesis and fast feedback loops help build organization-level transparency about what has been learned. More details about data-driven hypothesis experiments can be found here.

A good starting point for identifying where to experiment with AI is value stream mapping: map the flow from story idea to production to support, identify friction or pain points, then use AI to reduce waste in the system through automation or redesign.
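One simple way to read a value stream map is to compare active work time with waiting time per stage. The sketch below uses entirely hypothetical stage names and hours; `Stage`, `flow_efficiency` and `biggest_wait` are illustrative names, not part of any tool. It computes total lead time, flow efficiency (active time divided by lead time) and the stage with the most waiting, which is a reasonable first candidate for an AI experiment.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    process_hours: float  # time spent actively working on the item
    wait_hours: float     # time the item sits idle in this stage


# Hypothetical numbers for illustration only.
stages = [
    Stage("story analysis", process_hours=4, wait_hours=20),
    Stage("development", process_hours=16, wait_hours=8),
    Stage("code review", process_hours=2, wait_hours=30),
    Stage("release", process_hours=1, wait_hours=12),
]

# Lead time is everything that elapses from story idea to release.
lead_time = sum(s.process_hours + s.wait_hours for s in stages)

# Flow efficiency is the share of lead time spent on active work.
flow_efficiency = sum(s.process_hours for s in stages) / lead_time

# The stage with the longest wait is a candidate friction point.
biggest_wait = max(stages, key=lambda s: s.wait_hours)
```

With these made-up figures the longest wait sits in code review, so an experiment there (for example, AI-assisted review triage) would target the largest source of waste.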

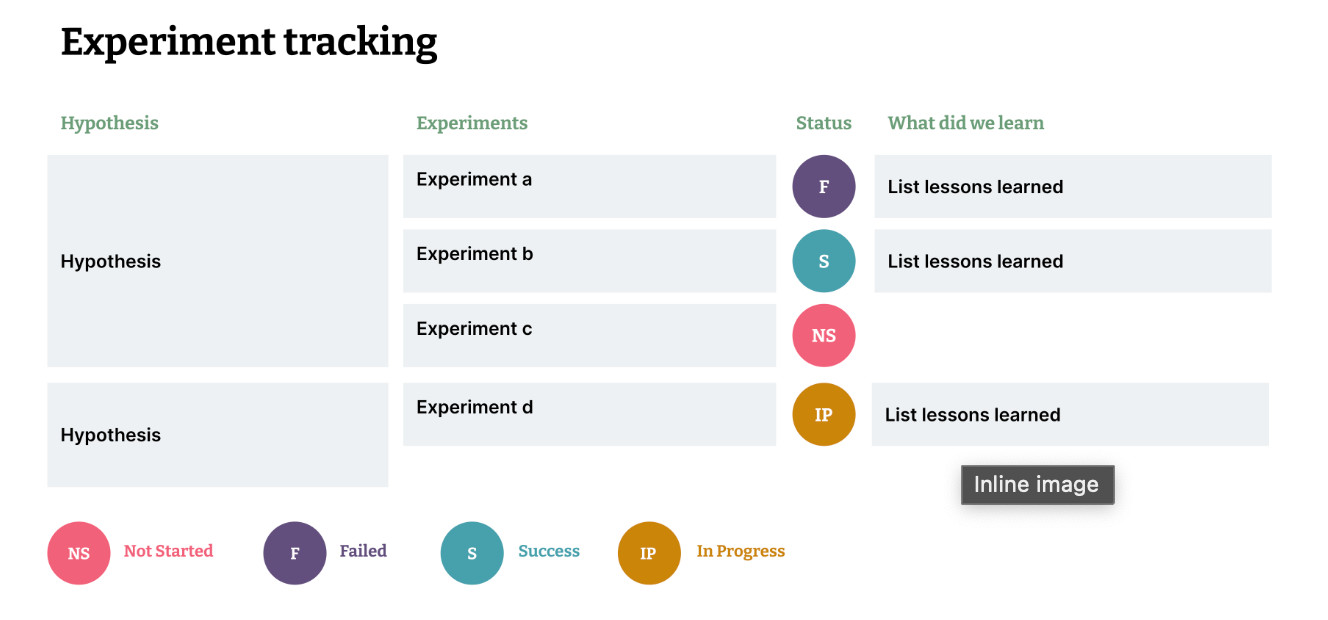

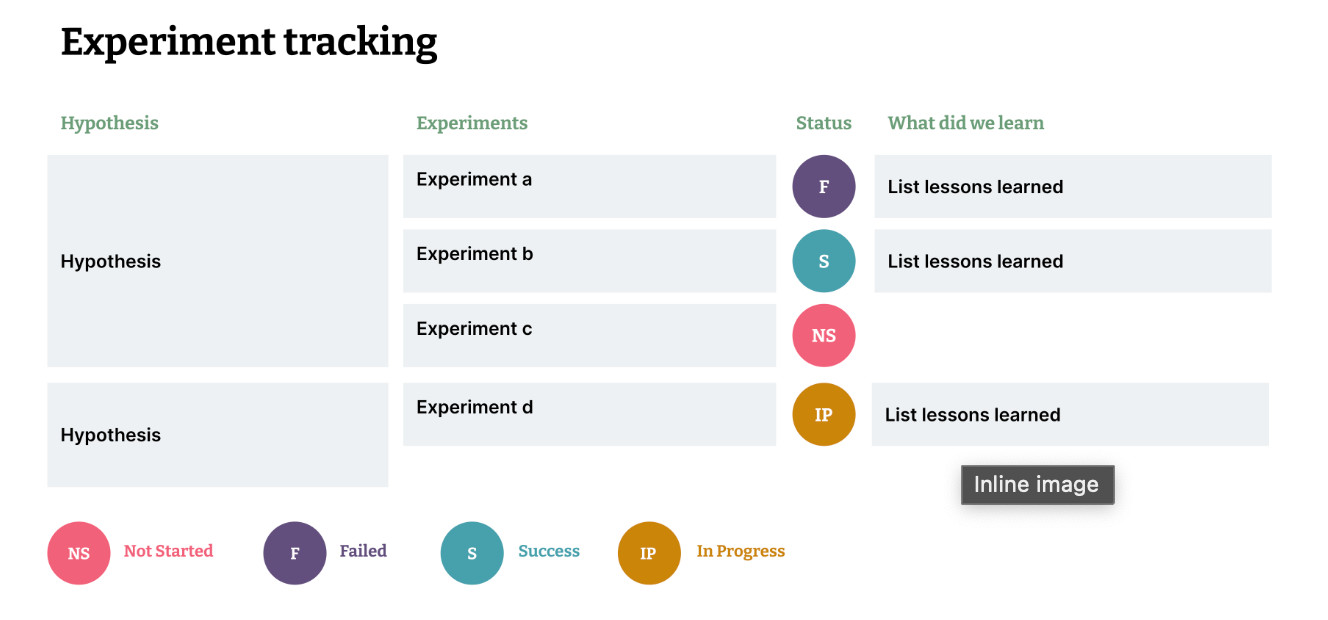

An experiments dashboard shows the status of experiments (past, current and future) to provide transparency and avoid duplication of effort.
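The data behind such a dashboard can be very small. The following is a minimal sketch under stated assumptions: the `Experiment` fields, the `dashboard` and `find_duplicates` helpers and the sample entries are all hypothetical. Each record carries a hypothesis and a success metric, in line with the anti-patterns listed earlier, and the duplicate check flags use cases registered more than once.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    PLANNED = "future"
    RUNNING = "current"
    DONE = "past"


@dataclass
class Experiment:
    use_case: str
    hypothesis: str
    success_metric: str
    owner: str
    status: Status


def dashboard(experiments: list[Experiment]) -> dict[str, list[str]]:
    """Group experiment use cases by status for a simple status board."""
    board = defaultdict(list)
    for exp in experiments:
        board[exp.status.value].append(exp.use_case)
    return dict(board)


def find_duplicates(experiments: list[Experiment]) -> set[str]:
    """Return normalised use cases that have been registered more than once."""
    seen, duplicates = set(), set()
    for exp in experiments:
        key = exp.use_case.strip().lower()
        if key in seen:
            duplicates.add(key)
        seen.add(key)
    return duplicates


# Hypothetical entries for illustration only.
experiments = [
    Experiment("Test generation for legacy code",
               "AI-drafted tests raise coverage without slowing delivery",
               "coverage change after four weeks", "team-a", Status.RUNNING),
    Experiment("Release note drafting",
               "AI drafts cut release preparation time",
               "hours spent per release", "team-b", Status.DONE),
    Experiment("test generation for legacy code ",
               "AI-drafted tests reduce escaped defects",
               "defects found after release", "team-c", Status.PLANNED),
]

board = dashboard(experiments)
overlaps = find_duplicates(experiments)
```

Here the first and third entries describe the same use case, so `overlaps` would prompt the two teams to talk before the second experiment starts.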

AI playbook

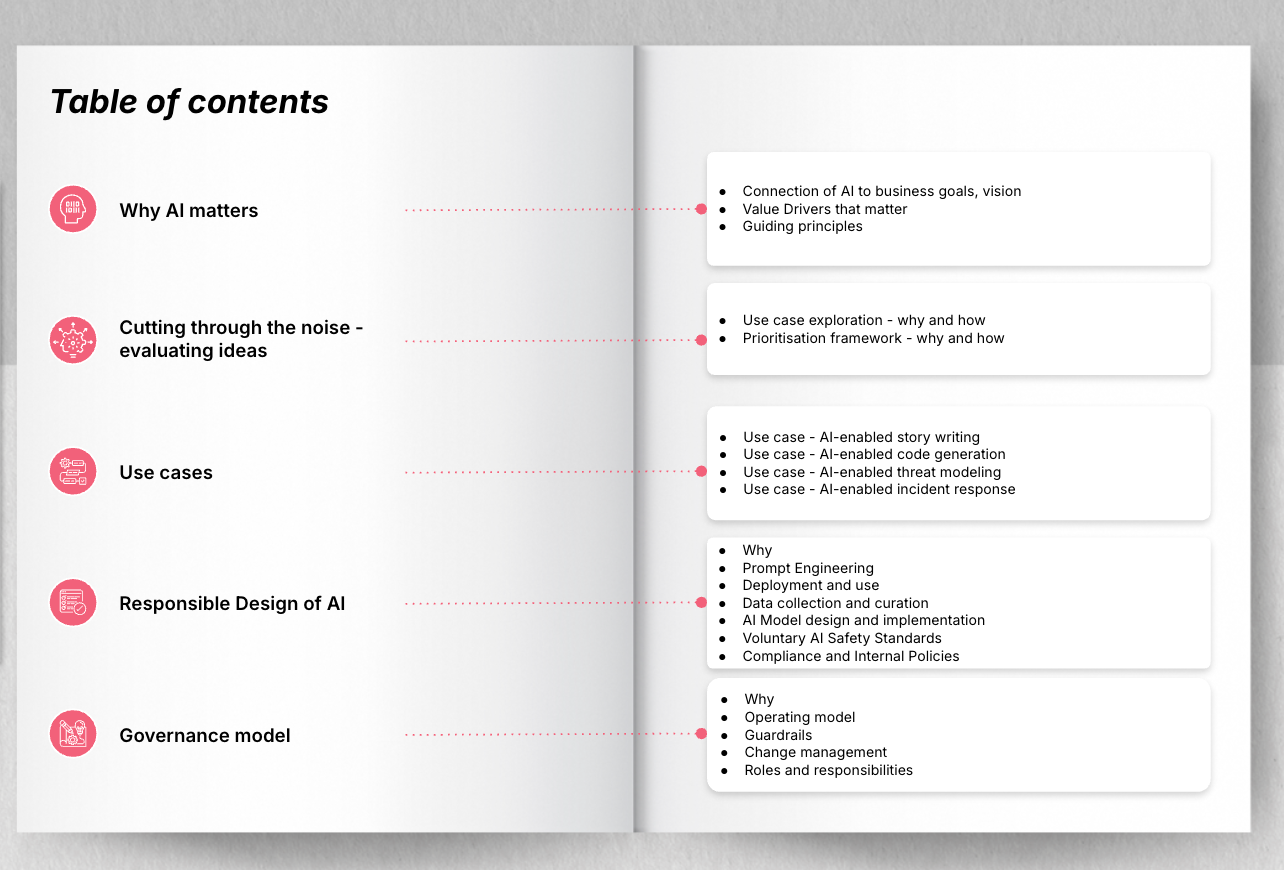

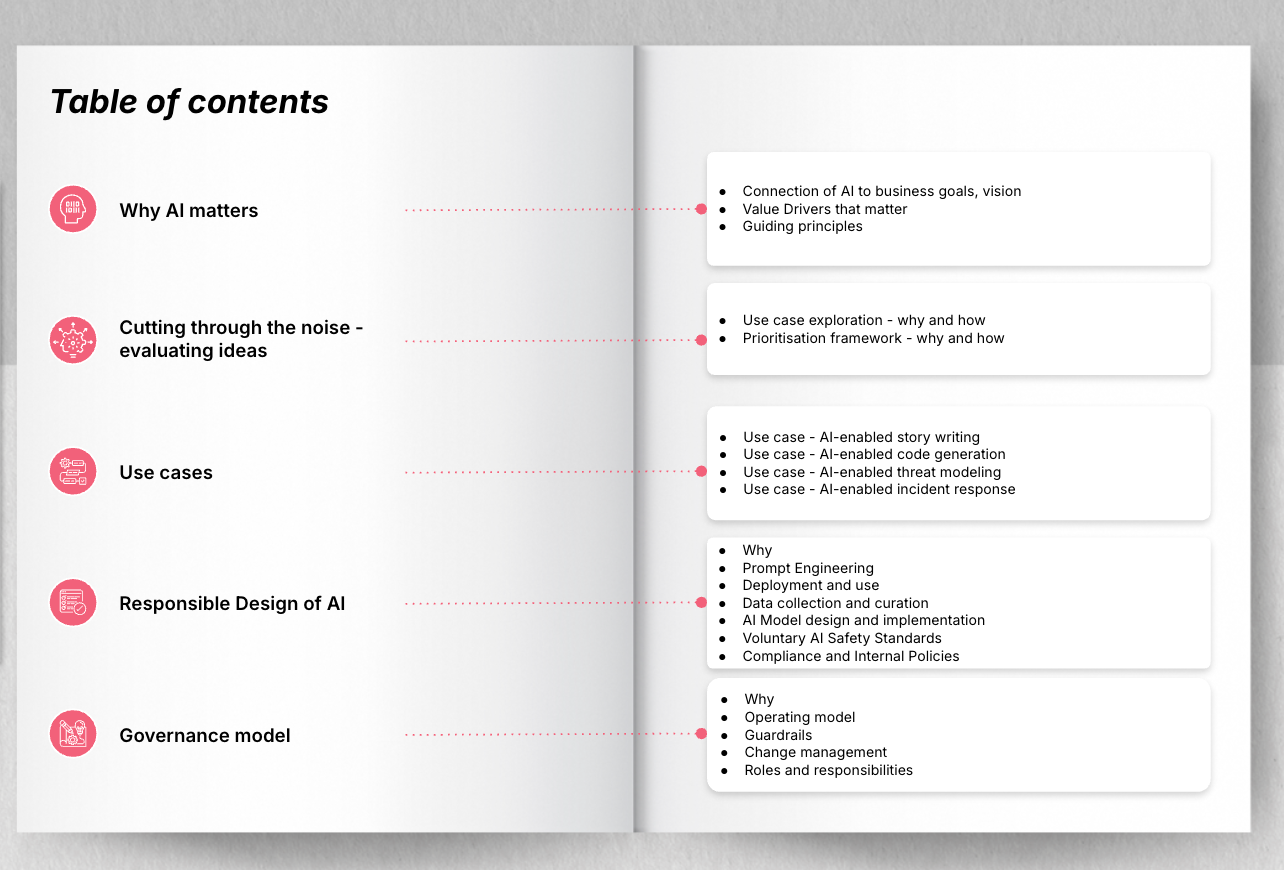

An AI playbook is the organization’s single source of truth for everything AI: principles, guidelines and practices, plus a list of documented use cases, both successful and unsuccessful. This enables guided adoption rather than repeatedly reinventing the wheel. It is important to develop a culture of transparency about failed experiments, emphasising the learning rather than punishing failure.

The value of an AI playbook lies in being grounded in real-world adoption, which helps drive business outcomes. A playbook:

Accelerates AI learning and adoption by providing a collection of documented use cases from across the organization, both successful and unsuccessful.

Mitigates risks and ensures ethical AI through clear guidelines.

Fosters innovation and experimentation by leveraging past and latest insights.

Builds a collective organizational memory as the single source of truth for AI adoption.

Potential outcomes for a successful AI adoption

When AI adoption successfully addresses these five dimensions (skills and capabilities, champions and community, AI governance, use-case driven experimentation and the AI playbook), organizations are likely to achieve these outcomes:

Improved developer experience by using AI to reduce waste and shift cognitive load across the entire value stream.

Lowered tech risk by reducing manual operations and simplifying the process.

Faster value creation by leveraging proven use cases with clearly defined business value.

Enhanced agility through evolved capabilities across the organization.

This strategic approach enables teams to move beyond initial enthusiasm, fostering sustained and impactful AI adoption patterns that fundamentally redefine how software is built and delivered in an AI era.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.