By Tiankai Feng, Patrick Schidler and Alice Crohas

Published: February 16, 2026

A guide to human-centered AI for executives by Thoughtworks and Microsoft

AI tools are everywhere and provide value in everyday life. However, as Sol Rashidi notes in her book Your AI Survival Guide (Wiley, 2024), tech is the easiest part of the AI lifecycle. When AI fails, the cause is usually human: a failure of judgment, foresight or responsibility.

Since people adapt more slowly than technology does, the important question for you, as an executive, is how to keep humans at the center of your AI efforts.

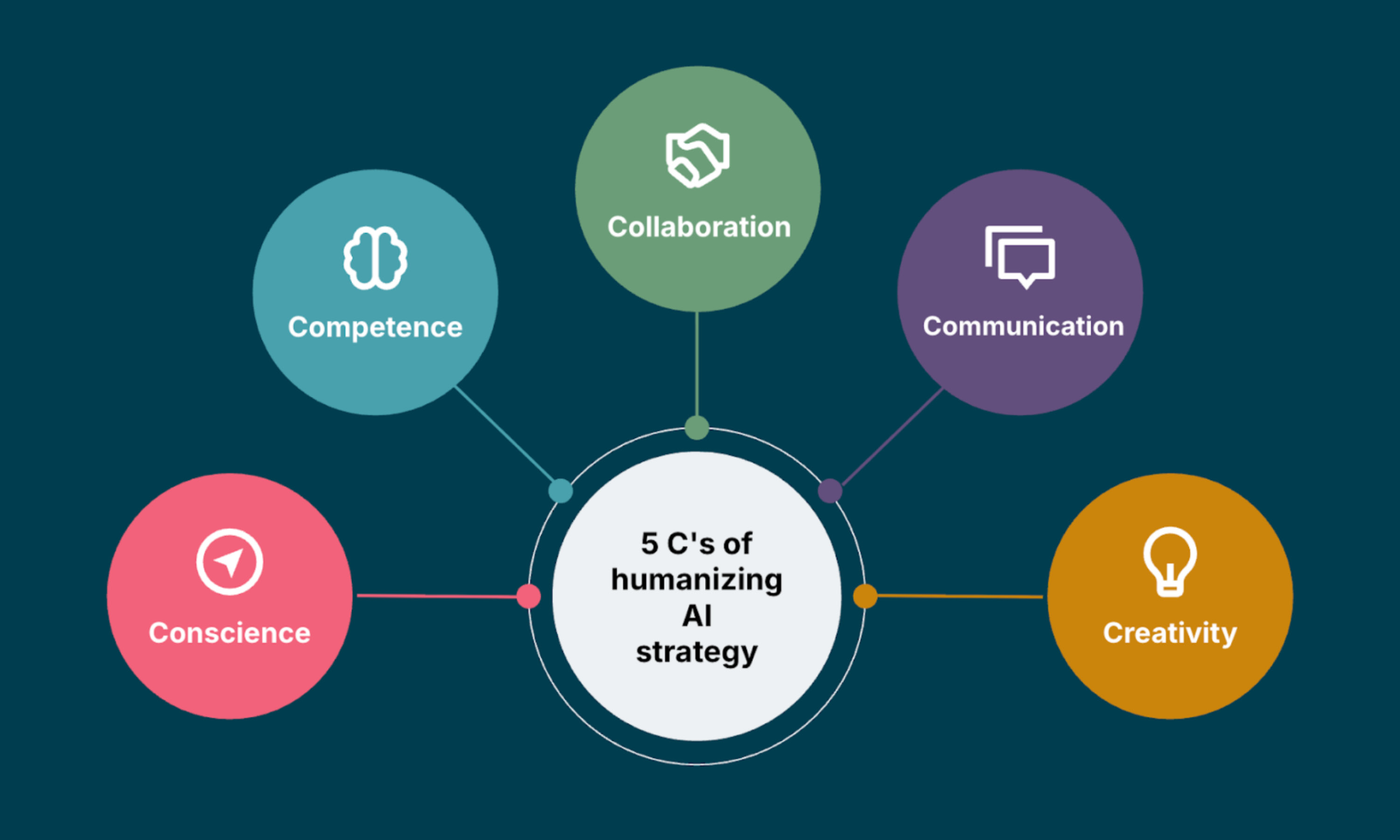

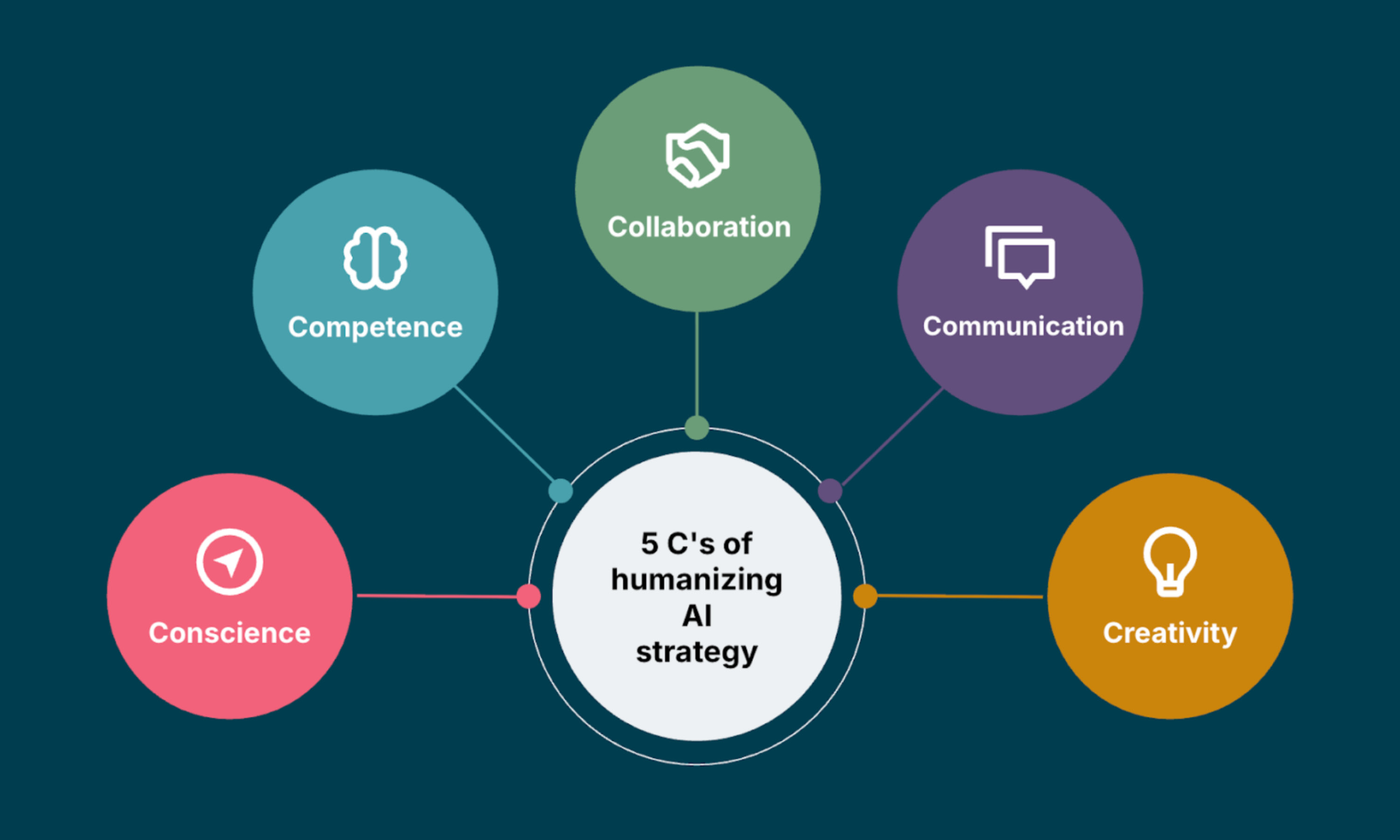

In his book Humanizing AI Strategy: Leading AI with Sense and Soul (Technics Publications, 2025), Tiankai Feng, Data and AI Strategy Director at Thoughtworks, developed the five C’s framework – Competence, Collaboration, Communication, Creativity and Conscience – as a human-centric lens for AI initiatives.

In this article, we’ll take a closer look at what each C means.

The five C’s framework for human-centered AI

The five C’s framework is a simple, memorable way to keep sight of the human side when designing an AI strategy:

Competence is about developing AI literacy so that we don’t blindly follow what algorithms suggest, and about keeping the right human involved at the right time.

Collaboration reminds us that AI is a human team effort and starts from the bottom up in an organization.

Communication is about helping everyone understand what the AI is doing (and what it isn’t) and about designing AI conversations inside and out.

Creativity is about co-creating with AI and using prompting to augment human originality, not replace it.

Conscience is the grounding force and is about ethics, integrity, governance and responsibility.

Competence

AI literacy starts with a basic technical understanding of how AI works and produces its results. Human behaviors such as hallucinating, thinking and arguing are attributed to LLMs, but what seems very “human” is actually, in very simple terms, an LLM calculating the most probable next word (more precisely, the next token) in a sequence.
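To make “calculating the most probable next word” concrete, here is a minimal Python sketch of greedy next-word generation. The probability table `TOY_MODEL` and the helpers `next_word` and `generate` are hypothetical illustrations: a real LLM computes probabilities over a vocabulary of many thousands of tokens with a neural network that looks at the whole context, and it often samples rather than always taking the top choice. The point is that the loop simply picks a likely continuation; no intent or understanding is involved.

```python
# Hypothetical toy "model": for each previous word, the probability of each next word.
# A real LLM computes such probabilities with a neural network over its full context.
TOY_MODEL = {
    "the": {"cat": 0.5, "dog": 0.3, "report": 0.2},
    "cat": {"sat": 0.6, "ran": 0.4},
    "sat": {"down": 0.7, "quietly": 0.3},
}


def next_word(words):
    """Return the most probable next word given the last word, or None if unknown."""
    probs = TOY_MODEL.get(words[-1])
    if not probs:
        return None
    # Greedy choice: take the highest-probability continuation.
    return max(probs, key=probs.get)


def generate(prompt, max_words=5):
    """Repeatedly append the most probable next word until none is available."""
    words = prompt.split()
    for _ in range(max_words):
        word = next_word(words)
        if word is None:
            break
        words.append(word)
    return " ".join(words)


if __name__ == "__main__":
    print(generate("the"))  # the cat sat down
```

Calling `generate("the")` returns “the cat sat down” and stops because the table has no continuation for “down”.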

As Mustafa Suleyman, CEO, Microsoft AI, points out, AI isn’t “blackmailing” you any more than a robot vacuum cleaner, repeatedly banging into your leg, is attacking you. Both are just following their instructions – no malice, no guilt, no emotions or moral compass involved.

AI literacy doesn’t end with the technical aspects; it also includes ethical awareness, prompt literacy and the ability to interpret and critique model outputs. Because AI sounds smart, we sometimes forget to think critically.

Competence in the AI era also relies on the human-in-the-loop design pattern. It’s about having the right human at the right time to judge AI’s outputs and intervene if necessary.
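The human-in-the-loop pattern can be sketched as a simple routing rule that decides when an AI suggestion may be applied automatically and when the right human must judge it first. This is a minimal Python sketch under stated assumptions, not a prescribed design: the `AIOutput` fields, the `route` function, the 0.8 threshold and the notion of a “high-impact” decision are all hypothetical and would be replaced by your own policy.

```python
from dataclasses import dataclass


@dataclass
class AIOutput:
    """Hypothetical shape of one AI suggestion awaiting a decision."""
    suggestion: str
    confidence: float  # assumed model score in [0, 1]
    high_impact: bool  # assumed flag, e.g. affects customers, money or legal standing


def route(output: AIOutput, threshold: float = 0.8) -> str:
    """Return who acts on the suggestion under a hypothetical policy."""
    if output.high_impact:
        # High-stakes decisions always go to a human, whatever the score.
        return "human_review"
    if output.confidence < threshold:
        # Low confidence: a human judges the output and can override it.
        return "human_review"
    # Routine, high-confidence suggestions are applied automatically.
    return "auto_apply"


if __name__ == "__main__":
    examples = [
        AIOutput("Tag ticket as 'billing'", confidence=0.95, high_impact=False),
        AIOutput("Tag ticket as 'legal'", confidence=0.55, high_impact=False),
        AIOutput("Approve 5,000 EUR refund", confidence=0.97, high_impact=True),
    ]
    for item in examples:
        print(f"{item.suggestion}: {route(item)}")
```

Checking the high-impact flag before the score means even a very confident model cannot bypass human judgment where accountability matters most.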

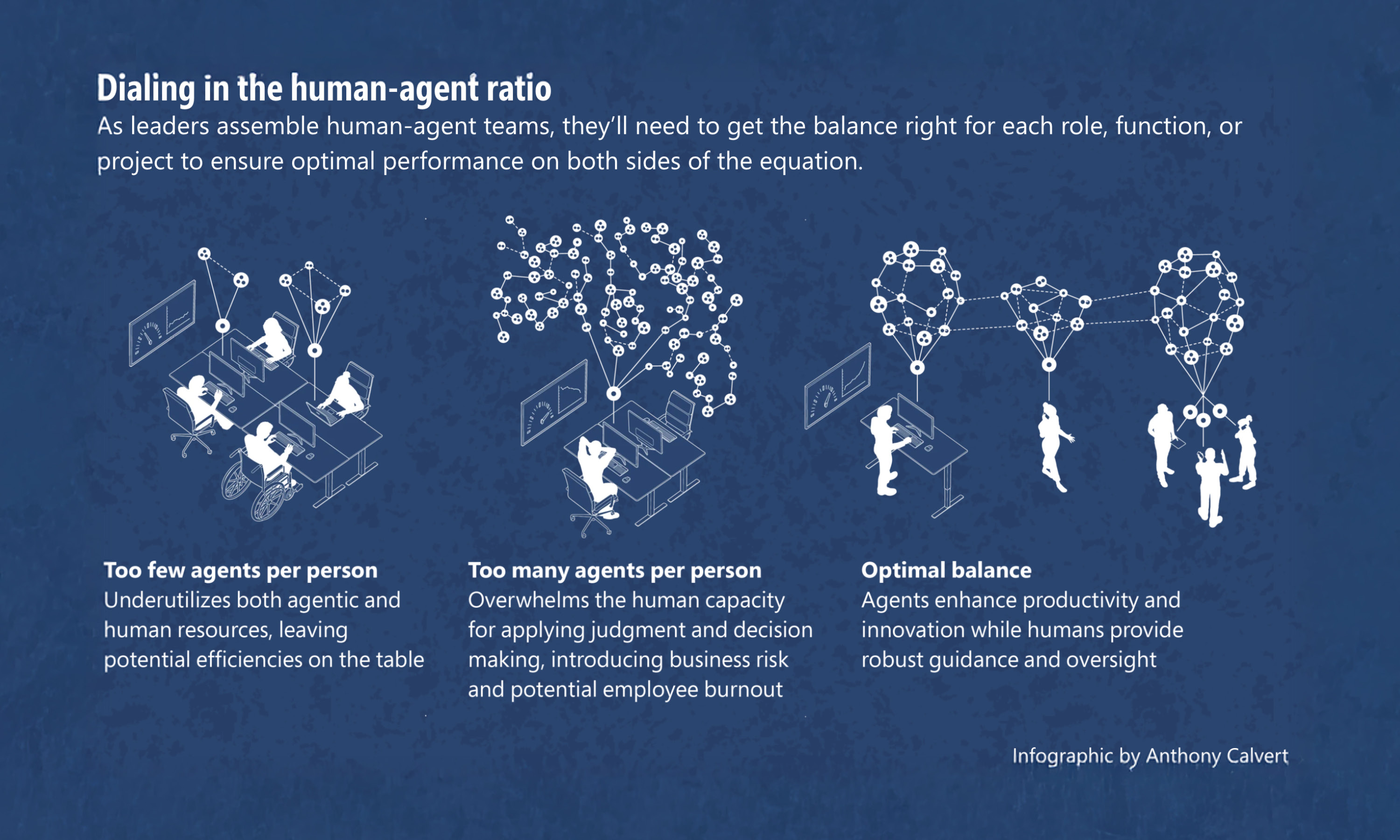

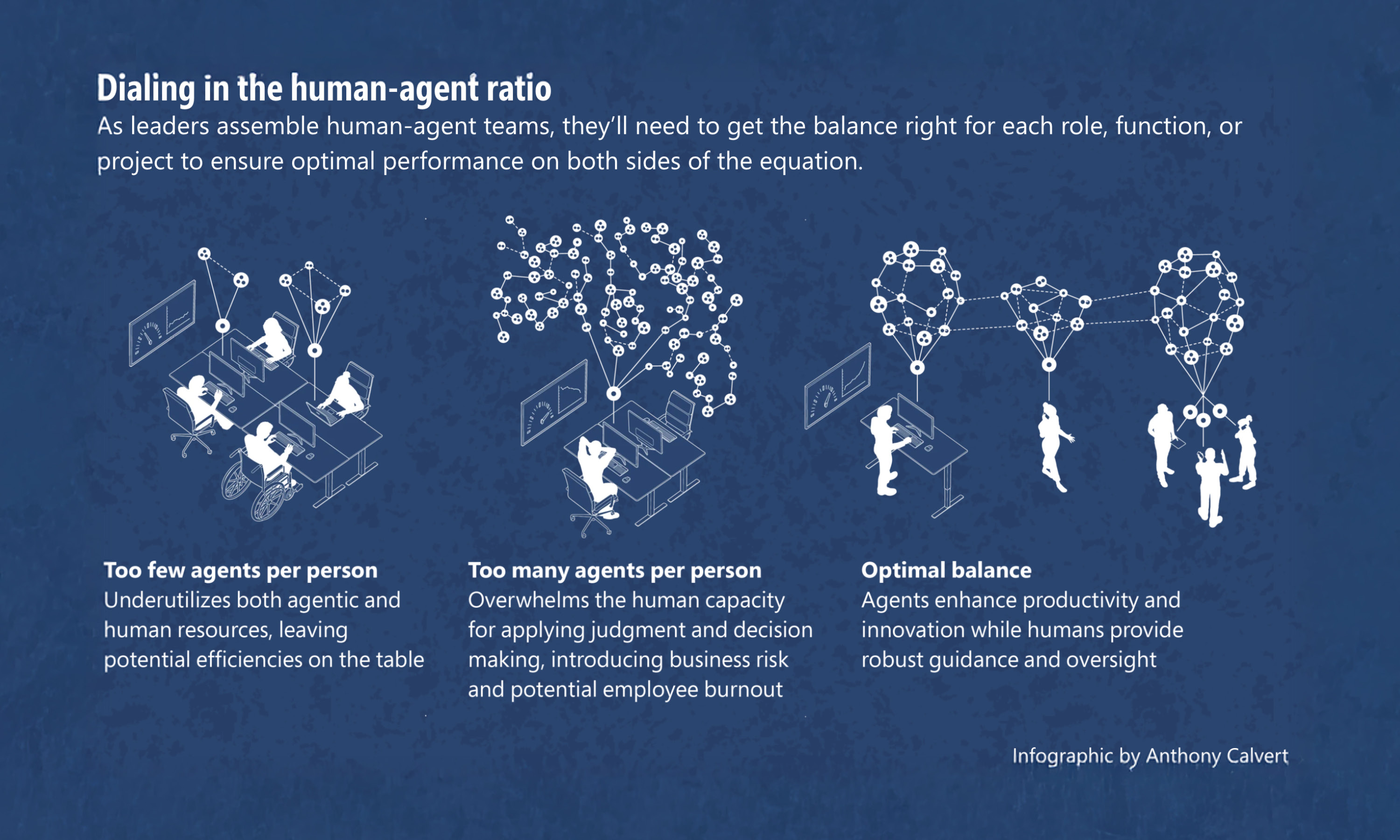

Microsoft’s Work Trend Index Annual Report 2025 highlights the emergence of an entirely new kind of organization, the Frontier Firm, in which humans take on the role of “Agent Boss”, rewriting the classic enterprise organizational chart. Humans manage one or more agents and orchestrate these digital co-workers while retaining the power to override decisions, relying on AI appropriately (neither over-relying on it nor giving up critical judgment) and remaining ultimately responsible for the agents’ actions.

Collaboration

AI initiatives often fall apart not because the models are wrong, but because the teams around them were never aligned in the first place. Different incentives, different vocabularies, different assumptions about what “success” looks like: these are the real blockers. That’s why teams that are truly multidisciplinary and cross-functional by default (legal, security, ethics, business, tech) are fundamental.

Continuous collaboration is also needed. What wasn’t possible yesterday might be possible today: according to Satya Nadella, Microsoft’s CEO, AI model capabilities are doubling every six months. So while many of us approach AI as a project (with a defined start and end date), we must rethink how to adapt to this pace.

Continuous collaboration can start within a project, but it needs to become an ongoing process (e.g., part of existing project portfolio management processes) that identifies new opportunities early and brings new stakeholders into existing initiatives.

Another key aspect is to follow the pain in your organization when launching a new AI use case. Where are your people frustrated? What do your customers complain about? Where is the business losing time and money? This way, you’re no longer pushing an AI agenda from the top but creating value with AI from the bottom up, which in turn generates advocacy.

This point of view is further supported by MIT’s State of AI in Business 2025 report. With the capabilities GenAI has today, the biggest ROI is expected to come from back-office functions rather than “shiny new” front-end projects. Focusing there might not be as inspiring as building something brand new, but given today’s business challenges (cost pressure, competition, the skills gap), applying AI where it can have the biggest impact today will not only improve competitiveness but also free up resources for more differentiating projects tomorrow.

Finally, the old saying “culture eats strategy for breakfast” still holds true with AI, as Microsoft’s New Future of Work Report 2025 emphasizes. Organizational AI adoption depends on employees as much as on leaders:

Workers can be reluctant to adopt top-down-mandated AI products that prioritize efficiency over quality and creativity, undermining the traditional view of humans as the core value driver of businesses. This reluctance limits the success of AI pilot programs (Young et al., 2025; Sharma, 2025; Murire, 2024).

Organizations can facilitate AI adoption by creating systems and incentives for employees to share how they use AI with one another (Tursunbayeva & Chalutz-Ben Gal, 2024; Winsor, 2024).

Communication

Communicating about what the AI does and doesn’t do is essential. Trust is foundational to any human-AI interaction, and transparency plays a central role in building it. That’s why transparency checkpoints have to be designed into the experience of using AI:

Plain-language explanations of how the AI works

Confidence scores that are visible and interpretable by non-technical users

Side-by-side examples showing model behavior in different scenarios

Clear escalation paths when something goes wrong

For example, Microsoft’s Transparency Notes for Copilot are intended to help readers understand how Microsoft AI technology works, the choices system owners can make that influence system performance and behavior, and the importance of thinking about the whole system, including the technology, the people and the environment.
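One way to make confidence scores interpretable for non-technical users is to translate them into plain-language bands with a suggested next step. The Python sketch below assumes the system exposes a score between 0 and 1; the `describe_confidence` function, the 0.9 and 0.6 cut-offs and the wording of the hints are hypothetical. Before showing such labels to users, the bands would need tuning and a calibration check, since a raw model score is not necessarily a reliable probability.

```python
# Hypothetical plain-language hints for each confidence band.
HINTS = {
    "high": "You can usually rely on this answer.",
    "medium": "Review the key facts before acting.",
    "low": "Treat this as a draft and escalate if unsure.",
}


def describe_confidence(score: float) -> str:
    """Turn a model score in [0, 1] into a user-facing message (hypothetical bands)."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= 0.9:
        band = "high"
    elif score >= 0.6:
        band = "medium"
    else:
        band = "low"
    percent = round(score * 100)
    return f"{band.capitalize()} confidence ({percent}%): {HINTS[band]}"


if __name__ == "__main__":
    for s in (0.92, 0.6, 0.3):
        print(describe_confidence(s))
```

Pairing each band with a next step, rather than showing the number alone, also gives users the escalation path the list above calls for.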

How the leadership team communicates about (and with) AI internally (AI strategy, goals, principles, etc.) is crucial: employees need clarity, and the leadership team should role-model knowledgeable, balanced communication about AI’s risks and opportunities.

While many AI systems use natural language as their interface, that doesn’t mean they automatically know how to communicate effectively, respectfully or clearly. Hence the importance of designing AI conversations inside and out: your AI should reflect your brand, team and values.

The key elements of designing how AI should communicate are:

Ensure brand consistency: Your brand book and guidelines, as well as your corporate communication strategy, should be reflected in how the AI communicates.

Rethink communication with AI: In the past, corporate communications (including project communications) were mostly broadcast-style (“1:many”), with the same message communicated to everyone. AI adds new capabilities to communication. While staying consistent in wording and tone, communication can be adapted more easily than ever before to individuals’ backgrounds and needs. This means 1:many truly becomes 1:individuals, with more personalized communication driving more awareness or action.

Set guardrails: Clarity on what to communicate, and what not to communicate, is key. Once communication becomes the “UI”, we need to secure that channel accordingly.

Tweak the AI’s voice: The AI should speak in its own style. Microsoft recently released Mico to make Copilot more personal and adaptable to users’ needs and styles, while staying true to Microsoft’s brand values. The new Mico character – its name a nod to Microsoft Copilot – is expressive, customizable and warm, and designed to be empathetic and supportive, not sycophantic.

Creativity

With the rise of GenAI, it’s tempting to think creativity is becoming machine territory. But there is a big difference between remixing what already exists and inventing something that’s never been seen before. Rather than seeing AI as a replacement for human creativity, you should see it as an amplifier.

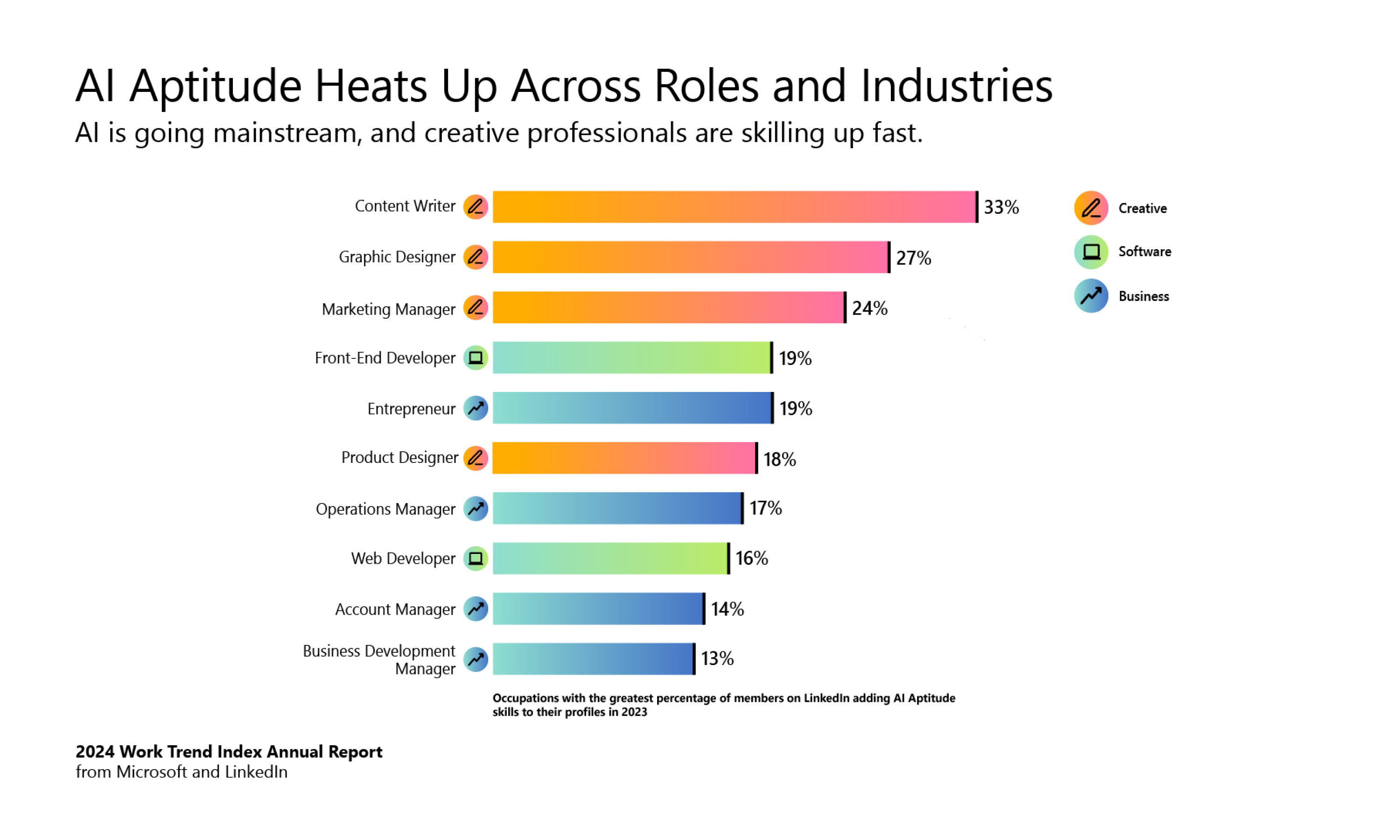

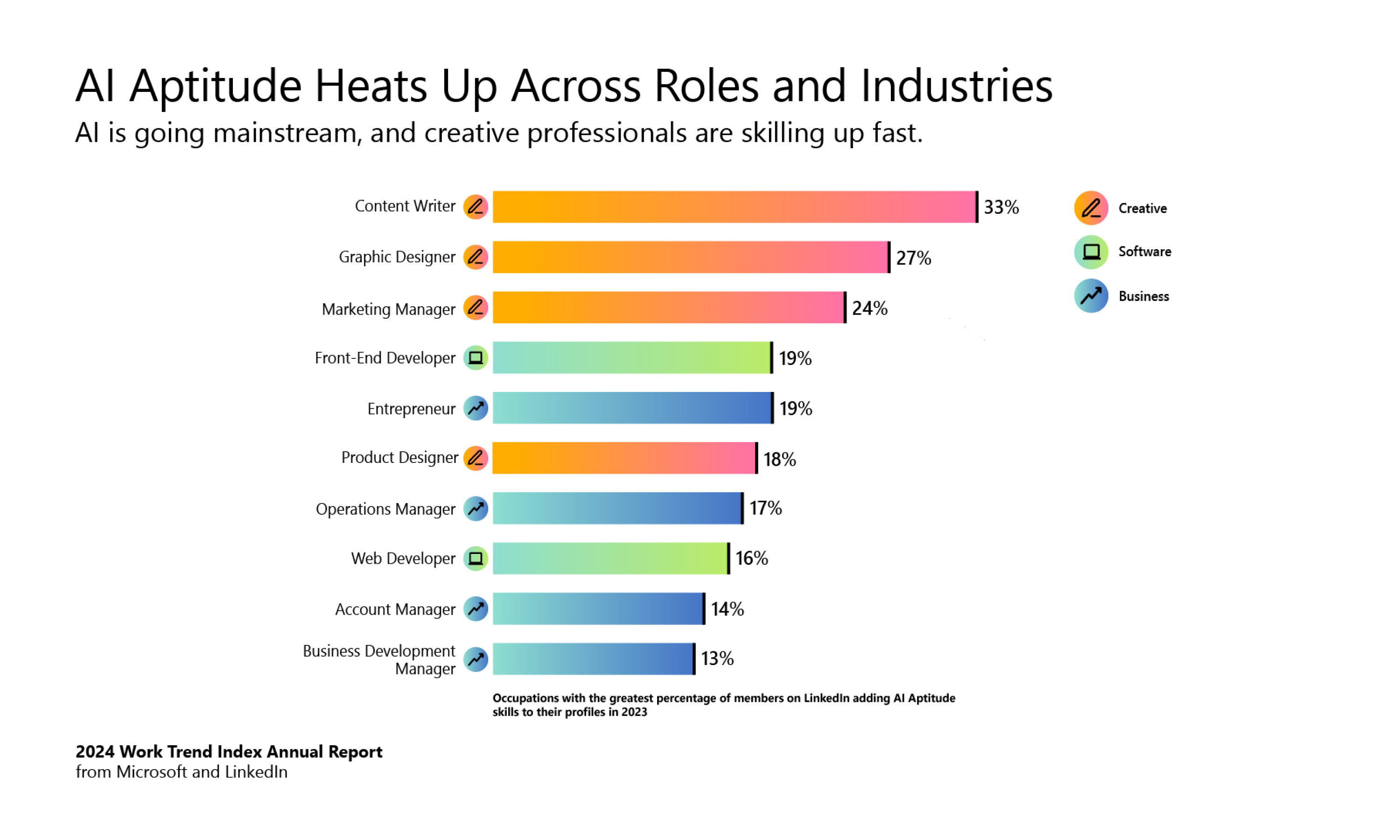

As the Microsoft Work Trend Index Annual Report 2024 shows, 75% of knowledge workers use AI at work today and 84% of the users say that AI helps them be more creative. Among the different roles, creative professionals are skilling up fast.

It’s tempting to think of a prompt as a quick instruction such as “generate 10 ideas”, but prompting done well isn’t about giving orders to a machine; it’s about crafting direction. We should see prompting as a creative discipline: the prompt has become the new creative brief. It can reveal your blind spots and the perspectives you unconsciously prioritize, and it can act as your psychological mirror and as a sounding board or sparring partner, as illustrated in How to brainstorm new ideas with AI.

As the Microsoft New Future of Work Report 2025 points out, Human-AI co-creation is shifting from one-shot outputs to context-aware, interaction-driven partnerships. Modern LLMs have fundamentally shifted content creation workflows from assuming perfect results in a single attempt to engaging in iterative, multi-turn collaborative refinement (Mysore et al., 2025). This transformation reflects both technological advances in LLM capabilities and profound changes in how users perceive and interact with AI (Reza et al., 2025), increasingly viewing it as a creative partner rather than a passive tool (Wan et al., 2024).

Conscience

Conscience is about keeping AI grounded in human values. The question is no longer what we can build with AI, but what we should build. Luckily, there are frameworks already in place that help translate what “good” means, such as the EU AI Act and the NIST AI Risk Management Framework in the US.

Although AI is very new in many respects, it’s also similar to many other IT applications in terms of governance and compliance. With the right controls in place, AI is possible even in highly regulated scenarios; Microsoft’s customer WTS is an example: plAIground - Your AI Tax Software. AI needs to be done right, and the EU AI Act is a powerful competitive advantage for AI in the EU, as it sets clear guardrails that mitigate potential investment risks.

Microsoft, in its Responsible AI Transparency Report 2025, highlights the following aspects of combining AI with human conscience:

How to build AI responsibly

How to make decisions about releasing GenAI systems and models

How to support customers in building AI responsibly

How to learn, evolve and grow in responsible AI work

Spotting and reducing bias is crucial to responsible AI. The tricky thing about bias is that it doesn’t announce itself with flashing lights and sirens. It shows up quietly, does its damage politely and, by the time somebody notices, it has already seasoned the entire system with unfairness.

There are open-source tools, such as Fairlearn (Microsoft, 2020), that provide concrete metrics and algorithms for assessing different types of fairness. They aren’t magic bullets; they’re more like bias metal detectors. They’ll help you find problems, but you still need to decide what to do about them.
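To illustrate what such a metric measures, here is a minimal pure-Python sketch of one common fairness check, the demographic parity difference: the gap between the highest and lowest rate at which groups receive a positive prediction. The `selection_rates` and `parity_gap` helpers and the sample data are hypothetical; in practice, Fairlearn’s `fairlearn.metrics.demographic_parity_difference` and `MetricFrame` compute this kind of per-group metric for you.

```python
from collections import defaultdict


def selection_rates(y_pred, sensitive_features):
    """Share of positive predictions (1s) within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(y_pred, sensitive_features):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(y_pred, sensitive_features):
    """Largest minus smallest group selection rate (0 means parity)."""
    rates = selection_rates(y_pred, sensitive_features)
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Hypothetical loan-approval predictions (1 = approved) for two groups.
    y_pred = [1, 0, 1, 1, 0, 0, 1, 0]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    print(selection_rates(y_pred, groups))  # {'A': 0.75, 'B': 0.25}
    print(parity_gap(y_pred, groups))  # 0.5
```

Here group A is approved 75% of the time and group B 25%, a gap of 0.5. The number flags a disparity; deciding whether it is unjustified, and what to do about it, remains a human judgment.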

Summary

The five C’s framework is a useful way to make an AI strategy more human. In today’s world, it’s not about what technology is capable of doing but about what we want it to do to serve humanity. Tiankai’s book reminds us to be clear about which tasks should remain human, as we are still in the driver’s seat.

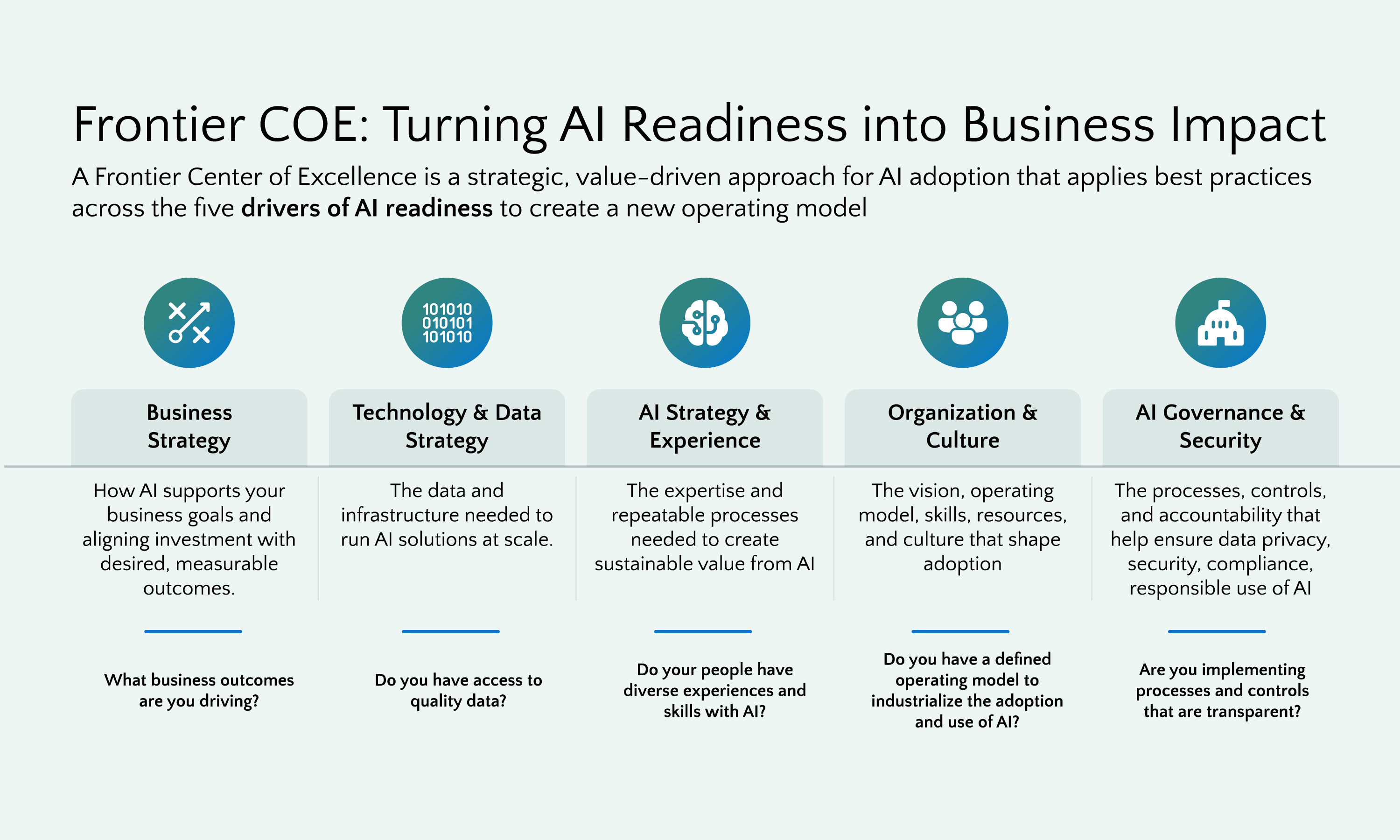

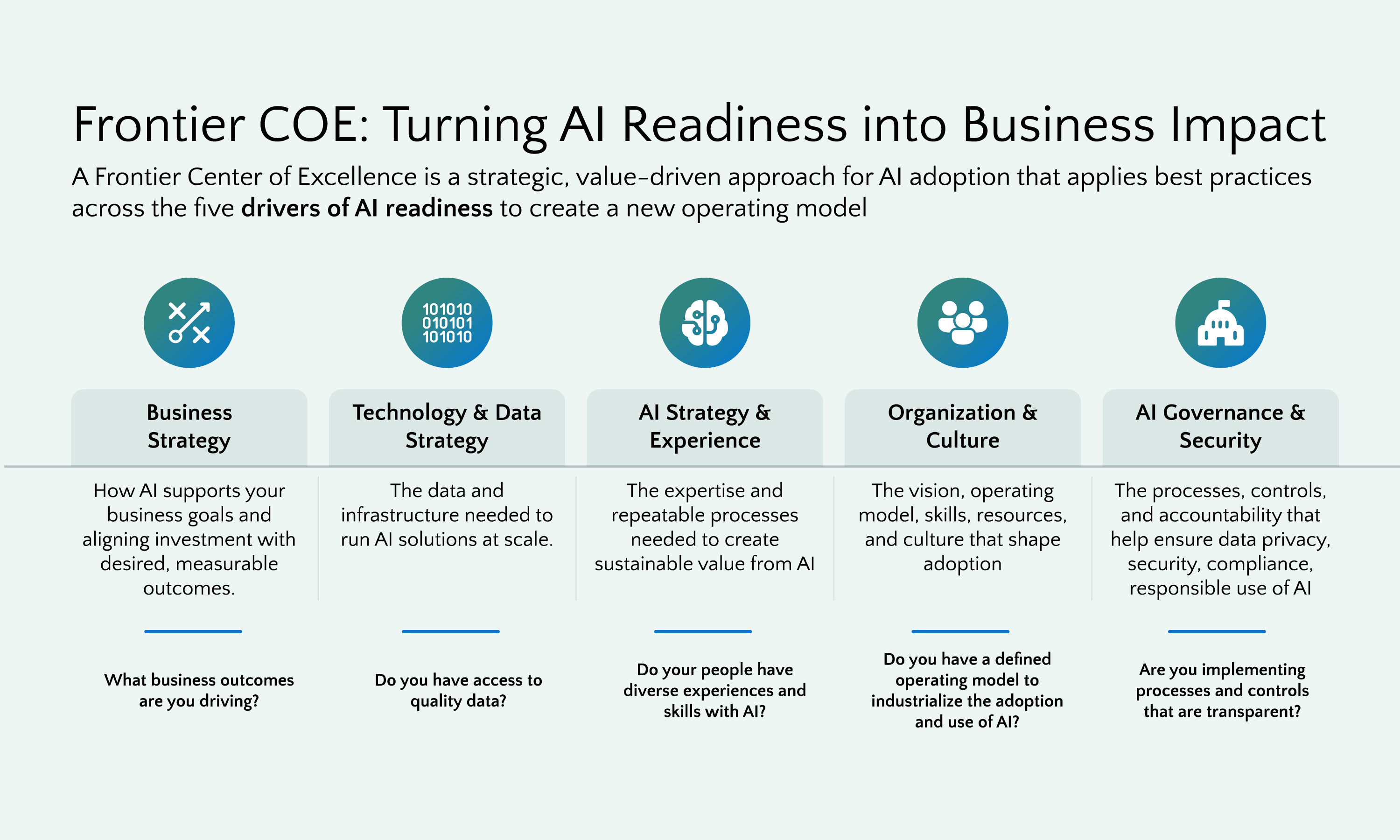

As the Microsoft diagram also reminds us, organization, culture and change management (all human-centered initiatives) are an important driver of successful AI projects, if not the most important one.

There is no culture-as-a-service, nor any out-of-the-box change-management solution that fits every situation.

Thoughtworks helps enterprises succeed with AI by focusing on the cultural and organizational shifts unique to each enterprise. Its human‑focused and individualized approach builds trust, readiness, and new ways of working, enabling teams to confidently adopt and scale AI. By pairing deep change‑management expertise with practical delivery experience and best engineering practices, Thoughtworks ensures AI delivers sustainable value.