Start up automated testing with your existing staff!

I just met the Thoughtworks global "Head of Quality"* this week, Kristan Vingrys. With a quick sketch and a 10-minute discussion, he was able to make me see automated testing more clearly than I ever had before, and I wanted to share what I heard with those of you who might be more on the management/business end of things, and less on the development/testing end. Keep in mind, there is plenty of room for slippage between "what he said" and "what I heard," so errors here should all be blamed on me. Friends, please jump in and correct what I'm getting wrong here.

First point: automated testing and continuous integration are what make those short bursts of value delivery possible, at any desired software quality level.

As a would-be Agile PM or BA, you are probably saying things to yourself like "wow, we're going to deliver ACTUAL WORKING AND TESTED CODE every two weeks! This is great!" You are thinking about your company web site, which can now have new features actually facing the market every two weeks (a dancing leprechaun! a shopping cart!), or about your internal operational software which can accommodate changing corporate needs within months rather than years.

If you're a typical BA or PM, even a sophisticated one, you may be very focused on the business rather than the development practices, and you may consider "automated testing" to be kind of an esoteric stretch goal for the team. You might consider the "development" and "testing" activities to be a sort of black box. You write some requirements, put some development and testing hours into your two-week iterations, and off you go! "Design-Develop-Test," you think. (DDT)** Easy peasy. Every two weeks you have new software that does new stuff, and is super high quality, because you're testing it before you say it's done. Woo hoo!

Okay, but what you're not thinking about is you are delivering new stuff every two weeks, instead of every eighteen months, so now, in addition to doing DDT for every new chunk of value you're delivering in your two-week iteration, you now need to do this thing called "regression" testing as well, also in every iteration, to make sure you didn't break anything. So now it's Design-Develop-Test-Regression-Test. (DDTRT) And each iteration, there's more stuff to regression test, because there's more stuff that you've completed, and therefore that much more stuff out there to break. Soon you're doing "DDTRTRTRTRTRTRTRT" in each iteration, and your team is doing very little new coding and a lot of regression testing, in order to churn out anything at all that is fully trustworthy.
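To make the "DDTRTRTRT" squeeze concrete, here is a back-of-the-envelope sketch. Every number in it is an assumption I made up for illustration (team capacity, features per iteration, hours to manually re-test one old feature), and it simplifies by pretending the team keeps delivering the same number of features each iteration. It is a toy model, not data from any real project.

```python
# Toy model only: all default values are illustrative assumptions, not measurements.
def new_work_hours(iteration, capacity_hours=400.0, features_per_iteration=5,
                   regression_hours_per_feature=4.0):
    """Hours left for new work in a given iteration (1-based) with manual regression."""
    # Everything delivered in earlier iterations has to be re-tested by hand.
    features_already_delivered = features_per_iteration * (iteration - 1)
    regression_hours = features_already_delivered * regression_hours_per_feature
    # Capacity cannot go negative; at zero the team only regression tests.
    return max(capacity_hours - regression_hours, 0.0)


for iteration in (1, 5, 10, 20):
    print(iteration, new_work_hours(iteration))
```

With these made-up numbers, the hours available for new work shrink steadily with each iteration until almost nothing is left. The exact figures don't matter; the shape of the curve does.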

So in the absence of automated testing, some of the following things will likely happen:

- You will have longer iterations, so that you don't have to go through regression testing as often

- You will do a lot more design up front, so that you don't have to risk changing old stuff as much, and you can spend less time regression testing

- You'll release what you've got infrequently to actual users, and make thorough testing a "release" activity of some length.

These are all perfectly good things to do, and they are things you SHOULD do, if you can't do automated testing. But notice that the lack of automated testing has now pushed you right back where you didn't want to be: design the whole thing up front, test the whole thing infrequently, and most likely primarily at the end, when you don't have a lot of flexibility to change things. You aren't actually getting what you wanted any more: quick bursts of value. You may still be having iterations, daily scrums, and showcases, and those things are all great, especially for morale, but what have you gained in terms of delivering business value? Are you quicker to market now?

Now, if, instead, you start from the beginning of your project with the philosophy that the majority of your code should be checked into your code repository accompanied by automated tests before it is considered to be "done," and you define the testing activity in each iteration to be "writing the automated test we will use for all time to regression test this system," then you're back to the happy world of DDT (at least until someone starts talking with you about TDD, but I'll save that for another day).

You can now define "done" for your project as "Code complete and tested," and what you will mean by that is that at the end of each iteration, the development is done and the automated tests are done, and the software passes the automated tests whenever they are run. And by the way, whenever you check the code in, you run the tests, so you know right away if anything has broken.
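For the curious, "run the tests whenever you check the code in" can be sketched in a few lines. This is a minimal illustration using Python's standard `unittest` module, not a description of any particular continuous integration tool; the names `check_in_gate` and `ExampleRegressionTest` are hypothetical ones I invented for the example.

```python
import unittest


def check_in_gate(suite):
    """Run the suite; return 0 if every test passed, 1 otherwise."""
    result = unittest.TextTestRunner(verbosity=0).run(suite)
    return 0 if result.wasSuccessful() else 1


# Hypothetical stand-in for the team's accumulated regression tests.
class ExampleRegressionTest(unittest.TestCase):
    def test_existing_behaviour_still_works(self):
        self.assertEqual(sorted([3, 1, 2]), [1, 2, 3])


suite = unittest.defaultTestLoader.loadTestsFromTestCase(ExampleRegressionTest)
exit_code = check_in_gate(suite)
print("check-in allowed" if exit_code == 0 else "check-in blocked")
```

In a real project the suite would be collected from the whole code base (for example with `unittest.defaultTestLoader.discover`), and the build server would treat a non-zero exit code as "something broke."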

Apologies if you already knew that, but I just wanted to do a little review in case it wasn't top of mind. So this brings us to:

Point 2: if your organization is new to agile, you may run into questions about who is going to do all of this automated testing. One thing that happens that I always find horrifying is that the organization goes "agile," and quickly decides to replace all of its current testers, who have done most of their work manually, with bright, shiny new "automated testers." Gah! Please don't do this. The number one value your testers bring is subject matter expertise, and the number two value is a sneaky (even cruel) mind-frame: these people are expert at figuring out how to break things before your users do. These are the people who stop you from having to be paged on the weekend. It seems unlikely to me that you really want to get rid of these trustworthy allies in favor of a team composed 100% of young college graduates who have been trained on QTP.*** As a Thoughtworker, I am compelled to suggest that you should be using Twist, not QTP, anyway.

So how do you keep your existing staff, but still gain the benefits of automated testing? I'm going to focus on two types of automated testing which will buy you a lot, in terms of your development process. I'm not going to talk about data warehouses, load testing, security testing, and so on, although all of these can also be partially or fully automated. Right now, I want to talk about unit testing and functional testing.

Unit Testing

In most agile teams, and even on many traditional teams I have seen, "unit" testing is traditionally done by the developers themselves. This is not cheating--this is just a sensible, normal thing to do. The devs write a unit of software, and they don't consider it done until it is accompanied by a unit test which is also checked into the source code repository and included in the test harness to be run at every check-in. So unit test automation is going to be a matter of working with your development team to include unit tests in the definition of their job. Since the unit tests are typically written in the same programming language that you use to write the actual software, this shouldn't be an especially tough burden, but sometimes developers don't think it's their job. A lot of times, developers actually do like the additional rigor though, so don't be disheartened.
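If you have never seen one, here is roughly what "a unit of software accompanied by a unit test" looks like. This is only a sketch: `cart_total` is a hypothetical function I invented for the example, and I'm using Python's built-in `unittest` module as a stand-in for whatever test framework your developers prefer.

```python
import unittest


# Hypothetical unit of production code.
def cart_total(prices, discount_percent=0):
    """Sum the item prices and apply a percentage discount."""
    if not 0 <= discount_percent <= 100:
        raise ValueError("discount_percent must be between 0 and 100")
    subtotal = sum(prices)
    return round(subtotal * (100 - discount_percent) / 100, 2)


# The unit test that gets checked in alongside the code above.
class CartTotalTest(unittest.TestCase):
    def test_sums_prices(self):
        self.assertEqual(cart_total([10.00, 5.50]), 15.50)

    def test_applies_discount(self):
        self.assertEqual(cart_total([100.00], discount_percent=25), 75.00)

    def test_rejects_invalid_discount(self):
        with self.assertRaises(ValueError):
            cart_total([10.00], discount_percent=150)
```

Note that the test is written in the same language as the code it checks, which is why this tends to be a natural extension of the developer's job.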

But what about functional testing, where you check to see that when you put all of the little units of software together, the program does something that your actual business people can see, recognize, and sign off on? Can functional testing be automated? And if so, who is going to write those complicated programs to simulate actual users using the web page or whatnot? Wouldn't that be super tricky? Shouldn't you just manually test and forget about it? No! Go back to point 1. You want to automate it, and you don't want to lay off all of your current testers to get there.

Functional Testing

This is where Kristan's diagram comes in, radiant, like the way the Rosetta Stone is illuminated in the British Museum:

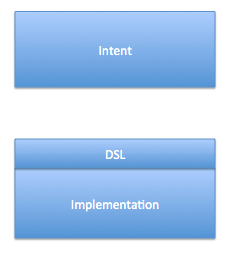

Your automated functional test suite conceptually should be composed of a set of tests which have two parts: an "intention" (recorded in the top box) and an "implementation" (expressed in a software language in the bottom box). The implementation might include a bit of "domain-specific language" (DSL), and most likely has some other stuff in it which is written in a language like Java, Ruby, or a .NET language such as C#.

Just as in the days of manual testing, a test plan can be fully specified in text, although in this case it doesn't live in a spreadsheet--it lives in the "intention" section of the actual test as a sort of comment. The intention would be words written in vernacular prose and stored forever in the top "intention" box. Functional test intention could be 100% supplied by your current BAs and testers, even if they've never written a single line of code.

- BAs can help out by expressing specifically what the piece of software is supposed to do functionally.

- Testers can then focus on tweaking those tests and adding "edge cases."

Meanwhile, developers on the team can be responsible for writing the test implementation, treating the BAs and testers in the group as their clients--does the automated test work the way you expect it to? Does it test the right thing?

Each test has both parts: intention AND implementation. Your automated test suite runs the tests in a sequence that makes sense, and lets you know whether your software is behaving the way it should. The part that runs is the "implementation," and that is something that needs to be written by a person with programming skills. However, for practical purposes, you can divide the test-writing activity into "intention writing" and "implementation writing," and exploit the exact current skills your team members have today.
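Here is one way the intention/implementation split might look in practice. This is my own sketch of the idea, not Kristan's diagram turned into code and not the format of any particular tool: `ShoppingSite` is a hypothetical stand-in for the application under test, and the convention of storing the intention in the test's docstring is an assumption I chose for the example.

```python
import inspect


# Hypothetical stand-in for the real application under test.
class ShoppingSite:
    def __init__(self):
        self.cart = []

    def add_to_cart(self, item, price):
        self.cart.append((item, price))

    def checkout(self):
        return {"items": len(self.cart),
                "total": sum(price for _, price in self.cart)}


def test_customer_can_buy_two_items():
    """
    INTENTION (written by a BA):
    Given a customer with an empty cart,
    when they add a hat for 20 and a scarf for 15 and check out,
    then the order shows 2 items and a total of 35.
    """
    # IMPLEMENTATION (written by a developer)
    site = ShoppingSite()
    site.add_to_cart("hat", 20)
    site.add_to_cart("scarf", 15)
    assert site.checkout() == {"items": 2, "total": 35}


def test_empty_cart_checks_out_with_zero_total():
    """
    INTENTION (edge case added by a tester):
    Given a customer with an empty cart,
    when they check out without adding anything,
    then the order shows 0 items and a total of 0.
    """
    # IMPLEMENTATION (written by a developer)
    assert ShoppingSite().checkout() == {"items": 0, "total": 0}


def intentions(tests):
    """Collect the plain-language intentions so non-programmers can review them."""
    return {test.__name__: inspect.cleandoc(test.__doc__) for test in tests}
```

The BAs and testers own the words in the docstrings; the developers own the lines underneath. A helper like `intentions` lets the business side read the whole test plan without ever opening the code.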

And then:

The desired end state is one where the whole team is composed of people who can help each other out. Often, once you have a certain number of tests developed, that code base can serve as templates for testers to get involved in writing actual DSL or automated test code themselves. Over time, your testers will likely become quite proficient automated functional test-writers. Developers can still help out as needed, and in a pinch, you can call on your testers to do actual development.

Another colleague of mine, Chris Turner, says that an even better "ultimate" end state for the automated functional tests is one where the functional tests are written in a DIFFERENT language from the code base, because it removes everyone's ability to duplicate the "real" code and make it the test. Someone literally needs to express the test in a different language, and this can be a disincentive to lazy test coding.

To get to this state, one or both of two things need to happen to some degree: the testers need to really own the test code, without leaning too heavily on the developers to help with it, and/or the developers need to be "polyglots" who can help testers out in a different language than the one they code in on a day-to-day basis. It's interesting to think about.

Moral: you can keep your current staff, if they are awesome people, and you can all move to automated, test-driven agile development, along a fairly easy ramp-up path, all other things being equal (which of course they won't be). Your end state can be the highly desirable one where the whole team is working together, and nobody is saying things like "that's not my job." Your testers can stay, right along with your BAs. It's a great day!

**Indeed, "DDT" is a poison which is causing chromosomal damage to fish all over the world, and not a technique you should brag about following to anyone in the real world.

***Final aside: QTP is often used as the example of "bad functional test suite," partially because it uses "record and playback" techniques. Indeed, functional tests need to be actually written in code so that you can "refactor" the functional tests right along with "refactoring" the code base. If you have to re-record your test for every iteration, your functional test suite itself puts you back into the nightmare DDTRTRTRTRTRT world. If you're just starting out with a tool, you should definitely accept the opportunity to have the tool "record" what's going on in the application, but as you become more familiar with the technology, you will want to clean up what you get in the recording, and for any functional test you'll keep in the permanent code base, you want it to be written intentionally by the team.

This post is from Pragmatic Agilist by Elena Yatzeck.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.