With the constant pressure software engineers face to deliver new functionality, it can be hard to keep up with regular dependency and framework upgrades. Tools like Dependabot and Renovate have been doing a great job of letting teams know when there’s a new version of a particular package. However, when that new version introduces breaking changes, the team will inevitably have to spend time understanding the potential impact, resolving the breaking changes and then validating that those changes haven’t caused any unexpected failures.

Taking .NET version upgrades as an example, teams that follow the long-term support (LTS) cycle need to move to the next LTS release at least once every three years, when their current version reaches end of support (a new LTS version ships every two years). This involves updating all the Microsoft packages and, more often than not, any third-party packages that have been upgraded to support the new .NET version. Each of those package changes can introduce compile-time and potentially runtime breaking changes that need to be resolved. For small components that use stable functionality this is typically relatively trivial; for larger components that depend on niche or preview functionality, however, resolving the breaking changes can be a time-consuming task.

Upgrades like these can often take months, during which teams may need to pause new feature development to focus solely on framework updates. It’s no surprise, then, that with the emergence of agentic coding assistants, teams are beginning to explore how GenAI might automate and accelerate this process. It can be tempting to ask an LLM to perform the entire upgrade in one go and hope for the best. That might work for small components, but for more complex solutions it will likely produce an incomplete upgrade at best and, at worst, a mess of confusing changes with no clear connection to the original problem.

Can AI help?

Our team set out to experiment with a variety of tools to determine the best way to use GenAI to assist with framework upgrades. After trying all the major AI IDEs and extensions, we found that blending deterministic tooling with non-deterministic AI tools yields the best results.

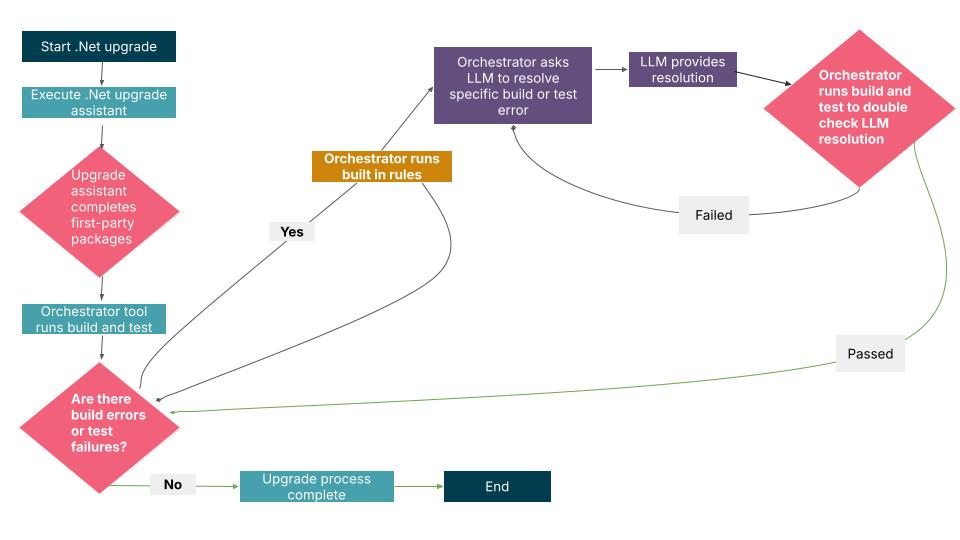

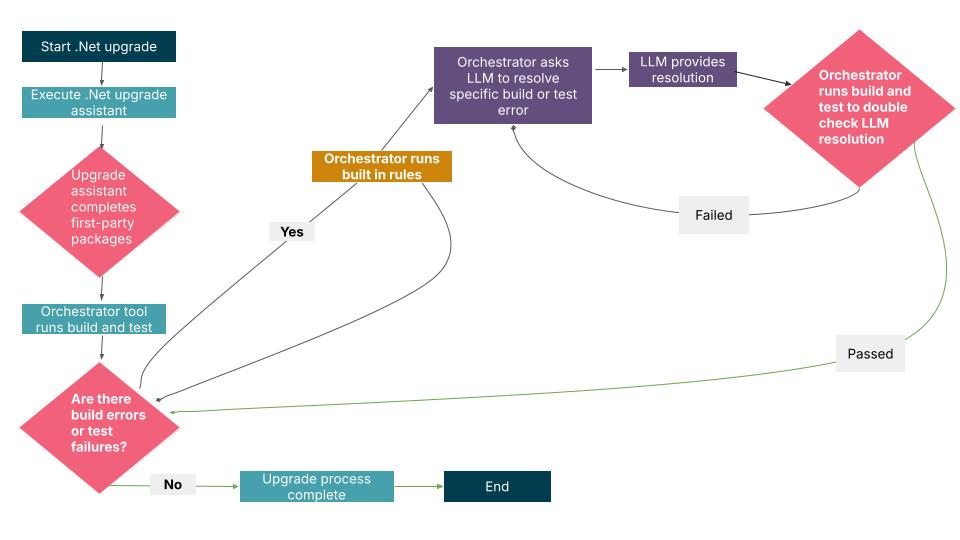

Let’s return to the .NET version upgrade example. The upgrade process should start by running the official .NET Upgrade Assistant against every existing project in the solution. While it doesn’t handle third-party package upgrades, it can at least be relied on to update the target framework version and Microsoft’s first-party packages.

What comes next proved to be the most interesting challenge. We asked a variety of tools and models to perform the next step of the upgrade process to see how far they could go without strict guidance. For trivial components, the LLM was able to complete the remaining steps by following an iterative agentic loop. For more complex components, some tools would give up after a few cycles and ask the human for advice on next steps. Other tools failed outright, either returning errors or getting stuck in an endless hallucination loop.

Experimenting with different prompts

Falling back on best practices for working with LLMs, we experimented with different prompts, narrowing the scope of the task and encouraging the model to follow a strategic, checklist-based approach. We iterated further on the prompt, adding tips on how to address certain breaking changes and how to reliably run the .NET build and test steps, so that the model considered the right feedback when reasoning about its next steps. This got us further with more complex codebases, but the AI still struggled with much larger ones.

The value of orchestration

We concluded that while an LLM is capable of performing the subsequent upgrade steps and resolving the breaking changes introduced by the upgrade, it cannot be trusted to perform all the steps autonomously. The approach that has yielded the greatest success so far was to build a tool that orchestrates the LLM invocations, strategically asking it to resolve one build error or test failure at a time. That way, each task it is given is bounded and clear, leaving little room for hallucinations to send it off track. Once the LLM reports that it has resolved a specific error, the orchestrator checks independently and, if necessary, asks it to try again.
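The loop described above can be sketched in a few lines. This is a minimal Python illustration under stated assumptions, not the authors’ tool: `Diagnostic`, `collect_errors` and `ask_llm_to_fix` are hypothetical names. In a real orchestrator, `collect_errors` would run the .NET build or tests and parse their output, and `ask_llm_to_fix` would wrap whatever LLM or agent is in use.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Diagnostic:
    """One build error or test failure (hypothetical shape; line numbers omitted on purpose)."""
    code: str
    file: str
    message: str


def orchestrate(
    collect_errors: Callable[[], list[Diagnostic]],
    ask_llm_to_fix: Callable[[Diagnostic], None],
    max_attempts_per_error: int = 3,
    max_rounds: int = 200,
) -> list[Diagnostic]:
    """Ask the LLM to fix one diagnostic at a time and verify each fix independently.

    Returns the diagnostics still reported at the end, for a human to pick up.
    """
    gave_up: set[Diagnostic] = set()
    for _round in range(max_rounds):
        pending = [d for d in collect_errors() if d not in gave_up]
        if not pending:
            break
        target = pending[0]  # a single, bounded task for the LLM
        for _attempt in range(max_attempts_per_error):
            ask_llm_to_fix(target)
            # Trust the build output, not the LLM's claim that it is done.
            if target not in collect_errors():
                break
        else:
            # Attempts exhausted: stop retrying this one and move on.
            gave_up.add(target)
    return sorted(collect_errors(), key=lambda d: (d.file, d.code, d.message))
```

The key design choice is that success is decided by re-running the build rather than by the LLM’s own claim, and that both per-error attempts and total rounds are capped so the loop cannot spin forever. `Diagnostic` deliberately leaves out line numbers so that an error is still recognised as the same error when an edit shifts the code around it.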

In the case of .NET upgrades, there is a wealth of existing tooling that can analyse .NET build and test errors. It can be used to keep the LLM focused on one specific error, and then to confirm that the LLM has actually resolved it.
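To keep the LLM focused on a single error, the orchestrator first needs structured diagnostics. MSBuild’s classic console logger prints them in a well-known `file(line,col): error CODE: message [project]` shape, and project-level diagnostics such as NuGet warnings omit the `(line,col)` part. The Python sketch below assumes that format only (other loggers, or MSBuild’s binary log, would need different handling); `BuildDiagnostic` and `parse_build_output` are hypothetical names.

```python
import re
from dataclasses import dataclass
from typing import Optional

# file(line,col): severity CODE: message [project]; the (line,col) part is optional
_DIAGNOSTIC = re.compile(
    r"^\s*(?P<file>.+?)(?:\((?P<line>\d+),(?P<col>\d+)\))?\s*: "
    r"(?P<severity>error|warning) (?P<code>[A-Z]+\d+): "
    r"(?P<message>.*?)(?: \[(?P<project>[^\]]+)\])?$"
)


@dataclass(frozen=True)
class BuildDiagnostic:
    file: str
    line: Optional[int]
    severity: str
    code: str
    message: str
    project: Optional[str]


def parse_build_output(text: str) -> list[BuildDiagnostic]:
    """Extract diagnostics from build output, dropping the repeats in MSBuild's summary."""
    found: dict[BuildDiagnostic, None] = {}
    for raw in text.splitlines():
        m = _DIAGNOSTIC.match(raw)
        if m is None:
            continue  # progress lines, "Build FAILED.", etc.
        diag = BuildDiagnostic(
            file=m["file"],
            line=int(m["line"]) if m["line"] else None,
            severity=m["severity"],
            code=m["code"],
            message=m["message"],
            project=m["project"],
        )
        found.setdefault(diag, None)  # a dict keeps first-seen order
    return list(found)
```

The orchestrator can then pick the first entry with `severity == "error"` as its next target, and re-parse the output after each fix to confirm that exact diagnostic has disappeared.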

Another benefit of the orchestrator is that it can include built-in rules that deterministically resolve specific build errors with known fixes. The less we rely on the LLM to resolve known errors, the quicker and more reliable the upgrade process becomes.
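Built-in rules can sit in front of the LLM as a simple lookup keyed by error code. In this minimal sketch (all names hypothetical: `RULES`, `rule`, `resolve`), a handler registered for a code gets the first chance to fix it, and the LLM is only invoked when no rule exists or the rule declines.

```python
from typing import Callable, Optional

# Hypothetical rule signature: given an error code and message, apply a known
# fix and return True, or return False if the rule does not apply after all.
Rule = Callable[[str, str], bool]

RULES: dict[str, Rule] = {}


def rule(*codes: str) -> Callable[[Rule], Rule]:
    """Register a deterministic handler for one or more error codes."""
    def register(fn: Rule) -> Rule:
        for code in codes:
            RULES[code] = fn
        return fn
    return register


def resolve(code: str, message: str, ask_llm: Callable[[str, str], None]) -> str:
    """Try a built-in rule first; only fall back to the LLM when none applies."""
    handler: Optional[Rule] = RULES.get(code)
    if handler is not None and handler(code, message):
        return "rule"
    ask_llm(code, message)
    return "llm"
```

Returning which path was taken makes it easy to log how often the LLM is still needed, which in turn shows where a new rule would pay off.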

One example rule resolves a package vulnerability by upgrading the affected package to its latest version. The rule knows the specific error codes, deterministically identifies the impacted package and updates it using the .NET CLI. In theory the LLM could perform these steps too, but it would be slower and more expensive, and its solution could not be guaranteed to be the same every time.
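A rule of that kind might look like the sketch below. It assumes the vulnerability is reported through NuGet Audit, which uses codes NU1901 to NU1904 for low to critical severity, and it assumes message wording of the form `Package 'Id' Version has a known ... vulnerability`; both should be checked against the SDK in use. `vulnerable_package_fix` and `apply_fix` are hypothetical names. Running `dotnet add <project> package <id>` without `--version` references the latest stable version of the package.

```python
import re
import subprocess
from typing import Optional

# NuGet Audit reports known vulnerabilities as NU1901-NU1904 (low to critical).
VULNERABILITY_CODES = {"NU1901", "NU1902", "NU1903", "NU1904"}

# Assumed message shape: "Package 'Some.Package' 1.2.3 has a known high severity vulnerability, <url>"
_PACKAGE = re.compile(r"Package '(?P<id>[^']+)' (?P<version>\S+) has a known")


def vulnerable_package_fix(code: str, message: str, project: str) -> Optional[list[str]]:
    """Build the dotnet CLI command that moves the flagged package to its latest version."""
    if code not in VULNERABILITY_CODES:
        return None
    match = _PACKAGE.search(message)
    if match is None:
        return None  # unrecognised wording: leave it for the LLM
    # Omitting --version lets `dotnet add package` pick the latest stable release.
    return ["dotnet", "add", project, "package", match["id"]]


def apply_fix(command: list[str]) -> bool:
    """Run the command; a zero exit code means the reference was updated."""
    return subprocess.run(command, check=False).returncode == 0
```

Because the command is constructed rather than generated, the same diagnostic always yields the same fix. For a transitive dependency this adds a direct package reference, which is a common way to force a patched version.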

The most fascinating lesson from this experience is that the LLM used inside the orchestrator is the same LLM that built the orchestrator. We used the LLM to help design and build a CLI tool that strategically invokes the LLM itself, along with other .NET tooling, to perform a .NET upgrade. The LLM then helped test the tool against real, complex codebases, iterate on it and expand its functionality. The tool can continue to evolve as new edge cases are identified, and its built-in rules can keep growing to reduce reliance on the LLM.

Final thoughts

While the upgrade tool is not guaranteed to handle every potential breaking change, and often produces different results on each run against the same codebase, it performs the upgrade faster than a human can. It can therefore play a very useful role in helping organizations keep their software up to date and compliant.

When exploring how GenAI can help accelerate framework upgrades, first consider how far the existing tooling in the ecosystem can take the upgrade, then look at how to strategically use an LLM for the steps that can’t be performed deterministically. We can’t always expect the LLM to perform the entire upgrade on its own; not yet, at least.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.