Financial services newsletter

By Omar Bashir, principal financial services and Prashant Gandhi, principal financial services

Increasing volumes of securities trading, largely due to electronic and High-Frequency Trading (HFT), are challenging post-trade operations. Regulations require trading counterparties to match and confirm their trades as soon as possible after trade execution. This reduces the clearing and settlement risks that arise from trading errors. But without due consideration to technological efficiencies, scaling systems to process escalating trading volumes within the regulatory deadlines is financially unsustainable both on-premises and in the cloud. The flexibility and elasticity of the cloud, when coupled with fine-grained scalability and processing efficiencies, offer cost optimisation opportunities for post-trade processing businesses. This leads to higher margins which are sustainable with business growth.

Introduction

Post-trade processing consists of a series of operations performed after trade execution to ensure timely trade settlement and dispute free collateral management. Given the diverse universe of financial products and the various market participants involved, post-trade processing tends to be complex and expensive, resulting in over $20 billion annual spend.

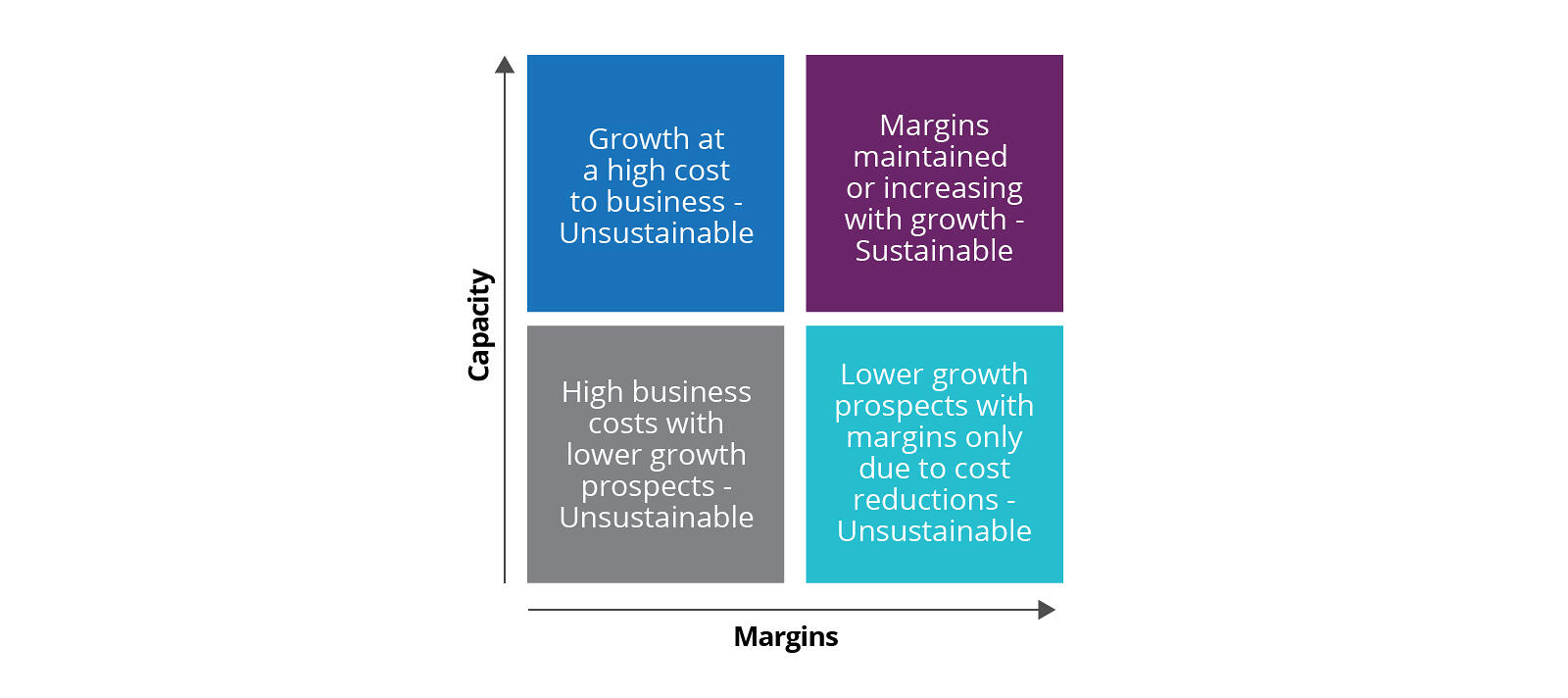

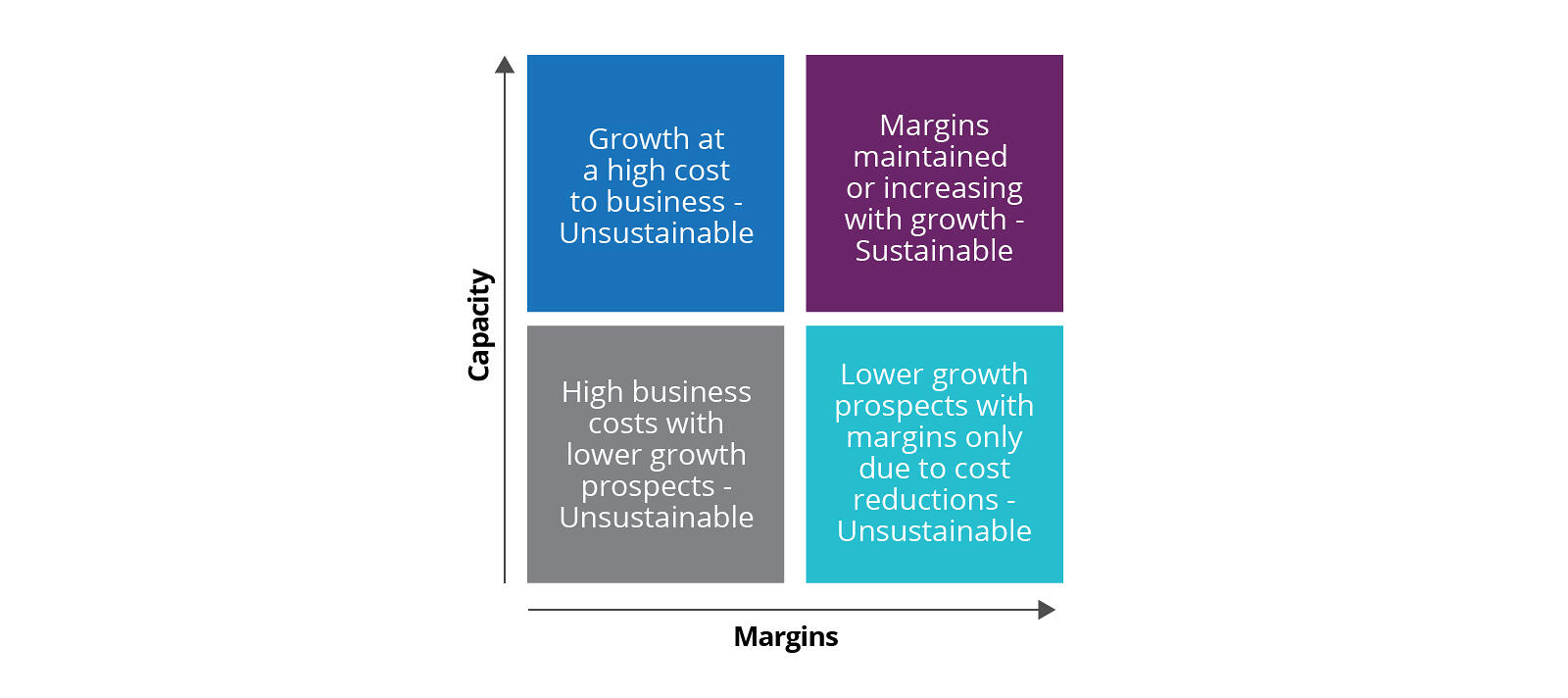

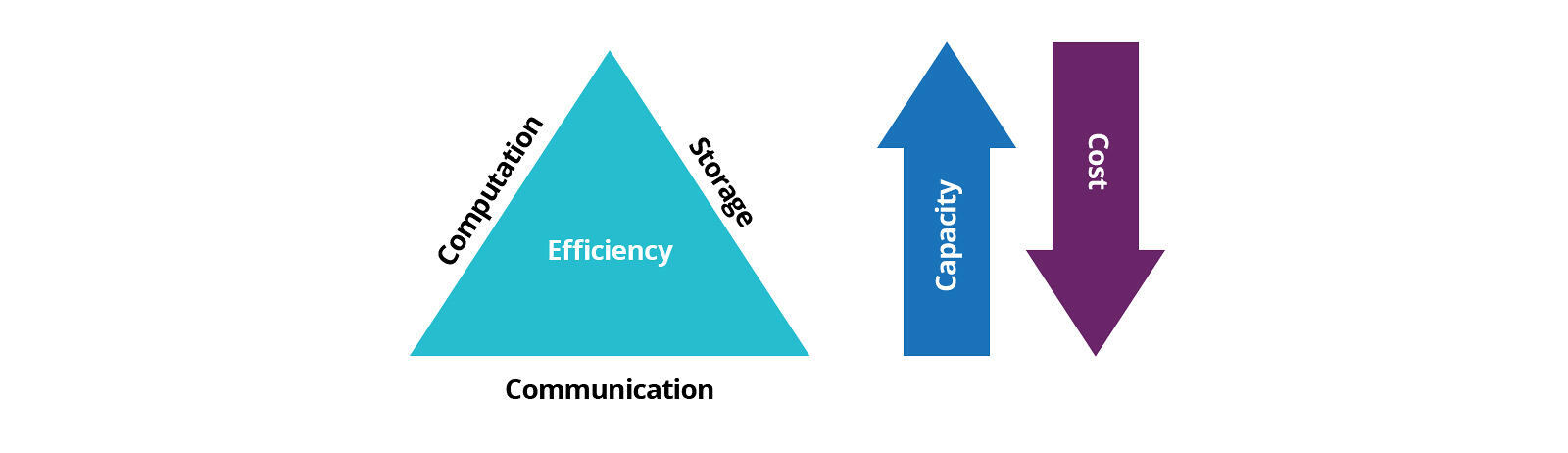

Most market participants employ third-party intermediaries to perform post-trade processing on their behalf to reduce their costs and the complexity of their infrastructure. However, financial sustainability of these businesses is challenging in the face of escalating trade volumes. Most firms scale their infrastructure to increase their processing capacity. As they scale, firms must aim to maintain or grow margins. Without driving margins, business growth is achieved at an unsustainable cost and at the risk of future stagnation. Alternatively, simply reducing cost without increasing capacity is myopic and will only sustain margins temporarily. The tradeoff here is lost business opportunities and eventual stagnation.

Rising demand

Faster and more efficient trading technology and practices, such as HFT, have led to substantially increased trading volumes over the years. For example, US equity trading volumes alone have increased on average by 4.35% per year in the last 5 years to over 9 billion trades in 2019 (as published by Cboe). Overall, exchange-traded derivatives volumes rose by 15% in 2019 compared with 2018, to over 33 billion contracts.

This places significant stress on any post-trade processing infrastructure. Since the financial crisis of 2008, various regulatory bodies, such as the SEC (Securities and Exchange Commission) and the CFTC (Commodity Futures Trading Commission), have increasingly insisted on electronic Straight Through Processing (STP) of trades all the way to clearing, settlement and regulatory reporting. Reporting, matching and confirmation of electronically booked trades have to be performed within 15 minutes, and within 24 hours for all other trades. As a result, errors are detected early, risks are reduced substantially and settlements are achieved within the regulatory settlement cycles. For the SEC, the current regulatory settlement cycle is T+2, i.e. 2 days from the trade execution date.

While matching, confirmations and affirmations are not expected to be performed in real time, meeting regulatory deadlines with excessive and escalating trading volumes will be increasingly challenging, especially if existing implementations do not have performance as a key consideration.

With real-time processing not a requirement for post-trade operations, the primary performance metric for post-trade technology is system throughput. Throughput indicates the capacity of a system and is defined as the number of tasks completed in a unit interval of time. While scaling a system horizontally increases its capacity, it is suboptimal and expensive without considering the system’s response time. A better strategy for scaling is to also lower the response time. This increases the capacity of the system whilst reducing the scaling requirements and cost.
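The link between response time and scaling requirements can be illustrated with Little's law (average tasks in flight = throughput × response time). The sketch below is a simplified model rather than a sizing method: the function name, the worker counts and the traffic figures are all hypothetical, and it assumes each in-flight trade occupies one worker for its full response time.

```python
import math


def instances_needed(target_throughput: int, response_time_ms: int,
                     workers_per_instance: int) -> int:
    """Instances required to sustain a target throughput (tasks per second).

    By Little's law, the average number of tasks in flight equals
    throughput multiplied by response time. Each in-flight task is
    assumed to hold one worker until it completes.
    """
    in_flight = target_throughput * response_time_ms / 1000
    return math.ceil(in_flight / workers_per_instance)


# Hypothetical figures: 5,000 trades per second, 16 workers per instance.
baseline = instances_needed(5_000, response_time_ms=40, workers_per_instance=16)
optimised = instances_needed(5_000, response_time_ms=10, workers_per_instance=16)

print(f"instances at 40 ms response time: {baseline}")
print(f"instances at 10 ms response time: {optimised}")
```

Under these assumptions, cutting the response time reduces the number of instances needed for the same throughput, which is the sense in which lower response time reduces the scaling requirement.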

Horizontal scaling

Firms facing capacity challenges often attempt scaling at any cost and then struggle to contain it. They fail to address efficiency issues in the underlying implementation, adding further technical debt. This cycle is repeated with each scaling event which ultimately leads to an expensive tech estate that is complex for refactoring or redesign.

Many firms assume that the elasticity of the cloud will help them manage costs. Unfortunately, cloud migrations based solely on this premise and without the necessary optimisations will deliver disappointing financial results.

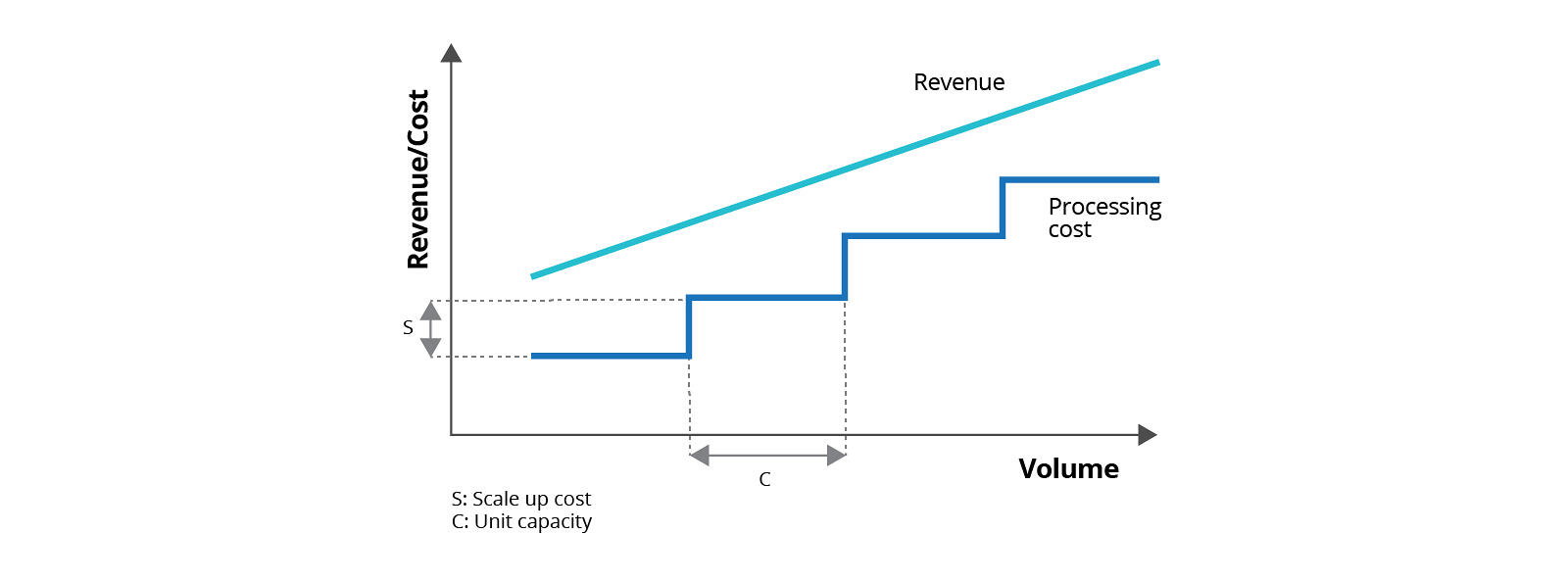

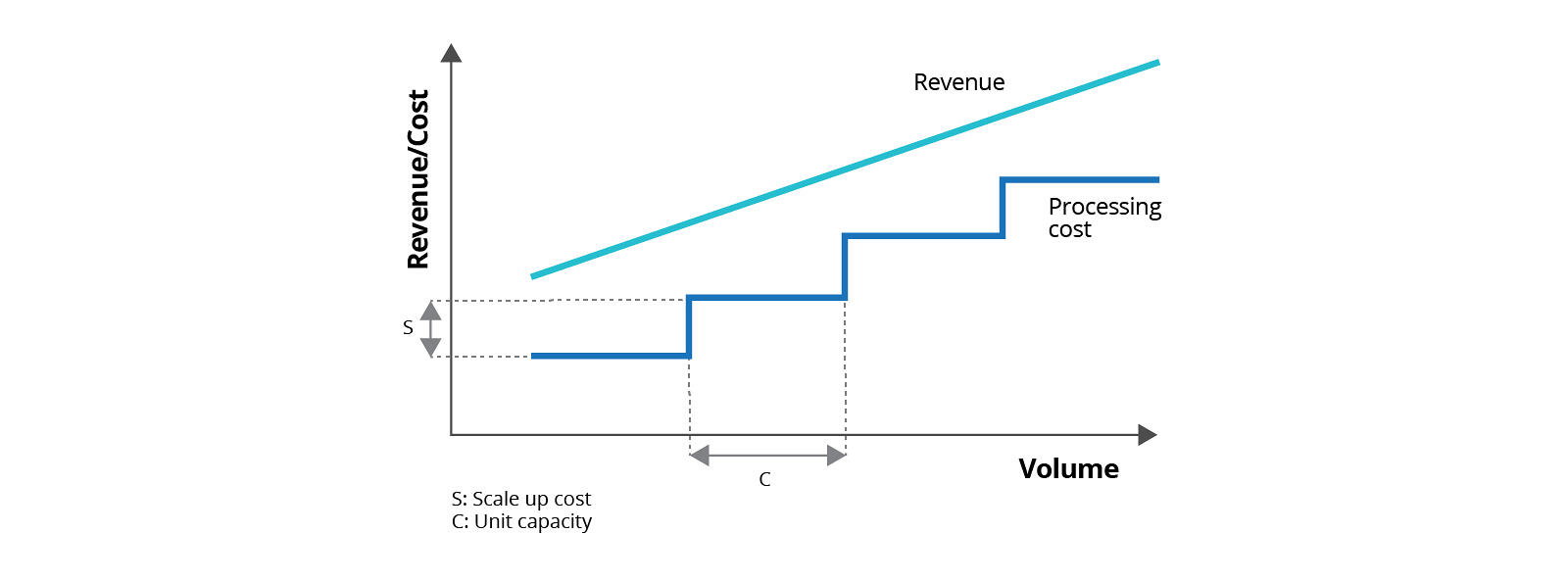

To illustrate, the following figure shows a simple coarse-grained scaling scenario common with monolithic systems. Revenues increase in proportion to the volumes processed and when the system reaches its capacity threshold, it is scaled horizontally, leading to a step-change in the cost. For simplicity, assume that this cost remains constant for each instance of the system.

Profit margins can be managed as long as the incremental cost of adding a unit capacity is less than the corresponding increase in revenue. To meet the increasing volumes sustainably, the capacity has to be increased with efficient processing and the costs reduced through fine-grained scalable microservices architecture.

Higher capacity through higher resource usage without the processing efficiencies is inefficient and expensive. For example, higher capacity can be achieved by increasing the number of CPUs and the amount of memory. The resulting scale-up cost negates any benefit of the increase in unit capacity. Subsequent horizontal scaling will continue to be more expensive, eroding margins further.
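The effect of step-change costs on margins can be sketched with a toy model. Every number below is hypothetical, and the model assumes revenue is strictly proportional to volume, each unit of capacity has a constant cost, and volume is greater than zero.

```python
import math


def margin(volume: int, revenue_per_trade: float,
           unit_capacity: int, unit_cost: float) -> float:
    """Profit margin when capacity is bought in whole units (a step cost)."""
    units = max(1, math.ceil(volume / unit_capacity))
    revenue = volume * revenue_per_trade
    return (revenue - units * unit_cost) / revenue


# Hypothetical figures: volume has just crossed the capacity of one large unit.
VOLUME = 1_100_000          # trades per day
REVENUE_PER_TRADE = 0.001   # currency units per trade

# Coarse-grained: one monolith instance handles 1,000,000 trades and costs 600.
coarse = margin(VOLUME, REVENUE_PER_TRADE, unit_capacity=1_000_000, unit_cost=600)
# Fine-grained: same cost per trade of capacity, bought in steps ten times smaller.
fine = margin(VOLUME, REVENUE_PER_TRADE, unit_capacity=100_000, unit_cost=60)

print(f"coarse-grained margin: {coarse:.1%}")
print(f"fine-grained margin:   {fine:.1%}")
```

In this scenario the coarse-grained system pays for a second full instance that is almost entirely idle, while the fine-grained system pays only for the capacity step it actually crossed.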

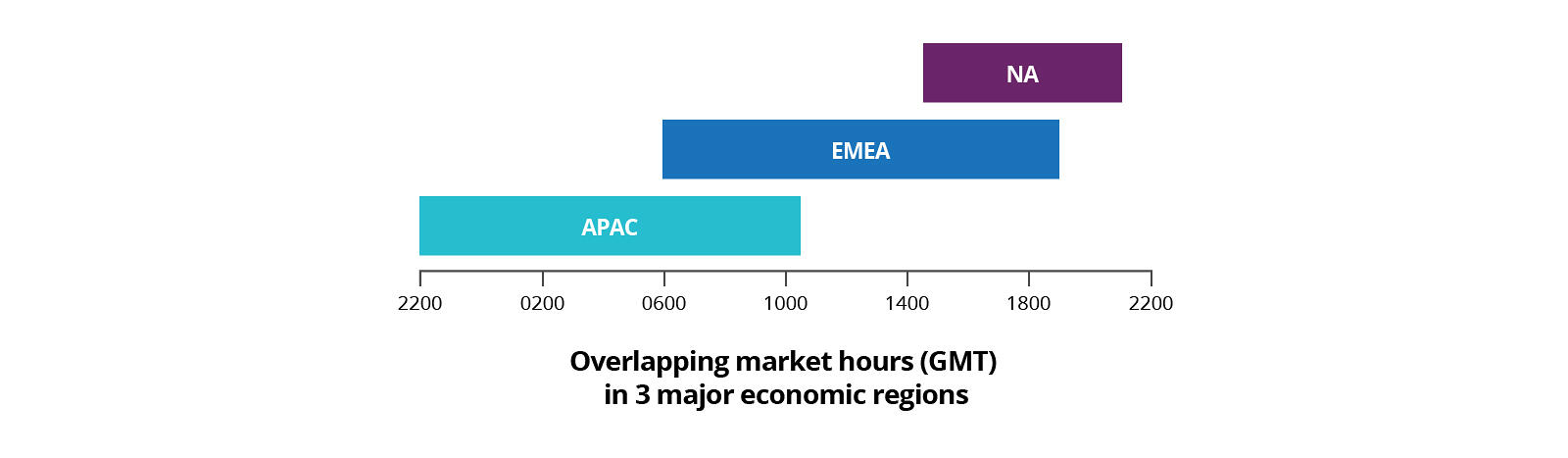

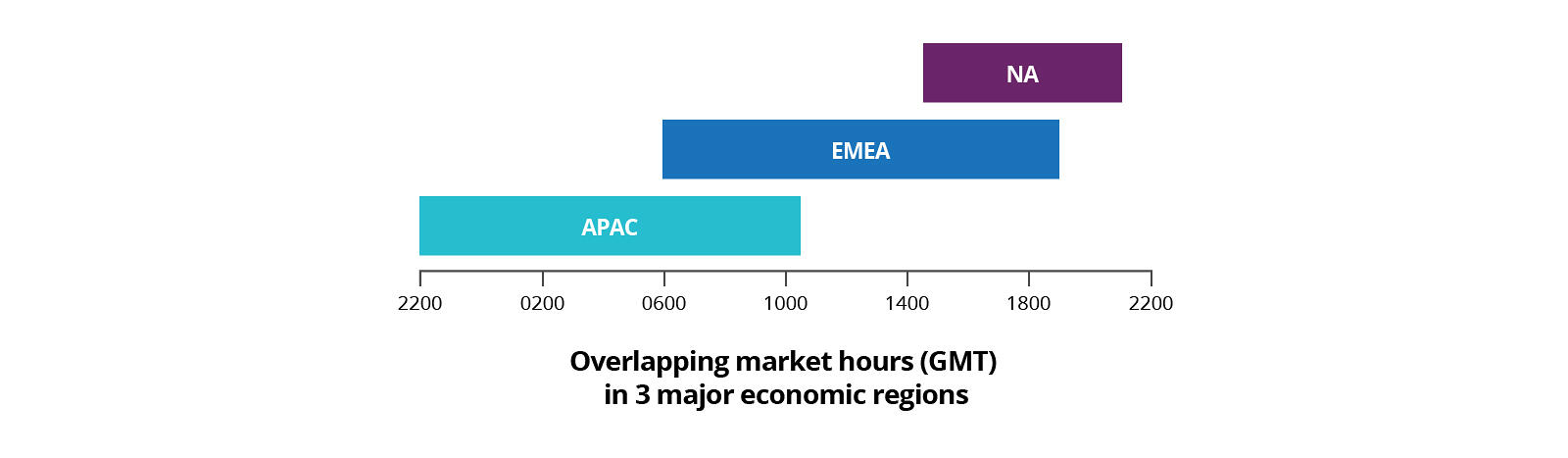

It is tempting to think of peak demand as the ideal capacity. However, this would lead to an expensive infrastructure where much of the capacity remains idle during the day. Regional post-trade systems are in peak demand during market hours when trading is in full swing. Third-party post-trade processing venues that cover global markets will see peak demand at multiple times during the day where market hours in different regions overlap. Hence, post-trade technology should be implemented on a cloud-native platform that provides the elastic capacity to accommodate cyclical demands economically.
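A rough comparison of provisioning for peak against elastic capacity is sketched below. The hourly demand profile and the per-instance capacity are invented for illustration, and the model assumes instances can be added or removed at hourly granularity with no warm-up cost.

```python
import math

# Hypothetical demand (trades per second) for each hour of a day at a venue
# covering several regions, with peaks where market hours overlap.
HOURLY_DEMAND = [200, 200, 300, 800, 1500, 1500, 900, 600, 1400, 2000, 2000, 1200,
                 700, 1600, 2400, 2400, 1800, 900, 400, 300, 200, 200, 200, 200]

CAPACITY_PER_INSTANCE = 400  # trades per second per instance (assumption)


def instances_for(demand: int) -> int:
    """Whole instances needed to serve a demand level, with a minimum of one."""
    return max(1, math.ceil(demand / CAPACITY_PER_INSTANCE))


# Fixed capacity sized for the busiest hour and kept running all day.
peak_instance_hours = instances_for(max(HOURLY_DEMAND)) * len(HOURLY_DEMAND)
# Elastic capacity resized every hour to follow demand.
elastic_instance_hours = sum(instances_for(d) for d in HOURLY_DEMAND)

print(f"provisioned for peak: {peak_instance_hours} instance-hours")
print(f"elastic:              {elastic_instance_hours} instance-hours")
```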

Using the cloud to drive down scale-up costs

Post-trade processing businesses can create sustainable margins by developing the capability to rapidly scale on public cloud-native platforms to accommodate fluctuating trading volumes. A cloud-native platform allows them to balance the unit capacity and scale-up cost through the following architectural strategies.

Granular control: Developing a platform on a microservices-based architecture allows granular control of costs by scaling only the bottlenecked services. This granularity comes at the cost of increased internal communication overheads, which need to be factored in when designing the platform.

Leverage usage profile: Public cloud providers extend pricing options and promotions that customers can leverage based on their usage profiles to reduce their costs. For example, on AWS (Amazon Web Services), customers can reserve capacity (processing and/or storage) for long term usage, resulting in substantial savings. They can even sell their unused reserved compute instances on AWS EC2 Reserved Instance Marketplace. For known usage patterns, computing resources can be scheduled to operate, again at a lower price. AWS also provides a spot pricing model for computing resources, where prices vary according to supply and demand. Based on their usage profile, post-trade processing providers can build a fleet of reserved, on-demand and spot compute and storage infrastructure on which their applications can scale while optimising their cost as well.
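The idea of blending reserved, on-demand and spot capacity can be sketched as a simple cost model. The rates below are illustrative placeholders, not actual AWS prices, and the names `Rates` and `daily_cost` are hypothetical. The model assumes reserved capacity is paid for every hour whether used or not, and that a fixed fraction of the burst above the reserved baseline can tolerate spot interruptions.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Rates:
    """Price per instance-hour for each purchasing option (illustrative only)."""
    on_demand: float
    reserved: float
    spot: float


def daily_cost(hourly_instances: Sequence[int], reserved_count: int,
               spot_fraction: float, rates: Rates) -> float:
    """Cost of serving an hourly instance requirement with a mixed fleet."""
    # Reserved instances are billed for every hour, used or not.
    cost = reserved_count * rates.reserved * len(hourly_instances)
    for needed in hourly_instances:
        burst = max(0, needed - reserved_count)
        spot = int(burst * spot_fraction)   # interruptible share of the burst
        cost += spot * rates.spot + (burst - spot) * rates.on_demand
    return cost


# Hypothetical profile: quiet, busy and moderate periods of 8 hours each.
profile = [2] * 8 + [6] * 8 + [3] * 8
rates = Rates(on_demand=1.00, reserved=0.60, spot=0.30)

all_on_demand = daily_cost(profile, reserved_count=0, spot_fraction=0.0, rates=rates)
mixed_fleet = daily_cost(profile, reserved_count=2, spot_fraction=0.5, rates=rates)

print(f"all on-demand: {all_on_demand:.2f}")
print(f"mixed fleet:   {mixed_fleet:.2f}")
```

The right split depends on how stable the baseline is and how much of the workload can be retried after a spot interruption, so the parameters here are a starting point for experimentation rather than a recommendation.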

Serverless hosting: Serverless hosting on the public cloud, for example, AWS Lambda, offers an additional dimension of flexibility and economy. For services with low to moderate usage, serverless hosting can provide considerable cost saving as pricing is purely usage-based without any reservation or hosting costs. Further, a microservices implementation allows choosing feasible hosting options which offer opportunities for cost optimisations. For example, functionality exposing RESTful APIs for data access, manual data update and report generation may be hosted on serverless. Conversely, trade workflow services exchanging high volume trade messages and events asynchronously may be hosted on a containerised platform over compute instances.

In a nutshell, elastic scalability on public cloud, flexible pricing and hosting options coupled with a microservices architecture present substantial cost optimisation opportunities for post-trade processing systems. All these drive down the scale-up costs. To improve margins further, unit capacity can be stretched through computational, storage and communications efficiencies.

Migrating to a cloud-native platform

Migrating to the cloud remains risky and expensive despite its advantages. According to the Flexera 2020 State of the Cloud Report, most organisations migrating to the cloud are over budget by 23% and waste 30% of that spend. An earlier report suggests that 75% of cloud migrations take over a year with a majority taking 2 years or more.

High costs, long delays and failure to meet migration objectives can result in abandoned or stalled cloud migrations. This leads to wasted spending along with a fragmented IT organisation and an operationally complex and expensive technology estate. Successful cloud migrations require an objective and flexible strategy.

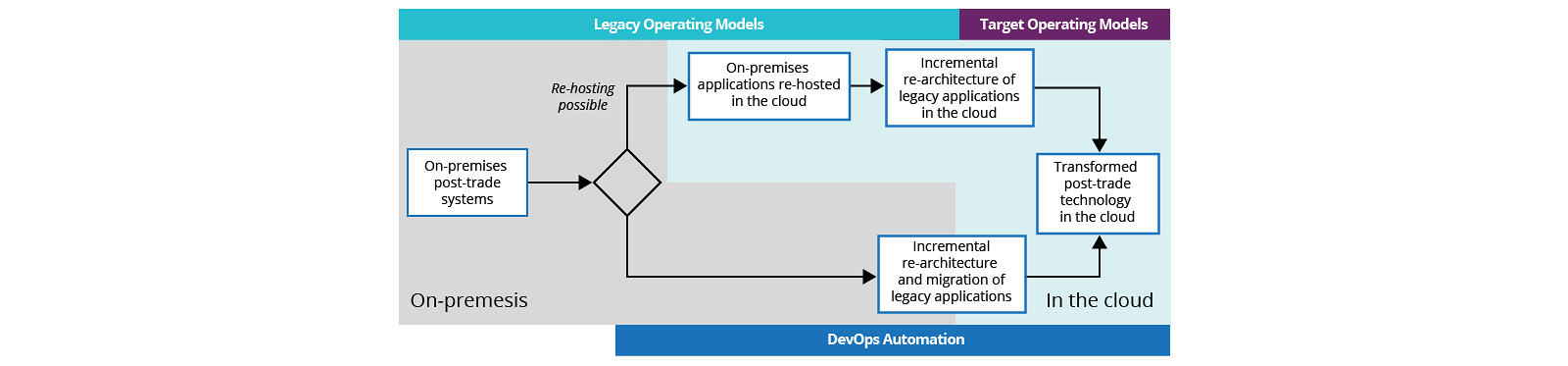

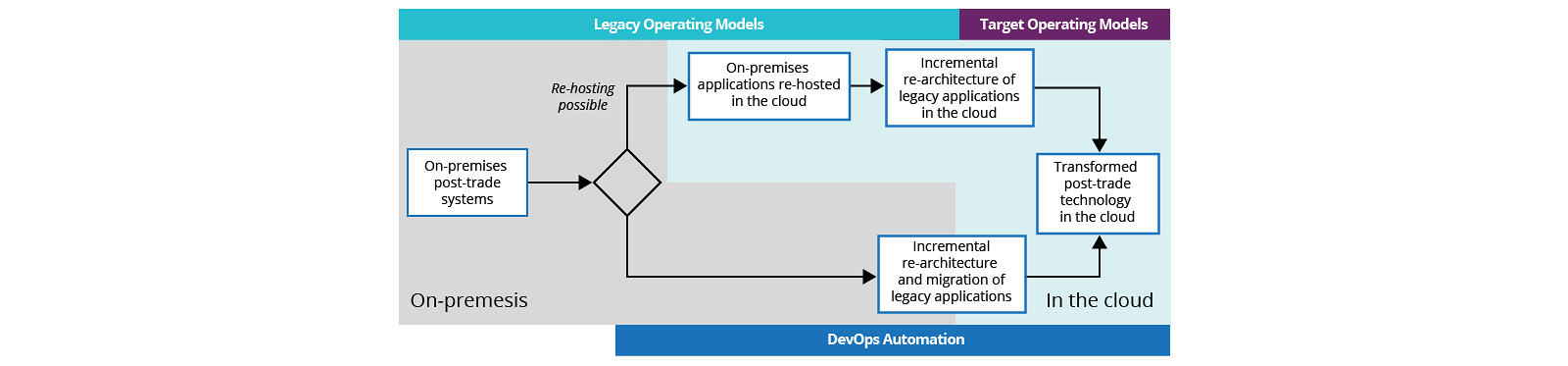

One approach firms can take to migrate to the cloud is rehosting legacy systems. This may not be possible where the legacy technology stack is not supported on the cloud or where there is tight coupling with on-premises services. The benefits of simply rehosting are limited, but it offers an alternative, early way to mobilise for re-architecting the services.

Rehosting can therefore be treated as the first stage in the overall cloud migration programme. Through rehosting, cloud migrated applications can not only continue generating revenue but also provide opportunities for fine-tuning and right-sizing the resources for margin improvements. Further refinement is possible by incrementally re-architecting with a microservices-based architecture and by reducing the dependency on on-premises services, which can then be decommissioned to achieve additional cost improvements.

Firms should pursue an incremental capability driven re-architecture and migration approach to simplify the operating model. This approach helps identify unused or rarely used capabilities and features that can be decommissioned, thus lowering development and operating costs. Where rehosting is not possible, this becomes the starting point of the migration journey.

A mature DevOps practice will deliver additional returns for cloud migration initiatives. By optimising the four key Accelerate metrics, we can achieve higher efficiency and economy in promoting changes through the delivery pipeline. The Accelerate metrics measure lead time, change failure rate, deployment frequency and mean time to recover (MTTR).
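As a minimal sketch of how these four metrics might be derived from deployment records: the `Deployment` record, its fields and the sample data below are hypothetical, and the function assumes a non-empty list of deployments within the reporting period.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean
from typing import Dict, List, Optional


@dataclass
class Deployment:
    """One production deployment (hypothetical record shape)."""
    committed_at: datetime
    deployed_at: datetime
    failed: bool = False
    restored_at: Optional[datetime] = None


def four_key_metrics(deployments: List[Deployment], period_days: int) -> Dict[str, float]:
    """Compute the four metrics; assumes at least one deployment."""
    lead_times = [(d.deployed_at - d.committed_at).total_seconds() for d in deployments]
    failures = [d for d in deployments if d.failed]
    recoveries = [(d.restored_at - d.deployed_at).total_seconds()
                  for d in failures if d.restored_at is not None]
    return {
        "lead_time_hours": mean(lead_times) / 3600,
        "deployments_per_day": len(deployments) / period_days,
        "change_failure_rate": len(failures) / len(deployments),
        "mttr_hours": mean(recoveries) / 3600 if recoveries else 0.0,
    }


# Sample data: four deployments over two days, one of which failed.
start = datetime(2020, 8, 17, 9, 0)
history = [
    Deployment(start, start + timedelta(hours=2)),
    Deployment(start, start + timedelta(hours=4)),
    Deployment(start, start + timedelta(hours=6), failed=True,
               restored_at=start + timedelta(hours=7)),
    Deployment(start + timedelta(days=1), start + timedelta(days=1, hours=4)),
]
metrics = four_key_metrics(history, period_days=2)
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```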

A high-level flowchart of decisions and steps for cloud migration

Optimising implementations

Re-architecting on the cloud is a significant undertaking of time and money. To maximise this investment, the re-architected implementations on the cloud should be efficient in their resource usage. Incremental investment in delivering pragmatic optimisations during re-architecture can thus significantly help lower cloud costs.

Executives need to approach optimisation thoughtfully. It is often assumed that developer time is too costly, leading to the seemingly obvious answer of investing only in infrastructure for scalability. However, the cost of hardware is perpetual rather than one-off, and it provides diminishing returns with increasing volumes.

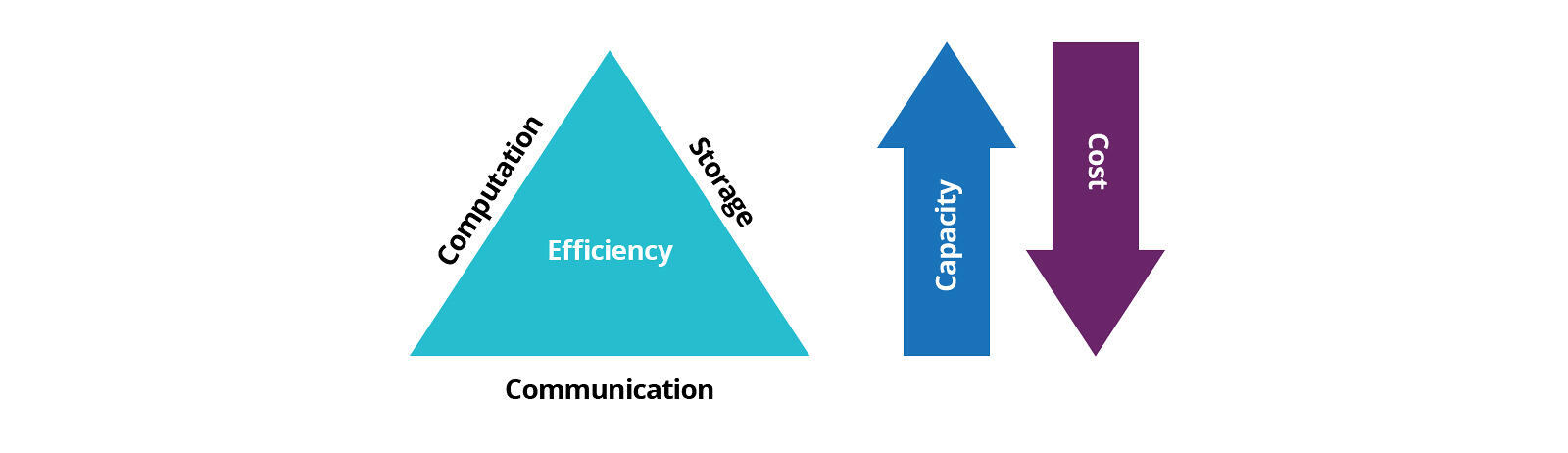

A commonly observed symptom pointing to potential optimisation opportunities is an application operating at its peak throughput but with only a fractional usage of infrastructure resources. Here, scaling the infrastructure will not just increase the cost but also resource wastage. A more balanced approach is to utilise developer time to achieve efficient implementation across the dimensions of computation, communication and storage.

Computational efficiency: This is simpler to achieve through microservices as opposed to a monolith implementation. Each microservice implements a fraction of the functionality of the monolith, thus providing opportunities for targeted optimisations. Integrating the profiling and performance testing of microservices in the DevOps toolchain can provide fast feedback when agreed thresholds are breached. Optimisation opportunities like these can be regularly surfaced and resolved.
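One way to surface breached thresholds in a delivery pipeline is a small check that compares measured performance figures with agreed limits and fails the build on any breach. The sketch below is an assumption about how such a gate could look: the metric names, the limits and the `Threshold` type are hypothetical, and the measurements would come from whatever performance test tooling the pipeline already runs.

```python
from dataclasses import dataclass
from typing import List, Mapping, Sequence


@dataclass(frozen=True)
class Threshold:
    """An agreed performance limit for one metric of one microservice."""
    metric: str
    limit: float
    higher_is_better: bool


def breaches(measured: Mapping[str, float],
             thresholds: Sequence[Threshold]) -> List[str]:
    """Return a message for every threshold the measurements fail to meet."""
    failed = []
    for t in thresholds:
        value = measured[t.metric]
        ok = value >= t.limit if t.higher_is_better else value <= t.limit
        if not ok:
            failed.append(f"{t.metric}: measured {value}, agreed limit {t.limit}")
    return failed


# Hypothetical limits for a trade-matching service.
agreed = [
    Threshold("throughput_tps", limit=5000.0, higher_is_better=True),
    Threshold("p95_latency_ms", limit=40.0, higher_is_better=False),
]
# Hypothetical results from a performance test run.
results = {"throughput_tps": 4200.0, "p95_latency_ms": 35.0}

problems = breaches(results, agreed)
for message in problems:
    print("THRESHOLD BREACHED:", message)
```

A pipeline step could exit with a non-zero status when `problems` is non-empty, so that a regression is flagged on the change that introduced it.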

Communication efficiency: Fine-grained APIs produce additional communication overheads with chatty protocols and verbose messages that lower the end-to-end performance. Designing microservices that are aligned to business capabilities helps produce APIs that provide optimal interactions with reduced chattiness. Additionally, message verbosity can be reduced using domain-specific parameters.

Storage efficiency: Microservices allow for decentralised data storage decisions and polyglot persistence. Hence, choosing a technology that optimises for the service-specific need for storage and access requirements would deliver an improved system performance.

Towards risk-based cloud adoption

With an annual cost of over $20 billion globally, the economics of post-trade processing remains a concern for the industry. Escalating trading volumes add further stress as organisations struggle to meet regulatory deadlines. The elastic capacity of the cloud coupled with flexible pricing and diverse hosting options offers cost-effective scaling to meet the cyclical capacity demand in post-trade processing. Process transformation and optimisation on an efficient, flexible and scalable cloud-native post-trade technology platform will offer additional opportunities for cost reductions.

The risks of migrating to the cloud can diminish the potential rewards. To mitigate the risks, the migration should be well rounded, incremental and evolutionary. This will help post-trade operations start generating revenues early and increase business confidence in the opportunities that the transformation promises.

This article was published on August 19, 2020
