In Part I of this series, we described the technology behind ChatGPT, how it works, and the applications and limitations that organizations should be aware of before jumping on the hype train. As you try to discern the opportunities this technology presents to your organization, you will likely face a fog of uncertainty around questions such as:

Which of our business problems should we solve with ChatGPT, and more broadly, AI? (product-market fit)

Which of our business problems can we solve with ChatGPT and AI? (problem-solution fit)

What is the effort and cost involved in leveraging or creating such AI capabilities?

What are the associated risks, and how can we reduce them?

How can we deliver value rapidly and reliably?

In this article, we address these questions by taking an expansive view of AI and by sharing five recommendations that we’ve distilled from our experience in delivering AI solutions.

1. Product-market fit: Start with the customer problem, not the tool

With the excitement around ChatGPT, it is tempting to fall into the trap of a tech-first approach: we have a shiny hammer, so what can we hit with it? A common business mistake with AI projects is to start with the available data or the AI technique du jour. Instead, start with a specific customer problem.

Without a clear and compelling problem that is backed by the voice of the customer, we find ourselves in a vacuum that is quickly filled with “expert” but unsubstantiated assumptions. Pressure to respond to threats inferred from competitors’ media claims, combined with leadership cultures that value “knowing” over experimentation, can lead to months of wasted engineering investment. As Peter Drucker famously said, “there is nothing so useless as doing efficiently something that should not have been done at all.”

There are several practices that can improve our odds of betting on the right thing. One such practice is Discovery, which helps us gain clarity on the customer struggle, our vision, the problem space, value propositions, and high-value use cases. Investing a few weeks with the right people at the table (customers, product, and business stakeholders, rather than just data scientists and engineers) helps us focus on ideas that will bring value to customers and to the business, and avoid spending people’s time on efforts without outcomes.

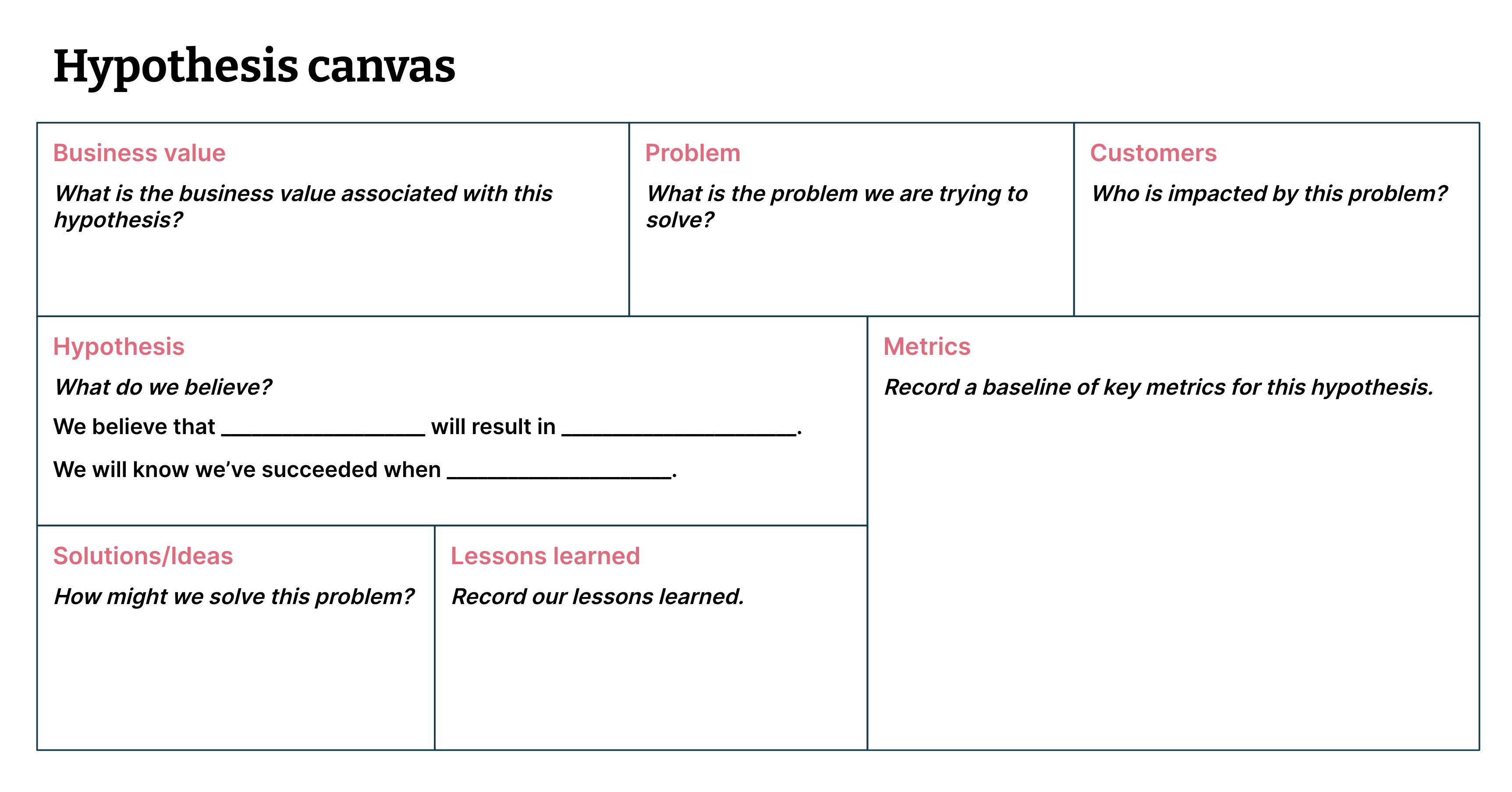

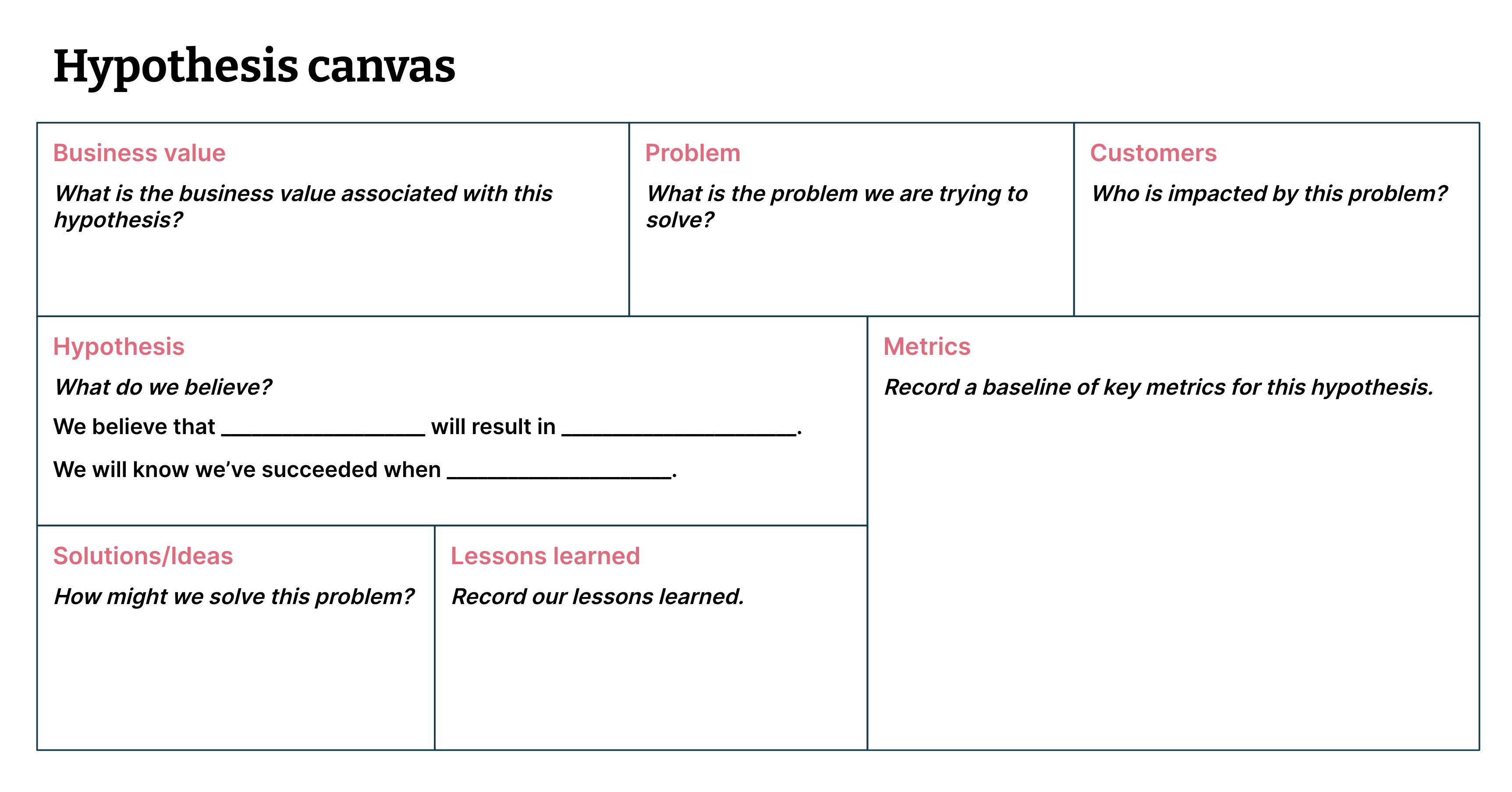

Tools such as the ML Canvas and hypothesis canvas are useful for assessing the value proposition and feasibility of using AI to solve our most compelling problems (see Figure 1).

Figure 1: The hypothesis canvas helps us articulate and frame testable assumptions, i.e., hypotheses (image from Data-driven Hypothesis Development)

2. Problem-solution fit: Choose the right AI tools to create value

This recommendation is about expanding your toolkit to find the most suitable and cost-effective approach for your high-value use case, and avoiding wasted effort on unnecessarily complex AI techniques. While ChatGPT might spark the conversation, recognize that an LLM might not be the right solution to your problem.

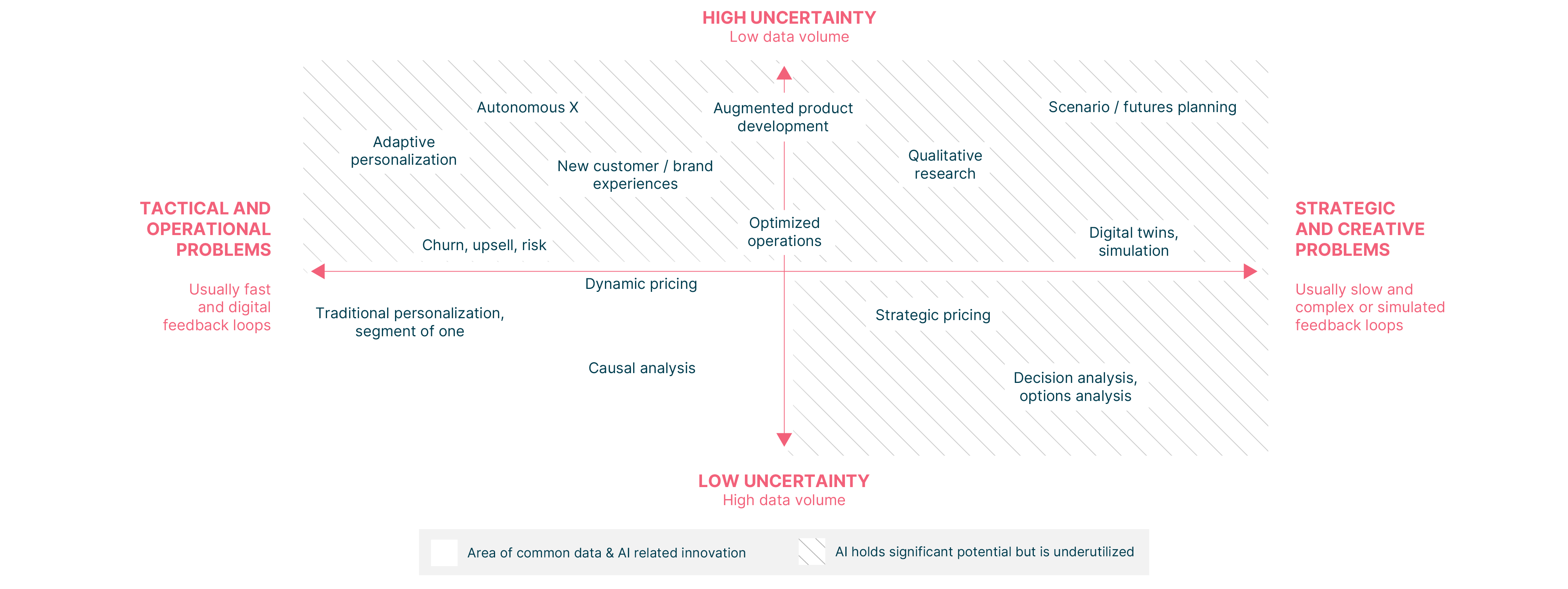

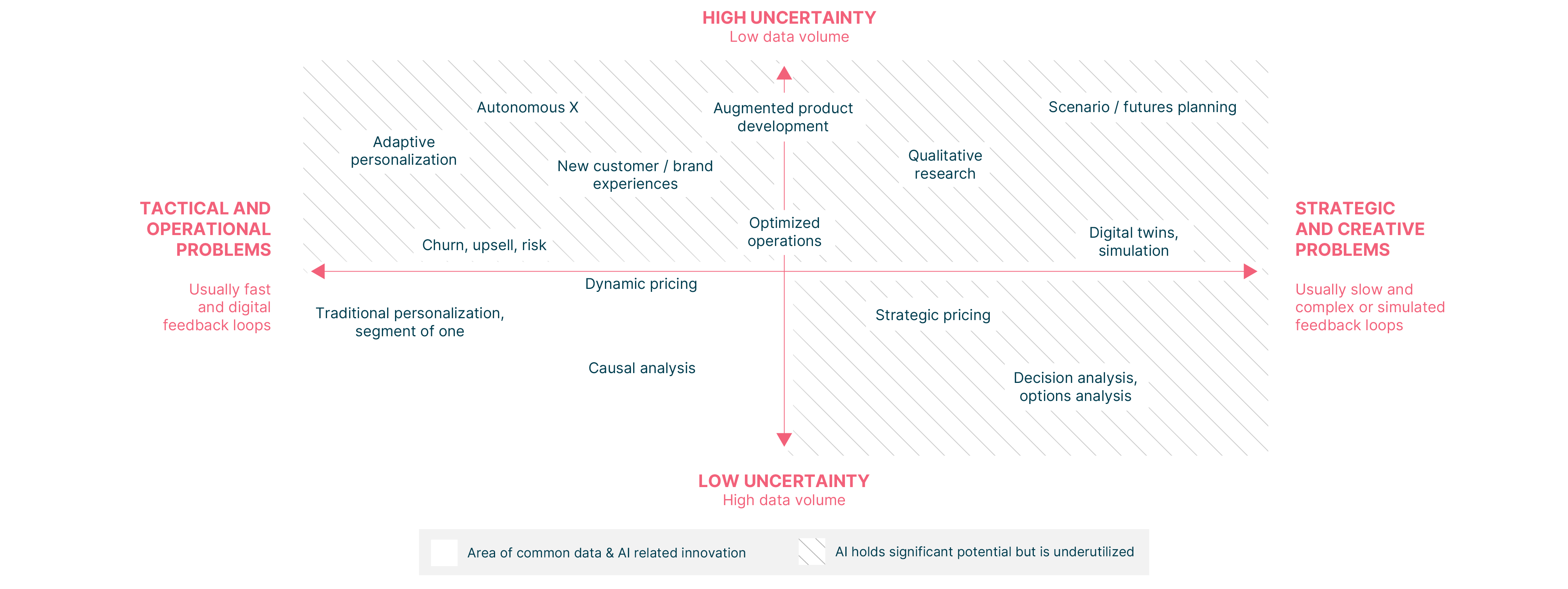

Beyond LLMs, there are other AI techniques that, in our experience, can effectively augment a business’s ability to make optimal day-to-day operational and strategic decisions, while requiring far less data and compute (see Figure 2).

For example, in the top-left quadrant, Thoughtworks worked with a Finnish lifestyle design company and applied reinforcement learning to create an adaptive, personalized shopping experience for customers. We built a decision factory that learns from users’ behavior in real time and requires neither historical data assets nor expert data science skills to scale across the company’s digital platforms. Within a few hours of release, the decision factory delivered a 41% lift in front-page click-through and, after five weeks, a 24% increase in average revenue per user.
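The decision factory itself is not described in detail here, but the core idea of learning from live behavior without a historical dataset can be illustrated with a multi-armed bandit, one common form of online reinforcement learning. The sketch below is a minimal epsilon-greedy bandit of our own; the class name, the layout variants, and the simulated click-through rates are all hypothetical and are not taken from the project above.

```python
import random


class EpsilonGreedyBandit:
    """Picks among content variants and learns their click-through rates online."""

    def __init__(self, arms, epsilon=0.1, seed=None):
        self.arms = list(arms)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = {arm: 0 for arm in self.arms}    # times each arm was shown
        self.values = {arm: 0.0 for arm in self.arms}  # running mean reward per arm

    def select(self):
        # Explore a random arm with probability epsilon; otherwise exploit the best estimate.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.arms)
        return max(self.arms, key=lambda arm: self.values[arm])

    def update(self, arm, reward):
        # Incremental mean: each interaction updates the estimate, no historical dataset needed.
        self.counts[arm] += 1
        self.values[arm] += (reward - self.values[arm]) / self.counts[arm]


if __name__ == "__main__":
    # Hypothetical front-page layouts with made-up "true" click-through rates.
    true_ctr = {"layout_a": 0.05, "layout_b": 0.08, "layout_c": 0.12}
    bandit = EpsilonGreedyBandit(true_ctr, epsilon=0.1, seed=42)
    clicks = random.Random(7)  # simulates user behavior
    for _ in range(10_000):
        arm = bandit.select()
        reward = 1.0 if clicks.random() < true_ctr[arm] else 0.0
        bandit.update(arm, reward)
    print(bandit.counts)
```

With enough traffic, the layout with the highest click-through rate should tend to receive most impressions, while the epsilon share of exploratory traffic keeps the estimates for the other layouts up to date.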

In the middle of the top two quadrants, we applied operations research techniques to create a model that helped Kittilä Airport relieve heavy pressure on its infrastructure and resources during peak travel season. This reduced planning time from 3 hours to 30 seconds (a 99.7% reduction) and cut the share of airport-related flight delays by 61%, even though the number of flights increased by 12% over the previous year. The decrease in delays resulted in estimated monthly cost savings of €500,000, and the airport’s Net Promoter Score (NPS) increased by 20 points.
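The Kittilä model is not described here, so as a much simpler illustration of the kind of scheduling problem operations research tackles, the sketch below assigns flights to aircraft stands using greedy interval partitioning, a classic algorithm that uses the minimum number of stands when each flight’s time window is fixed. The `Flight` type, the flight codes and the times are hypothetical; a real airport model would add turnaround buffers, stand compatibility, staffing and many other constraints.

```python
import heapq
from typing import NamedTuple


class Flight(NamedTuple):
    code: str
    arrival: int    # minute of the day the aircraft starts occupying a stand
    departure: int  # minute of the day the stand becomes free again


def assign_stands(flights):
    """Greedy interval partitioning: returns {flight code: stand index}.

    Flights are processed in arrival order, and each one reuses the stand that
    frees up earliest if that stand is already free; otherwise a new stand is
    opened. For fixed time windows this uses the minimum possible number of stands.
    """
    assignment = {}
    free_at = []  # min-heap of (time the stand becomes free, stand index)
    next_stand = 0
    for flight in sorted(flights, key=lambda f: f.arrival):
        if free_at and free_at[0][0] <= flight.arrival:
            _, stand = heapq.heappop(free_at)  # reuse an already-free stand
        else:
            stand = next_stand  # every stand is busy, so open another one
            next_stand += 1
        assignment[flight.code] = stand
        heapq.heappush(free_at, (flight.departure, stand))
    return assignment


if __name__ == "__main__":
    # Hypothetical flights; times are minutes after midnight.
    flights = [
        Flight("F1", 600, 660),
        Flight("F2", 610, 700),
        Flight("F3", 650, 720),
        Flight("F4", 665, 730),
    ]
    print(assign_stands(flights))  # {'F1': 0, 'F2': 1, 'F3': 2, 'F4': 0}
```

Three stands are needed because F1, F2 and F3 all overlap between minutes 650 and 660; F4 then reuses the stand F1 vacated.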

These are just some examples of the untapped potential of mature AI-augmented techniques. Choosing the right AI technique for your problem lets you be smarter about the data you curate and, in doing so, significantly reduces the cost, complexity and risk of curating reams of historical data.

3. Effort: With bigger models come greater error surfaces

Artwork by Dougal McPherson

A lesser-known contemporary of ChatGPT is Meta’s Galactica AI, which was trained on 48 million research papers and intended to support scientific writing. However, its demo was shut down after just two days because it produced misinformation and pseudoscience, all while sounding highly authoritative and convincing.

There are two takeaways from this cautionary tale. First, throwing mountains of data at a model doesn’t mean we will get outcomes aligned with our intent and expectations. Second, creating and productionizing ML models without comprehensive testing comes at a significant cost to humans and society. It also increases the risk of reputational and financial damage to a business. In a more recent story, a Google spokesperson echoed this when they said that Google Bard’s “$100 billion error” underscored “the importance of a rigorous testing process.”

We can and must test AI models. We can do so by using an array of ML testing techniques to uncover sources of error and harm before any model is released. By defining model fitness functions (objective measures of “good enough” or “better than before”), we can test our models and catch issues before they cause problems in production. If we struggle to articulate these fitness functions, we will likely discover, eventually, that the model is not fit for production. For generative AI applications, defining them will be a non-trivial effort that should be factored into decisions about which opportunities to pursue.
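To make the idea of fitness functions concrete, the sketch below encodes one absolute check (“good enough”) and one relative check (“better than before”) as plain Python functions. The metrics, the thresholds and all names are hypothetical; the right measures depend on your product and should be agreed with stakeholders rather than picked by the modeling team alone.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EvalResult:
    accuracy: float        # on a held-out test set
    p95_latency_ms: float  # 95th-percentile inference latency


# Hypothetical thresholds; in practice, agree on these with product stakeholders.
MIN_ACCURACY = 0.85
MAX_P95_LATENCY_MS = 200.0


def is_good_enough(candidate: EvalResult) -> bool:
    """Absolute fitness function: does the model clear the agreed bar?"""
    return (candidate.accuracy >= MIN_ACCURACY
            and candidate.p95_latency_ms <= MAX_P95_LATENCY_MS)


def is_better_than_before(candidate: EvalResult, current: EvalResult) -> bool:
    """Relative fitness function: does the candidate beat the production model?"""
    return candidate.accuracy > current.accuracy


def should_promote(candidate: EvalResult, current: EvalResult) -> bool:
    # Only release a model that passes both fitness functions.
    return is_good_enough(candidate) and is_better_than_before(candidate, current)


if __name__ == "__main__":
    current = EvalResult(accuracy=0.87, p95_latency_ms=150.0)
    print(should_promote(EvalResult(0.89, 180.0), current))  # True
    print(should_promote(EvalResult(0.91, 250.0), current))  # False: too slow
    print(should_promote(EvalResult(0.86, 120.0), current))  # False: regression
```

Checks like these can run as automated tests on every training run, so a model that fails them never reaches production.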

We must also test the system from a security perspective and conduct threat modeling exercises to identify potential failure modes (e.g., adversarial attacks and prompt injections). The absence of tests is a recipe for endless toil and production incidents for machine learning practitioners. A comprehensive test strategy is essential if you are to ensure that your investments lead to a high-quality and delightful product.

4. Risk: Governance and ethics need to be a guiding framework, not an afterthought

“AI ethics is not separate from other ethics, siloed off into its own much sexier space. Ethics is ethics, and even AI ethics is ultimately about how we treat others and how we protect human rights, particularly of the most vulnerable.” – Rachel Thomas

The Thoughtworks-sponsored MIT State of Responsible Technology Report observed that responsible technology is not a feel-good platitude, but a tangible organizational characteristic that contributes to better customer acquisition and retention, improved brand perception and prevention of negative consequences and associated brand risk.

Responsible AI is a nascent but growing field, and there are assessment techniques (such as the data ethics canvas and failure modes and effects analysis, among others) that you can employ to assess the ethical risks of your product. It is always beneficial to “shift ethics left” (moving ethical considerations earlier in the process) by involving the relevant stakeholders, spanning product, engineering, legal, delivery, security, governance, test users, etc., to identify:

Failure modes and sources of harm of a product

Actors who may compromise or abuse the product, and how

Segments (of people or systems) that are vulnerable to adverse impacts

Corresponding mitigation strategies for each risk

The risks we identify (where risk = likelihood × impact) must be actively managed, along with other delivery risks, on an ongoing basis. These assessments are not once-off, check-box exercises; they should form part of an organization’s data governance and ML governance framework, with consideration of how governance can be kept lightweight and actionable.
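As a small illustration of the likelihood × impact formula, the sketch below scores entries in a risk register and sorts them so the highest-scoring risks are reviewed first. The 1 to 5 scales, the `Risk` and `prioritize` names, and the example entries are all assumptions for illustration; many organizations use their own scales and weightings.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    description: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (negligible) to 5 (severe)
    mitigation: str = ""

    def __post_init__(self):
        for name in ("likelihood", "impact"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        # risk = likelihood x impact
        return self.likelihood * self.impact


def prioritize(risks):
    """Return risks with the highest score first, for regular risk reviews."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


if __name__ == "__main__":
    # Hypothetical register entries for an LLM-based assistant.
    register = [
        Risk("Stale training data degrades answers", likelihood=2, impact=2),
        Risk("Prompt injection leaks internal documents", likelihood=3, impact=5,
             mitigation="Isolate retrieved content; red-team prompts before release"),
        Risk("Lower answer quality for non-native speakers", likelihood=4, impact=3,
             mitigation="Evaluate on per-segment test sets"),
    ]
    for risk in prioritize(register):
        print(risk.score, risk.description)
```

Re-scoring the register as likelihood or impact changes, rather than filling it in once, is what keeps this an actively managed list.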

5. Execution: Accelerate experimentation and delivery with Lean product engineering practices

Many organizations and teams start their AI/ML journey with high hopes, but almost inevitably struggle to realize the potential of AI due to unforeseen time sinks and unanticipated detours: the devil is in the data detail. In 2019, it was reported that 87% of data science projects never make it to production. In 2021, even among companies that had successfully deployed ML models in production, 64% took more than a month to deploy a new model, up from 56% in 2020.

These impediments to delivering value frustrate everyone involved: executives, investment sponsors, ML practitioners and product teams, among others. The good news is that it doesn’t have to be this way.

In our experience, Lean delivery practices have consistently helped us to iterate towards building the right thing by:

Focusing on the voice of the customer

Continuously improving our processes to increase the flow of value

Reducing waste when building AI solutions

Continuous delivery for machine learning (CD4ML) can also help us improve business outcomes by accelerating experimentation and improving reliability. Executives and engineering leaders can help steer the organization towards these desirable outcomes by advocating for effective engineering and delivery practices.

Parting thoughts

Amidst the prevailing media zeitgeist of “AI taking over the world”, our experience nudges us towards a more balanced view: humans remain agents of change in our world, and AI is best suited to augment, not replace, them. But without care, intention and integrity, the systems that some groups create can do more harm than good. As technologists, we have the ability and the duty to design responsible, human-centric, AI-enabled systems that improve outcomes for one another.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.