Is creating a user story for an infrastructure project different from creating one for a user-facing application? Most definitions of a user story don't depend on the specific user or system. However, common examples of user stories typically focus on the end users of a product. On a recent project, replatforming an on-prem tech estate to public cloud, I found it hard to identify the end user, and the product was something that already existed. Here are some of the lessons I learned, which I feel are transferable to most replatforming projects, if not to infrastructure projects in general.

Understanding “done” using story maps & milestones

What does “done” look like? As an end goal, migrating everything makes sense, but that leaves a single milestone that is difficult to reprioritize, change or estimate. It made a fantastic, simple-to-communicate goal that helped us avoid scope creep (does Task X help us migrate?). But we needed some smaller targets to keep us on track and to see value earlier.

To break our goal into smaller, measurable milestones, we used story mapping to great effect. Traditionally, a story map follows a Goals > Activities > Stories structure. I quickly found that “Activities” didn’t fit how our infrastructure team and platform SMEs worked. They were used to deploying components sequentially, in an order that didn’t match how a user would interact with the system.

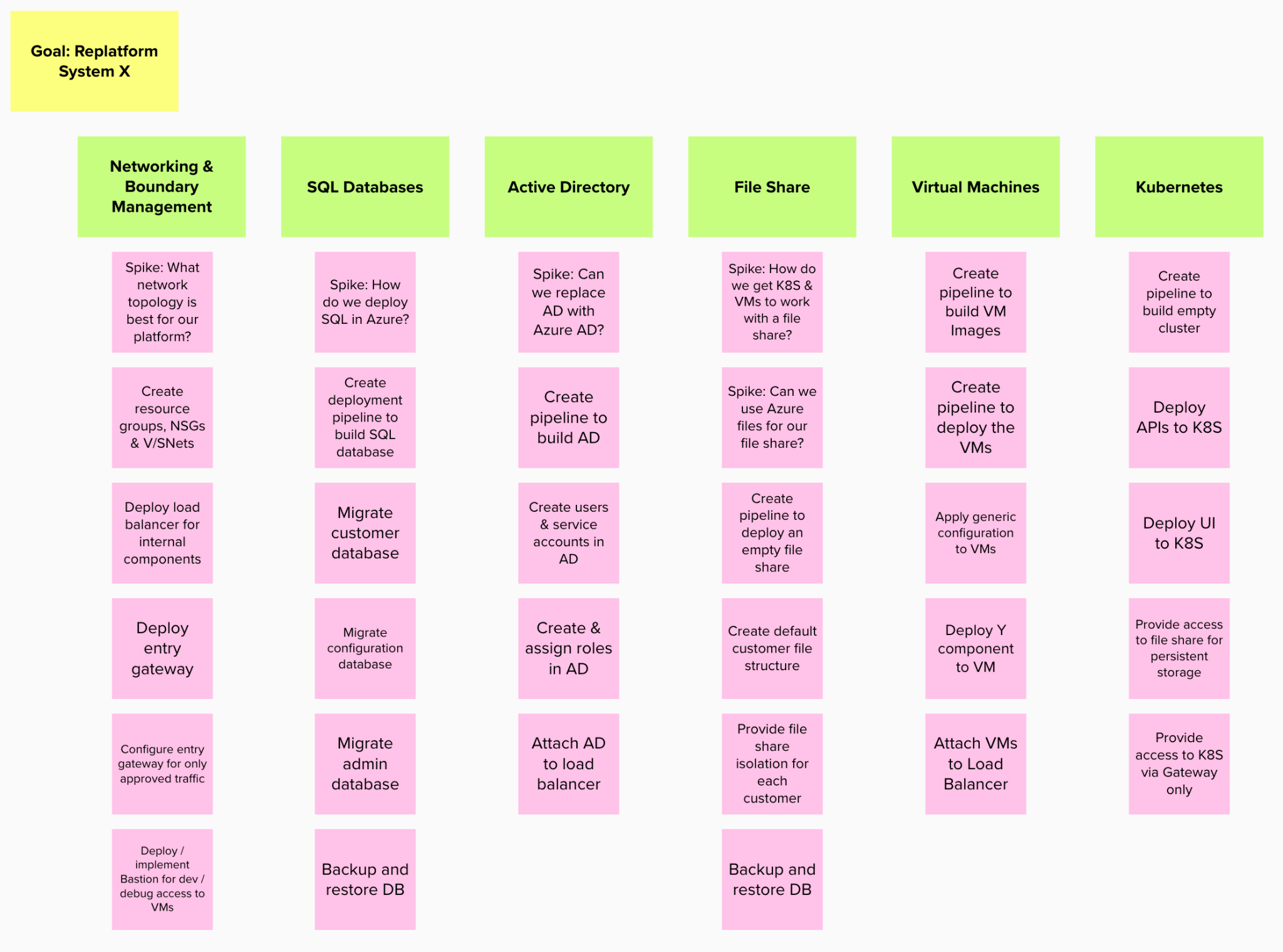

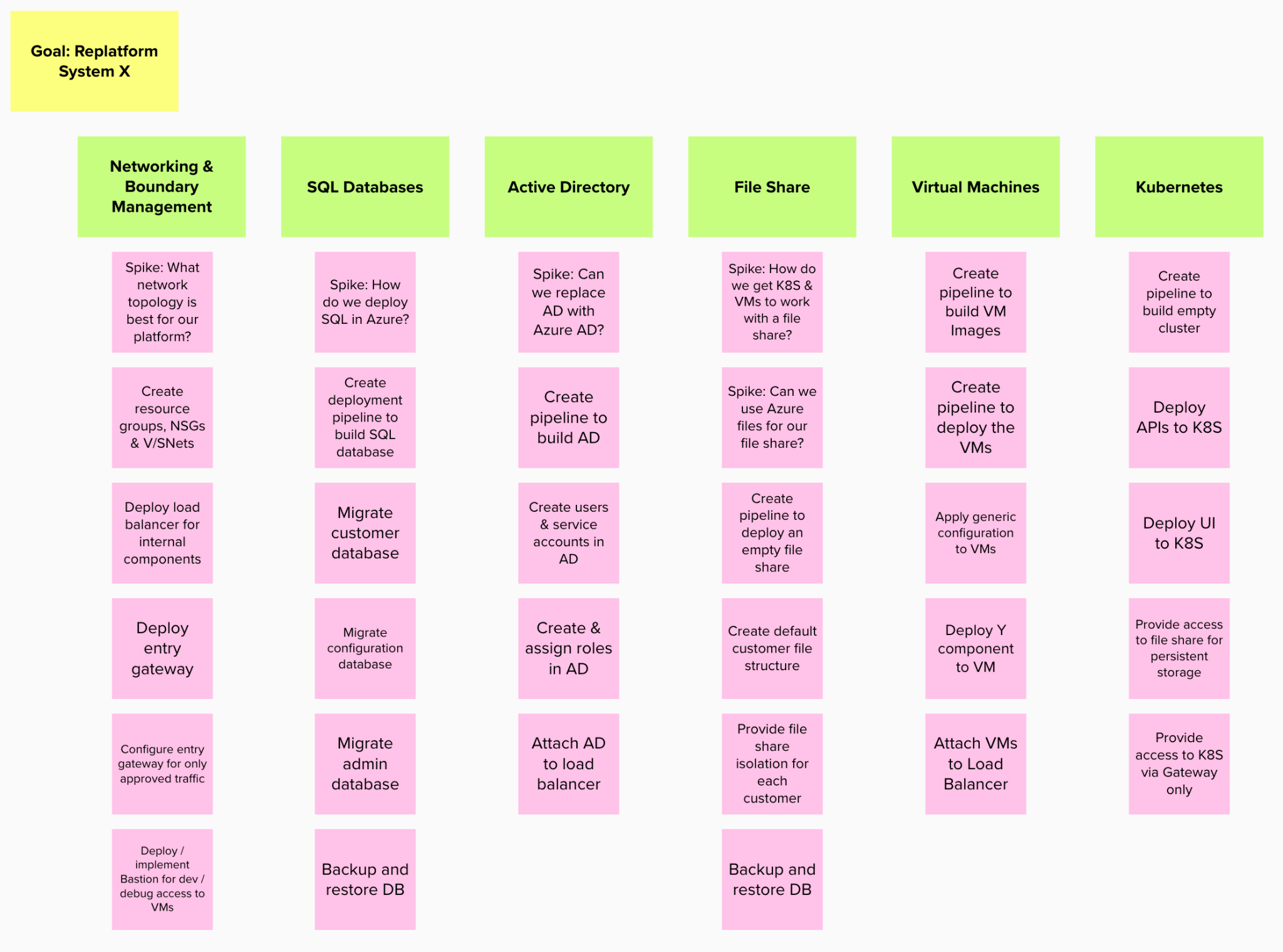

So, we took an extra step to make sure we captured everything. Step 1 was creating a Goal > Component > Stories / Spikes story map. Our end result looked a lot like this:

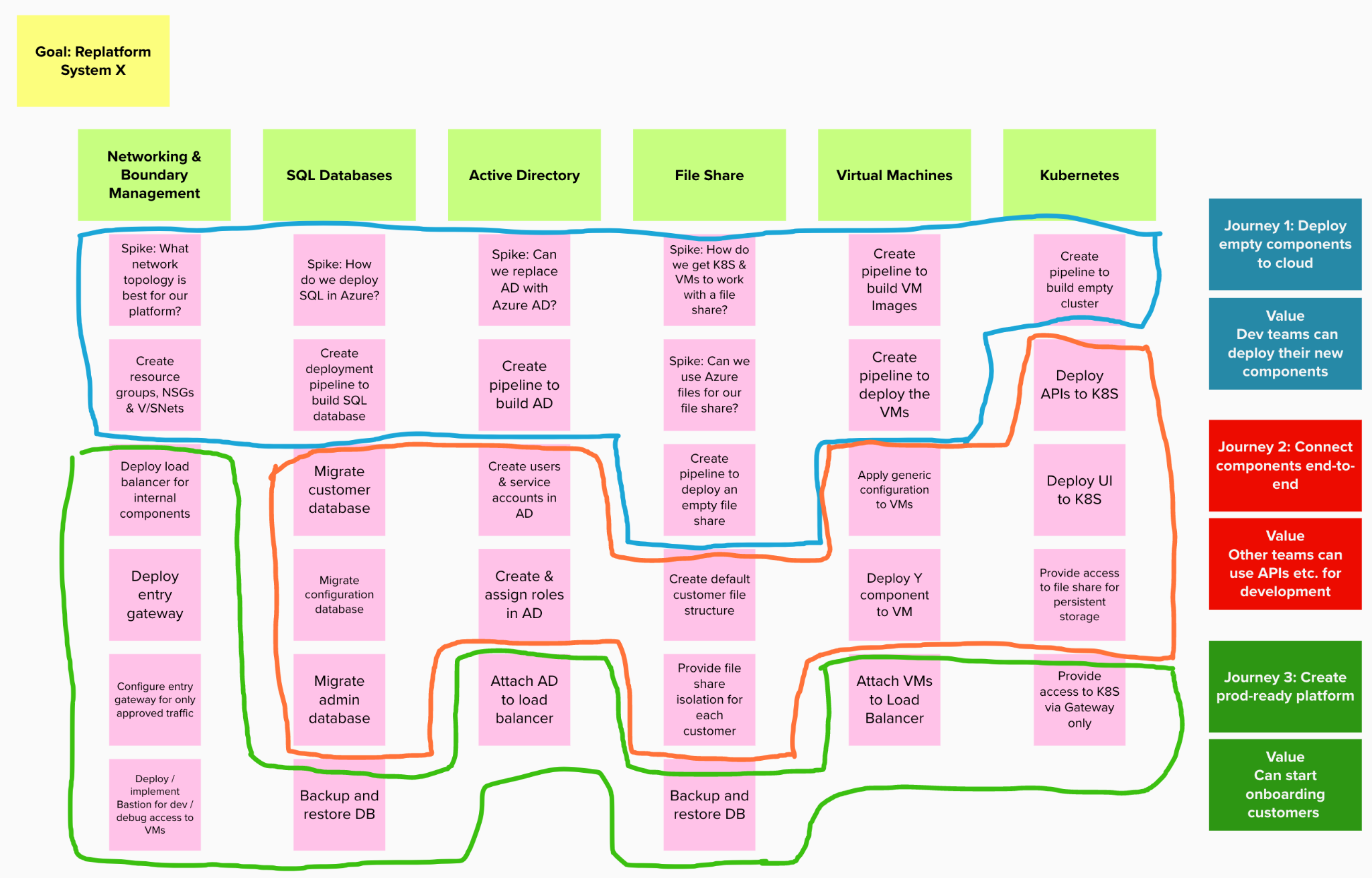

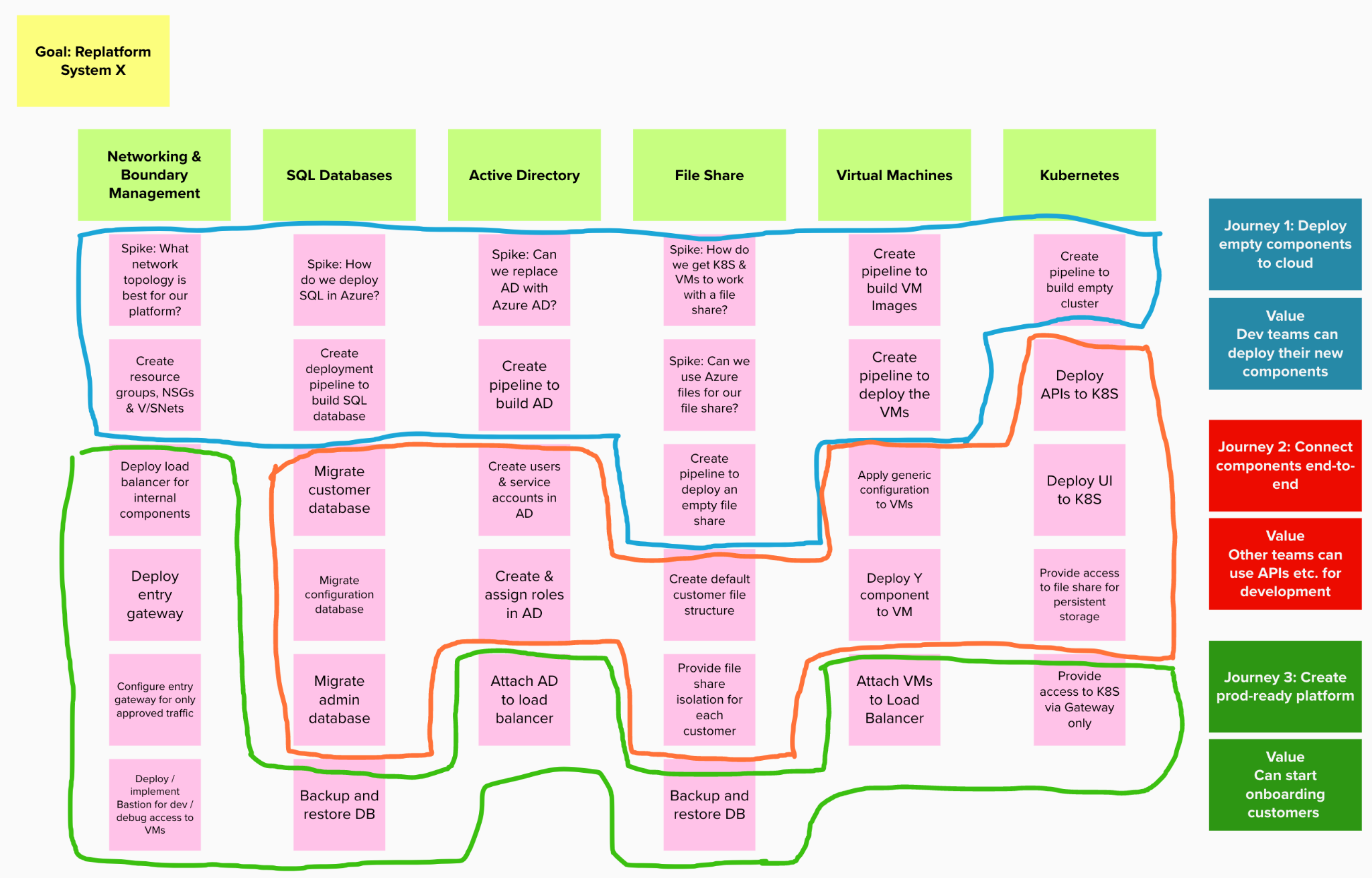

This matched the as-is deployment of the system. In Step 2 we brought in user journeys, identifying the MVP slices that became our milestones while still making sure each component in our migration was captured. We chose these milestones based on our platform’s users: the organization’s development teams.

Bringing journeys in after capturing everything let us use them to prioritize getting things up and running quickly. It also helped us spot where we might have been over-engineering. Does what we want to do meet the goal? If not, we can save it for later!
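To make the two-step structure concrete, here is a minimal Python sketch of a Goal > Component > Stories / Spikes map. It is an illustration only, not a tool we used: tagging each item with the developer journeys it serves lets a milestone be read off as a slice of the map, and anything tagged with no journey surfaces as a candidate to defer. The component names, story titles, journey names and the `milestone` / `untagged` helpers are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Item:
    title: str
    kind: str = "story"  # "story" or "spike"
    # Hypothetical tagging: which developer journeys this item supports.
    journeys: set[str] = field(default_factory=set)


@dataclass
class Component:
    name: str
    items: list[Item] = field(default_factory=list)


@dataclass
class StoryMap:
    goal: str
    components: list[Component] = field(default_factory=list)

    def milestone(self, journey: str) -> dict[str, list[str]]:
        """Step 2: slice the map down to the items one journey needs."""
        sliced = {}
        for component in self.components:
            titles = [i.title for i in component.items if journey in i.journeys]
            if titles:  # skip components this journey doesn't touch
                sliced[component.name] = titles
        return sliced

    def untagged(self) -> list[str]:
        """Items no journey needs yet: candidates to defer."""
        return [i.title for c in self.components for i in c.items if not i.journeys]


# Hypothetical example data; a real story map would live on a board, not in code.
migration = StoryMap(
    goal="Migrate the on-prem estate to Azure",
    components=[
        Component("Networking", [
            Item("Hub virtual network", journeys={"deploy a first service"}),
            Item("Private DNS zones", journeys={"deploy a first service"}),
        ]),
        Component("Container platform", [
            Item("Choose a cluster setup", kind="spike", journeys={"deploy a first service"}),
            Item("Autoscaling policies"),
        ]),
        Component("Monitoring", [
            Item("Central log workspace", journeys={"debug a failing service"}),
        ]),
    ],
)

print(migration.milestone("deploy a first service"))
# {'Networking': ['Hub virtual network', 'Private DNS zones'], 'Container platform': ['Choose a cluster setup']}
print(migration.untagged())
# ['Autoscaling policies']
```

Every item still lives under a component, so the full migration stays captured even when a milestone only pulls in part of it.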

Spikes to understand technical complexity

One big challenge we had was deciding how to approach the migration. Azure provides several ways of achieving the same result, depending on your requirements. Given our limited access to SMEs, a complicated legacy stack, and our inexperience with specific Azure components, we weren’t ready to jump straight into completing stories.

Spikes are typically used to create the “simplest possible program to explore potential solutions.” We adapted this slightly, using spikes to explore and then agree on a way to migrate a component. This focused phase of information gathering and prototyping let us de-risk key aspects of the delivery.

What was really important for our spikes was that each one contained the following three things:

- A question to answer. Once you were confident in your answer, you were done with the spike.
- A timebox. If there was no solution by then, we needed to bring in a bigger forum or specific expertise.
- Functional or cross-functional considerations. These are the requirements the solution will need to support. Sometimes there will be very few; other times there will be loads. If there are loads, consider splitting the spike, as you may be trying to find a silver bullet.
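The three ingredients above can be sketched as a small Python data structure, assuming a spike is tracked with a question, a timebox end date and a list of considerations. The `next_step` helper and the threshold of five considerations for “loads” are hypothetical choices made for illustration, not part of any tool or process we formally used.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import ClassVar, Optional


@dataclass
class Spike:
    question: str                 # the question the spike must answer
    timebox_end: date             # the last day of the timebox
    # Functional or cross-functional requirements the answer must support.
    considerations: list[str] = field(default_factory=list)
    answer: Optional[str] = None  # set once the team is confident

    # Hypothetical threshold for "loads" of considerations.
    MAX_CONSIDERATIONS: ClassVar[int] = 5

    def next_step(self, today: date) -> str:
        """Hypothetical helper suggesting what to do with the spike today."""
        if self.answer is not None:
            return "done: record the outcome in an ADR"
        if today > self.timebox_end:
            return "escalate: bring in a bigger forum or specific expertise"
        if len(self.considerations) > self.MAX_CONSIDERATIONS:
            return "split: this spike may be hunting for a silver bullet"
        return "continue"


spike = Spike(
    question="How should we migrate the message broker?",  # hypothetical
    timebox_end=date(2024, 3, 15),
    considerations=["at-least-once delivery", "private network access"],
)
print(spike.next_step(date(2024, 3, 10)))  # continue
print(spike.next_step(date(2024, 3, 18)))  # escalate: bring in a bigger forum or specific expertise
spike.answer = "Agreed migration approach (hypothetical)"
print(spike.next_step(date(2024, 3, 18)))  # done: record the outcome in an ADR
```

Checking for an answer first means a spike that finishes within its timebox never escalates; checking the timebox before the split rule is an arbitrary ordering choice.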

Spikes also tied neatly into knowledge sharing via lightweight Architecture Decision Records (ADRs). Every spike’s output was captured in an ADR, so not only was our learning documented, but future development and platform teams could use that information too.

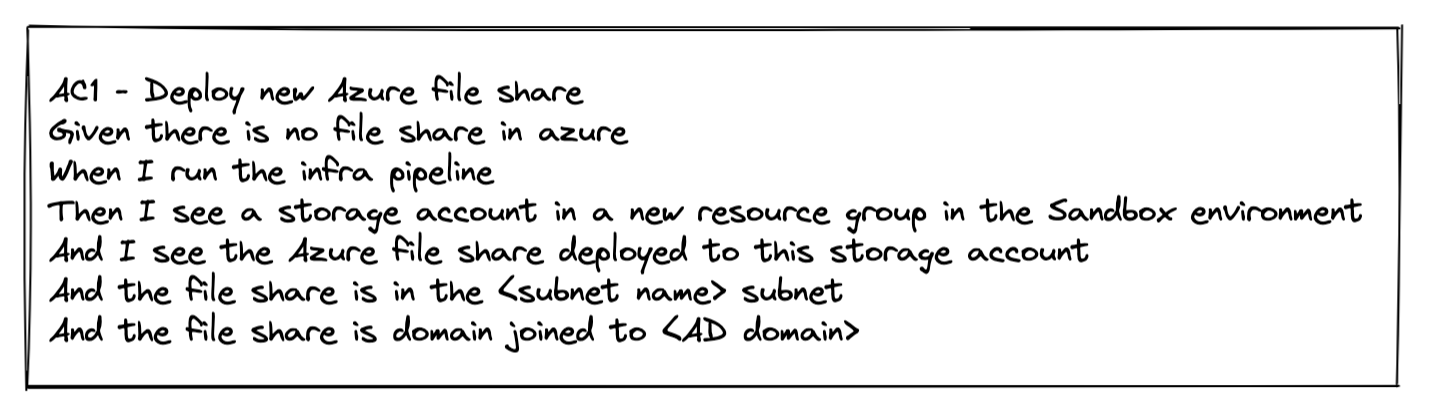

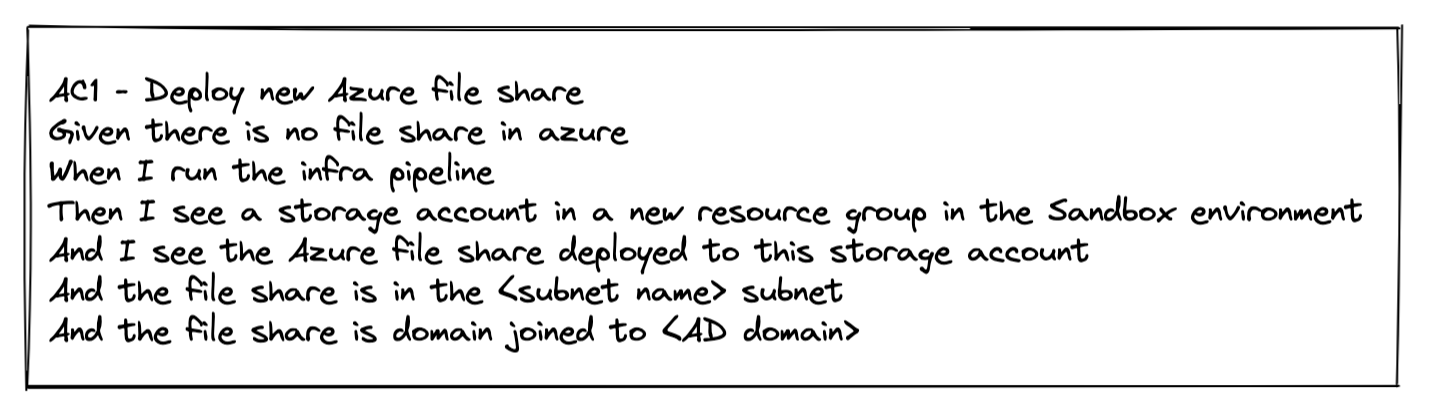

Acceptance criteria – ended up more similar than I expected

Getting ACs to work well was hard. Here are some of the approaches we tried that didn’t work for us:

- Bullet points with detailed specification. The specification kept changing, which totally confused the QAs.
- Bullet points without detailed specification. The QAs didn’t know what to look at, or where, to begin testing.
- User-focused, behavior-driven development (BDD) style (Given, When, Then). So much of the system had to be migrated before these became testable that stories would take months to deliver.
- A more free-form space for developers to add ACs at kick-off or during development. This ended up as a list of steps the QAs should take to test something, which defeated the point of having QAs.
- None! ...I’m sure you can imagine how this ended.

What worked was developer-led BDD ACs.

As in the example above, the action is something triggered not by the end user (when I press the “OK” button) but by whatever makes sense for that component of the system. The purpose of BDD is to make the outcome of a story easy for everyone on the project to understand. Since our project was building a platform for developers, it made sense for our BDD ACs to be geared towards developer thinking.

So what did I learn?

Infrastructure stories are different, but less so than we initially thought. Many traditional user story principles still apply, but need tweaking. Consider the experiences of the people on your team, and use them to pull out the detail you need before trying to convert it into journeys. And as always, consider your users! The infra team were very empathetic towards the developer users and gathered feedback through showcases and our ADRs. They supported the story-writing process and quickly bought into the goals once they saw how enthusiastic the developer teams were about our platform.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.