Large online platforms like YouTube have an enormous impact on our society. Research into their behavior is critical but difficult due to their proprietary nature. At Thoughtworks, we are helping the Mozilla Foundation with a unique engagement to study YouTube’s user control functionality using independent crowdsourced data.

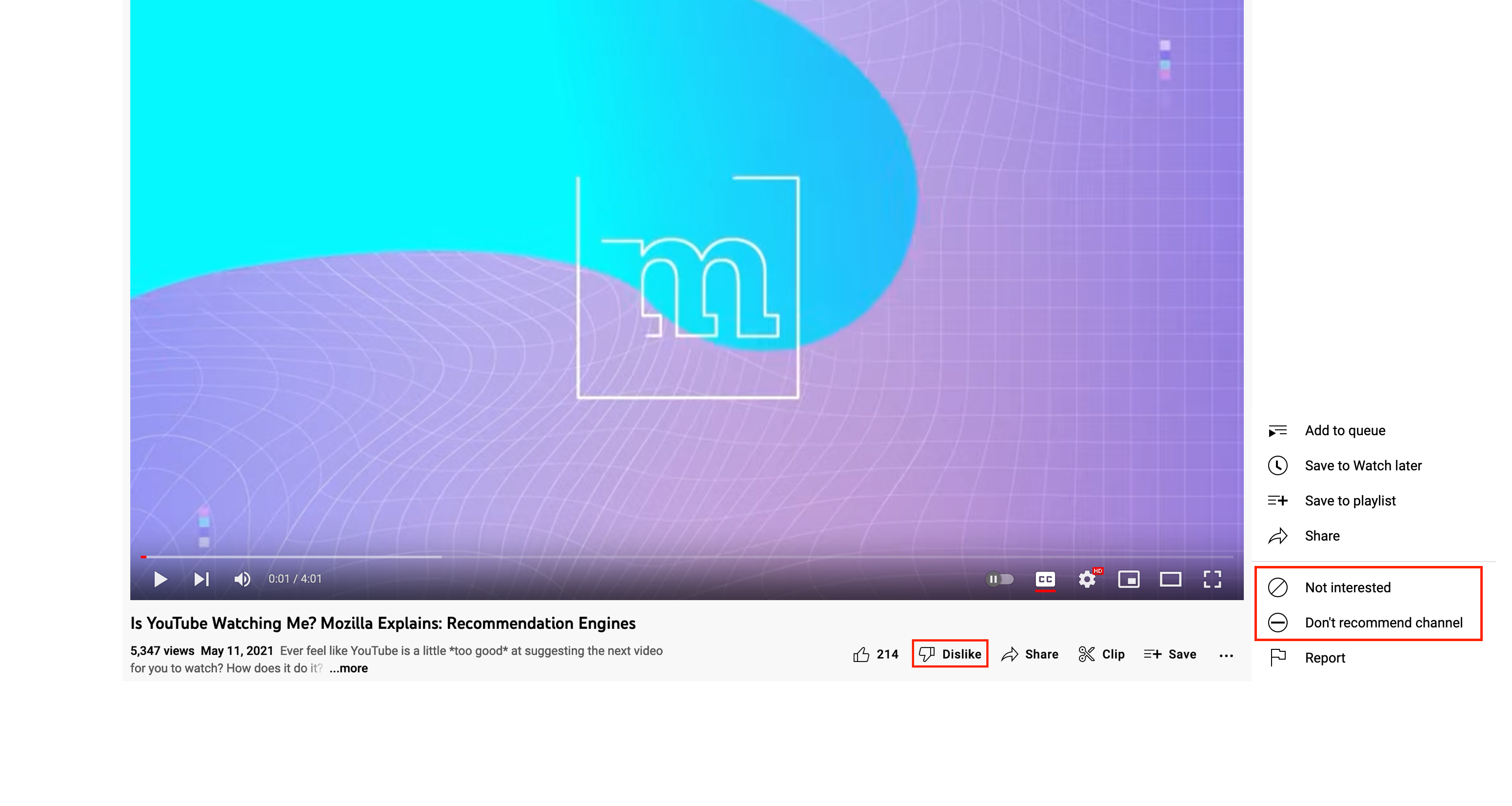

YouTube offers user controls in the form of buttons like “dislike”, “not interested”, and other feedback mechanisms. However, it’s unclear how effective these controls actually are, and whether they empower people to control their experiences on the platform.

With the RegretsReporter extension, we are using sophisticated research methods — a randomized controlled experiment powered by a machine learning model — to better understand how feedback signals affect video recommendations.

The recommendation engine research question

If you’re among the more than 20,000 volunteers who have installed the new RegretsReporter extension and opted into Mozilla’s research, then you are contributing data to help understand how your feedback impacts your recommendations.

What kinds of controls are currently available to users? YouTube suggests a couple of different ways to control your recommendations. These include:

Removing individual videos from your watch history

Using the “Not interested” or “Don’t recommend channel” controls

YouTube doesn’t explicitly recommend using dislikes, but this seems like a reasonable strategy as well.

But how do these controls influence your recommendations? And how easy are they to use? To answer this, we consider two research questions:

How effective are these user controls at preventing unwanted recommendations?

Can adding a more convenient button for user feedback increase the rate at which feedback controls are used?

The RegretsReporter extension

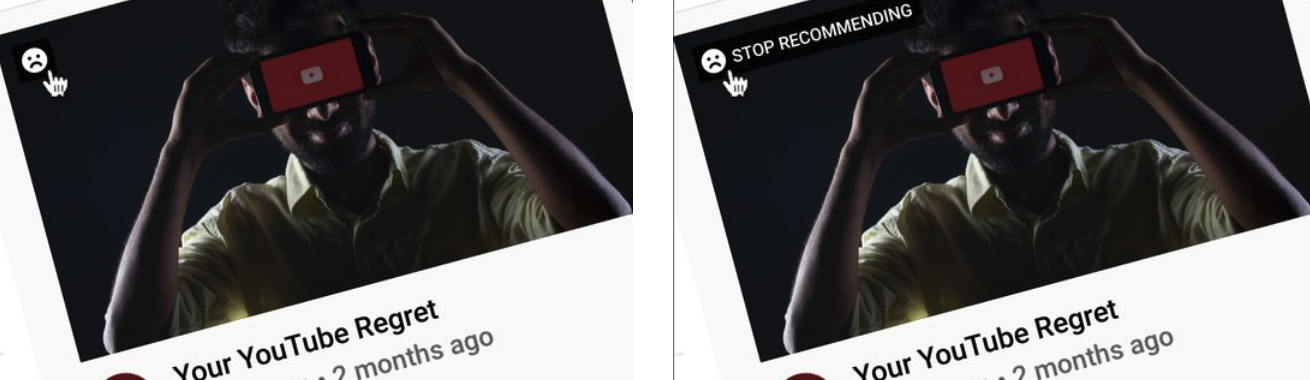

The current version of the RegretsReporter extension is designed to help answer these questions. After you install the extension, it adds a “Stop Recommending” button to every video player and recommendation you see on YouTube. We’ll refer to pressing the button as “rejecting” a recommendation, which will (usually, as we explain below) submit feedback to YouTube on your behalf that you don’t want recommendations similar to that video.

Assuming you’ve opted into the research, the extension will also keep track (in a privacy-friendly way) of which videos you’ve rejected and what YouTube subsequently recommended to you.

Figure 2: The RegretsReporter extension

An experiment

The research questions are best answered using an experiment. You can think of the experiment like a medical trial, in which participants are randomly assigned to receive either the experimental treatment or a placebo. The random assignment makes it possible to compare the participants between the groups in a meaningful way.

In this experiment there are more than two groups. We assign every participant randomly to one of the following:

Control (or “placebo”). Pressing the "Stop Recommending" button will have no effect.

Dislike. Pressing the button will send YouTube a "dislike" message.

History. Pressing the button will send a message to remove the video from your watch history.

Not interested. Pressing the button will send YouTube a "Not interested" message.

Don’t recommend. Pressing the button will send YouTube a "Don't recommend channel" message.

No button. The "Stop Recommending" button will not be shown, but standard data will still be collected.

These experiment groups are key to answering the research questions. If you’re in, for example, the dislike group and use the button to reject a vaccine skepticism recommendation, we will measure how many more vaccine skepticism videos YouTube recommends to you afterwards. This will give us some idea of whether your dislike is being respected. To measure this precisely, we need to know “the counterfactual”: what would have happened if you hadn’t sent that dislike? This is where the control group comes in! Participants in that group will also be rejecting recommendations, but no real messages will be sent to YouTube, so the recommendations they receive can act as a reference for comparison.
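To make the random assignment concrete, here is a minimal Python sketch. It is not the extension's actual code: the arm identifiers, the `assign_arm` and `assign_all` helpers, and the equal weighting of the six groups are all assumptions made for illustration.

```python
import random
from collections import Counter

# Illustrative identifiers for the six groups described above (names are assumptions).
ARMS = [
    "control",
    "dislike",
    "history",
    "not_interested",
    "dont_recommend",
    "no_button",
]


def assign_arm(rng: random.Random) -> str:
    """Pick one arm uniformly at random (equal weighting is an assumption)."""
    return rng.choice(ARMS)


def assign_all(participant_ids, seed=0):
    """Assign every participant to an arm, reproducibly for a given seed."""
    rng = random.Random(seed)
    return {pid: assign_arm(rng) for pid in participant_ids}


# Hypothetical participant IDs, used only to show the resulting group sizes.
assignments = assign_all([f"p{i}" for i in range(6000)], seed=42)
counts = Counter(assignments.values())
print(counts)
```

Because each participant lands in a group by chance alone, the groups should be roughly the same size and, more importantly, similar in every respect other than what the button does.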

Crowdsourced data and transparency

There are no public stats for YouTube in particular, but Google runs many thousands of experiments on their users each year. However, YouTube’s experimentation will always be deployed for YouTube’s own interests, and there is a critical public interest gap to be filled. Independent third-party research can address this, by identifying risks and harms to individuals and groups that can arise on, through, and because of YouTube, and which would otherwise happen in the dark. This study is unique as we are able to run our own experiment on YouTube with the cooperation of you as a YouTube user, but without having to rely on the cooperation of YouTube itself.

This sort of crowdsourced research is powerful and is especially essential in the absence of meaningful platform transparency requirements. The recently-passed Digital Services Act in the EU has started us on the path towards greater transparency from the platforms that influence our societies, but in the meantime, crowdsourced research like this can help advance the public interest and ensure platforms aren’t left to mark their own homework.

Analysis

Ease of use

YouTube’s current design does not give people many opportunities to provide feedback, nor does it encourage people to actively shape their experience. The existing YouTube design requires at least two clicks for a user to send negative feedback on a recommendation.

To address this challenge, we implemented an alternative button in our extension through which people can submit feedback. The “Stop Recommending” button that RegretsReporter adds potentially improves upon YouTube’s current design by letting people submit feedback with a single click. But we would like to know whether this design translates to a better experience: do users submit more feedback when this button is available?

The experiment makes this straightforward. The extension tracks how frequently participants are using YouTube’s user control mechanisms — both the “Stop Recommending” button and YouTube’s native controls like “Dislike” and “Not Interested”. We can compare how much user feedback is submitted by participants in the no button experiment group, who did not see the “Stop Recommending” button, to those in the other arms.
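As a rough illustration of that comparison, the sketch below averages feedback events per participant for the no button group and for everyone who saw the button. The record layout, the `feedback_rate_by_button` helper, and the toy numbers are invented for the example; the real analysis may well normalize by usage or apply a statistical test.

```python
from statistics import mean


def feedback_rate_by_button(records):
    """Average feedback events per participant, split by whether the button was shown.

    records: iterable of (arm, feedback_events) pairs, one per participant.
    """
    without = [n for arm, n in records if arm == "no_button"]
    with_button = [n for arm, n in records if arm != "no_button"]
    return {"no_button": mean(without), "button_shown": mean(with_button)}


# Toy data, made up purely to show the shape of the calculation.
toy = [
    ("no_button", 1),
    ("no_button", 3),
    ("dislike", 4),
    ("control", 6),
    ("history", 5),
]
rates = feedback_rate_by_button(toy)
print(rates)
```

Note that the control group counts as "button shown" here: those participants still see and press the button, even though nothing is sent to YouTube.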

Respect for user control

To measure the degree to which YouTube respects user control, remember our example. Say that you have rejected a vaccine skepticism video by pressing the “Stop Recommending” button. The extension will then observe what recommendations are subsequently made by YouTube and whether any of them are also vaccine skepticism videos. This measurement will have different interpretations depending on which experiment group you are in:

If you are in the control (or placebo) group, rejecting a recommendation doesn’t actually send any message to YouTube, so the recommendations you receive will be whatever YouTube would normally have made if you hadn’t submitted any feedback.

If you are in the dislike group, rejecting a video will send a dislike message to YouTube, so your recommendations will change to whatever degree YouTube respects that dislike message.

By comparing what happens to participants in these two groups (and similarly for the other groups), we can make the “counterfactual comparison” that we mentioned above. What would have happened if I hadn’t sent the dislike message? The control group tells us. And this comparison allows us to measure how much impact dislikes have on YouTube’s recommendations.
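The counterfactual comparison can be sketched in a few lines of Python. This is a simplified illustration rather than the study's analysis code: the `similar_rate` and `estimated_effect` helpers, the input format, and the toy numbers are assumptions, and deciding which recommendations count as "similar" is a separate problem.

```python
from statistics import mean


def similar_rate(rejections):
    """Mean share of later recommendations that resemble the rejected video.

    rejections: list of (similar_recs, total_recs) pairs, one per rejection.
    """
    return mean(similar / total for similar, total in rejections)


def estimated_effect(treatment, control):
    """Compare a treatment arm against the control arm's counterfactual baseline."""
    baseline = similar_rate(control)
    treated = similar_rate(treatment)
    return {
        "baseline": baseline,
        "treated": treated,
        "absolute_reduction": baseline - treated,
    }


# Toy numbers, invented for illustration only.
control_obs = [(2, 10), (4, 10)]  # control group: no message reaches YouTube
dislike_obs = [(1, 10), (2, 10)]  # dislike group: a dislike is actually sent
effect = estimated_effect(dislike_obs, control_obs)
print(effect)
```

In this made-up example, the control group sees similar videos in about 30% of later recommendations and the dislike group in about 15%, so the estimated effect of the dislike is a reduction of roughly 15 percentage points.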

Of course, it’s important to make clear that we’re not just counting vaccine skepticism videos. For any video that you reject, we want to measure how many videos YouTube is subsequently recommending that are similar to that video. This requires a general way to measure how similar YouTube videos are to each other, which we call a semantic similarity model. Developing this model is a fascinating tale of machine learning, large language models, and amazing research assistants. I’ll be exploring this in detail in a follow-up piece which will be live in the coming weeks.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.