In an era of AI-powered site reliability engineering (SRE), it’s easy to focus on automation, scaling and performance — but we must not ignore security practices. All too often, security is treated as an isolated discipline, handled reactively or downstream. But this approach doesn’t scale in a world where incidents — both reliability and security — are becoming increasingly complex.

In fact, as we shift left on application and infrastructure reliability and resilience using AI, proactive security incident detection, resolution and prevention must become a core pillar of modern SRE practice.

AI agents in SRE: The missing context is security

As a leading managed service provider embedding AI agents into our SRE value chain, we need to address a key question:

How might we connect security context to SRE AI agents so that they can take meaningful response actions to resolve security-related incidents?

Traditional AI agents are often general-purpose. While they’re helpful for log summarization, incident deduplication or alert triage, they lack the security knowledge and context needed to distinguish a performance or functional incident from a security risk.

Closing that gap requires a few security-related capabilities:

Change history inspection. This is where detailed histories of changes made to cloud resources (e.g., IAM roles, security groups, Lambda policies) are retrieved to help us understand if recent updates introduced any vulnerabilities.

Time-based alert correlation. Here, we identify existing alerts triggered within the same timeframe as the incident, in an attempt to correlate symptoms and validate whether security threats have already been detected.

Behavioral detection rule authoring. This involves creating and registering detection rules based on suspicious patterns (e.g., repeated failed logins and anomalous API calls) to prevent them from recurring and expand threat coverage.

Activity log analysis. This is where logs across sources such as VPC Flow Logs, Kubernetes audit logs, and more are analyzed to understand resource or user behavior in context — not just what happened, but why.
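To make one of these capabilities concrete, time-based alert correlation can be sketched in a few lines of Python. This is a minimal illustration rather than a production implementation: the alert shape (`id`, `title`, `created_at`) and the 30-minute default window are assumptions made for the example, not fields of any particular SIEM.

```python
from datetime import datetime, timedelta, timezone


def correlate_alerts(incident_time, alerts, window_minutes=30):
    """Return alerts created within +/- window_minutes of the incident, oldest first."""
    window = timedelta(minutes=window_minutes)
    nearby = [a for a in alerts if abs(a["created_at"] - incident_time) <= window]
    return sorted(nearby, key=lambda a: a["created_at"])


# Hypothetical data: one incident and three alerts from different sources.
incident = datetime(2025, 1, 10, 12, 0, tzinfo=timezone.utc)
alerts = [
    {"id": "a1", "title": "IAM policy changed", "created_at": incident - timedelta(minutes=12)},
    {"id": "a2", "title": "Disk usage high", "created_at": incident - timedelta(hours=5)},
    {"id": "a3", "title": "Repeated failed logins", "created_at": incident + timedelta(minutes=4)},
]

related = correlate_alerts(incident, alerts)
print([a["id"] for a in related])  # ['a1', 'a3']
```

A symmetric window is the simplest choice. In practice a team might prefer to look further back than forward, since the change that introduced a vulnerability usually precedes the symptoms.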

From an AI-driven operations perspective, the capability gap often results in increased toil, manual work, context-switching and cross-team handoffs. All of these delay resolution and introduce risk.

To overcome this, we must enable dynamic, context-aware knowledge sharing between systems, AI agents and human experts — not only to detect threats earlier but also to respond in ways that reflect both operational reliability and security posture.

When a lack of context becomes the blocker, preventing agents or engineers from taking informed, timely action, integrating Model Context Protocol (MCP) servers with your AI agents can bridge that gap. MCP enables AI agents to access and interact with the right tools and telemetry, such as change logs, activity traces or SIEM alerts, so they can operate with the context required to understand, decide and act in security-sensitive scenarios.
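Under the hood, an MCP client asks a server to run a tool by sending a JSON-RPC 2.0 request with the method `tools/call`. The sketch below only builds such a message so its shape is visible; it does not open a connection. The tool name `list_alerts` appears later in this article, while the `status` argument is a hypothetical placeholder and not a confirmed parameter of that tool.

```python
import json


def build_tool_call(request_id, tool_name, arguments):
    """Build an MCP 'tools/call' request as a JSON-RPC 2.0 message."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# The argument below is an assumption for illustration only.
request = build_tool_call(1, "list_alerts", {"status": "OPEN"})
print(json.dumps(request, indent=2))
```

In a real agent, an MCP client library would normally construct and send this message, and the model would only choose the tool name and arguments.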

The case for security MCP

By bridging AI and security semantics, MCP helps AI agents move beyond merely responding to incidents based on predefined functions, service level agreements (SLAs) or standard operating procedures (SOPs).

Instead, agents can begin to ‘understand’ incidents through the lens of organizational security, and ultimately evolve to proactively suggest risk-mitigation actions grounded in historical incident patterns and real-time security intelligence. Providers of SRE and security engineering toolchains are already building for this new paradigm; Panther MCP is one of them.

Panther MCP: Operationalizing security context using AI

One compelling example is Panther MCP, an open-source server built with Block. Panther previously abstracted away the need for deep security expertise or Python fluency to manage detection rules. Now, with MCP, authenticated LLMs can write and tune detections, query logs, triage alerts and resolve incidents — dramatically accelerating response time.
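For readers who have not seen a detection before, here is roughly what an agent might draft. It assumes Panther's Python rule convention of a `rule(event)` function that returns a boolean, plus an optional `title(event)`. The event fields follow the shape of AWS CloudTrail console login records, and the sample events are invented for the example.

```python
def rule(event):
    """Match failed AWS console logins in CloudTrail-shaped events."""
    if event.get("eventName") != "ConsoleLogin":
        return False
    response = event.get("responseElements") or {}
    return response.get("ConsoleLogin") == "Failure"


def title(event):
    """Human-readable alert title that includes the source IP."""
    source_ip = event.get("sourceIPAddress", "unknown IP")
    return f"Failed console login from {source_ip}"


# Invented sample events; plain dicts stand in for the platform's event objects.
failed = {
    "eventName": "ConsoleLogin",
    "sourceIPAddress": "203.0.113.7",
    "responseElements": {"ConsoleLogin": "Failure"},
}
succeeded = {
    "eventName": "ConsoleLogin",
    "sourceIPAddress": "203.0.113.7",
    "responseElements": {"ConsoleLogin": "Success"},
}

print(rule(failed), rule(succeeded))
print(title(failed))
```

Note that this function only matches a single failed login. Detecting repeated failures would most likely rely on a threshold configured alongside the rule rather than on logic inside it.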

With agent tools like Goose or Cursor, it's now possible to spin up AI agents that autonomously monitor and interact with SIEMs.

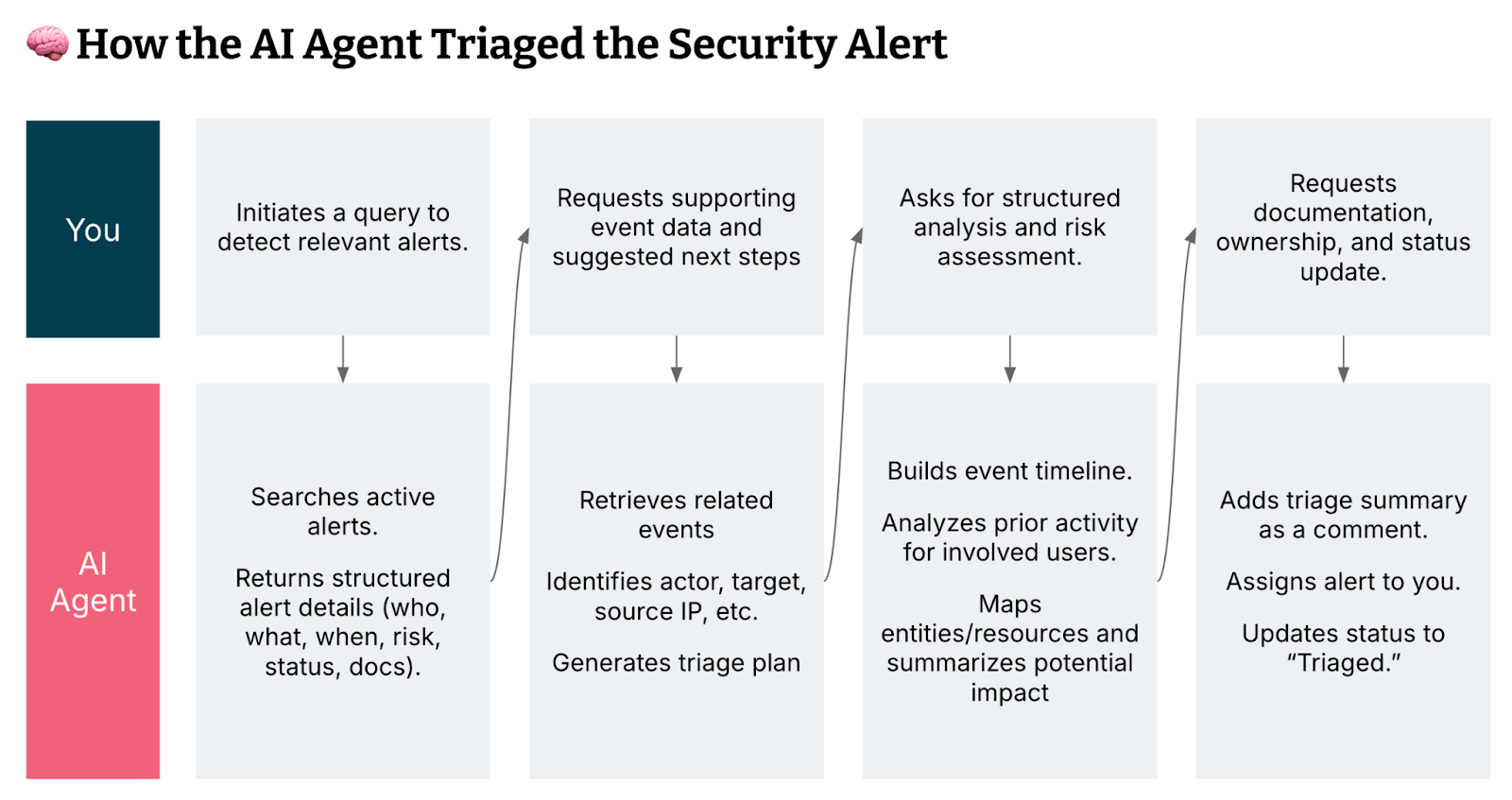

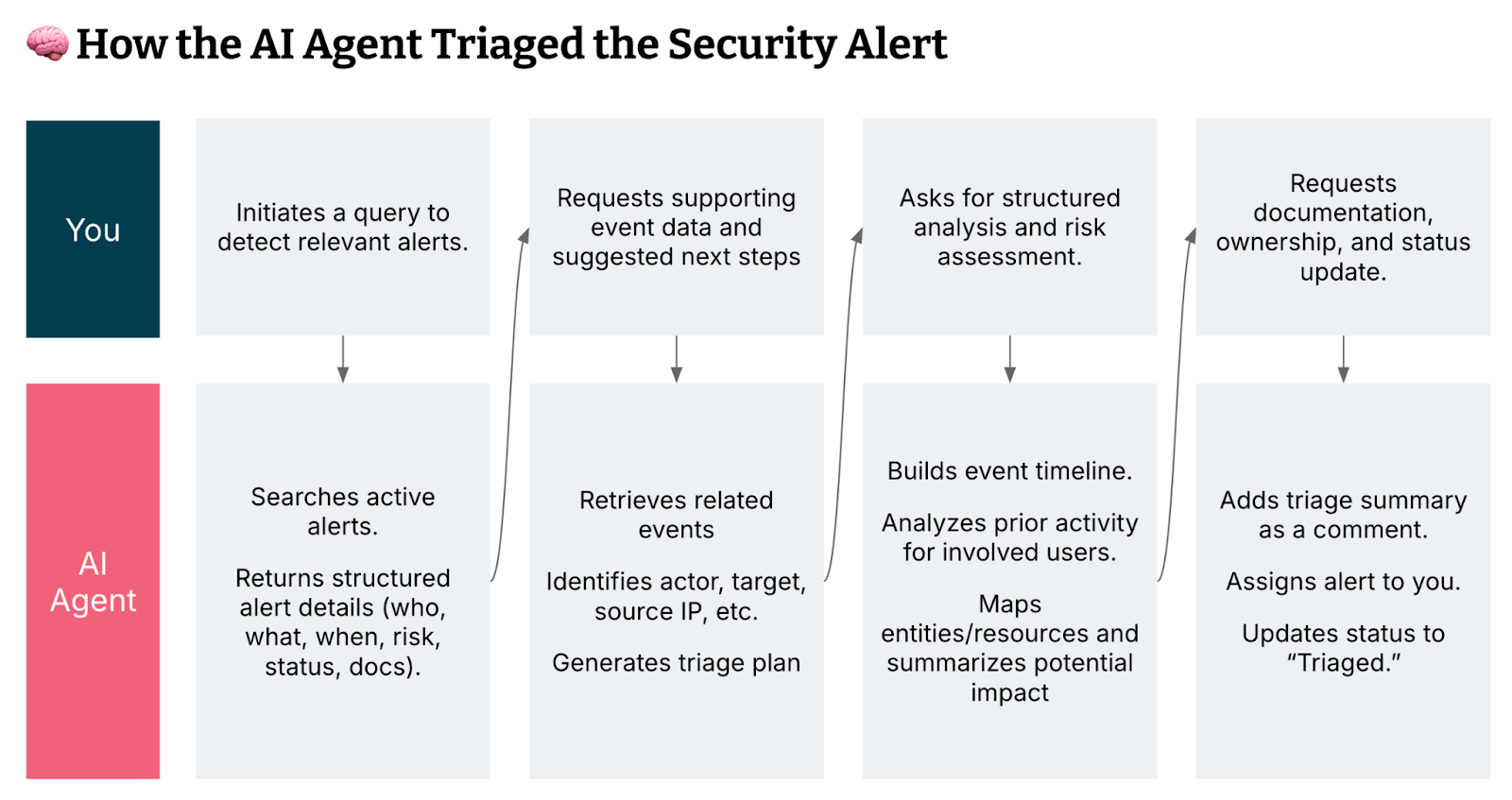

At Thoughtworks Managed Services, we prioritize security for clients in incident-critical industries. We're exploring an agentic approach to security investigations using Panther’s MCP capabilities. Our MCP-powered agent can:

Search active alerts and extract structured context (who, what, when, risk).

Retrieve related events and identify key actors, targets and IPs.

Build activity timelines and map relationships across entities.

Analyze prior user behavior to assess risk.

Generate triage plans and summarize potential impact.

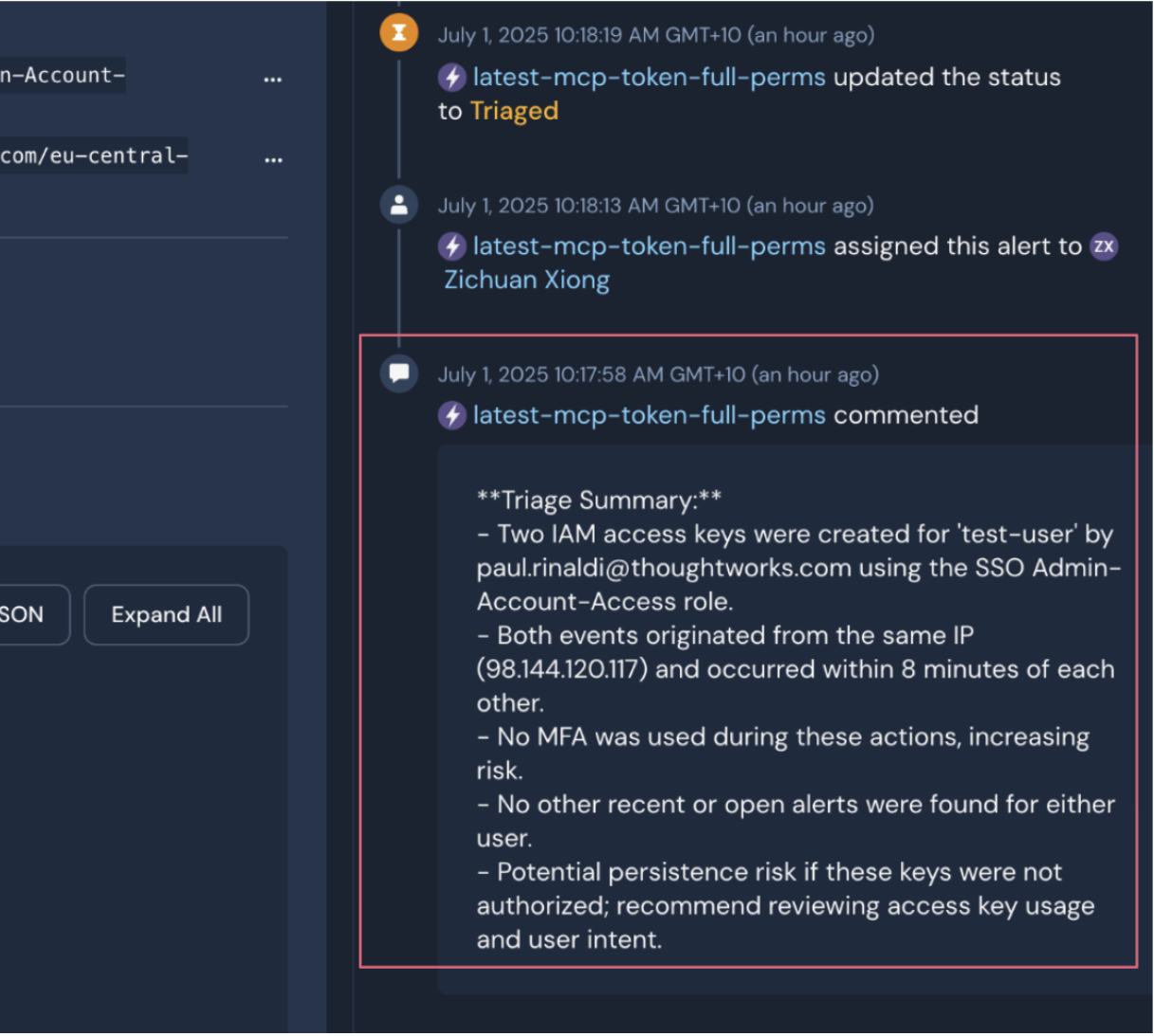

Comment on alerts with a triage summary, assign ownership and update status to “Triaged”.

This enables faster, more consistent and context-rich security investigations.
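As a rough illustration of the first step, extracting structured context means reducing a raw alert to the handful of fields a responder needs first. The sketch below assumes a hypothetical alert dictionary with `actor`, `title`, `created_at` and `severity` keys; real alert payloads will differ.

```python
from dataclasses import dataclass


@dataclass
class AlertContext:
    who: str
    what: str
    when: str
    risk: str


def extract_context(alert):
    """Reduce a raw alert dict to the who/what/when/risk fields used in triage."""
    return AlertContext(
        who=alert.get("actor", "unknown"),
        what=alert.get("title", "untitled alert"),
        when=alert.get("created_at", "unknown"),
        risk=str(alert.get("severity", "unknown")).upper(),
    )


# Hypothetical alert payload; the key names are assumptions for this example.
raw_alert = {
    "actor": "alice",
    "title": "API key created for another user",
    "created_at": "2025-01-10T12:04:00Z",
    "severity": "high",
    "extra": {"region": "us-east-1"},
}

print(extract_context(raw_alert))
```

Defaulting missing fields to "unknown" keeps the summary explicit about gaps, which matters when a human will act on it.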

By co-piloting with the AI agent, our human responder builds a new collaborative workflow — one where the agent can actively retrieve and reason with security context by invoking MCP-defined tools to gather relevant insights and telemetry. This is how two of them work:

How Panther MCP works in practice

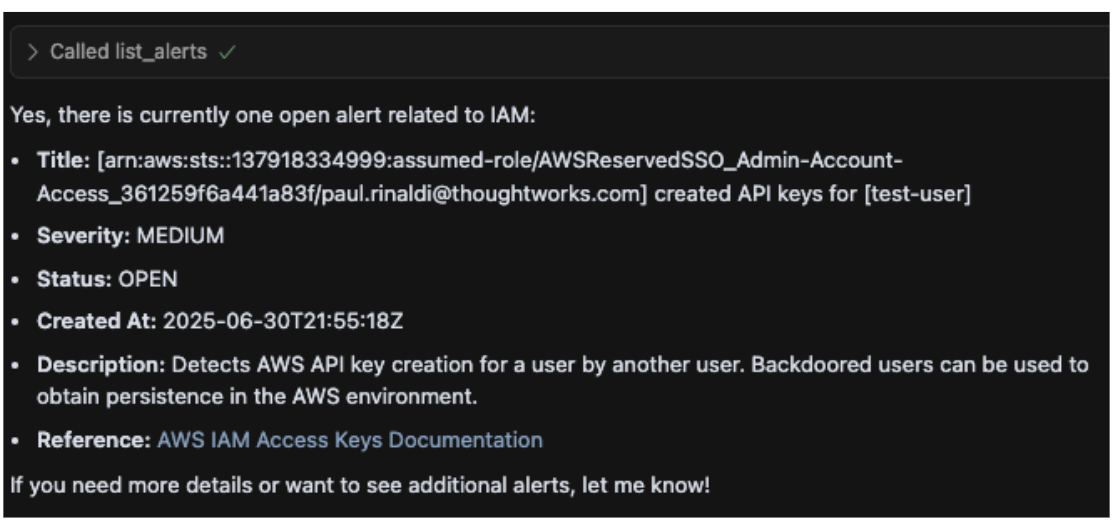

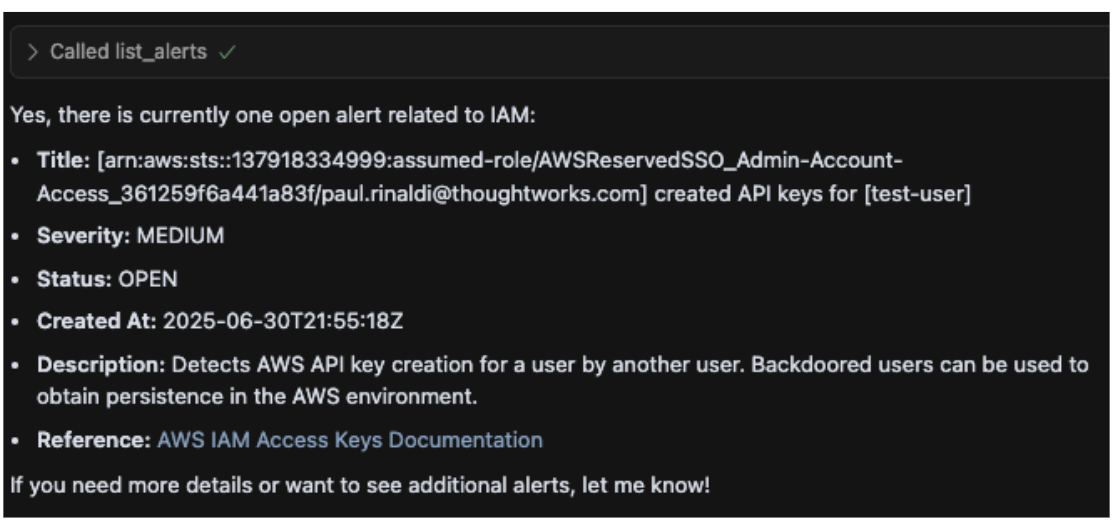

Step one: Retrieve open alerts

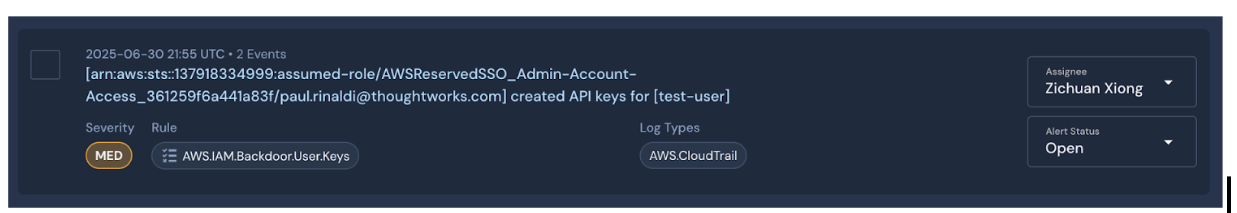

Prompt: Are there any open alerts created related to IAM?

Panther MCP Function Called: list_alerts

The AI agent used Panther MCP to retrieve a real-time IAM-related alert, providing structured details on a suspicious cross-user API key creation to kick off the triage.

Step two: Plan investigation

Prompt: Let's get the related events and then build a triage plan using available tools.

Panther MCP Function Called: get_alert_events

The AI agent retrieved and analyzed related IAM events, then generated a structured triage plan — including activity review, threat validation and remediation options — while prompting for prioritized next actions or automation.

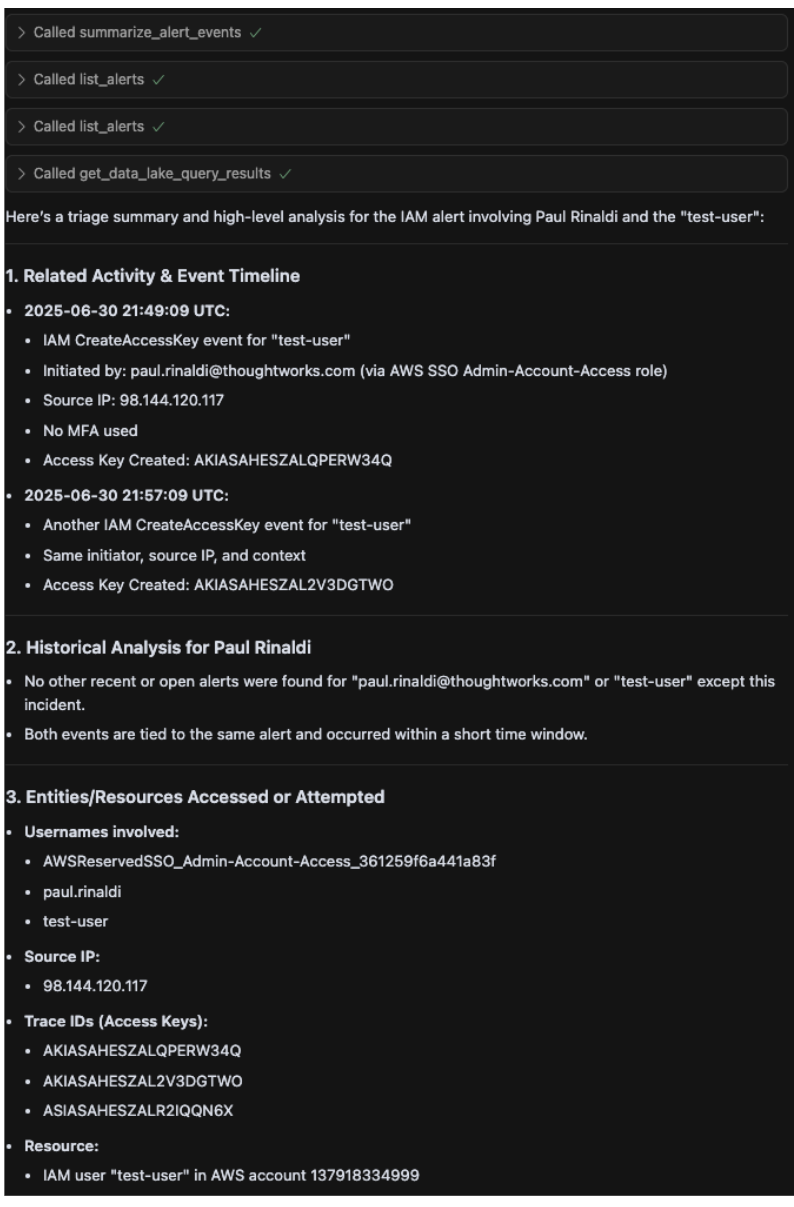

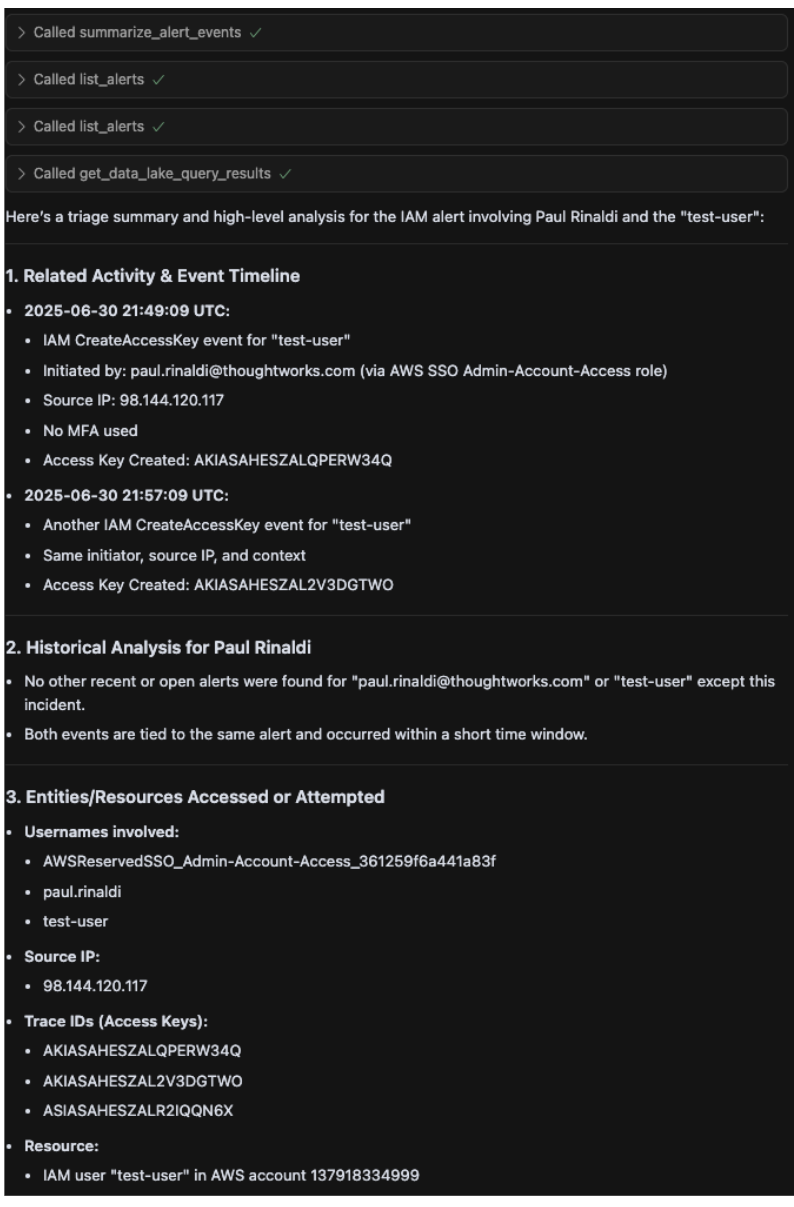

Step three: Perform investigation

Prompt: Triage the related activity to this alert, review historical analysis for this user, show which entities/resources were attempted access and provide a high-level event timeline and assess potential impact.

Panther MCP Functions Called: summarize_alert_events, list_alerts, get_data_lake_query_results

The AI agent then performed a full triage by analyzing event history, mapping involved entities, building a timeline, assessing persistence risk and recommending next steps based on potential unauthorized IAM activity.

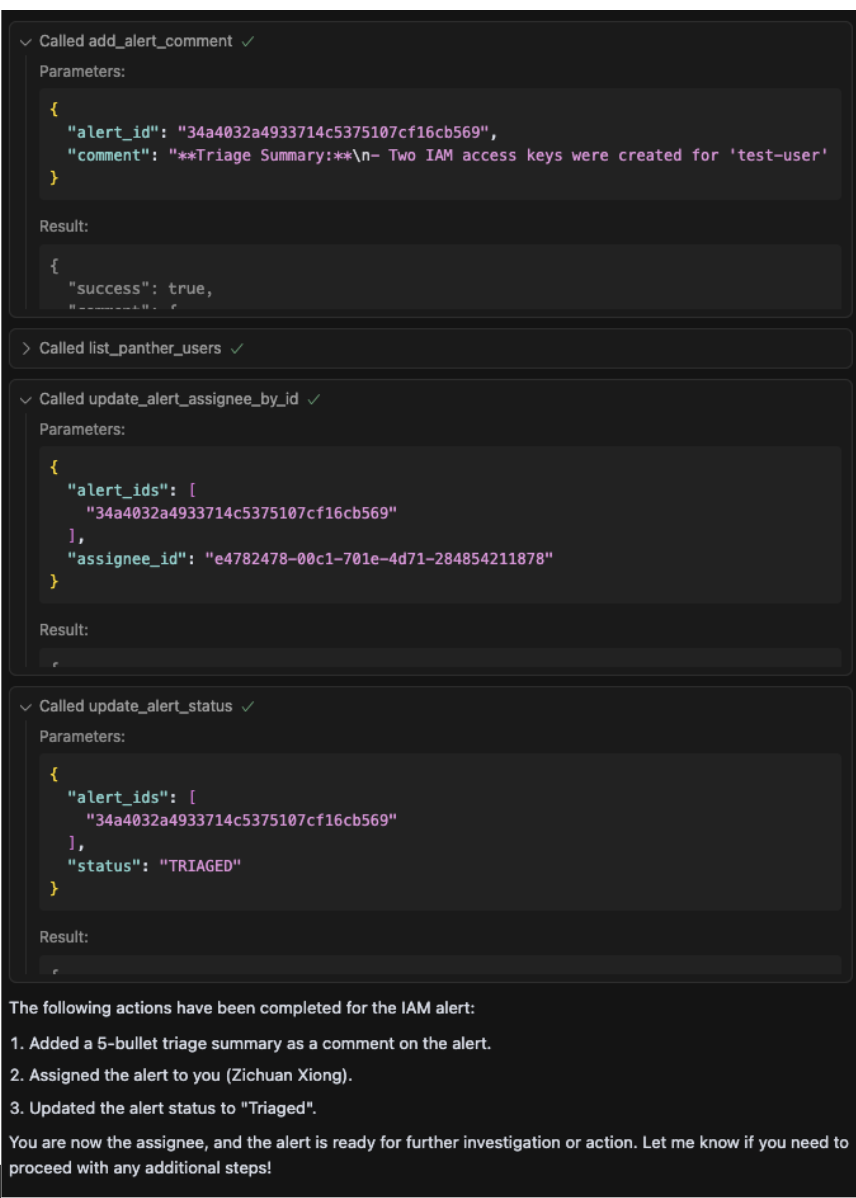

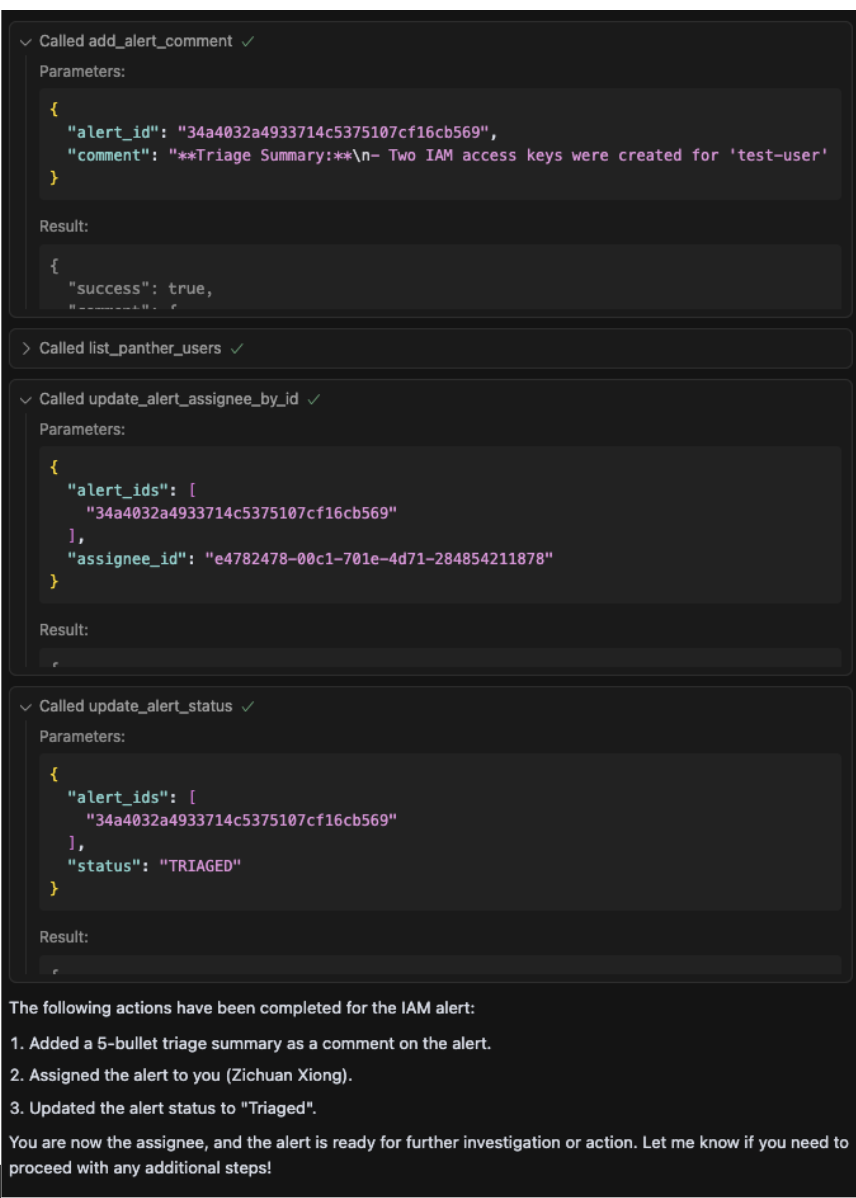
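The timeline and entity mapping in this step can be approximated with ordinary data wrangling once the events are retrieved. The following sketch assumes each event is a dictionary with `time`, `actor`, `action` and `resource` keys, which is a simplification invented for the example. The action names are real AWS IAM API actions, but the events themselves are made up.

```python
from collections import defaultdict


def build_timeline(events):
    """Sort events chronologically and map each actor to the resources it touched."""
    # ISO 8601 UTC timestamps in one consistent format sort correctly as strings.
    ordered = sorted(events, key=lambda e: e["time"])
    touched = defaultdict(set)
    for e in ordered:
        touched[e["actor"]].add(e["resource"])
    timeline = [f'{e["time"]} {e["actor"]} {e["action"]} {e["resource"]}' for e in ordered]
    entities = {actor: sorted(resources) for actor, resources in touched.items()}
    return timeline, entities


# Invented events, deliberately out of order.
events = [
    {"time": "2025-01-10T12:04:00Z", "actor": "alice", "action": "CreateAccessKey", "resource": "user/bob"},
    {"time": "2025-01-10T11:58:00Z", "actor": "alice", "action": "ListUsers", "resource": "iam"},
    {"time": "2025-01-10T12:06:00Z", "actor": "alice", "action": "AttachUserPolicy", "resource": "user/bob"},
]

timeline, entities = build_timeline(events)
for line in timeline:
    print(line)
print(entities)
```

The agent's value lies less in this mechanical step than in deciding which events to pull and how to interpret the resulting sequence.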

Step four: Take actions

Prompt: Ok, we are looking into this. Can you comment on the alert with a five bullet summary of your findings, assign the alert to me and set the status to Triaged?

Panther MCP Functions Called: add_alert_comment, list_panther_users, update_alert_assignee_by_id, update_alert_status

The AI agent documented key findings, updated ownership and changed the alert status to “Triaged.” This streamlines handoff and sets up the alert for next-step action.
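The order of these four calls matters: the agent has to look up the responder's user ID before it can assign the alert. A minimal sketch of that sequence follows. The tool names come from the step above, but every argument name (`alert_id`, `comment`, `assignee_id`, `status`) and the shape of the user list are assumptions, and `call_tool` is a hypothetical stand-in for whatever MCP client the agent uses.

```python
def record_triage(call_tool, alert_id, assignee_email, findings):
    """Comment on an alert, assign it to a user and mark it as triaged."""
    # Keep the comment to five bullets, mirroring the prompt above.
    comment = "\n".join(f"- {line}" for line in findings[:5])
    call_tool("add_alert_comment", {"alert_id": alert_id, "comment": comment})

    # Resolve the assignee's ID from their email before assigning.
    users = call_tool("list_panther_users", {})
    user_id = next((u["id"] for u in users if u["email"] == assignee_email), None)
    if user_id is None:
        raise ValueError(f"no user found for {assignee_email}")

    call_tool("update_alert_assignee_by_id", {"alert_id": alert_id, "assignee_id": user_id})
    call_tool("update_alert_status", {"alert_id": alert_id, "status": "TRIAGED"})
    return user_id


# A fake tool caller, so the sketch runs without a Panther instance.
calls = []


def fake_call_tool(name, arguments):
    calls.append(name)
    if name == "list_panther_users":
        return [{"id": "u-1", "email": "responder@example.com"}]
    return {"ok": True}


assigned = record_triage(
    fake_call_tool, "alert-123", "responder@example.com", ["Finding one", "Finding two"]
)
print(assigned, calls)
```

Injecting `call_tool` keeps the workflow testable and makes it easy to add an approval step before any call that changes alert state.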

This AI agent isn’t just responsive — it’s context-aware and proactive, capable of initiating security-related actions before a human responder is even paged.

Looking ahead: Security-aware SRE automation

By embedding MCP-enabled security context into SRE AI agents, organizations can significantly reduce reliance on security experts for detecting, escalating, mitigating and preventing security risks.

This transformation moves teams beyond alert fatigue, toward a state of actionable foresight, not just in reliability engineering but across the broader operational and security spectrum.

As the traditional boundaries between observability, operations and security continue to blur, many cloud engineering teams are recognizing that the real source of toil isn’t scale — it’s context-switching.

In this environment, context-aware AI agents, and specifically security-aware agents enabled by MCP servers like Panther MCP, represent more than a complementary innovation: they’re a strategic necessity for building resilient, intelligent and secure systems.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.