The software we use has become so much more than a collection of digital tools. It has reshaped how we live, interact, think, and behave in ways that few would have thought possible.

Now, the decisions made by software teams don’t just impact their organizations — they can transform the daily lives of billions of people, for better or worse. And as a result, the ethics of technology has quite rightly been thrust into the spotlight.

As technologists, we have a responsibility to make sure that what we build doesn’t harm people, society, or the environment — a concept broadly referred to as responsible tech. The good news is that by following a few simple golden rules, embedding responsible tech into our work can be surprisingly easy and deeply rewarding.

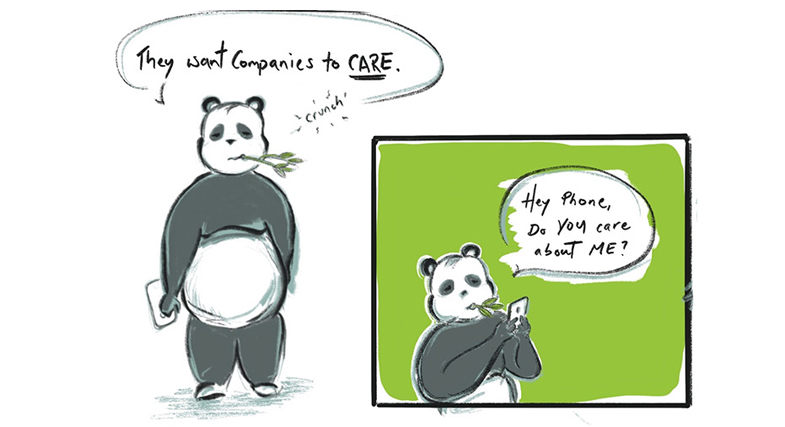

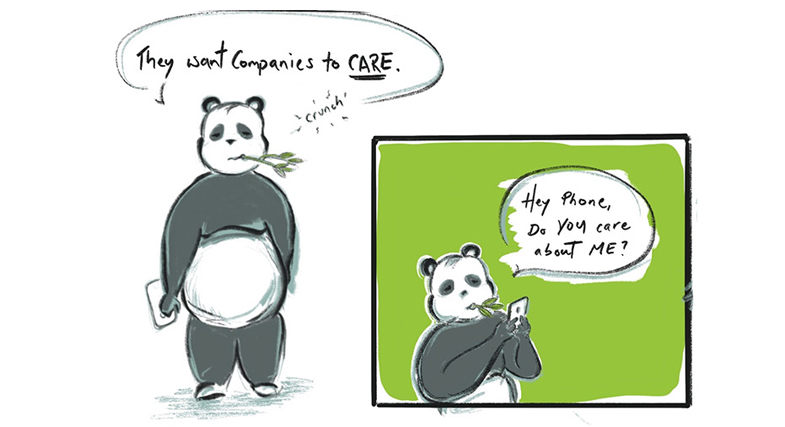

To explore three of these golden rules, we enlisted the help of a new friend: Pete. A software developer, Pete considers himself an ethical panda. If you’re as active on social media as Pete is, you may already have seen some of the ethical dilemmas he’s faced.

In this series of articles, we’ll dive into each of Pete’s adventures, looking at the story behind the strip and exploring what software developers and designers can learn from Pete’s experiences.

The first golden rule: Align your hands with your heart

Today, most technology organizations recognize the importance of responsible tech and acknowledge the part they need to play in safeguarding the wellbeing of their users. Increasingly, that’s reflected in their mission and values.

But laying out your values — no matter how noble or ethical they may be — isn’t meaningful if your actions don’t align with them. That’s the first dilemma Pete the ethical panda came up against. And if you’re an active WhatsApp user, it’s one you may well recognize.

In early 2021, WhatsApp announced changes to its privacy policy, specifically to how it shares data and integrates with other Facebook products. It asked users to accept the updated privacy terms, something that millions of them weren’t happy to do. While WhatsApp had faced criticism for other reasons since its launch in 2009, this policy change caused an unprecedented consumer backlash that took its leadership by surprise. But it shouldn’t have.

The move upset customers for two big reasons. Firstly, many of WhatsApp’s users choose it for the encryption and data protection it offers. For them, a move towards more data sharing wasn’t just a step in the wrong direction; it was fundamentally at odds with the app’s purpose and ethos.

Secondly, the step appeared out of line with Facebook’s own values. ‘Building social value’ is one of the company’s five core values: "We expect everyone at Facebook to focus every day on how to build real value for the world in everything they do."

Not only did this move appear to create no social value, but many also believed it destroyed value the brand had previously created. The company appeared to be trading the privacy and control it offered customers for more data that it could operationalize in new ways and, ultimately, profit from.

Interestingly, the changes themselves weren’t that significant. It was the perceived disconnect between the organization’s values and its actions that did the damage. By acting contrary to its stated values, the company signaled to its users that they couldn’t trust it to meet its responsibilities and look after their best interests.

Since then, WhatsApp has seen millions of users flock to alternatives like Signal and Telegram, apps that they believe better meet their demands for privacy, security, and control.

What developers can do for Pete

The big lesson for developers to take away from Pete’s story is that responsible tech is a continuous commitment. If you make responsibility part of your vision, it needs to factor into every decision you make. Getting this right also pays off: in a study by NielsenIQ, "over 56% of respondents stated a willingness to pay more for products and services if they knew they were dealing with an organization with strong social values."

Conversely, as the WhatsApp story showed, it only takes a few seconds to lose trust that took years to build up. And once that trust is gone and people stop believing that you’re truly committed to acting in their best interests, it’s extremely hard to get back.

If your hands aren’t aligned with your heart, users like Pete will quickly start asking big questions such as “Does this company really care about me?” And if they can’t see evidence of action that backs up your words, the answer might not be good for your organization.

More golden rules will be published soon – stay tuned.

To learn more about responsible tech and what it takes to enable it, download a free copy of the Thoughtworks Responsible Tech Playbook.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.