Virtual assistants use natural language processing (NLP) and machine learning algorithms to understand and respond to user queries. A crucial part of today’s virtual assistants — which leverage large language models — is retrieval-augmented generation, or RAG.

In this blog post I’ll explain how RAG can be used in virtual assistants to improve a user’s experience. The benefits of such a technique should be clear — and demonstrate how important RAG is in making generative AI successful.

To begin, let’s think through the steps the virtual assistant needs to perform when a user enters a query:

It needs to parse the query.

Then search the catalog and extract relevant information.

Next, search the product catalog to bring back relevant products with their details.

Then ask the user relevant follow-up questions.

Amend prompts to retrieve related products from the catalog.

Refine the user’s initial query to understand their intent better.

Identify relevant products based on user input.

Continue the shopping experience workflow for adding products or finalizing the sale.

Let’s now look at what this process looks like in more detail.

Preparing a catalog

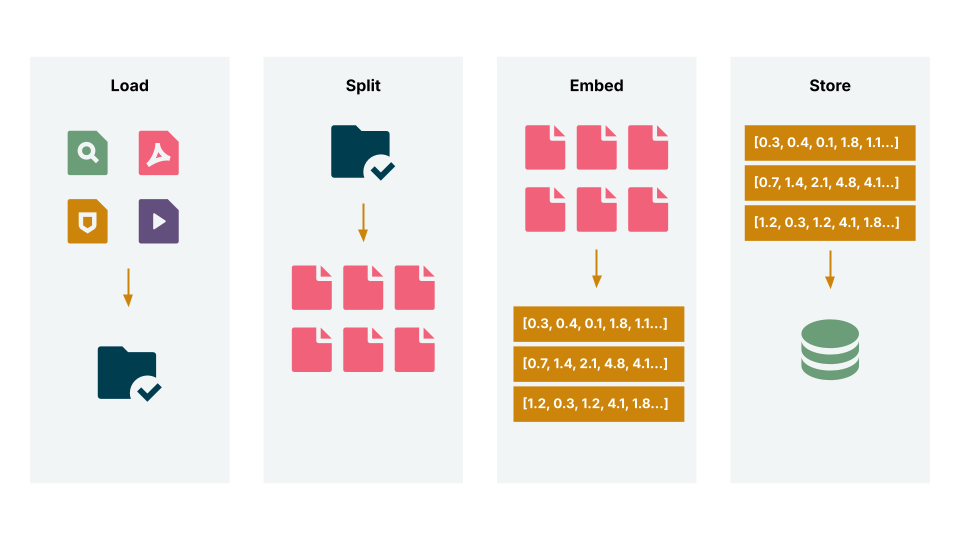

First, we need to prepare a catalog which the virtual assistant will search in order to provide a response to a user’s query.

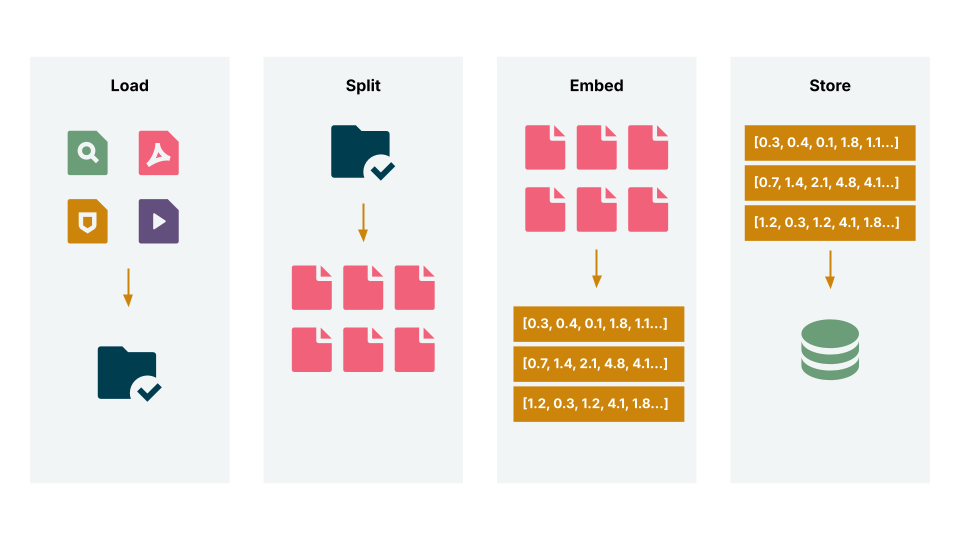

Typically, the catalog will be built up from a collection of documents, which may include product information, marketing materials and other content.

This text can then be split into smaller chunks, a process known as splitting (or chunking).
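As a rough illustration of splitting, here is a minimal sketch that cuts text into fixed-size character windows with some overlap. The function name `split_text` and the `chunk_size` and `overlap` parameters are assumptions made for this example; frameworks such as Langchain provide more careful text splitters that respect sentence and paragraph boundaries.

```python
def split_text(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into overlapping character windows (illustrative helper)."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    step = chunk_size - overlap  # how far each new window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once the current window has reached the end of the text.
        if start + chunk_size >= len(text):
            break
    return chunks


# Tiny example: windows of 4 characters that share 1 character with the previous one.
print(split_text("abcdefghij", chunk_size=4, overlap=1))
```

The overlap means a sentence that straddles a chunk boundary still appears intact in at least one chunk, at the cost of storing some text twice.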

These split documents can then be converted into embeddings using a transformer model, such as Sentence Transformer or Titan Embeddings.

These embeddings are stored in a vector database, such as Chroma DB or FAISS. Keeping the vectors in a vector database makes similarity search efficient during retrieval.

We can then create an index using the chunks and the corresponding embeddings.
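To make the embedding and indexing steps concrete, here is a small sketch under loud assumptions: `toy_embed` is a hashed bag-of-words stand-in for a real embedding model (such as Sentence Transformer or Titan Embeddings), and a plain Python list plays the role of the vector database. The sample chunks are invented for illustration.

```python
import hashlib
import math

DIM = 64  # assumed toy dimensionality; real models use hundreds of dimensions


def toy_embed(text: str, dim: int = DIM) -> list[float]:
    """Hashed bag-of-words vector, L2-normalised. NOT a real embedding model."""
    vec = [0.0] * dim
    for token in text.lower().split():
        # md5 gives a stable bucket across runs (unlike Python's built-in hash()).
        bucket = int(hashlib.md5(token.encode("utf-8")).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def build_index(chunks: list[str]) -> list[tuple[str, list[float]]]:
    """Pair each chunk with its embedding; a vector database does this in practice."""
    return [(chunk, toy_embed(chunk)) for chunk in chunks]


chunks = [
    "Espresso machine with 15 bar pump and milk frother",
    "Wireless mouse with ergonomic grip",
    "Mechanical keyboard with backlit keys",
]
index = build_index(chunks)
print(len(index), "chunks indexed, each with", len(index[0][1]), "dimensions")
```

The important design point is that the chunk text and its vector are stored together, so a match on the vector can be turned straight back into text for the prompt.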

Writing relevant prompts

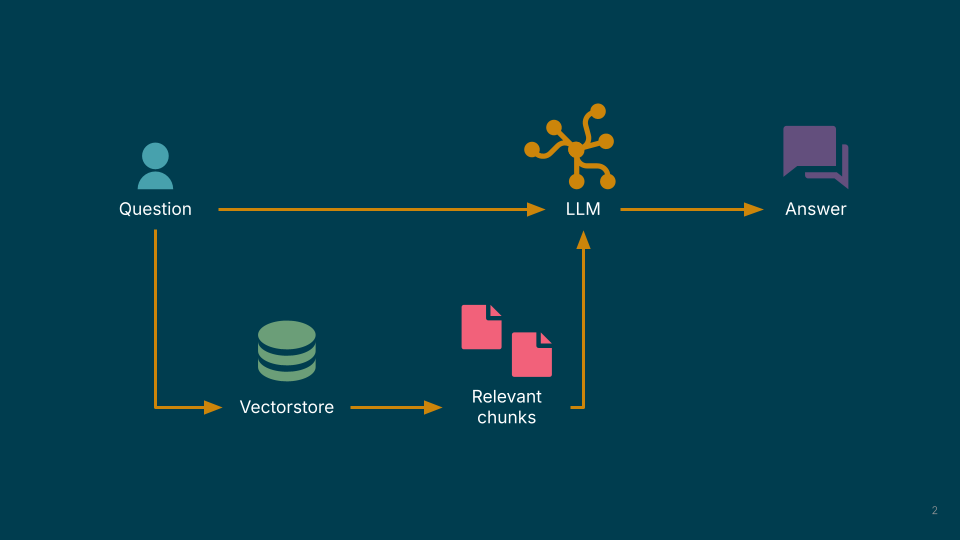

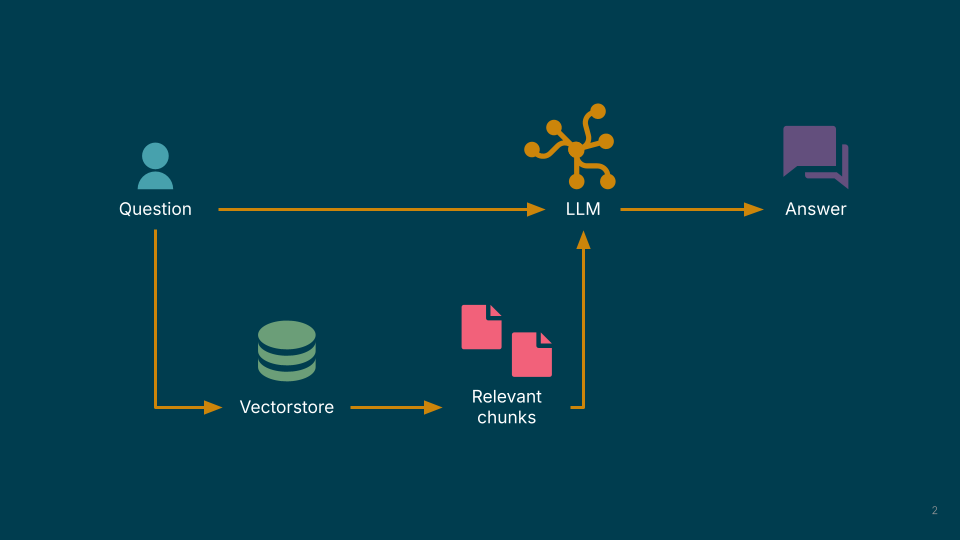

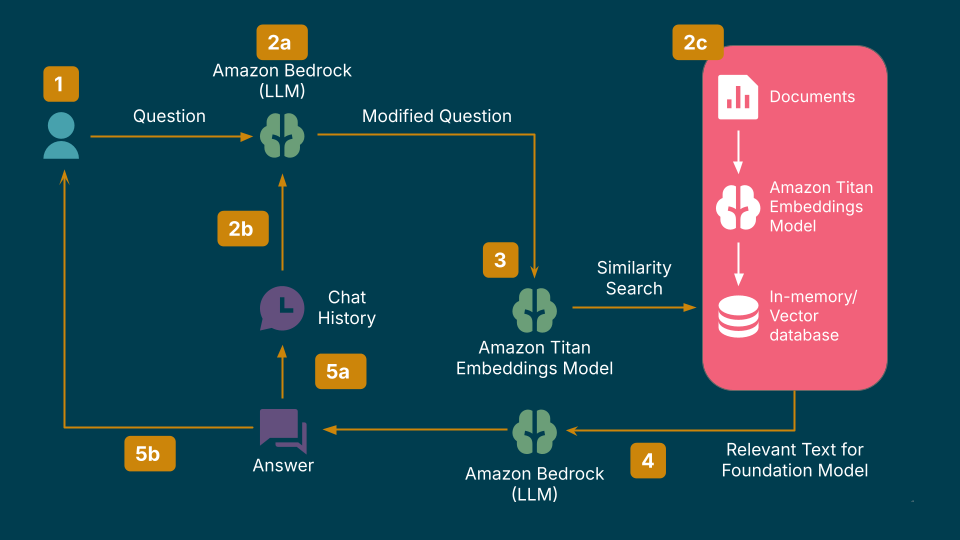

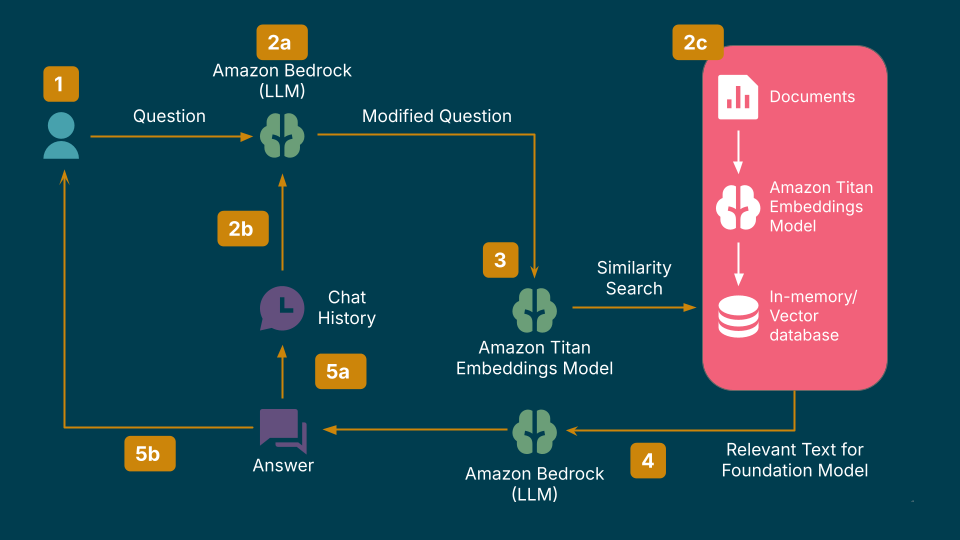

Next, we need to write prompts that take a user query as an input and, using the context retrieved from the embeddings stored in the vector database, help the LLM to respond effectively. Once we’ve done this, we’re ready to run a question-answer session with the virtual assistant.

Once we enter the initial question, the following steps are executed:

An embedding of the input question is created.

The question embedding is then compared with the other embeddings in the index.

The top-N most relevant document chunks are then retrieved.

Those chunks are added as relevant context in the prompt. These top-N retrieved documents are candidates for the answer.

Send the prompt to whichever LLM you’re using; it then generates the response text based on the documents that were retrieved.

The final contextual answer is then given based on the documents retrieved.
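The steps above can be sketched in a few lines. This is a simplified illustration, not a production pipeline: `embed` uses plain word counts instead of a real embedding model, the catalog chunks and the prompt wording are invented, and the final call to the LLM is left out because it depends on the provider you use.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, chunks: list[str], top_n: int = 2) -> list[str]:
    """Embed the question, compare it with every chunk, return the top-N chunks."""
    q = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:top_n]


def build_prompt(question: str, context_chunks: list[str]) -> str:
    """Add the retrieved chunks to the prompt as context (hypothetical template)."""
    context = "\n".join(f"- {c}" for c in context_chunks)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


catalog_chunks = [
    "wireless mouse with ergonomic grip",
    "mechanical keyboard with backlit keys",
    "laptop stand made of aluminium",
]
question = "which keyboard has backlit keys"
top_chunks = retrieve(question, catalog_chunks, top_n=2)
prompt = build_prompt(question, top_chunks)
print(prompt)  # this string is what would be sent to the LLM
```

In a real deployment the `sorted` call over every chunk would be replaced by a nearest-neighbour query against the vector database.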

Example technology stack

There are many different technology stacks you could use for this kind of project, but here’s what I’ve employed when performing this kind of task.

Amazon Bedrock. It’s useful here because it provides access to foundation models from both third-party providers and Amazon.

Claude. It’s a powerful large language model which provides a good foundation for conversational applications.

Langchain. This is a framework that helps developers integrate LLMs into applications. It can help you implement the components required for the virtual assistant to work.

Increasing the diversity of the virtual assistant’s recommendations

Sometimes, users may want something different from, or additional to, whatever the virtual assistant has delivered to them. In other words, sometimes it’s necessary for the virtual assistant to deliver results that go beyond what is strictly relevant.

One way of doing this is to use a technique called Maximal Marginal Relevance (MMR).

MMR can be expressed with the equation below:

\[\text{MMR} = \arg\max_{D_i \in R \setminus S} \left[ \lambda \text{Sim}_1(D_i, Q) - (1-\lambda) \max_{D_j \in S} \text{Sim}_2(D_i, D_j) \right]\]

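Here \(R\) is the set of retrieved candidate documents, \(S\) is the set of documents already selected, and \(\lambda\) (between 0 and 1) sets the balance between relevance and diversity. The equation describes a weighted combination of two terms:

How similar document \(D_i\) is to the query \(Q\).

How similar document \(D_i\) is to the documents \(D_j\) that have already been selected. This term is subtracted, so it rewards dissimilarity.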

It’s this linear combination that’s “marginal relevance.” In other words, a document has high marginal relevance if it’s both relevant to the query and contains minimal similarity to previously selected documents.
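A minimal sketch of greedy MMR selection follows, assuming cosine similarity is used for both Sim1 and Sim2. The function names, the default `lam` value and the two-dimensional vectors are illustrative assumptions, not taken from any particular library.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two dense vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def mmr(query_vec: list[float], doc_vecs: list[list[float]], k: int, lam: float = 0.5) -> list[int]:
    """Greedily pick k document indices by maximal marginal relevance."""
    selected: list[int] = []
    remaining = list(range(len(doc_vecs)))
    while remaining and len(selected) < k:
        def score(i: int) -> float:
            relevance = cosine(doc_vecs[i], query_vec)
            # Highest similarity to anything already picked; 0 when nothing is picked yet.
            redundancy = max((cosine(doc_vecs[i], doc_vecs[j]) for j in selected), default=0.0)
            return lam * relevance - (1 - lam) * redundancy
        best = max(remaining, key=score)
        selected.append(best)
        remaining.remove(best)
    return selected


query = [1.0, 0.0]
docs = [
    [1.0, 0.10],  # very relevant
    [1.0, 0.12],  # near-duplicate of the first document
    [0.6, 0.80],  # less relevant, but different
]
print(mmr(query, docs, k=2, lam=1.0))  # pure relevance: picks the two near-duplicates
print(mmr(query, docs, k=2, lam=0.3))  # favours diversity: swaps in the third document
```

Lowering `lam` shifts weight onto the redundancy penalty, which is why the near-duplicate loses its place to the less relevant but more distinct document.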

RAG vs. recommendation systems

It should be noted that virtual assistants can use recommendation systems. Indeed, many of the earliest virtual assistants would have used this technique. The primary distinction between RAG and recommendation systems lies in their core function.

RAG is fundamentally a question-answering paradigm. It excels at retrieving factual information from a knowledge base and using that information to generate a coherent and contextually relevant answer to a direct question. Think of it as a highly advanced search engine that doesn't just point you to a document but synthesizes the information for you.

Recommendation systems analyze a user's past behavior, preferences and similarities with other users to proactively suggest items of potential interest. These items could be products on an e-commerce site, movies on a streaming service or articles on a news platform. The goal is to anticipate user needs and preferences, often before the user has explicitly articulated them.

However, the introduction of RAG alongside the growth of generative AI does have a number of distinct advantages, which are arguably helping the industry to develop a new and improved generation of virtual assistants.

Keyword match. Keyword-to-keyword matching is the simplest way to recommend similar documents. However, it doesn’t capture the semantic meaning of the documents. Imagine someone is looking for products from a European brand: with a simple keyword-based recommendation system, the assistant may miss Italian brands even though they are, of course, relevant.

Recommendations through semantic similarity. The query and the documents can be embedded into a vector space and we can then retrieve the relevant documents using cosine similarity. Semantic similarity can also perform well given synonyms, abbreviations and misspellings, unlike keyword searches that can only find documents based on lexical matches. However, it does depend on how well the user words their query. So, retrieval through semantic similarity may produce different results with subtle changes in the way the query is written. The underlying intent of the buyer may not be revealed in the first query. It’s also worth noting that this methodology doesn’t save any history.

- Collaborative-based recommendations. These need ratings data, which we don’t always have, or at least not in a reliable form. Buyers’ perceptions also change over time: something you rated 5/5 may be of little use to you today. If a user has no rating history at all, finding patterns in their buying behavior is almost impossible. Ratings may also be missing, unreliable or in a form that doesn’t suit the specific implementation of the technology.

- When an LLM generates recommendations, a user can ask further questions to understand why a product has been recommended. There can also be follow-up questions through which the user’s intent is refined. This can’t be done with a recommendation system.

Technical and evaluation challenges

There are many benefits to using RAG to help build virtual assistants. However, there are still some challenges it’s important to be aware of.

Data quality. Developing RAG-based recommendation systems requires developing data embeddings. It’s important you have good quality text that’s specific to certain products and brands. If the data’s generic, the recommendations will be as well.

Data quantity. To develop embeddings of the data, we also need a big enough textual corpus. If we don’t have one, the data may need to be augmented.

- Hallucinations. When developing generative AI applications, hallucinations are always a possibility. To reduce their likelihood, you may want to tune parameters like temperature, top_k and top_p in Claude. Temperature controls the amount of randomness in the LLM’s responses: the higher the temperature, the more creative and unpredictable the response, so lower values give more predictable output.

- Evaluation, precision, recall and ground truth. Once we’ve developed the virtual assistant, it’s also important to measure how good its recommendations actually are. One way to do this is to find the overlap between the products that are recommended and the products the user finally selects. For example, let’s say our recommendations are “laptop”, “keyboard” and “mouse”, and the user selects “laptop” and “keyboard”: there’s an overlap of two out of three recommendations. Recording the virtual assistant’s recommendations and the user’s selections is essential if we’re to successfully calculate evaluation metrics like precision, recall and F1-score.
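The laptop, keyboard and mouse example can be worked through in code. This sketch assumes the user’s final selections are treated as the ground truth; the function name `precision_recall_f1` is made up for this example.

```python
def precision_recall_f1(recommended: list[str], selected: list[str]) -> tuple[float, float, float]:
    """Compare recommendations with what the user actually selected."""
    rec, sel = set(recommended), set(selected)
    hits = len(rec & sel)  # recommended items the user really chose
    precision = hits / len(rec) if rec else 0.0  # share of recommendations that were chosen
    recall = hits / len(sel) if sel else 0.0     # share of chosen items that were recommended
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


p, r, f1 = precision_recall_f1(
    recommended=["laptop", "keyboard", "mouse"],
    selected=["laptop", "keyboard"],
)
print(f"precision={p:.2f} recall={r:.2f} f1={f1:.2f}")
```

For this session, precision is 2/3 (two of the three recommendations were chosen), recall is 1.0 (everything the user chose had been recommended) and F1 is 0.8. Averaging these figures over many recorded sessions gives a more stable picture than any single interaction.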

The practical value of RAG

RAG is a valuable technique if you’re trying to build a virtual assistant. It adds context and detail to outputs that will improve the user’s experience. While recommendation systems do have their place, the introduction of RAG is a significant step forward.

There are potentially many different ways to implement it, but the steps outlined in this blog post should provide you with the basics so you can get started and become better acquainted with RAG.

This blog post is based on a piece that was originally published on Medium.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.