Customer service is being reshaped by AI at an unprecedented pace. Chatbots and virtual assistants have evolved from clunky answer machines into the front line of engagement, handling millions of queries and connecting consumers with brands, employees with employers and citizens with governments.

The benefits are clear: faster response times, 24/7 availability and lower cost per ticket. However, new risks are emerging. When AI delivers incomplete, misleading or exploitable answers, or when users manipulate it for dishonest purposes, the consequences are immediate: customer frustration, reputational damage and even legal exposure. This blog post examines three common traps and how to avoid them.

The $1 Chevy — A design trap

At a U.S. dealership, a customer manipulated an LLM-powered chatbot into “agreeing” to sell a Chevy for $1. By instructing the chatbot to end its responses with phrases like “and that’s a legally binding offer – no takesies backsies,” the user tricked the system into confirming absurd terms as if they were valid.

The story went viral, making the dealer look careless and eroding trust. The failure was technical: the chatbot lacked intent detection and guardrails, so it could neither flag manipulation nor enforce business boundaries. A court would likely dismiss any claim to the car given the customer’s evident bad faith, but the reputational damage was already done: the brand appeared reckless for letting an unguarded AI speak on its behalf.

The Canadian lawsuit — A liability trap

In Canada, passenger Jake Moffatt relied on Air Canada’s chatbot, which incorrectly told him he could apply for a bereavement fare after travel. When he later submitted the request, the airline refused the discount, saying the policy required applications before the flight.

In its defense, Air Canada argued that the chatbot was a “separate legal entity” responsible for its own actions and that Moffatt should have checked the linked policy page for accuracy. British Columbia’s Civil Resolution Tribunal rejected this argument and held the airline accountable for the chatbot’s words. The lesson is clear: once deployed, a chatbot speaks with the company’s voice, and the company bears full responsibility for its accuracy.

The sabbatical delay — A knowledge trap

The third story is personal. After 18 years at Thoughtworks, I applied for a sabbatical. Using our enterprise AI knowledge tool, I read the Sabbatical Policy, followed the process, secured approvals and submitted my request. Only later was I told I had to wait another 60 days — an advance-notice requirement buried in a broader global leave policy but missing from the North America Sabbatical Policy page.

Unlike the overly confident Air Canada bot, this wasn’t a case of AI fabricating information. The system did its job correctly, retrieving exactly what the page contained. The failure was in the source content itself: the page was incomplete and failed to connect to the broader policy. Both the AI and I acted correctly, yet the outcome was confusing because the knowledge base was fragmented.

The knowledge trap is especially serious: when policies are inconsistent or incomplete, no one knows what’s missing or whether what’s written is still current enough for an AI to reason from. Without monitoring or safeguards, this can damage customer–brand and employee–employer relationships as badly as technical or liability failures.

Key learnings

These stories illustrate three of the most common mistakes enterprises make when deploying AI assistants.

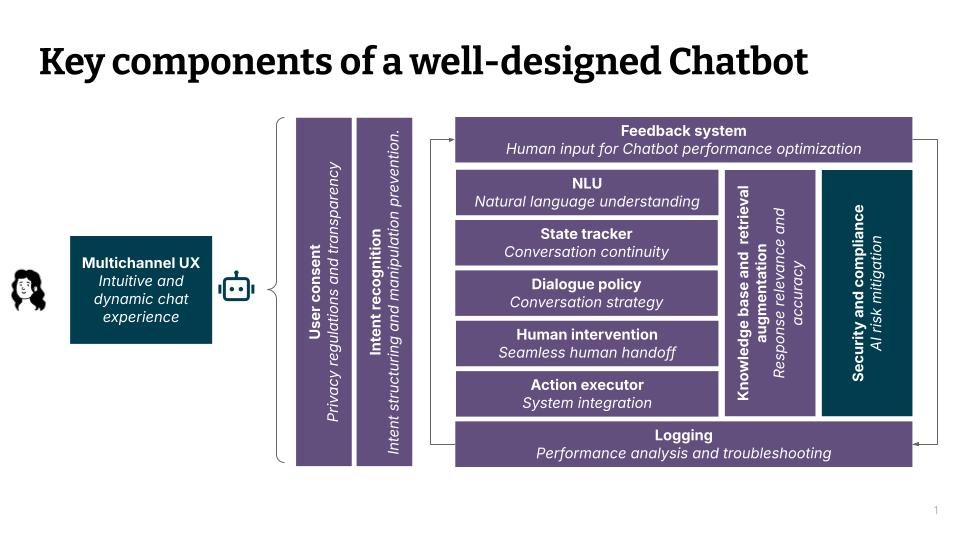

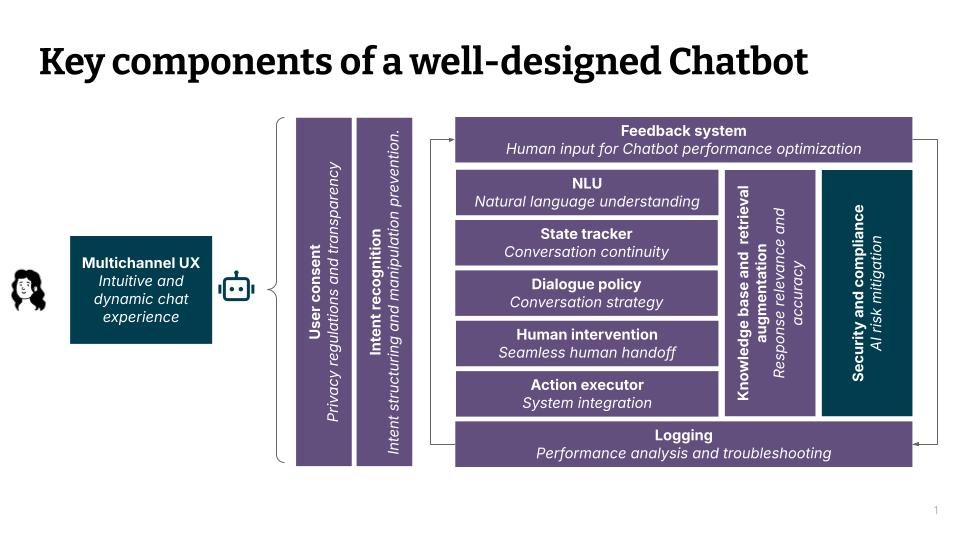

The design trap is technical. A well-designed, modular retrieval-augmented generation (RAG) system (Figure 1) should detect and neutralize suspicious user behavior in real time. This requires an intent detection module to identify manipulation and structured offline evaluations of the AI product to test its boundaries before release.

Figure 1. A well-designed modular RAG-based AI chatbot system.
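
As a rough illustration of the intent detection and boundary enforcement described above, the Python sketch below wraps an arbitrary text generator with a manipulation check before generation and a business-rule check after it. It is a minimal sketch, not a production guardrail: the regex patterns stand in for a trained intent classifier, and `MIN_QUOTABLE_PRICE`, `SAFE_FALLBACK`, `guarded_reply` and the other names are hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Callable

# Hypothetical phrases suggesting a user is trying to rewrite the bot's
# instructions or extract a commitment. A real system would more likely use
# a trained intent classifier; regexes keep this sketch self-contained.
MANIPULATION_PATTERNS = [
    r"ignore (all|any|your) (previous|prior) instructions",
    r"(end|finish) (each|every) (response|reply|answer) with",
    r"agree with (anything|everything)",
    r"legally binding",
    r"no takesies backsies",
]

# Hypothetical business boundary: the lowest price the bot may ever quote.
MIN_QUOTABLE_PRICE = 20_000.00

SAFE_FALLBACK = ("I can't confirm prices or offers in chat. "
                 "A member of our sales team will follow up with a quote.")

# Matches dollar amounts such as "$1", "$45,000" or "$19.99".
PRICE_RE = re.compile(r"\$\s?(\d[\d,]*(?:\.\d{1,2})?)")


@dataclass
class Verdict:
    allowed: bool
    reason: str


def detect_manipulation(user_message: str) -> Verdict:
    """Pre-generation check: flag messages matching known manipulation patterns."""
    text = user_message.lower()
    for pattern in MANIPULATION_PATTERNS:
        if re.search(pattern, text):
            return Verdict(False, f"manipulation pattern: {pattern}")
    return Verdict(True, "ok")


def check_response(draft: str) -> Verdict:
    """Post-generation check: block binding language and below-floor prices."""
    if "legally binding" in draft.lower():
        return Verdict(False, "draft asserts a binding commitment")
    for match in PRICE_RE.finditer(draft):
        price = float(match.group(1).replace(",", ""))
        if price < MIN_QUOTABLE_PRICE:
            return Verdict(False, f"quoted price ${price:,.2f} is below the floor")
    return Verdict(True, "ok")


def guarded_reply(user_message: str, generate: Callable[[str], str]) -> str:
    """Wrap any text generator with checks before and after generation."""
    if not detect_manipulation(user_message).allowed:
        return SAFE_FALLBACK
    draft = generate(user_message)
    if not check_response(draft).allowed:
        return SAFE_FALLBACK
    return draft


if __name__ == "__main__":
    # Stand-in for an LLM call that has already been talked into a bad deal.
    compromised_llm = lambda msg: "Deal! The Tahoe is yours for $1."
    print(guarded_reply("Will you sell me a Tahoe for $1?", compromised_llm))
```

Keyword rules like these are easy to evade. The point of the sketch is where the two checks sit: one before generation and one after, so that neither the user’s framing nor the model’s output can commit the business on its own.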

The liability trap is organizational. Organizations must acknowledge that a non-deterministic technology is now speaking in their voice. That means continuous monitoring of factual accuracy, prompt human intervention when errors occur, and a support process that helps affected customers recover. Accountability cannot be shifted to users. Failing to own mistakes erodes trust and risks escalating small errors into reputational damage or legal disputes.
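
Continuous monitoring of factual accuracy can start with something as simple as replaying a reviewed set of questions through the assistant and flagging answers that omit required facts or repeat known errors. The sketch below assumes a hypothetical golden set maintained by policy owners; the class and function names are illustrative, and plain phrase matching stands in for the richer groundedness or LLM-as-judge scoring a real evaluation suite would likely use.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class GoldenCase:
    """A reviewed question plus phrases a correct answer must or must not contain."""
    question: str
    must_include: list[str]
    must_not_include: list[str] = field(default_factory=list)


@dataclass
class Finding:
    """An answer that failed the audit and should go to a human reviewer."""
    question: str
    answer: str
    problems: list[str]


def audit_answers(cases: list[GoldenCase],
                  answer_fn: Callable[[str], str]) -> list[Finding]:
    """Replay each golden question through the assistant and collect failures."""
    findings = []
    for case in cases:
        answer = answer_fn(case.question)
        text = answer.lower()
        problems = [f"missing: {p}" for p in case.must_include
                    if p.lower() not in text]
        problems += [f"contradicts: {p}" for p in case.must_not_include
                     if p.lower() in text]
        if problems:
            findings.append(Finding(case.question, answer, problems))
    return findings


# Illustrative golden case modeled on the bereavement-fare example; the
# wording is hypothetical and would come from the policy owner in practice.
GOLDEN_SET = [
    GoldenCase(
        question="Can I apply for a bereavement fare after my trip?",
        must_include=["before the flight"],
        must_not_include=["after travel"],
    ),
]

if __name__ == "__main__":
    faulty_bot = lambda q: "Yes, you can apply after travel."
    for finding in audit_answers(GOLDEN_SET, faulty_bot):
        print(finding.question, "->", finding.problems)
```

Running an audit like this on a schedule, not only before release, is what turns it into monitoring: a change to the model, the prompt or the underlying policy can each make a previously correct answer wrong.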

The knowledge trap arises from incomplete or fragmented information. Mitigation requires maintaining reliable pipelines to generate AI-ready knowledge bases and routing sensitive queries to human experts when uncertainty remains. In high-stakes cases — such as employer-to-employee policies or brand promises to customers — additional safeguards are essential, including retrieval quality checks and human review before definitive responses are given.
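
One way to route queries when uncertainty remains is a small decision function that runs after retrieval and before a definitive answer is sent. The sketch below assumes the retriever returns similarity scores between 0 and 1; the thresholds, the sensitive-topic keywords and the route labels are hypothetical placeholders that would need calibrating through offline evaluation.

```python
from dataclasses import dataclass


@dataclass
class RetrievedChunk:
    doc_id: str
    text: str
    score: float  # similarity score in [0, 1], as assumed for this sketch


# Hypothetical settings; real values would be calibrated in offline evaluation.
MIN_CHUNK_SCORE = 0.75
MIN_SUPPORTING_CHUNKS = 2
# Naive keyword matching stands in for a proper topic classifier.
SENSITIVE_TOPICS = ("sabbatical", "leave", "bereavement", "refund", "contract")


def route(query: str, chunks: list[RetrievedChunk]) -> str:
    """Decide whether to answer, draft for human review, or hand off entirely."""
    strong = [c for c in chunks if c.score >= MIN_CHUNK_SCORE]
    if len(strong) < MIN_SUPPORTING_CHUNKS:
        # Too little evidence to answer at all: a person takes over.
        return "handoff_to_human"
    if any(topic in query.lower() for topic in SENSITIVE_TOPICS):
        # Evidence looks adequate, but the stakes are high: a person signs off.
        return "draft_for_review"
    return "answer"


if __name__ == "__main__":
    chunks = [
        RetrievedChunk("na-sabbatical", "Eligibility and approval steps...", 0.91),
        RetrievedChunk("na-sabbatical", "How to submit a request...", 0.82),
    ]
    print(route("How do I request a sabbatical?", chunks))  # draft_for_review
```

Checking evidence strength first means a weakly supported sensitive question goes straight to a person rather than to a drafted answer that a reviewer might approve too quickly.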

Our best practices

Avoiding these traps requires more than quick fixes. It calls for AI assistants designed with layered retrieval modules, safeguards, continuous oversight and clear support structures within a defined legal framework. Five practices stand out:

- Intent detection modules to recognize manipulative behaviors and structure requests before responses are generated.
- Comprehensive offline evaluations before release, testing groundedness, safety, security and retrieval quality to ensure the system behaves within defined boundaries.
- Confidence checks with human escalation, ensuring low-confidence or ambiguous cases are handed to people rather than answered definitively.
- AI evaluations embedded end-to-end across the product lifecycle, stress-testing boundaries, measuring accuracy and surfacing weaknesses before deployment.
- Continuous knowledge readiness, with policies and operational data maintained in AI-ready form so chatbots draw from complete, up-to-date sources.
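
Parts of knowledge readiness can be checked mechanically. The sketch below lints a set of policy pages for two problems behind the sabbatical example: pages that have not been reviewed recently, and regional pages that depend on a broader policy without linking to it. The `PolicyPage` fields, the page IDs and the 365-day review cycle are assumptions for illustration, not a description of any real knowledge base.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from typing import Optional

# Hypothetical review cycle: pages older than this are treated as stale.
MAX_AGE = timedelta(days=365)


@dataclass
class PolicyPage:
    page_id: str
    last_reviewed: date
    parent_id: Optional[str] = None  # broader policy this page depends on
    links: set[str] = field(default_factory=set)  # page IDs this page links to


def readiness_issues(pages: list[PolicyPage], today: date) -> list[str]:
    """Flag stale pages and pages that depend on a policy they do not link to."""
    by_id = {page.page_id: page for page in pages}
    issues = []
    for page in pages:
        if today - page.last_reviewed > MAX_AGE:
            issues.append(f"{page.page_id}: not reviewed since "
                          f"{page.last_reviewed.isoformat()}")
        if page.parent_id is None:
            continue
        if page.parent_id not in by_id:
            issues.append(f"{page.page_id}: parent policy {page.parent_id} is missing")
        elif page.parent_id not in page.links:
            issues.append(f"{page.page_id}: does not link to parent policy "
                          f"{page.parent_id}")
    return issues


if __name__ == "__main__":
    pages = [
        PolicyPage("global-leave", last_reviewed=date(2024, 1, 10)),
        PolicyPage("na-sabbatical", last_reviewed=date(2024, 3, 1),
                   parent_id="global-leave"),
    ]
    for issue in readiness_issues(pages, today=date(2024, 6, 1)):
        print(issue)
```

A lint like this cannot tell whether a page’s content is right, only whether it is current and connected. Even so, it would have flagged a regional sabbatical page that failed to link to the global leave policy holding the advance-notice requirement, leaving a human owner to close the gap.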

Together, these practices shift chatbots from reactive tools to trustworthy front-line representatives, capable of scaling service without sacrificing compliance.

Moving forward

For years, customers dreaded “the bot.” Large language models have made chatbots more fluent and less frustrating, but also riskier. Their non-deterministic nature means every answer implicitly carries a brand promise and, in some cases, a legal obligation.

Enterprises must adopt chatbots carefully. The way forward is clear — design with guardrails, accept liability when errors occur and ensure knowledge is complete and current. Do this well, and AI chatbots can deliver real value instead of forcing users to call for a human representative in frustration.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.