AI can help us write code quickly — that’s probably not news to anyone. But it can also help us more effectively configure and deploy a system — in other words, it can do DevOps as well as development.

This was something I learned when trying to deploy an event-driven microservices platform on AWS. I needed to bring a newly modernized platform to life on AWS but had little hands-on experience with either the cloud or the platform itself, so I turned to AI to help me out.

Some background…

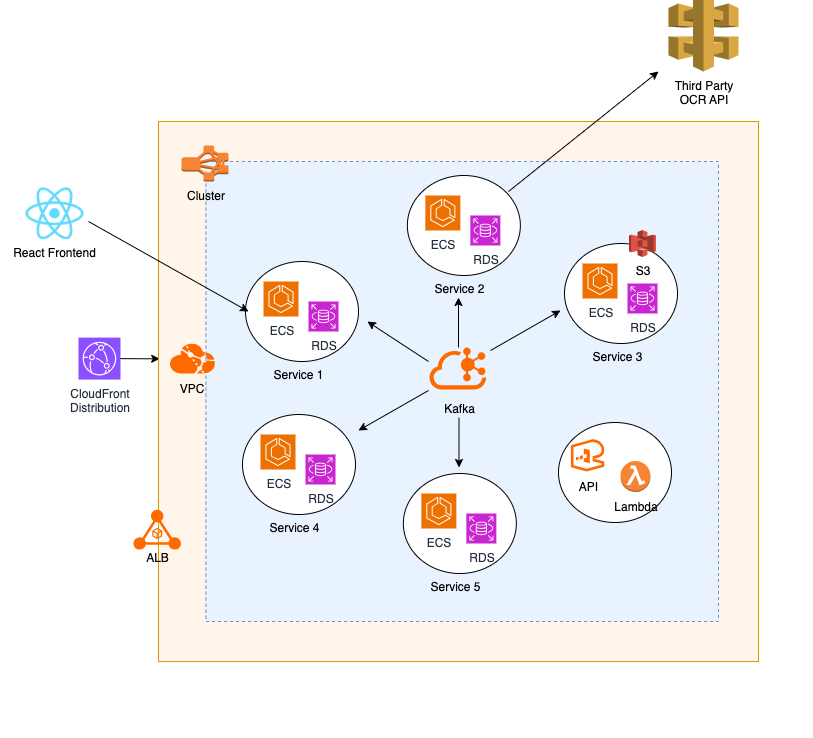

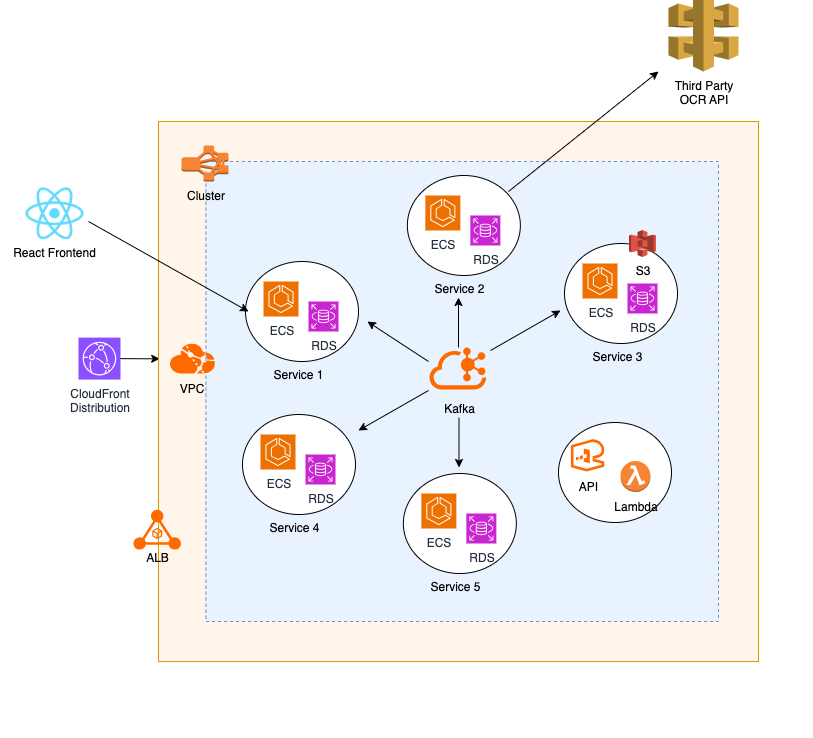

We were working with a client in the automotive sector that needed to modernize a platform. Using an AI-accelerated development process, we built a new event-driven microservices platform consisting of five production-ready microservices and more than 50,000 lines of code. One of the main requirements was that the platform integrate with the existing Dealers Touch Point (DTP) system via a SOAP API to receive finance applications from customers. Cloud deployment was crucial because we needed scalability and flexibility.

That’s just the prologue. What follows is the story of how I partnered with an AI co-pilot to deploy the platform and the lessons I learned bringing it live in just three days.

Handling architectural complexity

To begin, let's look at the components I had to deploy. This was far from a simple sample application:

Five microservices. Each one had unique requirements. One service, for example, required Amazon S3 for document storage, another integrated with a third-party credit bureau, and a third used an external OCR function for document verification. This meant handling multiple sets of external API credentials.

An event-driven core. Services were designed to communicate asynchronously using Apache Kafka.

A React frontend. This was hosted on S3 and served globally via CloudFront.

Containerization. The entire system was designed to run on ECS Fargate for serverless container orchestration, with images stored in ECR.

A major challenge was that we depended on external, third-party systems for critical functions like credit bureau checks and OCR processing.

To mitigate our dependency on these external APIs, we implemented a clever strategy: we built mock Lambda functions fronted by an API Gateway.

These mocks mimicked the request and response behavior of the real third-party systems, which allowed the team to build and test our services in a fast, isolated and cost-effective environment.
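To make the mock idea concrete, here is a minimal sketch of what one such Lambda function might look like for the credit bureau. It is not the code we shipped: the field names (`applicantId`, `score`, `decision`) and the canned data are hypothetical, and it assumes API Gateway is configured with Lambda proxy integration, so the handler receives the request body as a string and returns a `statusCode`, `headers` and string `body`.

```python
import json

# Hypothetical canned responses keyed by applicant ID. The real bureau's
# schema is not described in this article, so every field here is made up.
CANNED_SCORES = {
    "applicant-good": {"score": 780, "decision": "APPROVE"},
    "applicant-poor": {"score": 520, "decision": "REFER"},
}


def _response(status_code, body):
    # Lambda proxy integrations expect statusCode, headers and a string body.
    return {
        "statusCode": status_code,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(body),
    }


def lambda_handler(event, context):
    """Mock credit bureau endpoint that returns deterministic, canned results."""
    try:
        payload = json.loads(event.get("body") or "{}")
    except (json.JSONDecodeError, TypeError):
        return _response(400, {"error": "body must be valid JSON"})

    if not isinstance(payload, dict) or not payload.get("applicantId"):
        return _response(400, {"error": "applicantId is required"})

    applicant_id = payload["applicantId"]
    result = CANNED_SCORES.get(applicant_id)
    if result is None:
        return _response(404, {"error": "unknown applicant"})

    return _response(200, {"applicantId": applicant_id, **result})
```

Because the responses are deterministic, services under test can rely on known applicants producing known outcomes, which is most of what an isolated test environment needs.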

AI as my cloud architect: Generating the Terraform blueprint

My deployment strategy was to split the setup into two distinct parts: the foundational infrastructure (VPC, ALB, Kafka) and the microservices themselves. This approach would allow us to create a stable, reusable platform before we deployed any application code.

To generate the Terraform code, I worked within my editor using the Cline VS Code plugin, which allowed me to send detailed prompts to my LLM of choice, Claude. First, I tasked the AI with scripting the entire foundational infrastructure.

Once that core platform was live, I focused on deploying just a single microservice. I again used the Cline and Claude combination to generate the Terraform configuration for its specific needs — an RDS database, ECR repository and ECS Fargate service definition.

Before applying anything, I meticulously reviewed the terraform plan output. This human-in-the-loop step was critical. I would repeat the plan-and-review cycle until I was confident the changes were correct and cost-effective. This first microservice became our template.
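Part of that review can be assisted by a small script. The sketch below is an illustration rather than something from the project: it reads the JSON form of a saved plan and flags two of the settings I cared about, Multi-AZ databases and oversized Fargate tasks. The thresholds are hypothetical and the function name `find_cost_flags` is my own; the `resource_changes`, `address`, `type` and `change.after` keys come from Terraform's JSON plan representation.

```python
# Hypothetical budget guardrails for a small, non-production environment.
MAX_FARGATE_CPU_UNITS = 512
MAX_FARGATE_MEMORY_MIB = 1024


def _to_int(value):
    # Task definition cpu/memory usually appear as numeric strings such as
    # "1024"; anything that cannot be parsed is treated as 0 (not flagged).
    try:
        return int(value)
    except (TypeError, ValueError):
        return 0


def find_cost_flags(plan):
    """Return human-readable warnings for costly settings in a JSON plan."""
    flags = []
    for change in plan.get("resource_changes", []):
        # "after" is null for resources that are being destroyed.
        after = (change.get("change") or {}).get("after") or {}
        address = change.get("address", "<unknown>")
        resource_type = change.get("type")

        if resource_type == "aws_db_instance" and after.get("multi_az"):
            flags.append(f"{address}: Multi-AZ is enabled")

        if resource_type == "aws_ecs_task_definition":
            cpu = _to_int(after.get("cpu"))
            memory = _to_int(after.get("memory"))
            if cpu > MAX_FARGATE_CPU_UNITS or memory > MAX_FARGATE_MEMORY_MIB:
                flags.append(
                    f"{address}: task size {cpu} CPU units / {memory} MiB "
                    "exceeds the budget guardrail"
                )
    return flags
```

To feed it real data, save a plan with `terraform plan -out=plan.out`, convert it with `terraform show -json plan.out > plan.json`, then load that file with `json.load` and pass the result to `find_cost_flags`. A script like this supplements the manual review; it does not replace reading the plan.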

Validating solutions with Gemini

To complement Claude's code generation, I used Gemini as a dedicated research analyst to validate my architectural choices.

This allowed me to rapidly confirm that S3 with CloudFront was our most cost-effective UI hosting solution and that using Application Load Balancer listener rules was the standard, most secure pattern for our service-to-service communication needs.

Gemini provided the data-driven confidence to move forward quickly on these critical decisions.

Key lessons

Lesson #1: AI is a brilliant generator but needs a human cost accountant.

The initial AI-generated code was "enterprise-grade" by default: large Fargate tasks and Multi-AZ RDS instances. While robust, this wasn’t cost-effective. My first and most critical job was to act as the senior architect, meticulously reviewing every terraform plan to right-size the infrastructure and slash unnecessary costs. This human oversight is crucial for managing cloud spend.

Lesson #2: Agile infrastructure is a superpower for evolving requirements.

After deploying the first two services, a new requirement emerged: Service A needed to make a direct API call to Service B. Instead of a complex service discovery implementation, I simply updated our Terraform code to add new Application Load Balancer listener rules. By using path-based routing, I enabled secure service-to-service communication in minutes. This proved the incredible flexibility of an IaC setup — it could be adapted on the fly.
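For readers unfamiliar with path-based routing, the toy model below shows the idea behind listener rules: rules are checked in priority order (lowest number first), the first rule whose path pattern matches wins, and unmatched requests fall through to a default action. This is a simplified simulation in plain Python, not AWS or Terraform code; the path prefixes and target group names are hypothetical.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase


@dataclass(frozen=True)
class ListenerRule:
    priority: int       # lower numbers are evaluated first
    path_pattern: str   # e.g. "/service-b/*"
    target_group: str   # hypothetical target group name


def route(path, rules, default_target):
    """Return the target group of the first matching rule, by priority."""
    for rule in sorted(rules, key=lambda r: r.priority):
        # fnmatchcase gives case-sensitive "*" and "?" wildcards. It also
        # understands "[...]" character sets, which this model does not
        # rely on, so keep patterns to "*" and "?" only.
        if fnmatchcase(path, rule.path_pattern):
            return rule.target_group
    return default_target


# Hypothetical rules for two services sharing one load balancer.
RULES = [
    ListenerRule(priority=20, path_pattern="/service-b/*", target_group="service-b-tg"),
    ListenerRule(priority=10, path_pattern="/service-a/*", target_group="service-a-tg"),
]

if __name__ == "__main__":
    for request_path in ("/service-b/quotes/42", "/service-a/health", "/unknown"):
        print(request_path, "->", route(request_path, RULES, "default-tg"))
```

With rules like these, Service A can reach Service B by calling the load balancer with a `/service-b/...` path, and adding another service is a matter of adding another rule.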

Lesson #3: AI can't predict every operational pitfall.

The system was live and stable, but a few days later, a familiar cloud horror story began: the exploding bill. My CloudWatch costs were skyrocketing.

This real-world "gotcha" was a powerful reminder that AI doesn't yet have operational experience. The culprits were infinite Kafka retries, verbose logging and cluster-level Container Insights. Each one inflated the volume of logs or metrics that CloudWatch ingested and billed for, and each required a human expert to diagnose and fix.
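The first two culprits have a common shape of fix: cap the retries and quiet the logs. The sketch below shows that pattern in plain Python, independent of any Kafka client library. It is an illustration under my own assumptions, not the change we actually made: `process_with_retries`, the `dead_letter` callback and the default values are all hypothetical names and numbers.

```python
import logging
import time

logger = logging.getLogger("consumer")
# Emit only warnings and errors so routine INFO/DEBUG lines are never shipped.
logger.setLevel(logging.WARNING)


def process_with_retries(message, handler, dead_letter, max_attempts=3,
                         base_delay=0.5, sleep=time.sleep):
    """Try handler(message) up to max_attempts times, then dead-letter it.

    Returns True if the handler succeeded, False if the message was handed
    to dead_letter after exhausting its attempts.
    """
    last_error = None
    for attempt in range(1, max_attempts + 1):
        try:
            handler(message)
            return True
        except Exception as exc:  # broad on purpose: any failure is retried
            last_error = exc
            if attempt < max_attempts:
                # Exponential backoff: base_delay, then 2x, 4x, ...
                sleep(base_delay * 2 ** (attempt - 1))

    # One log line per failed message instead of one per retry.
    logger.error("giving up after %d attempts: %s", max_attempts, last_error)
    dead_letter(message)
    return False
```

In a real consumer, `dead_letter` might publish the message to a separate topic for later inspection. Container Insights is a cluster setting rather than application code, so it is not covered by this example.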

From code to cloud: The AI-generated README

One of the most mind-blowing parts of this process was that the LLM didn't just write the Terraform code. I prompted it: "Create a step-by-step README for a developer to deploy this service."

It produced a well-structured Markdown file with the exact commands for our workflow, which used our Cline VS Code plugin (enabled with the AWS Terraform MCP server) to apply configurations. It also included instructions for building a Docker image and pushing it to ECR. This AI-generated documentation became our official playbook.

The confidence this AI-assisted workflow gave me was immense. I could tear down the entire stack of services and infrastructure with terraform destroy and bring it all back online flawlessly minutes later.

This wasn't just a deployment; it was a truly ephemeral, repeatable and resilient environment, built and documented with the help of AI in hours, not weeks.

My final takeaway: AI + expert = unprecedented velocity

This experience was a profound look into the future of cloud engineering. AI didn't replace me. It augmented me. It took on different roles — a code generator, a research analyst, a documentation writer — which allowed me to focus on the highest-value tasks: architecture, optimization, security and cost control.

By pairing my expertise with AI's speed, I was able to deploy a sophisticated cloud platform with complex dependencies at a pace that would have been pure science fiction just a few years ago.

The future isn't about developers being replaced by AI; it's about developers who know how to wield AI becoming the most valuable players in the industry.

An earlier version of this post appeared on Medium.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.