It’s a scenario that sends a chill down the spine of any CTO: a business-critical application is running, but the source code is gone. Perhaps a vendor relationship soured, leaving you with a functional but opaque binary. Or, imagine you do have access to the source code, but it’s so messy that both humans and AI have a hard time understanding and describing what the codebase actually does.

One of our usual approaches to accelerating legacy modernization with AI is to start with reverse engineering: we feed the existing code to an AI and let it help us create a comprehensive description of the application’s functionality, which then serves as input for forward engineering.

But what if we don’t have the code, or it’s so messy that it’s useless? How can Generative AI accelerate reverse engineering in this case?

Recently, we explored this question at Thoughtworks with an experiment in "blackbox reverse engineering." We wanted to know whether, by combining AI-driven browsing with data capture techniques, we could create a rich, functional specification of a legacy system and use it to build a modern replacement from a clean slate.

This is the story of how we did it, the hurdles we faced and the powerful lessons we learned about the future of legacy modernization.

Setting up our AI experiment

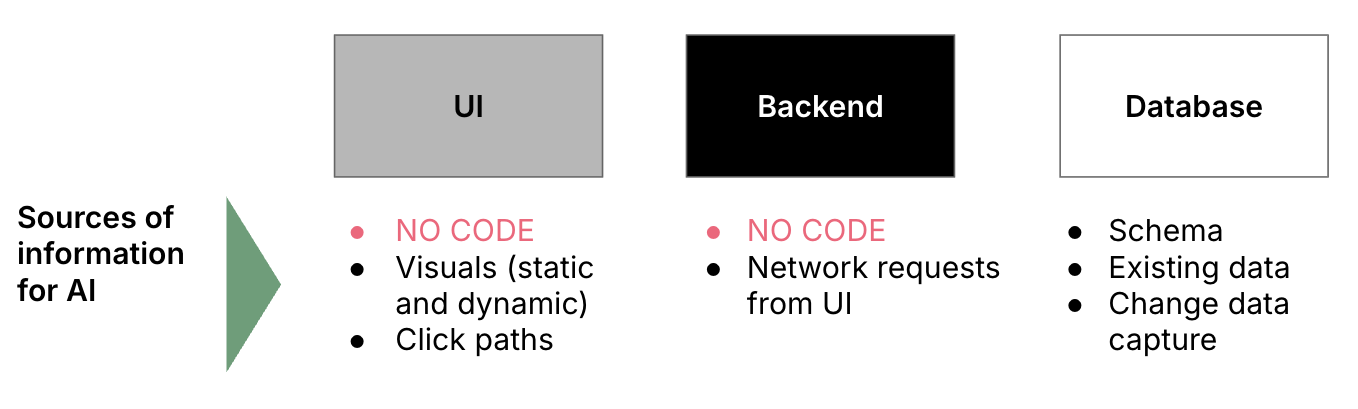

As a test subject we chose Odoo, an open-source ERP platform that was easy to set up and run on our machines. The backend code of the application was strictly off-limits to our AI setup — our information sources were limited to what could be observed from the outside:

The user interface. The AI could see and interact with the application just as a user would, observing static elements, dynamic behavior and click paths.

The database. While the backend code was hidden, the schema and the existing data were fair game.

Network traffic. We also allowed the AI to inspect the network requests flowing from the browser to the backend server as an additional data source.

Our experiment’s scope was to:

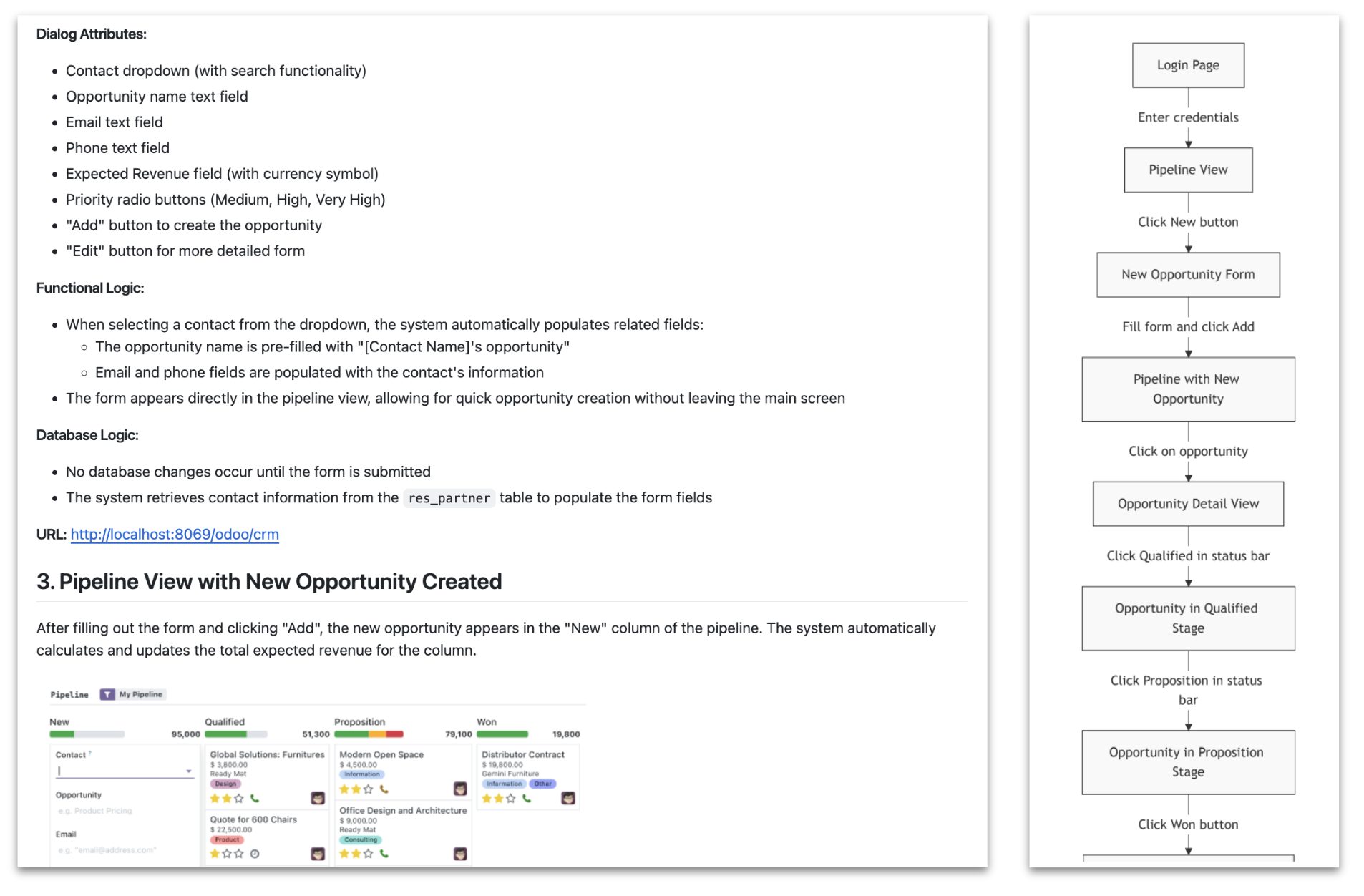

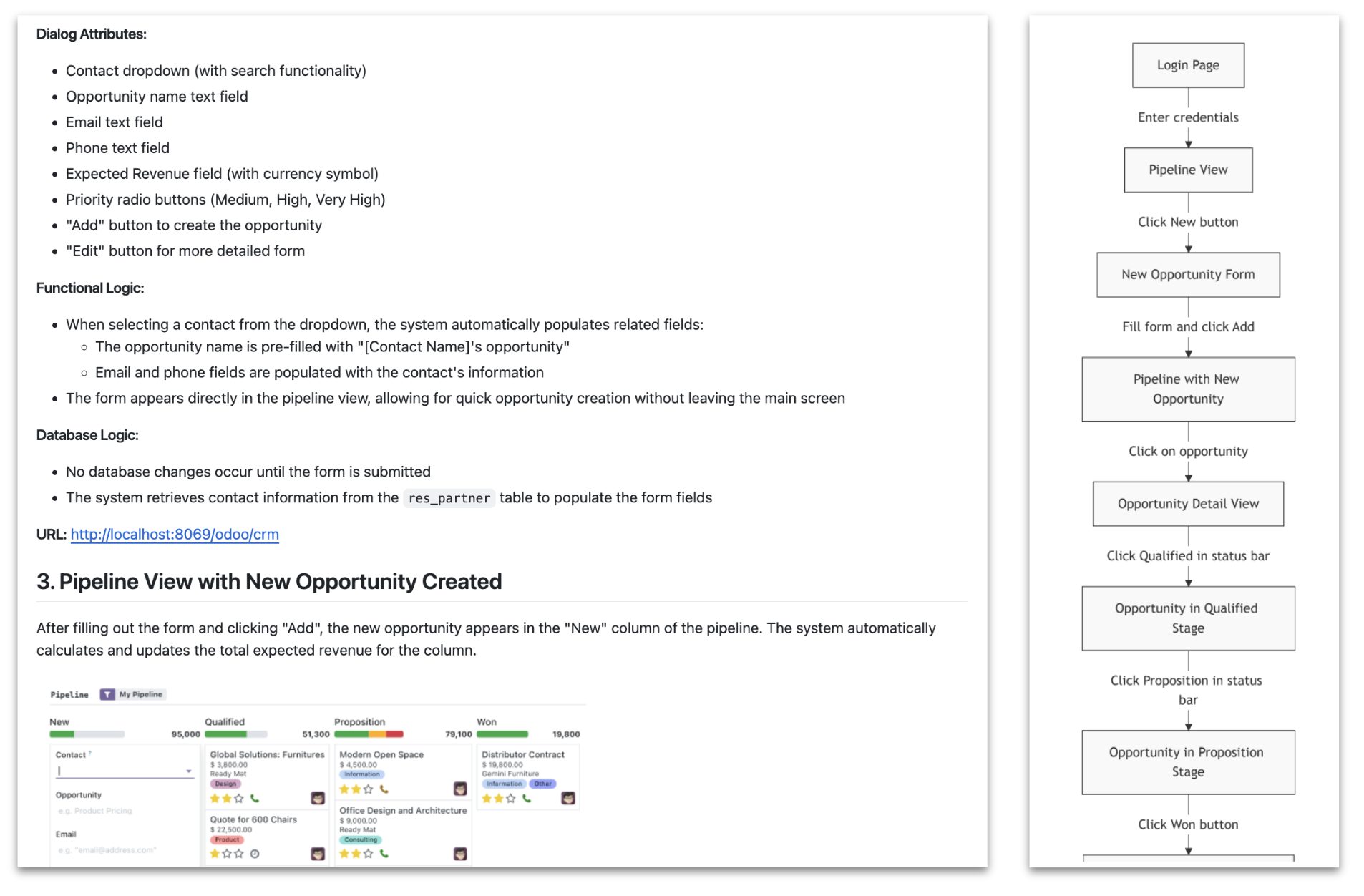

Reverse-engineer the functionality of a single, core user journey: creating a new opportunity in the sales pipeline of Odoo’s CRM. The deliverable of this step was a specification document describing all available information about the application’s behavior, including screenshots.

Explore what new opportunities Generative AI offers for building a “feature parity test” that can be run against both the old and the new application.

Experiment iteration one: Reconnaissance

Our first pass was a broad exploration.

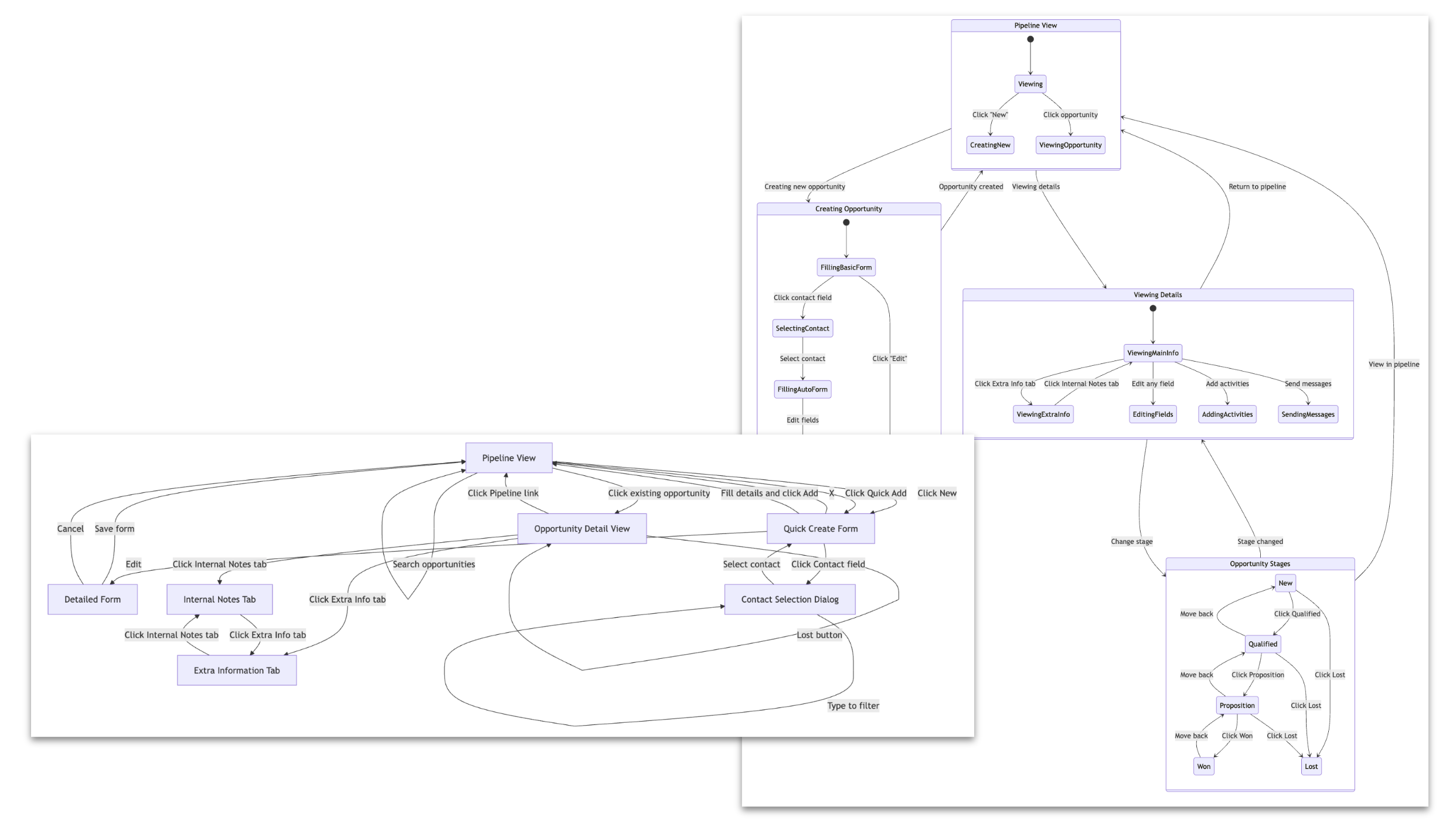

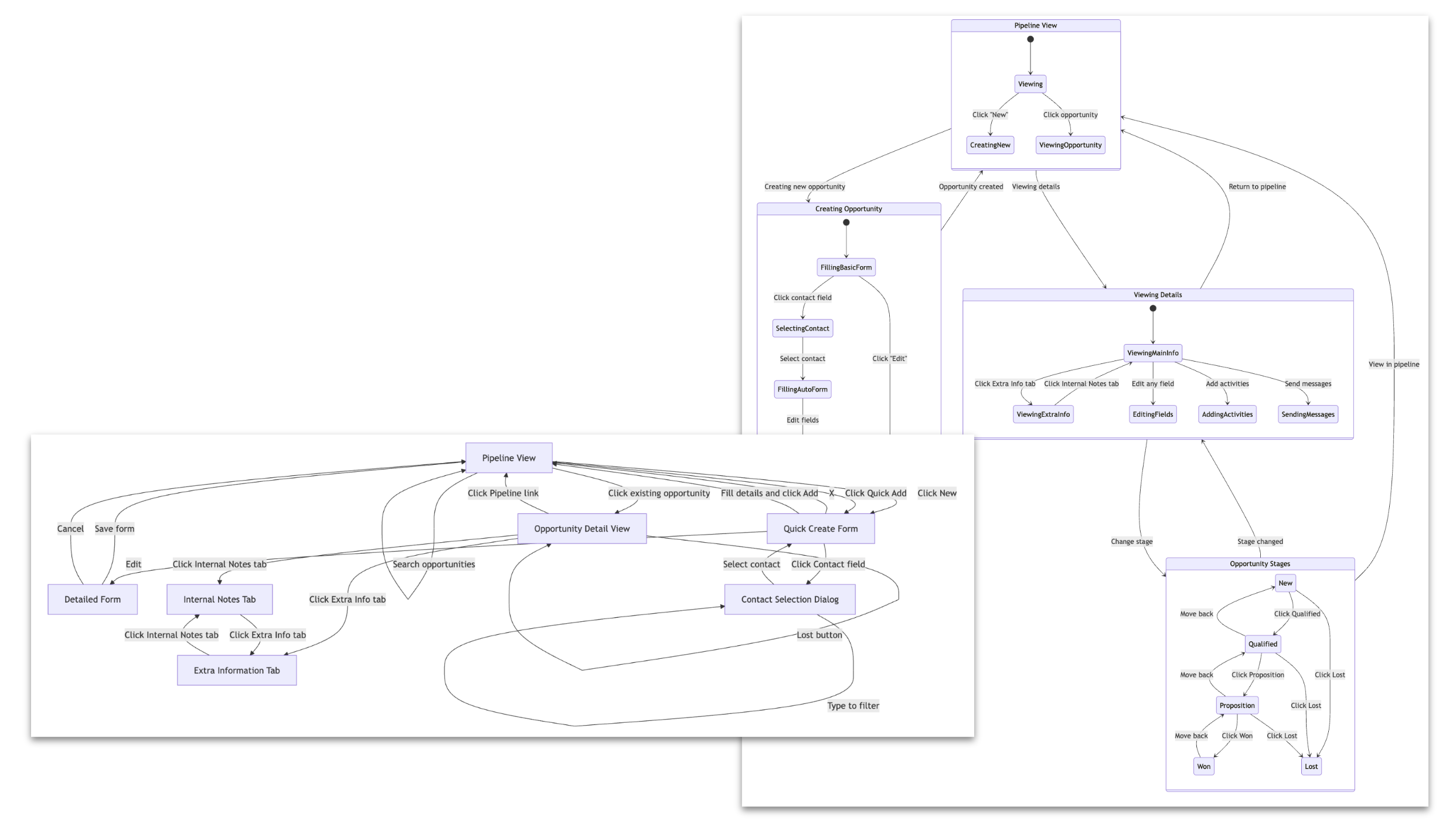

- AI as the user. We sent an AI agent with access to the Playwright MCP server to navigate the application. The prompt asked it to "discover the user journey to create a new opportunity in the sales pipeline." The agent clicked through the UI, took screenshots and generated a description of all the dialogs with their fields and the dynamic behaviors it could observe (e.g. “when an entry is chosen from this dropdown, the fields in the form get auto-populated”). We also asked for a flowchart of the click path it found. That path was a very linear "happy path", almost suspiciously simple. No real-world application is that straightforward, so we knew we had more work to do before the AI would uncover the application’s more complex branching paths.

- Change data capture. Once we had a first description of the paths, we asked the AI to walk through them again and look up the database changes after each click. To make this possible, we set up triggers on the relevant tables that wrote every INSERT and UPDATE operation to an audit log. We built a small custom MCP server that allowed the AI agent to query this audit log after each interaction. As a result, our functionality spec was enriched with the database operations that followed each click step. This change data capture (CDC) approach proved to be a powerful, low-effort way to deduce the application’s queries from the outside.
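As a minimal sketch of the trigger-based capture, assuming a single hypothetical `crm_lead` table with just three columns, the following uses Python’s built-in `sqlite3` as a self-contained stand-in (Odoo itself runs on PostgreSQL, where the trigger syntax differs). `changes_since` is a hypothetical helper of the kind a custom MCP tool could wrap; the MCP server wiring is omitted.

```python
import sqlite3

# Hypothetical subset of a CRM table plus an audit log fed by triggers.
# SQLite is only a self-contained stand-in for the real (PostgreSQL) database.
SCHEMA = """
CREATE TABLE crm_lead (
    id INTEGER PRIMARY KEY,
    name TEXT,
    stage TEXT
);
CREATE TABLE audit_log (
    seq INTEGER PRIMARY KEY AUTOINCREMENT,  -- increasing cursor for the agent
    table_name TEXT NOT NULL,
    operation TEXT NOT NULL,
    row_id INTEGER,
    details TEXT
);
CREATE TRIGGER crm_lead_after_insert AFTER INSERT ON crm_lead BEGIN
    INSERT INTO audit_log (table_name, operation, row_id, details)
    VALUES ('crm_lead', 'INSERT', NEW.id,
            printf('name=%s stage=%s', NEW.name, NEW.stage));
END;
CREATE TRIGGER crm_lead_after_update AFTER UPDATE ON crm_lead BEGIN
    INSERT INTO audit_log (table_name, operation, row_id, details)
    VALUES ('crm_lead', 'UPDATE', NEW.id,
            printf('name=%s stage=%s', NEW.name, NEW.stage));
END;
"""


def create_demo_db() -> sqlite3.Connection:
    """Create an in-memory database whose triggers log every INSERT and UPDATE."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    return conn


def changes_since(conn: sqlite3.Connection, last_seq: int) -> list[tuple]:
    """Return audit rows written after last_seq, oldest first."""
    return conn.execute(
        "SELECT seq, table_name, operation, row_id, details "
        "FROM audit_log WHERE seq > ? ORDER BY seq",
        (last_seq,),
    ).fetchall()


if __name__ == "__main__":
    conn = create_demo_db()
    # Simulate two UI steps: creating an opportunity, then moving its stage.
    conn.execute("INSERT INTO crm_lead (name, stage) VALUES ('Big deal', 'New')")
    step1 = changes_since(conn, 0)
    conn.execute("UPDATE crm_lead SET stage = 'Qualified' WHERE id = 1")
    step2 = changes_since(conn, step1[-1][0])
    print(step1)
    print(step2)
```

Passing the highest `seq` seen so far as the cursor lets the agent ask only for what changed since its last click, which keeps each spec step tied to the database writes it caused.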

Experiment iteration two: Fleshing out the specification

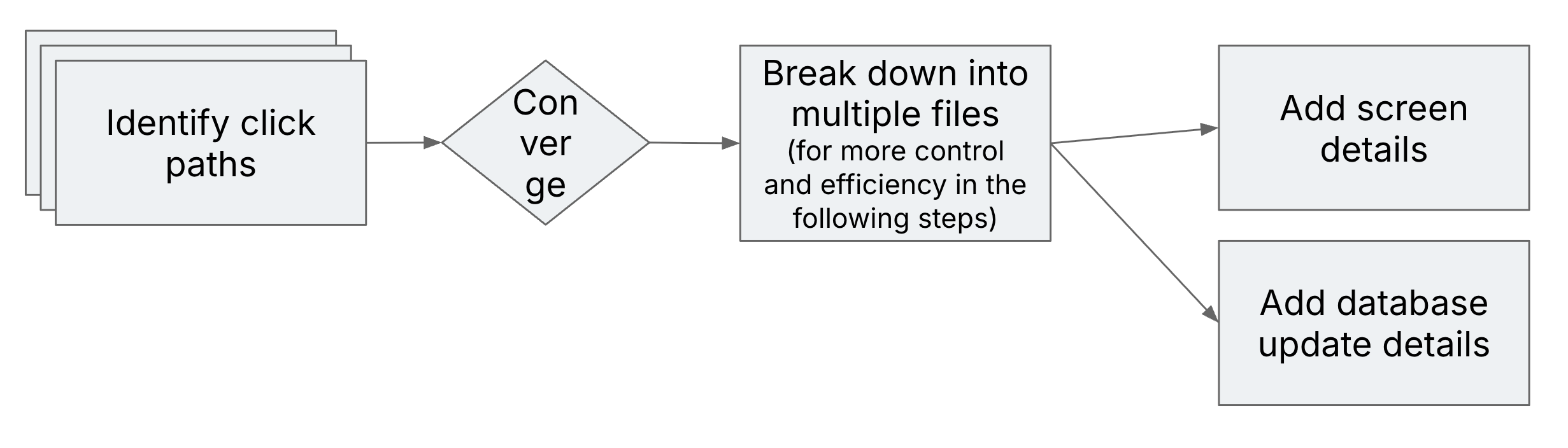

With the insights from our first pass, we improved the workflow and the prompts. First, we refined our click-path discovery prompt, instructing the agent more explicitly to find and traverse all possible user actions on each screen. Second, instead of a single run, we ran the agent multiple times, because we suspected that AI might have a hard time finding all path branches in one go. We were correct: each run uncovered slightly different branches and variations.

We then used another AI step to "converge" these different versions: to analyze and double-check the discrepancies, and to create a single, consolidated map of the user flow. The resulting diagrams were far more representative of the application's real behavior.
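In the experiment, the converge step was itself performed by AI. As a deterministic sketch of what converging involves, assuming each run is recorded as a list of click paths (a hypothetical format, with made-up screen names), one can count how many runs observed each transition and render the merged graph as a flowchart. Mermaid is an assumption here, not a format named in the article; edges that not every run found are drawn dashed so they stand out for double-checking.

```python
from collections import Counter

# Hypothetical run format: a run is a list of click paths, and each path is a
# list of screen or action names in the order the agent visited them.
Run = list[list[str]]


def converge(runs: list[Run]) -> dict[tuple[str, str], int]:
    """Count in how many runs each transition (edge) was observed."""
    counts: Counter = Counter()
    for run in runs:
        # Use a set so an edge seen several times within one run counts once.
        edges = {(a, b) for path in run for a, b in zip(path, path[1:])}
        counts.update(edges)
    return dict(counts)


def to_mermaid(edge_counts: dict[tuple[str, str], int], total_runs: int) -> str:
    """Render a Mermaid flowchart; edges not found in every run are dashed."""
    lines = ["flowchart TD"]
    ids: dict[str, str] = {}
    for (src, dst), seen in sorted(edge_counts.items()):
        for node in (src, dst):
            ids.setdefault(node, f"n{len(ids)}")
        arrow = "-->" if seen == total_runs else "-.->"
        lines.append(
            f'    {ids[src]}["{src}"] {arrow}|{seen}/{total_runs}| {ids[dst]}["{dst}"]'
        )
    return "\n".join(lines)


if __name__ == "__main__":
    runs = [
        [["Pipeline", "New", "Quick create", "Save"]],
        [["Pipeline", "New", "Quick create", "Save"],
         ["Pipeline", "New", "Edit full form"]],
    ]
    print(to_mermaid(converge(runs), total_runs=len(runs)))
```

The counts only flag where runs disagree; deciding whether a rarely seen branch is real or an agent mistake still needs the analysis step, whether done by AI or by a person.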

In this iteration, we also attempted to add another layer of data gathering by capturing network traffic. The idea was to reverse-engineer the backend API by analyzing the JSON payloads passing between the frontend and backend.

While we eventually succeeded in generating a Swagger document of the API, this setup was much more difficult to figure out than the change data capture.

We also concluded that for this specific scenario, where the API is an internal implementation detail rather than a public contract, the backend API information told us nothing about the data structures that we didn’t already know.
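A minimal sketch of the core of the payload analysis, assuming the request or response bodies have already been captured and parsed (capture is not shown): infer a JSON Schema fragment from several samples, which could seed the schema section of an OpenAPI (Swagger) document. The field names in the usage are hypothetical.

```python
import json
from typing import Any, Optional

Schema = dict[str, Any]


def _types(schema: Schema) -> set[str]:
    t = schema["type"]
    return set(t) if isinstance(t, list) else {t}


def merge(a: Optional[Schema], b: Optional[Schema]) -> Optional[Schema]:
    """Merge two schema fragments observed for the same field."""
    if a is None:
        return b
    if b is None:
        return a
    ta, tb = _types(a), _types(b)
    if ta == tb == {"object"}:
        props = dict(a["properties"])
        for key, sub in b["properties"].items():
            props[key] = merge(props.get(key), sub)
        # A field is only required if every sample contained it.
        required = sorted(set(a["required"]) & set(b["required"]))
        return {"type": "object", "properties": props, "required": required}
    if ta == tb == {"array"}:
        merged: Schema = {"type": "array"}
        items = merge(a.get("items"), b.get("items"))
        if items is not None:
            merged["items"] = items
        return merged
    if ta == tb:
        return a
    # Conflicting types: keep the union of type names (nested detail is dropped).
    return {"type": sorted(ta | tb)}


def infer_schema(value: Any) -> Schema:
    """Infer a minimal JSON Schema fragment from one parsed JSON value."""
    if value is None:
        return {"type": "null"}
    if isinstance(value, bool):  # check before int: bool is a subclass of int
        return {"type": "boolean"}
    if isinstance(value, int):
        return {"type": "integer"}
    if isinstance(value, float):
        return {"type": "number"}
    if isinstance(value, str):
        return {"type": "string"}
    if isinstance(value, list):
        items: Optional[Schema] = None
        for element in value:
            items = merge(items, infer_schema(element))
        return {"type": "array", "items": items} if items is not None else {"type": "array"}
    return {
        "type": "object",
        "properties": {key: infer_schema(val) for key, val in value.items()},
        "required": sorted(value),
    }


def infer_from_samples(samples: list[Any]) -> Optional[Schema]:
    """Fold the schemas of several captured payloads into one."""
    schema: Optional[Schema] = None
    for sample in samples:
        schema = merge(schema, infer_schema(sample))
    return schema


if __name__ == "__main__":
    captured = [  # hypothetical, already-parsed payload bodies
        {"name": "Big deal", "expected_revenue": 1000.0, "partner_id": 7},
        {"name": "Small deal", "expected_revenue": 250.5, "partner_id": None, "priority": "1"},
    ]
    print(json.dumps(infer_from_samples(captured), indent=2))
```

Fields present in every sample end up in `required`, and conflicting types collapse into a type list. A real tool would preserve more detail, but the principle of generalizing from several observed samples is the same.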

Validation through rebuilding

The ultimate test was to use our AI-generated artifacts to create a new application. Our focus in this experiment wasn’t forward engineering, so we didn’t want to spend too much time on this step.

However, we still needed to check whether the specification was actually useful, so we fed it into one of the AI rapid application generator tools.

First we fed our detailed specification — the collection of Markdown files, screenshots and database logic descriptions — into a series of prompts that generated epics, user stories and an order of implementation. Then, we fed the first set of stories into Replit.

Some observations from this validation phase:

Prototyping as a validator. Watching the AI build the prototype was a very effective way to validate and improve the spec. When the prototype deviated from the original, it was sometimes easier to spot a flaw or ambiguity in our requirements document this way than by reading the specification directly.

AI struggles with planning. LLMs often struggle to create a logical, incremental implementation plan, and that was the case here. The model’s first instinct was to build the entire, complex pipeline view in one go, rather than starting with the simplest elements and building up. In a real-world scenario, a good AI workflow and human oversight are important to guide the build strategy.

No demonstration of query fidelity. Unfortunately, in this case Replit ignored our inputs about the database schema and the exact queries we wanted it to use. As we didn’t want to spend too much time on forward engineering, this meant we couldn’t fully show that the new application could be pointed at the existing database. However, Generative AI is usually quite good at picking up on specific examples and schemas and using them in the code, so it’s not much of a leap to think that a coding agent with dedicated prompting could reproduce the specific database queries we collected.
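One way to steer the build toward small increments, rather than relying on the model’s first instinct, is to have the story-generation step also record which stories depend on which, and then derive the order deterministically. A minimal sketch using Python’s standard `graphlib` module (Python 3.9+); the story names and dependencies are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical stories, each mapped to the stories it depends on.
STORIES = {
    "Show pipeline as a simple list": set(),
    "Create opportunity via quick-create form": {"Show pipeline as a simple list"},
    "Group pipeline into stage columns": {"Show pipeline as a simple list"},
    "Drag opportunity between stages": {"Group pipeline into stage columns"},
    "Auto-fill contact fields from customer": {"Create opportunity via quick-create form"},
}


def build_order(stories: dict[str, set[str]]) -> list[str]:
    """Return an order in which every story comes after its dependencies."""
    try:
        return list(TopologicalSorter(stories).static_order())
    except CycleError as err:
        # A cycle means the plan cannot be built incrementally; surface it for review.
        raise ValueError(f"circular story dependencies: {err.args[1]}") from err


if __name__ == "__main__":
    for step, story in enumerate(build_order(STORIES), start=1):
        print(step, story)
```

The dependency graph is also easy for a human to review, which is where the oversight mentioned above can be applied before any code is generated.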

Can AI help test for parity?

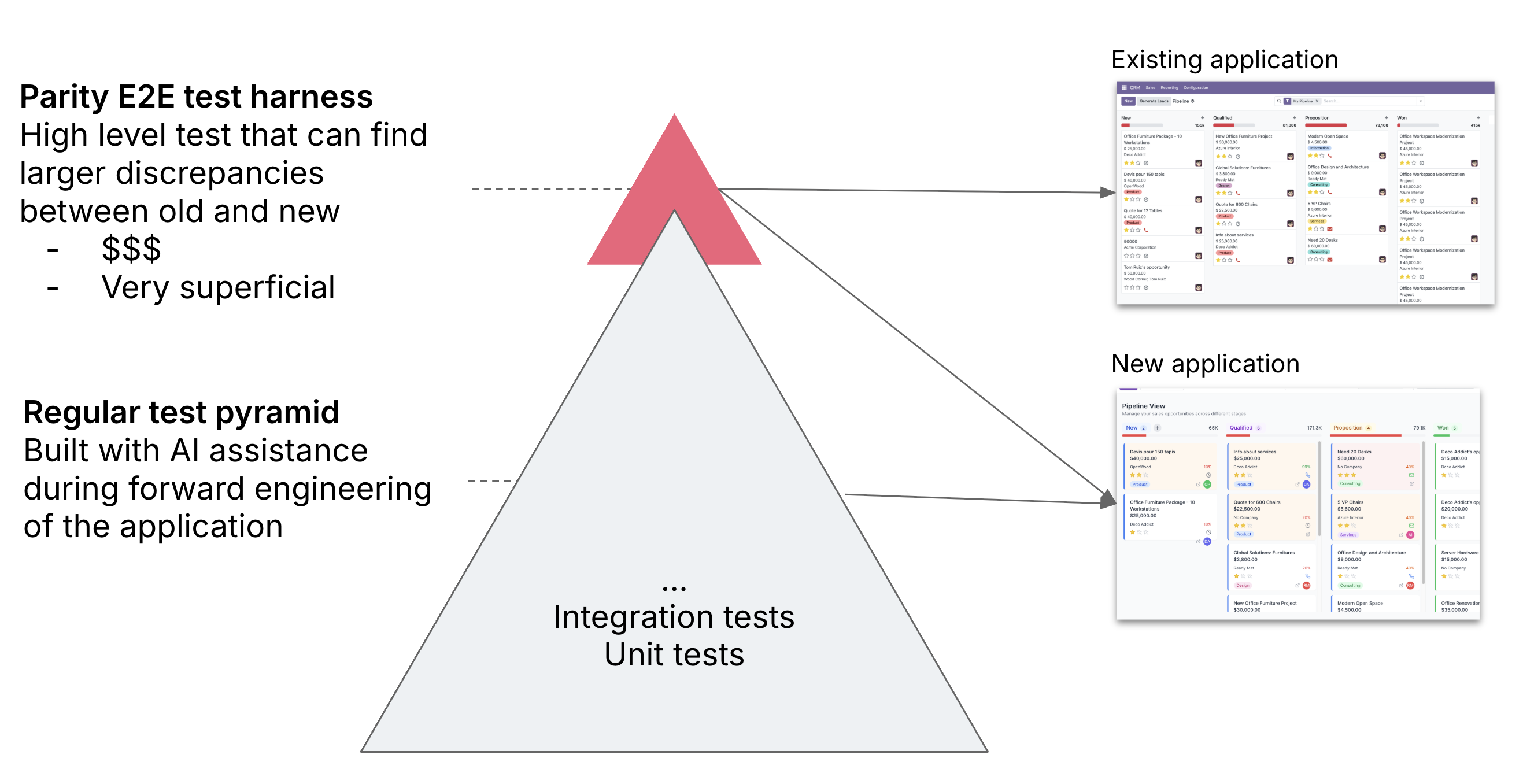

While the generated application wasn’t perfect, it did provide us with an opportunity to do a second experiment: How might we create an automated test suite that could run against both the old application and our new, slightly different prototype?

The challenge is that while we expect the same general user controls, the new application might look slightly different, and will certainly have different selectors in the DOM. AI offers an opportunity here: test instructions can be vaguer, which is an advantage in this use case. An AI-driven test can use visuals as information and respond to looser UI element descriptions. For example, you could write “look for a + icon”, and it might work regardless of whether the icon is implemented as an image or as a text character.

We used a framework that lets you add natural language test instructions to a Playwright test. Instead of writing a selector like `find('button#id-123')`, we could write instructions like `await ai('Click the + icon to create a new opportunity')`. Because the test wasn’t tied to a specific DOM structure, it was high-level enough to run against two different implementations of the same UI.
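The framework’s own `ai()` API is not reproduced here. As a framework-agnostic sketch of the parity idea, assuming a hypothetical step executor that hands each natural-language instruction to an AI-driven browser session and reports whether it succeeded, the journey is written once and run against each target, and the result shows where the implementations diverge. The URLs, steps, and fake executor are illustrative only.

```python
from typing import Callable, Optional

# The user journey, written once as implementation-agnostic instructions.
JOURNEY = [
    "Open the CRM pipeline",
    "Click the + icon to create a new opportunity",
    "Enter 'Big deal' as the opportunity name and save",
    "Check that 'Big deal' appears in the pipeline",
]

# Hypothetical executor: (base_url, instruction) -> did the step succeed?
# In a real suite this would drive a browser through an AI-enabled test step.
StepExecutor = Callable[[str, str], bool]


def run_journey(base_url: str, execute: StepExecutor) -> Optional[int]:
    """Run the steps in order; return the index of the first failing step, or None."""
    for index, instruction in enumerate(JOURNEY):
        if not execute(base_url, instruction):
            return index
    return None


def run_parity(targets: dict[str, str], execute: StepExecutor) -> dict[str, Optional[int]]:
    """Run the same journey against every target, e.g. the legacy app and the rebuild."""
    return {name: run_journey(url, execute) for name, url in targets.items()}


if __name__ == "__main__":
    # Fake executor for illustration: the rebuilt app has no "+" icon yet.
    def fake_execute(base_url: str, instruction: str) -> bool:
        return not (base_url == "http://new-app" and "+ icon" in instruction)

    print(run_parity({"legacy": "http://legacy-app", "new": "http://new-app"}, fake_execute))
    # prints {'legacy': None, 'new': 1}
```

Keeping the journey separate from the executor is what allows the same steps to target both applications; only the base URL changes between runs.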

When we built a test suite that ran against both applications, we mostly ran into typical issues with end-to-end browser testing, like having to add wait times. Adding AI’s non-determinism on top of the notorious flakiness of these tests creates a new set of challenges. This is a promising use case, but one that requires careful implementation to avoid producing more noise than signal.
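Two common mitigations can be sketched without any particular framework, using hypothetical helper names: poll for a condition with a timeout instead of adding fixed wait times (Playwright’s web-first assertions auto-wait in a similar way), and run a non-deterministic test several times to report a pass rate rather than a single verdict.

```python
import time
from typing import Callable


def wait_until(condition: Callable[[], bool], timeout: float = 5.0, interval: float = 0.1) -> bool:
    """Poll condition until it returns True or timeout seconds have elapsed."""
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


def pass_rate(run_once: Callable[[], bool], attempts: int = 5) -> float:
    """Run a possibly non-deterministic test several times; return the passing fraction."""
    passes = sum(1 for _ in range(attempts) if run_once())
    return passes / attempts
```

A low pass rate against the legacy application alone suggests noise in the test itself rather than a genuine parity gap.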

Prerequisites and limitations

This approach relies on having a safe, sandboxed test environment where AI agents can freely explore and data capture scripts can be run without impacting live operations.

Depending on the application type and complexity, we would expect the reverse-engineered specification to contain gaps of varying size that require input from subject matter experts, for example where complex logic is not observable in the user interface. We also didn’t explore techniques for landscapes involving service-to-service communication or other backend side effects; more network data capture might be useful there.

Conclusions and takeaways

Our experiment showed us that there’s a lot of potential here for using AI to reverse-engineer an application without having access to its source code.

Here are three key takeaways:

AI is an accelerator, not an automator. A recurring theme in all our experiments is that while AI is a powerful force multiplier, it doesn't replace human expertise. It takes the first pass, generates the baseline documentation, and frees up subject matter experts to focus on refinement and validation. The process is a human-AI collaboration.

Iterate and decompose. Breaking the application down into user journeys, and the reverse-engineering process into small, iterative steps (discover, converge, add details), helps achieve higher-fidelity results.

Validate continuously. Using techniques like rapid prototyping and parity testing allows you to create early feedback loops.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.