Large language models (LLMs) are transforming academic publishing. Their integration brings significant advantages, including greater efficiency and accessibility for authors and audiences. However, it also introduces complex ethical challenges, especially regarding the integrity of knowledge production and fairness for authors, who are at risk of having their findings shared without attribution. Historically, editorial and technology leaders haven’t been aligned: integrity has been seen as primarily an editorial concern, while efficiency, cost-effectiveness and capability delivery have been the primary concerns of the technologist.

For technology leaders, editors and governance committees, deploying LLMs thoughtfully and responsibly is mandatory. This framework provides the necessary lens, based on risk stratification, to make effective decisions, helping leaders fulfil their role as gatekeepers of knowledge and upholders of organizational principles.

This framework builds on the approach outlined in Thoughtworks' Responsible Tech Playbook, which emphasizes assessing, modelling and mitigating values and risks in software development. It also synthesizes principles and experience from major academic publishers (including Springer Nature, Elsevier, Sage, Wiley and Taylor & Francis), research guidelines published in Nature Machine Intelligence and guidelines from international bodies (ICMJE, COPE, STM).

The five critical questions for deployment

Before deploying any LLM application, technology leaders in academic publishing organizations must assess the systemic impact by asking the following questions:

- The stakes question: "If this system makes a biased decision, what's the worst-case impact on an individual researcher's career or a field of knowledge?" (If significant harm, proceed with extreme caution or not at all)

- The transparency question: "Can we clearly explain to affected parties how the LLM was used and the factors that influenced the decision?" (If you can't explain it, don't deploy it in high-stakes contexts)

- The oversight question: "Do we have the resources and expertise to meaningfully audit this system for bias on an ongoing basis?" (If you don't have the resources to continually audit, what is your mitigation strategy?)

- The reversibility question: "Can humans easily review, override and correct LLM decisions?" (Systems creating irreversible outcomes are higher risk)

- The necessity question: "Does using an LLM here genuinely improve outcomes for researchers and knowledge production, or primarily serve our operational efficiency?" (If it mainly serves efficiency, can we achieve our objectives with traditional technology? Often the answer is yes. If we proceed, how will we mitigate the climate impact and decommission the system when it's no longer needed?)
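The five questions above can be recorded as a simple pre-deployment checklist. The following is a minimal sketch, not a prescribed tool: the `DeploymentAssessment` class, its field names and the `review_concerns` function are all hypothetical, and the wording of each concern is taken from the parenthetical guidance in the list.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class DeploymentAssessment:
    """Answers to the five questions for one proposed LLM use (hypothetical structure)."""

    significant_harm_if_biased: bool    # the stakes question
    explainable_to_affected: bool       # the transparency question
    can_audit_continuously: bool        # the oversight question
    humans_can_override: bool           # the reversibility question
    improves_researcher_outcomes: bool  # the necessity question


def review_concerns(a: DeploymentAssessment) -> list[str]:
    """Return the concerns a governance committee should discuss before deployment."""
    concerns = []
    if a.significant_harm_if_biased:
        concerns.append("stakes: proceed with extreme caution or not at all")
    if not a.explainable_to_affected:
        concerns.append("transparency: do not deploy in high-stakes contexts")
    if not a.can_audit_continuously:
        concerns.append("oversight: define a mitigation strategy for auditing")
    if not a.humans_can_override:
        concerns.append("reversibility: irreversible outcomes are higher risk")
    if not a.improves_researcher_outcomes:
        concerns.append("necessity: consider traditional technology instead")
    return concerns


# Example: a proposed screening tool that cannot be explained to authors.
proposal = DeploymentAssessment(
    significant_harm_if_biased=True,
    explainable_to_affected=False,
    can_audit_continuously=True,
    humans_can_override=True,
    improves_researcher_outcomes=True,
)
for concern in review_concerns(proposal):
    print(concern)
```

An empty list does not mean a deployment is approved; it only means none of the five questions raised a concern that needs discussion.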

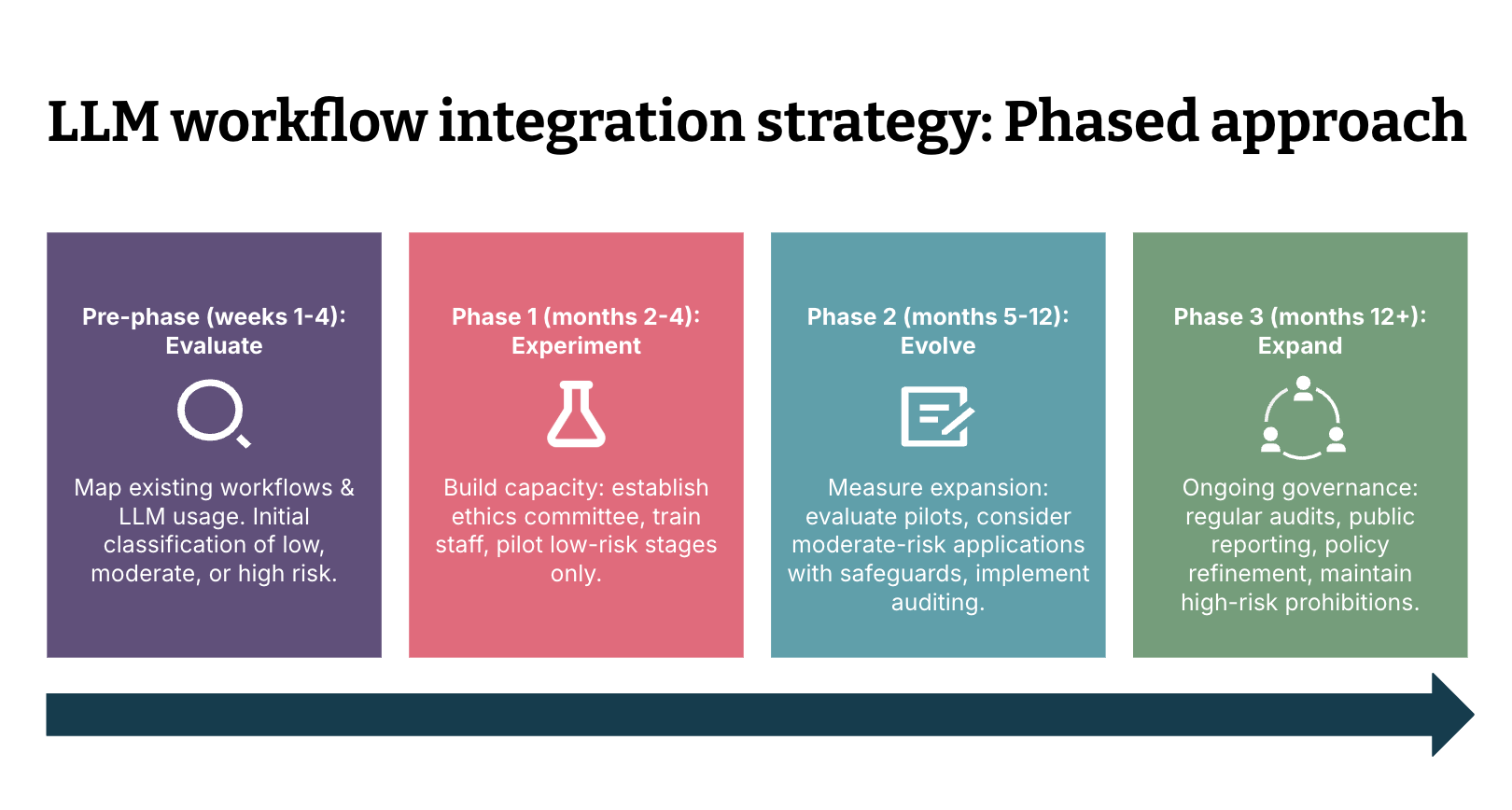

Risk stratification across the publishing value chain

Let's examine what this stratification approach might mean in practice by looking at concrete examples along the publishing value chain.

| Value chain stage | Risk level | Appropriate LLM uses | Critical safeguards |
|---|---|---|---|
| Pre-submission: Author support and discovery | Low | Literature search assistance; plain-language summaries; translation assistance; language polishing (with author control); reading lists | Present as starting points only; disclose LLM use; encourage verification |
| Submission management: Administrative processing | Low | Formatting and copyediting; organizing/categorizing submissions; metadata summaries; scheduling; routine correspondence drafts | Human review before external communications; spot-checking of categorizations and metadata summaries |
| Initial screening: Scope and completeness | Moderate | Preliminary scope/fit checks; completeness verification; technical format compliance | Human review of ALL negative decisions; regular bias audits; clear appeals process; transparency with authors |
| Peer review process: Reviewer support | Moderate | Optional methodology analysis as supplementary input; literature coverage checks; statistical approach review | Frame as optional tool only; extensive training on limitations; monitor for bias patterns; never generate review text |
| Editorial decision-making: Final judgements | High - DO NOT USE | DO NOT USE: Accept/reject decisions; publication priority ranking; merit/novelty evaluation; quality scoring; reviewer selection/evaluation | Publication decisions shape careers; LLMs cannot evaluate rigour/significance; high bias risk; requires human accountability |
| Integrity and ethics: Quality assurance | Moderate | Flagging potential plagiarism; identifying unusual patterns; detecting possible AI-generated content | Flagging only, never automatic decisions; human expert review of all flags; extra caution with non-native speakers; regular bias testing |
| Post-publication: Communications and engagement | Mixed | APPROPRIATE: Accessibility features; translating abstracts; supplementary formatting. DO NOT USE: Rejection letters; review feedback; editorial statements | Authentic human engagement for official communications; transparency obligations; maintain trust |

Deploying LLMs requires informed decision-making because the associated risks vary across the stages of the value chain. By understanding this risk stratification and its implications, organizations can match the safeguards they apply to the level of risk at each stage.
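One way to make the stratification above operational is to encode it as a lookup that tooling or approval workflows can consult. This is an illustrative sketch only: the stage keys, the `Risk` enum and the `deployment_policy` function are hypothetical names, while the risk levels mirror the table.

```python
from enum import Enum


class Risk(Enum):
    LOW = "low"
    MODERATE = "moderate"
    HIGH = "high"
    MIXED = "mixed"


# Risk level per value chain stage, as listed in the table (keys are hypothetical).
STAGE_RISK = {
    "pre_submission": Risk.LOW,
    "submission_management": Risk.LOW,
    "initial_screening": Risk.MODERATE,
    "peer_review": Risk.MODERATE,
    "editorial_decision": Risk.HIGH,
    "integrity_and_ethics": Risk.MODERATE,
    "post_publication": Risk.MIXED,
}


def deployment_policy(stage: str) -> str:
    """Return a one-line policy for a stage; unknown stages must be classified first."""
    risk = STAGE_RISK.get(stage)
    if risk is None:
        return "unclassified: classify the stage before deploying"
    if risk is Risk.HIGH:
        return "do not use"
    if risk is Risk.MIXED:
        return "assess each use case individually"
    if risk is Risk.MODERATE:
        return "allowed with safeguards and human review of outcomes"
    return "allowed with disclosure and spot checks"


print(deployment_policy("editorial_decision"))
print(deployment_policy("initial_screening"))
```

Treating unknown stages as unclassified, rather than defaulting them to low risk, keeps new use cases from bypassing review.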

The role of technology advisers in governance

As mentioned at the beginning, technology advisers must augment their technical capability with an understanding of editorial integrity. All too often, technology advisers come under pressure from editorial owners to approve a technology that will make a process ‘more efficient’, but the longer-term success of the organization’s content requires the following:

Prioritizing outcomes: Asking whether LLMs genuinely improve outcomes for researchers and knowledge production, not just efficiency. This is the necessity question, requiring us to step back and ask what problem we’re trying to fix.

Clear communication: Translating LLM limitations and bias risks into plain language for editorial teams.

Championing oversight: Insisting on resources for ongoing bias auditing and establishing an AI ethics committee with diverse representation.

Preserving human judgement: Maintaining meaningful human involvement and pathways for humans to review, override and correct LLM decisions.

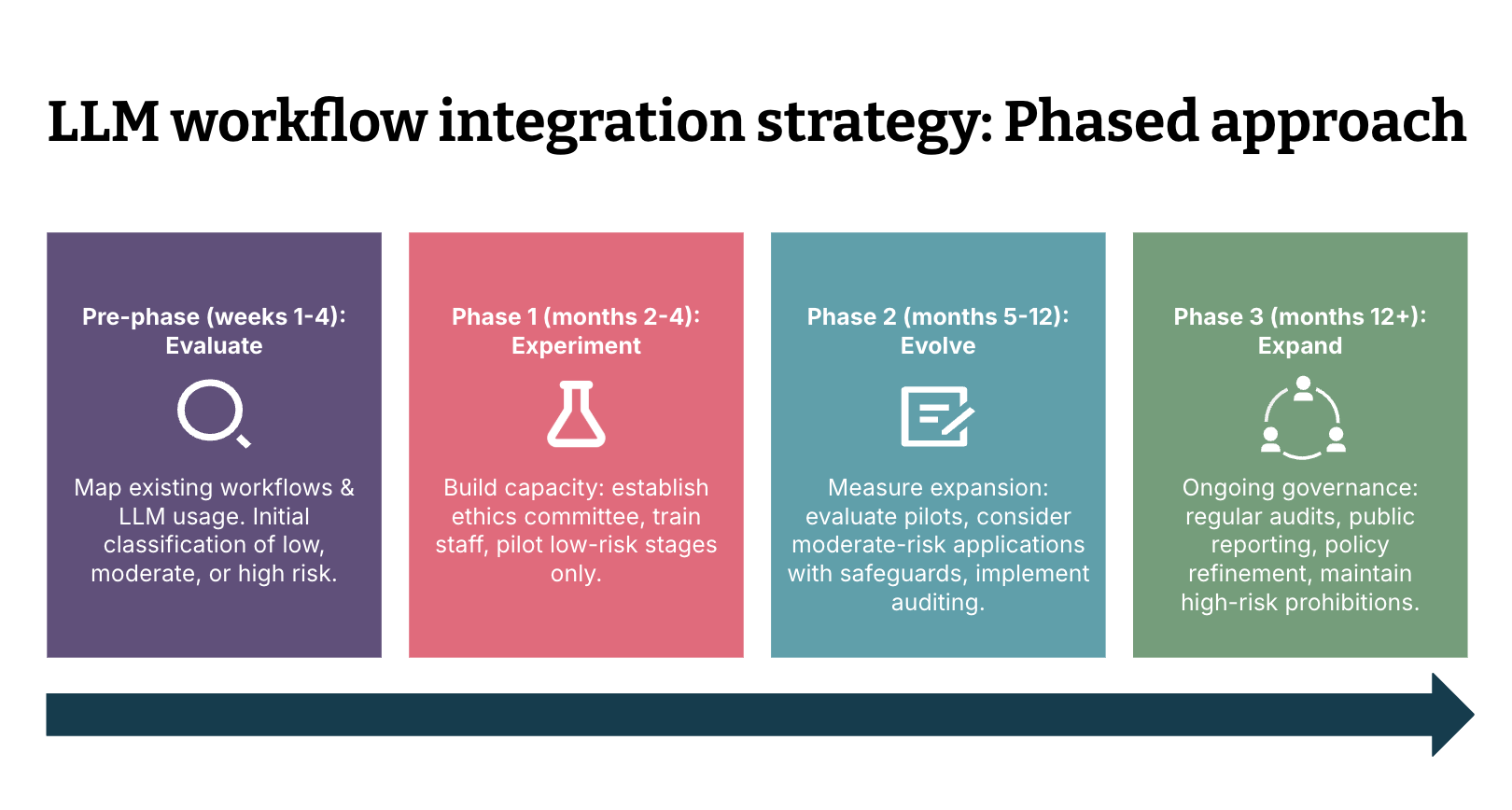

How to implement

As is often the case, Thoughtworks' approach to implementation is to start small and then scale. This means you can constantly improve quality and develop appropriate governance practices before you increase the content throughput and overall risk.

1. Start small with LLMs and balance tech and governance.

Pre-phase (weeks one to four): Evaluate

Map existing workflows and instances of LLM use across all touchpoints in a publishing workflow and do an initial classification of low, moderate, or high risk.

Phase one (months two to four): Experiment

Build capacity: Establish an ethics committee or ensure connection between existing editorial integrity teams, train staff and pilot low-risk value chain stages only. Staff training can make use of games such as the singularity game.

Phase two (months five to 12): Evolve

Measured expansion: Evaluate pilots, consider moderate-risk applications with safeguards and implement auditing.

Phase three (month 12+): Expand

Ongoing governance: Regular audits, public reporting, policy refinement and continued high-risk prohibitions.

The consensus in academia is that large language models (LLMs) should be used, but as tools to assist human work, not replace it. Their use must be responsible, transparent, and under strict human oversight to maintain the integrity of the scientific record.

We’ve looked at the specific ethical risks associated with LLM integration across the publishing process, but further consideration is needed on whether content itself carries differential ethical risk. Academic content is not all equal in terms of risk.

Typically, journals segment research into different categories:

Original research generates new data through experiments or observations; it's primary evidence.

Systematic reviews comprehensively find and synthesize all existing studies on a specific question using rigorous, pre-defined methods to minimize bias.

Narrative reviews provide expert overviews of a topic by surveying literature, but with flexible, subjective selection rather than systematic methodology.

Narrative reviews pose the highest risk of AI influence due to their reliance on author expertise, unlike the more structured systematic reviews and original research. Applying greater safeguards to narrative reviews will significantly improve overall knowledge quality and help preserve the integrity of the knowledge base.

2. Accelerate cycle time — without using LLMs

Not all bottlenecks in scholarly publishing are AI problems; many stem from the workflows themselves. To improve knowledge quality and accelerate cycle times, the academic process should first be streamlined through better data integration, clearer handoffs and redesigned workflows. Crucially, much can be done to accelerate cycle time without using AI and without exposing publishers to the risks it carries.

Some stages, however, particularly peer review, are constrained by human capacity and disciplinary norms. While it may be tempting to use AI to speed these stages up, caution is required: reviewer identification, reviewer workload management and LLM-assisted reviewing all carry risks of bias, copyright infringement and reduced scholarly rigour. AI should only be introduced once the underlying workflow issues are addressed, and never as a substitute for human academic judgement. Many LLM-based tools promise to accelerate the workflow, but care should be taken that human decisions are not unduly influenced by an LLM’s conclusions.

Essential organizational safeguards

Using AI responsibly and ethically requires the organizational safeguards listed below. Following our approach to LLM implementation can help you ensure these safeguards are in place:

- Governance: An AI ethics committee with diverse representation (technologists, editorial and ethics); clear approval pathways; board-level accountability.

- Bias auditing: Systematic testing of LLM outputs for demographic bias, measured over time; spot-checking of output; external audits; public reporting; consequence scanning as part of agile software development ceremonies.

- Transparency: Disclose where/how LLMs are used; provide opt-out options; document limitations; publish regular reports.

- Human-centred design: LLMs augment, never replace; meaningful human involvement in all significant decisions; staff training on limitations.

- Continuous learning: Stay current with research; participate in industry conversations; willingness to pull back deployments; share learning.

- Clarity over data ownership and protection: Clear policies on how data can/cannot be used and who has ownership of the data.
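As an illustration of the bias auditing safeguard, the sketch below compares how often an LLM-assisted check flags submissions from different author groups. It is a deliberately simple assumption-laden example, not a complete fairness audit: the group labels, the record format, the function names and the 0.1 threshold are all hypothetical, and a real audit would need appropriate statistical testing and careful handling of demographic data.

```python
from collections import defaultdict


def flag_rate_by_group(records):
    """Compute the share of flagged submissions per group.

    records: iterable of (group, was_flagged) tuples (hypothetical format).
    """
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for group, was_flagged in records:
        totals[group] += 1
        if was_flagged:
            flagged[group] += 1
    return {group: flagged[group] / totals[group] for group in totals}


def largest_gap(rates):
    """Return the difference between the highest and lowest group flag rates."""
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())


def needs_human_review(rates, threshold=0.1):
    """Escalate to the ethics committee when the gap exceeds a threshold (assumed value)."""
    return largest_gap(rates) > threshold


# Toy data: flags raised by an AI-generated-content detector, by author group.
audit_sample = [
    ("native_english", False),
    ("native_english", False),
    ("non_native_english", True),
    ("non_native_english", False),
]
rates = flag_rate_by_group(audit_sample)
print(rates)
print(needs_human_review(rates))
```

Running the same comparison on each audit cycle gives the "measured over time" view that the bias auditing safeguard calls for.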

Summary

LLMs can significantly boost efficiency and broaden access to content through their summarization and translation capabilities. However, they should not replace human judgement in crucial decisions that influence research careers and the creation of knowledge. Use LLMs for routine tasks, content discovery and accessibility improvements. The core principle is to preserve human expertise, judgement and accountability for the most important decisions: determining which research is published and amplified, and whose voices shape academic discourse.

Maintaining the integrity and trust of the academic record is critical as innovation cannot flourish without it. Trust, in turn, hinges on transparency and accountability. This is why organizations such as STM, COPE and UKCORI dedicate resources to establishing core principles and guidelines. The imperative to accelerate delivery must not compromise the quality of published material, which means preventing the release of content that contains errors or relies on inaccuracies and false citations.

Don't forget there are many areas where significant gains can be made without using LLMs. Stages that require human intervention, such as peer review, can be made simpler using existing technology and good design.

As this field rapidly evolves, demands for change from funders, researchers and publishers will increase, as will the number of vendors providing solutions for different parts of the publishing value chain. Technologists should actively partner with editorial teams to safeguard the integrity of the knowledge base, becoming trusted advisers in the process. Given the ethical risks introduced by LLMs, it is essential that technologists understand these risks and collaborate effectively with editorial to manage them. Achieving ethical technology deployment requires a sustained, organization-wide focus, rather than relying on isolated initiatives.

Remember: ethics is an engineering requirement, just like security or performance. Awareness of ethics and of a technology’s impact on society falls within the remit of the technologist or product leader.