Microservices - How A Large Enterprise “Grew Up” Doing It

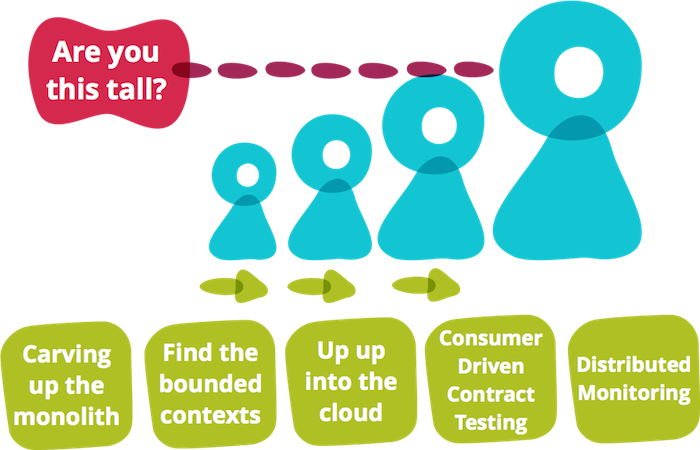

Am I tall enough?

Martin Fowler describes a set of “baseline competencies” that an organization needs before it should even consider adopting the microservices architecture pattern. Large enterprises are often hesitant to grow into these competencies, for a variety of reasons. Much has been written about the benefits that microservices provide once the journey is finished. This post describes the challenges one such large enterprise faced, and how it resolved them, as it grew to meet Fowler’s baseline competencies.

Image by Fabio Pereira

In the beginning

Microservices are characterised in different ways across the industry. Some define the architectural style in terms of building small autonomous processes, each tasked with doing one thing well, in the style of Unix programming. Others characterise it as the first post-DevOps-revolution architecture: the result of taking the lessons of DevOps and Continuous Delivery and optimizing around them.

Our initial reason for adopting microservices was to give ourselves a way to scale up teams. We started with a monolithic application, and Conway’s Law inspired us to split it up. We ended up with many small, optimally sized delivery teams.

The idea that team structure influences the architecture of a system is nothing new. Nygard sums this up well - “team assignments are the first draft of the architecture”. Our initial need to scale teams became the catalyst for adopting a number of best practices around microservices and Continuous Delivery.

Carving up the Monolith

One of the first challenges we faced when splitting up the monolith was finding the seams, or bounded contexts, of the system. This exercise had an interesting side effect: it decoupled our application by making us identify the parts of the system that are less likely to change than others. It is akin to refactoring code so that it is more amenable to change, but at an architectural level.

The task of carving many services out of a monolith is non-trivial. Each service needs its own repository, build pipeline and infrastructure, all of which takes time. As most organisations aren't optimised to build new services frequently, there is often hesitation along the lines of “if it has taken us this long for one service, how do we do it for all the other services?”

By tackling this problem iteratively, we were able to start improving our build pipeline, project creation, provisioning and deployment. That’s a large part of the benefit of architecting for microservices - it forces you to build quality and maturity not only into your software but also into the processes and practices around producing it.

Up up into the cloud

Slow hardware provisioning is a roadblock to improving DevOps practices. If it takes someone in another department weeks to provision a new box to host your new service, you have problems. Fortunately, we had already begun the journey of moving into the cloud and putting in place the toolsets needed to support cloud deployments in an enterprise setting.

Even with these tools, developers need to start owning their software, including production deployment and support. Siloed organisations have a tendency to “shield” their developers from the realities of production systems. The idea that "you build it; you own it" is very important, and again it promotes maturity around best practices: if developers have a vested interest in the stability and support of production systems, they are more likely to build them with that in mind.

Consumer Driven Contract Testing

So we had RESTful APIs deployed into the cloud and maintained by our optimally sized teams. This meant that teams were able to develop independently without stepping on each other's toes. However, we then started noticing that services we depended on were changing underneath us. The testers and business stakeholders started complaining about the stability of the system - this wasn't a problem when we had a monolith.

The technique we used to resolve this was to flip integration tests around. Instead of each consumer running its own integration tests against the contract, the service provider maintains, and runs in its own build, a suite of tests for the consumers of its API. This has a number of benefits. First, when the service provider breaks the contract, it is notified straight away. Second, if a consumer adds a new test scenario that the provider does not satisfy, that also breaks the build. These considerations drive something more important, namely communication between the teams, and they start those conversations as early as possible. The APIs can still evolve, but within the constraints required to ensure stability.
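To make the flipped tests concrete, here is a minimal sketch in Python. It is not the tooling we used; the service names, the `get_customer` handler and the shape of the contracts are all hypothetical, and a real setup would more likely use a dedicated contract-testing tool. The point is only that the consumers' expectations live in a suite that the provider's build executes.

```python
from typing import Any, Callable

# Hypothetical contracts: each consumer lists the response fields it relies on
# and the type it expects for each one.
CONSUMER_CONTRACTS: dict[str, dict[str, type]] = {
    "billing-service": {"id": int, "email": str},
    "notification-service": {"id": int, "email": str, "display_name": str},
}


def get_customer(customer_id: int) -> dict[str, Any]:
    """Stand-in for the provider's real endpoint handler (hypothetical)."""
    return {
        "id": customer_id,
        "email": "jane@example.com",
        "display_name": "Jane",
        "created_at": "2015-01-01",  # extra fields do not break any consumer
    }


def contract_violations(response: dict[str, Any], contract: dict[str, type]) -> list[str]:
    """Describe every expectation in the contract that the response fails to meet."""
    problems = []
    for field, expected_type in contract.items():
        if field not in response:
            problems.append(f"missing field '{field}'")
        elif not isinstance(response[field], expected_type):
            problems.append(f"field '{field}' is not {expected_type.__name__}")
    return problems


def verify_all_consumers(handler: Callable[[int], dict[str, Any]]) -> dict[str, list[str]]:
    """Run in the provider's build: check one response against every consumer."""
    response = handler(42)
    failures = {}
    for consumer, contract in CONSUMER_CONTRACTS.items():
        violations = contract_violations(response, contract)
        if violations:
            failures[consumer] = violations
    return failures
```

If the provider team removed `display_name` from the response, `verify_all_consumers` would report a violation for `notification-service` only, which tells the provider exactly which team to talk to before the change ships.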

The butterfly effect

Another side effect of decoupling a monolith is that the monitoring effort multiplies. Where before you might have had a single endpoint for a single application, you now have many services. In addition to ensuring that each service is up, you also need to provide sufficient monitoring of the infrastructure that supports it.

In a monolith, if a service call fails we know exactly where to look. This is no longer the case in a decoupled architecture with many moving pieces. When a service is failing, the actual failure may be many services down the dependency tree. The mean time to recovery (MTTR) is also affected - we have to investigate the failing service as well as all of its transitive dependencies. We addressed this problem with PRTG, a monitoring tool that gives us high-level visibility of the different parts of the system through a dashboard.
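The search through transitive dependencies can be sketched as a walk over a dependency map. This is an illustration only, not how PRTG works: the service names, the `DEPENDENCIES` map and the `healthy` status dictionary are hypothetical, and the map is assumed to be acyclic apart from a simple guard against infinite recursion.

```python
# Hypothetical dependency map: service -> services it calls directly.
DEPENDENCIES = {
    "web-frontend": ["orders", "customers"],
    "orders": ["inventory", "payments"],
    "customers": [],
    "inventory": ["warehouse-db"],
    "payments": [],
    "warehouse-db": [],
}


def root_causes(service, healthy, dependencies, _memo=None):
    """Return the unhealthy services at or below `service` that have no
    unhealthy dependency of their own, i.e. the likely origins of the failure.

    `healthy` maps service name -> bool; unknown services count as healthy.
    """
    memo = {} if _memo is None else _memo
    if healthy.get(service, True):
        return set()
    if service in memo:
        return memo[service]
    memo[service] = set()  # placeholder so a dependency cycle cannot recurse forever
    causes = set()
    for dep in dependencies.get(service, []):
        causes |= root_causes(dep, healthy, dependencies, memo)
    # If nothing further down is failing, this service is itself a likely origin.
    memo[service] = causes or {service}
    return memo[service]
```

Called on a failing front end whose `orders`, `inventory` and `warehouse-db` dependencies are also marked unhealthy, the walk points at `warehouse-db` rather than at the service where the failure was first noticed.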

Another form of monitoring that we found essential was distributed log aggregation. This allowed us to aggregate logs from different services and run federated queries across them. It can be enhanced even further by attaching a correlation ID to each request. Distributed logging allowed us to follow the life of a request as it hopped across different services in our system.
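As a rough sketch of the correlation ID idea, the Python below stamps every log line with the ID of the request being handled and passes that ID on to downstream calls. The `X-Correlation-ID` header name, the `handle_request` function and the log format are assumptions made for illustration, not a description of our actual services.

```python
import contextvars
import logging
import uuid

# Holds the correlation ID for whichever request is currently being handled.
correlation_id = contextvars.ContextVar("correlation_id", default="-")


class CorrelationIdFilter(logging.Filter):
    """Copy the current correlation ID onto every log record."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.correlation_id = correlation_id.get()
        return True


def build_logger(name: str, stream=None) -> logging.Logger:
    """Create a logger whose lines include the correlation ID."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    handler = logging.StreamHandler(stream)  # defaults to stderr when stream is None
    handler.addFilter(CorrelationIdFilter())
    handler.setFormatter(logging.Formatter("%(name)s [%(correlation_id)s] %(message)s"))
    logger.addHandler(handler)
    return logger


def handle_request(incoming_headers: dict, logger: logging.Logger) -> dict:
    """Reuse the caller's ID if present, otherwise start a new one.

    Returns the headers to send on any downstream call, so that the next
    service logs under the same ID. The header name is an assumed convention.
    """
    request_id = incoming_headers.get("X-Correlation-ID") or uuid.uuid4().hex
    token = correlation_id.set(request_id)
    try:
        logger.info("handling request")
        return {"X-Correlation-ID": request_id}
    finally:
        correlation_id.reset(token)
```

With every service logging in this shape, a query for a single ID in the aggregated logs returns the full path of one request across services.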

Going forward

We certainly have difficult problems to address in the future. These include tough organizational changes, such as aligning delivery with products rather than projects and empowering developers to function in a DevOps environment. There is also scope to automate more of our infrastructure provisioning via Ansible. In spite of these future challenges, we have started to reap some of the benefits that come from putting the prerequisites for microservices in place. What about your microservices journey?

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.