Risk-based failure modelling for increased resilience

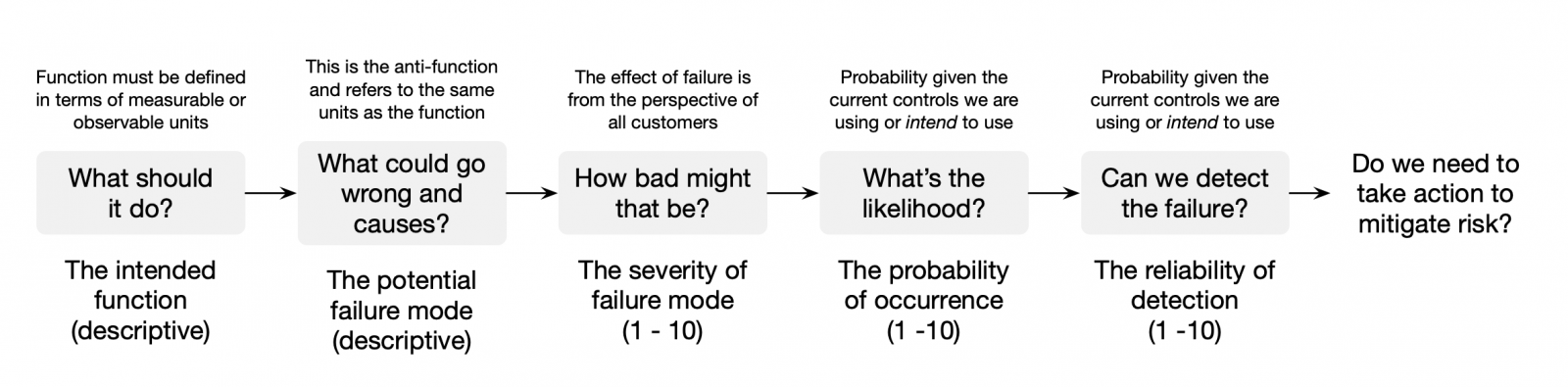

In product engineering, risk can be understood as a combination of three things: the severity of the effect of a potential failure, the likelihood or chance of the failure occurring, and the ability to detect the failure (or effect of the failure) if it does occur.

A failure is the inability of a system to perform its normal, intended function over its specified life or comply with an intended requirement. Failures can cost time, money, reputation, resources, and even the health and wellbeing of the people involved.

Risk-based failure modelling is a technique, based on failure modes and effects analysis, for understanding the risk associated with the decisions we need to make so that we can improve those decisions.

Understanding risk

Risk needs to be deeply understood both from the perspective of how the design decisions we make create risk and how we manage risk once a decision is made and the risk is built into a system. The definition of a system here can be taken as a discrete product or a business process. Be aware that we are not talking here about what the possible causes of risks might be, only the potential for the system to fail (or produce undesirable outcomes) based on the decisions we make.

It is important to understand that systems, by themselves, do not fail; components or individual functions within a system fail, and those failures result in system failure. To be effective, risk analysis needs to be conducted both at the system level and at the component or function level (so that we understand how and why a component or function could fail).

When we make fundamental engineering or architectural decisions, each decision carries risk of failure, depending on the environment within which the system exists and how it is being used. This happens whether we know it or not and these risks become a characteristic of the system.

While no-one has a crystal ball to say exactly how and when a system will fail, we can apply logical thinking and past experience, together with risk prediction tools, to help understand the likelihood of different types of failures. We can then identify appropriate controls, both prevention and detection, to help mitigate or reduce the risk.

Failure modelling

In product engineering, risk-based failure modelling is a formal process conducted by the team responsible for both delivery and run. It cannot be delegated to a risk team or a risk function. To be effective, the modelling needs to be as objective as possible so that there is consistency of evaluation (across teams and projects and over time) and there is a way to compare risks for prioritization of action.

To this end, an agreed set of descriptive ratings is needed to rank each potential failure. Descriptive ratings help avoid, or at least minimise, the biases and fallacies often present in evaluating risk - confirmation bias and false equivalence are two common ones.
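As a rough illustration of what an agreed set of descriptive ratings might look like in code, the sketch below maps severity labels to scores and rejects any label that is not on the agreed list. The labels, the scores and the names `Severity` and `severity_from_label` are all assumptions made for this example; a real scale would be defined and agreed by the teams using it.

```python
from enum import IntEnum


class Severity(IntEnum):
    """Hypothetical severity scale; labels and scores are illustrative only."""
    NEGLIGIBLE = 1     # no noticeable effect on the customer
    MINOR = 3          # inconvenience, workaround available
    MODERATE = 5       # degraded function, some customers affected
    MAJOR = 8          # loss of a primary function
    CATASTROPHIC = 10  # unacceptable loss, e.g. safety or data integrity


def severity_from_label(label: str) -> Severity:
    """Map an agreed descriptive label to its score, rejecting ad-hoc values."""
    try:
        return Severity[label.strip().upper()]
    except KeyError:
        allowed = ", ".join(s.name.lower() for s in Severity)
        raise ValueError(
            f"unknown severity {label!r}; use one of: {allowed}"
        ) from None


# Every team rating a "major" effect gets the same score.
print(severity_from_label("major").value)
```

Forcing evaluators to pick from a fixed list, rather than typing a number, is one way to keep ratings comparable across teams and over time. Similar scales could be defined for occurrence and detection.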

Good failure modelling is done from the perspective of both the design and architectural decisions we need to make and the run conditions in which we intend to operate. Often, potential risks identified in one can be used to inform the other. The approach to analysis is common to both phases, in that similar questions are posed to evaluate risk, but there are differences in how we evaluate the likelihood of a failure and determine our ability to quickly and accurately detect a failure if it were to occur.

Controls in the design and delivery phase and the run phase are different (although our experience is that most high-performing teams make little distinction between design, build and run - they are a continuum). However, both phases value a combination of prevention controls (controls that prevent the cause of the potential failure) and detection controls (controls that identify the effect of the failure or the failure itself). This is especially true where we are trying to mitigate critical risks: risks that, when realised, represent an unacceptable level of failure and loss, especially to an end user.

Examples of controls include organizational design rules and architectural principles, good engineering standards and practices, and information obtained from corrective and preventive actions from previously solved problems (lessons learned).

Mitigating potential failure

The best way to mitigate potential failures, especially failures that can be influenced at the design phase, is to increase our ability to make better decisions. The risk-based failure modelling process utilizes as much previous knowledge and experience as possible to answer the following questions about each function of a system compared to the desired state of each function:

- How could the component or function within the system fail? What does failure look like?

- How bad might the failure be (from the perspective of any customer reasonably expected to experience the failure)?

- What are the possible root causes of the failure? What are the possible mechanisms by which the failure might occur?

- What is the likelihood, given the design decisions we are about to make and the run conditions and noise factors the system is expected to experience, of the failure occurring?

- How good are our controls (for example, automated testing or observability and automated correlation of events) at detecting the failure or the effects of failure?

- What, if anything, should we do to reduce or mitigate the risk? How can we improve the controls to reduce the chance of a failure occurring and/or increase our ability to detect a failure?

Risk analysis and mitigation process

Criticality (C) = Severity x Occurrence (SO)

Risk Priority Number (RPN) = Severity x Occurrence x Detection (SOD)
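The two formulas above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed implementation: it assumes a 1 to 10 scale for each rating and the common FMEA convention that a higher Detection rating means the failure is harder to detect. The `FailureMode` class, the `prioritise` helper, the example failure modes and their ratings are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FailureMode:
    name: str
    severity: int    # S: how bad the effect is (assumed scale 1-10)
    occurrence: int  # O: how likely the failure is (assumed scale 1-10)
    detection: int   # D: assumed convention, higher = harder to detect

    def __post_init__(self) -> None:
        # Reject ratings outside the assumed 1-10 scale.
        for field_name in ("severity", "occurrence", "detection"):
            value = getattr(self, field_name)
            if not 1 <= value <= 10:
                raise ValueError(f"{field_name} must be 1-10, got {value}")

    @property
    def criticality(self) -> int:
        """Criticality (C) = Severity x Occurrence."""
        return self.severity * self.occurrence

    @property
    def rpn(self) -> int:
        """Risk Priority Number (RPN) = Severity x Occurrence x Detection."""
        return self.severity * self.occurrence * self.detection


def prioritise(modes: list[FailureMode]) -> list[FailureMode]:
    """Order failure modes by RPN, using criticality to break ties."""
    return sorted(modes, key=lambda m: (m.rpn, m.criticality), reverse=True)


# Hypothetical ratings, for illustration only.
modes = [
    FailureMode("slow login response", severity=3, occurrence=6, detection=2),
    FailureMode("expired security certificate", severity=9, occurrence=4, detection=7),
    FailureMode("weak encryption in transit", severity=9, occurrence=2, detection=5),
]

for mode in prioritise(modes):
    print(f"{mode.name}: C={mode.criticality}, RPN={mode.rpn}")
```

Sorting by RPN is only one possible way to prioritise action. A team might equally decide that any failure mode above an agreed severity or criticality threshold needs attention regardless of its RPN.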

These questions prompt us to consider the conditions in which the system and its functions need to operate and the noise factors that the system will be subjected to.

Noise factors are anything that interferes with a system performing its normal, intended functions and can include things like unexpected loads from other services, security patching and updates, hardware faults and failures, and denial of service attacks. With a little imagination, we can quickly develop a list of noise factors that we should consider when making decisions that affect how the system is built and operated.

Where we are able to identify the possible causes of a serious failure, we can introduce or improve controls that work to prevent the cause from occurring in the first place. And where a failure is potentially catastrophic, we should consider both controls to prevent the failure and controls to detect it as early as possible so that we minimise the chance of the failure affecting customers.

Let's use a simple example to illustrate the difference.

If a specific function of a software component is to provide secure authentication between a user and a service, there is a risk of the loss of integrity of the user's authentication information (the potential failure of the function of the component). One possible cause of this loss of integrity could be missing, poor or incomplete encryption of information in transit.

Therefore, a decision is made in the design phase to use a security certificate issued by a Certificate Authority for end-to-end encryption of the user's authentication information. Based on the decision to use a digital certificate (the intended design control), the likelihood of the potential failure occurring is then evaluated using the rating system. If the evaluation shows that the risk is still too high, further mitigation may be required.

Correspondingly, in the operation of that same function in the run environment, the same failure is considered (the potential loss of the integrity of the user's authentication information), but in this case a possible cause of the failure might be an expired security certificate (no encryption).

Assuming there are no existing controls in place to address this risk, the risk of the expired security certificate could be mitigated by the planned introduction of an automated check and notification script to flag a certificate when it is less than 1 month from expiry. The service management plan would need to be updated to incorporate the new control.
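A detection control of this kind might look something like the sketch below, which uses only the Python standard library. It is an illustration under stated assumptions rather than a production script: "1 month" is approximated as 30 days, the notification is reduced to a printed warning, and the names `WARNING_WINDOW`, `parse_not_after`, `is_expiring`, `fetch_not_after` and `check` are made up for this example.

```python
import socket
import ssl
from datetime import datetime, timedelta, timezone

# Assumption: "less than 1 month from expiry" is approximated as 30 days.
WARNING_WINDOW = timedelta(days=30)


def parse_not_after(not_after: str) -> datetime:
    """Convert a certificate 'notAfter' string, e.g.
    'Jan  5 09:34:43 2018 GMT', to a timezone-aware UTC datetime."""
    seconds = ssl.cert_time_to_seconds(not_after)
    return datetime.fromtimestamp(seconds, tz=timezone.utc)


def is_expiring(not_after: str, now: datetime,
                window: timedelta = WARNING_WINDOW) -> bool:
    """True if the certificate has expired or expires within the window."""
    return parse_not_after(not_after) - now < window


def fetch_not_after(host: str, port: int = 443) -> str:
    """Read the 'notAfter' field of the certificate presented by host:port."""
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=10) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["notAfter"]


def check(host: str) -> None:
    """Flag the certificate for the given host if it is close to expiry."""
    now = datetime.now(timezone.utc)
    if is_expiring(fetch_not_after(host), now):
        # Placeholder notification; a real control would alert the team.
        print(f"WARNING: certificate for {host} expires within "
              f"{WARNING_WINDOW.days} days")
```

A scheduled job could call `check` for each service endpoint. Because the default SSL context verifies the certificate, a certificate that has already expired would cause the connection itself to fail, so a failed handshake should also be treated as an alert.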

Problem solving as a preventive activity in risk management

When failures do occur in live systems, proper problem solving (with deliberate root cause analysis) can be used to feed information back to improve future risk analysis. The actual root causes of failures are found and verified, and that knowledge can be used to inform future decisions that carry a risk of similar failures.

This information becomes proprietary knowledge about how systems actually work in the real world and it increases the accuracy of predictions of future failures for better decision-making and better delivery and run controls.

The knowledge must be centrally stored within the organization and must be accessible for use in all new projects and incidents. Over time and across projects, this knowledge grows and is supported by real-world experience. This, in turn, reduces risk and increases the resilience of systems that we build to survive in the wild.

Risk needs to be deeply understood, both from the perspective of how design decisions we make create risk and how we manage risk once a decision is made and the risk is built into a system. Like problem-solving skills, risk-based failure modelling skills are essential to anyone involved in architectural and engineering decisions and product build and run. The better we understand how what we do affects risk, and by extension, the experience of the customers who use the systems we build, the more robust, reliable and resilient we can make those systems.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.