The current excitement around using generative AI (GenAI) to make software delivery faster is putting a spotlight on how collaborative software delivery actually works. In conversations about using GenAI for software delivery, we're finding that the following three points are getting a lot of renewed attention:

When setting expectations about the potential gains of GenAI coding assistants, we once again need to reinforce a shared understanding among stakeholders that software development is not just a coding factory.

Deciding where to use GenAI for software delivery tasks means understanding the mechanisms that make teams more effective, to avoid pitfalls and find the right leverage points.

As organizations are considering the use of GenAI coding assistants, there is a resurgence of interest in the question of how to measure if they're successful at software delivery.

Understanding these three facets is the basis for successfully using GenAI to improve software delivery.

Software delivery is not a code factory

When the potential of GenAI for coding assistance became more apparent, many leaders, frustrated with the slow speed of their custom IT, got excited. And rightfully so: GitHub claims developers can complete tasks 55% faster when using GitHub Copilot. While we think it's not quite as simple as that one number, because the gain depends on the characteristics of the task and on the developer's background, our experience also shows that GenAI coding assistants are indeed very useful tools that bring a net positive to developer productivity and experience.

However, even if coding assistants could create a consistent overall 55% gain in coding speed, we should not conclude that you can now do the same work with half the people. To do so would be an oversimplification of what software engineering actually is, reducing it to coding alone and treating it like production work in a factory. Instead, software creation involves a great deal of design activity, and comes with much more uncertainty and less repeatability than a smoothly running assembly line. Software delivery is more comparable to a design process in a studio than to a factory.

To turn once more to GitHub's research: One of their 2023 surveys found that the teams questioned spend only a little more than 30% of their time on coding. The rest of their time is spent on collaboration, talking to end users, designing solutions to things they haven't done before or learning new skills.
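A rough back-of-the-envelope calculation, in the spirit of Amdahl's law, makes this concrete. Assume, generously and purely for illustration, that coding takes a share $p = 0.3$ of a team's time, that every coding task takes 55% less time with an assistant, and that everything else stays unchanged:

$$
\frac{T_{\text{new}}}{T_{\text{old}}} = (1 - p) + p\,(1 - 0.55) = 0.7 + 0.3 \times 0.45 = 0.835
$$

$$
\text{throughput gain} = \frac{T_{\text{old}}}{T_{\text{new}}} = \frac{1}{0.835} \approx 1.20
$$

Under these assumptions the team would get roughly 20% more done, not 50% or more, and that still ignores any effects on the rest of the delivery process.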

Leaders should therefore not expect to boost a team's throughput by 50% just by introducing a coding assistant.

Effective teams are systems of collaboration

To use GenAI for the things that have the most potential to make a difference, we need to understand the mechanics of effective software delivery, many of which are counterintuitive. Unlike a code factory, software delivery is a highly collaborative process, a team sport. The focus, therefore, always has to be on the team and the collaborative system as a whole, not just on the individuals and individual tasks.

We see the following pitfalls when introducing GenAI to improve software delivery teams without keeping the overall system in mind:

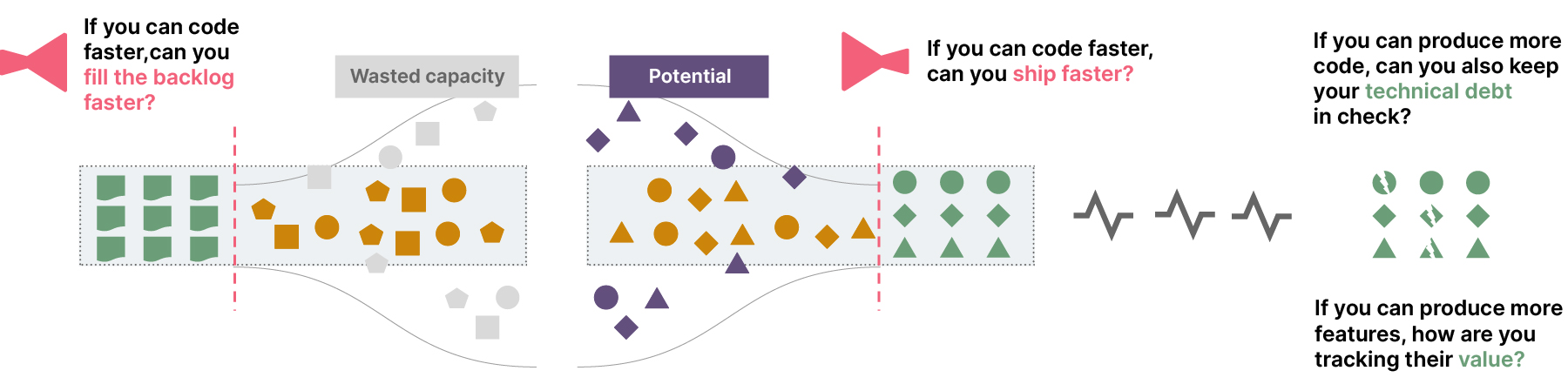

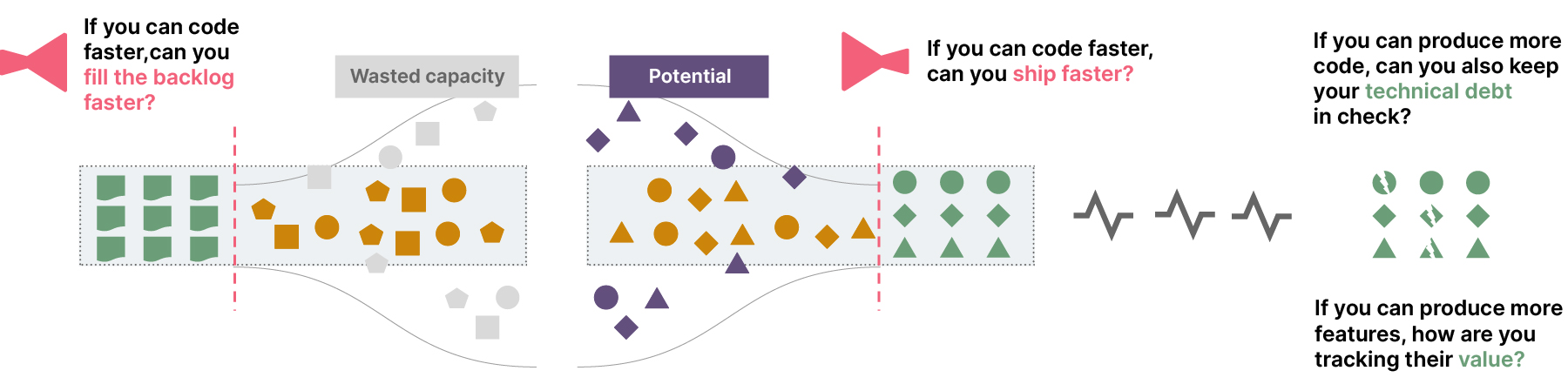

Pitfall: Creating bottlenecks by boosting individual parts

Let's once more say that, hypothetically, a coding assistant could enable a team to finish its coding work in half the time, doubling the code throughput. What happens to the rest of the process? If you can code faster, can you fill the backlog faster? Can you ship faster? More lines of code means more maintenance and technical debt; can you keep this in check? More code throughput means more feature throughput; are you ready to monitor the value of all of those features, provide customer support for them and provide the underlying non-IT processes?
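The question "what happens to the rest of the process?" can be made concrete with a deliberately simplified model. The following is a minimal sketch, assuming a linear pipeline of stages with fixed weekly capacities, where steady-state throughput is capped by the slowest stage. The stage names, the numbers and the helper functions are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    capacity_per_week: float  # hypothetical: work items this stage can finish per week


def pipeline_throughput(stages: list[Stage]) -> float:
    """In a linear pipeline at steady state, throughput is bounded by the slowest stage."""
    return min(stage.capacity_per_week for stage in stages)


def bottleneck(stages: list[Stage]) -> str:
    """Name of the stage with the lowest capacity."""
    return min(stages, key=lambda s: s.capacity_per_week).name


def with_faster_stage(stages: list[Stage], name: str, factor: float) -> list[Stage]:
    """Return a copy of the pipeline with one stage's capacity multiplied by `factor`."""
    return [
        Stage(s.name, s.capacity_per_week * factor) if s.name == name else s
        for s in stages
    ]


# Invented capacities, not measurements.
before = [
    Stage("refine backlog", 12),
    Stage("code", 8),
    Stage("review and test", 10),
    Stage("deploy and operate", 9),
]
after = with_faster_stage(before, "code", 2.0)  # coding twice as fast

print(pipeline_throughput(before), bottleneck(before))  # 8 code
print(pipeline_throughput(after), bottleneck(after))    # 9 deploy and operate
```

In this toy model, doubling the coding stage moves the bottleneck to deployment and raises overall throughput from 8 to 9 items per week, about 12.5%, which is far from double. Real delivery systems are messier, but the shape of the effect is the same.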

Solid engineering and product development practices are still crucial to make software delivery successful, maybe even more so with the amplification of GenAI.

Pitfall: Reducing team collaboration

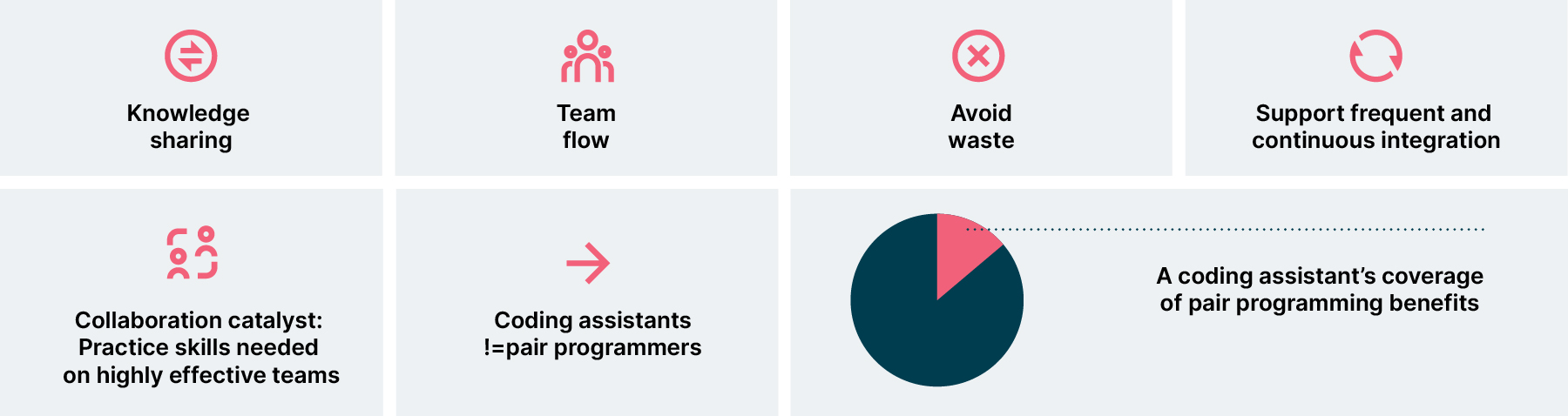

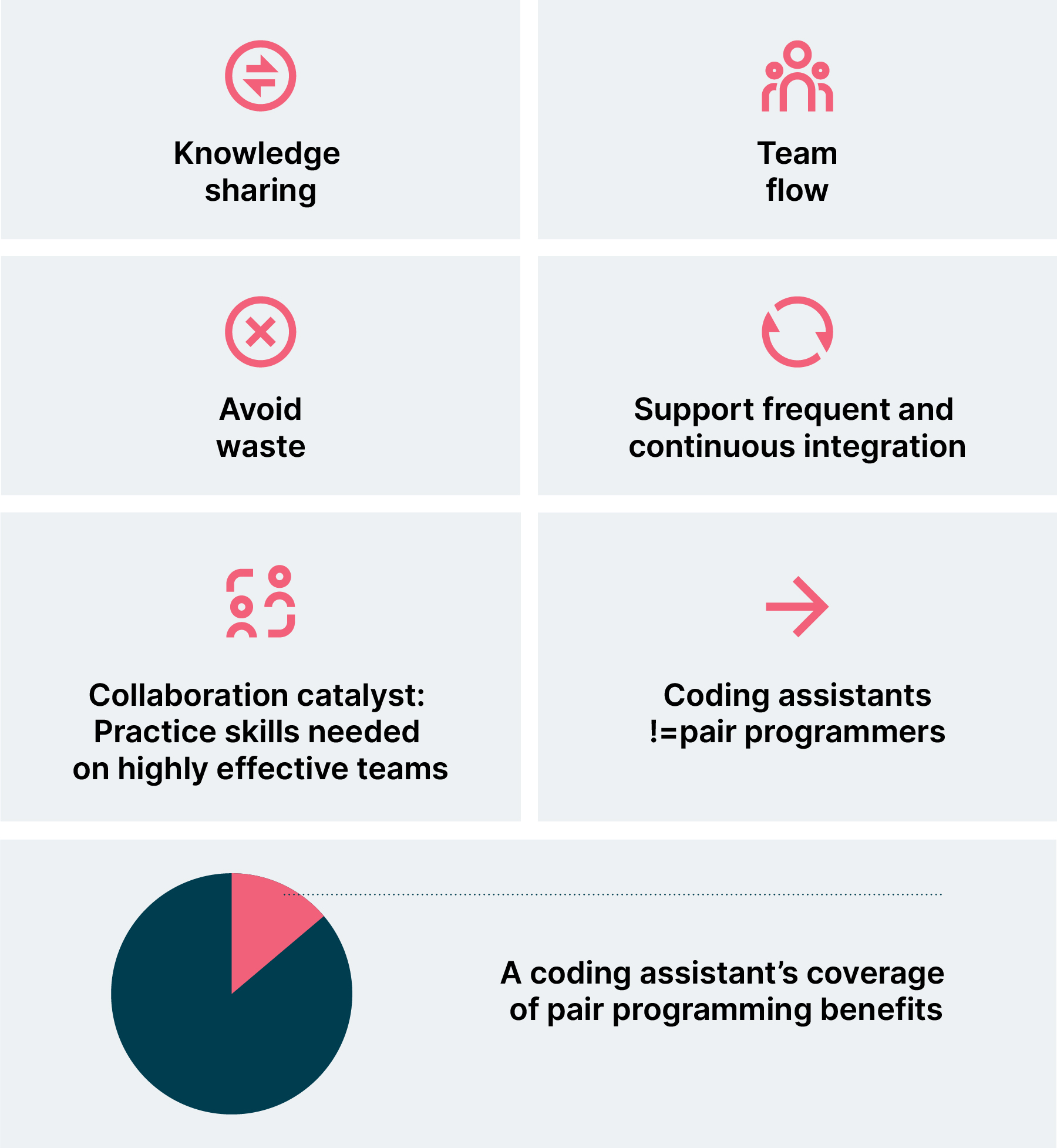

We are big advocates of pair programming, so one of the questions we are frequently asked about coding assistants is, "Does this replace pair programming?" At one level, this question may seem like an obvious one to ask. After all, one of GenAI's promises is to improve flow by making information easier to discover, thus reducing the dependency on other team members for knowledge sharing or collaborative brainstorming and problem solving, precisely the things that pairing gives us. However, while we very much embrace the potential of GenAI here, we are wary of taking this too far. It could, for example, further isolate team members from each other, a trend that has unfortunately already been on the rise with the proliferation of remote work.

Therefore we believe that pair programming, or pairing among team members in general, is still a crucial part of effective software delivery, maybe even more than before. Pairing is about making the team as a whole better. So, consider using GenAI to boost pairs, not just individuals. That also has the nice side effect of mitigating some of the quality and hallucination risks, as two brains can see more than one.

Benefits and purposes of Pair Programming

Pitfall: Amplifying the bad and the ugly

Large language models (LLMs) repeat patterns of language; they do not actually understand the meaning of that language, nor can they tell whether what they are reproducing is "good" or "bad". Coding assistants are a great illustration of that. They will repeat what they see, either in their training data or in the codebase the developer is working on, without distinguishing "good" code from "bad" code.

GenAI amplifies whatever we apply it to. So, if the quality of the data we feed it for a task is bad, we amplify that. If we let GenAI generate documentation based on very badly written, inexpressive code, that documentation probably won’t be useful; it may even be harmful. If we create a GenAI knowledge search based on documentation that is outdated, ambiguous and badly written, then we are amplifying bad information.

Even worse, we ourselves can be the ones amplifying the wrong thing. If we use GenAI to assist with tasks in our processes that perhaps we shouldn't be doing in the first place (like the wrong type of testing, or deployment processes that aren't properly automated), then we're doing little more than lowering the pain threshold that might otherwise prompt us to improve the process. In other words, instead of making a task slightly less painful with GenAI, we could invest in automating it. For crucial and frequently performed parts of the process, using GenAI to paper over such problems instead of making these improvements can do more harm than good.

Measuring delivery performance and success

The introduction of GenAI-powered tools like coding assistants is shaping up to be a process that many organizations want to measure extensively. Everybody wants to know, "What do we get out of it? How do we measure it?" The answer to this question does not change with GenAI, though. I always answer it with a counter-question: "What are you measuring today? Can you observe the trend of your current measures after introducing the tool?"

How to best measure the effectiveness and performance of software teams is a wide-ranging and contentious field. There are some recent examples of how contentious this topic can be, like these analyses of the still prevailing misconceptions about measuring software team performance by Kent Beck and Gergely Orosz, or this one by Daniel Terhorst-North.

The following are some of the key concepts we usually turn to when we think about delivery metrics at Thoughtworks:

Building the thing right

We like the DORA metrics as lagging indicators of team performance. For leading indicators, we look to measures that drive the focus to sources of overhead and waste, because waste is easier to measure than productivity. A great place to start is to measure feedback loops, like the examples in this article on Maximizing Developer Effectiveness. The DevEx approach to measuring productivity also drives attention to feedback loops, flow state, and cognitive load.
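To make the DORA metrics a little more tangible, here is a minimal sketch of how DORA's four key metrics (commonly listed as deployment frequency, lead time for changes, change failure rate, and time to restore service) could be derived from deployment records. The `Deployment` record, its fields and the sample data are hypothetical; real teams would pull this data from their CI/CD pipeline and incident tooling. For simplicity, the sketch also assumes that an outage starts at the moment of the failed deployment.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass(frozen=True)
class Deployment:
    """Hypothetical record of one production deployment."""
    deployed_at: datetime
    commit_times: tuple[datetime, ...]     # when each change in this deployment was committed
    caused_failure: bool = False
    restored_at: Optional[datetime] = None  # when service was restored, if the deployment failed


def hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600


def dora_metrics(deployments: list[Deployment], period_days: int) -> dict[str, float]:
    # Lead time for changes: commit -> running in production, per change.
    lead_times = [hours(d.deployed_at - c) for d in deployments for c in d.commit_times]
    failures = [d for d in deployments if d.caused_failure]
    # Time to restore: simplified as failed deployment -> service restored.
    restore_times = [hours(d.restored_at - d.deployed_at) for d in failures if d.restored_at]
    return {
        "deployments_per_day": len(deployments) / period_days,
        "median_lead_time_hours": median(lead_times) if lead_times else 0.0,
        "change_failure_rate": len(failures) / len(deployments) if deployments else 0.0,
        "median_time_to_restore_hours": median(restore_times) if restore_times else 0.0,
    }


# Invented sample data for one week.
t0 = datetime(2024, 1, 1, 9, 0)
deployments = [
    Deployment(t0 + timedelta(days=1), (t0, t0 + timedelta(hours=12))),
    Deployment(
        t0 + timedelta(days=3),
        (t0 + timedelta(days=2),),
        caused_failure=True,
        restored_at=t0 + timedelta(days=3, hours=2),
    ),
    Deployment(t0 + timedelta(days=5), (t0 + timedelta(days=4, hours=18),)),
]

metrics = dora_metrics(deployments, period_days=7)
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```

With this invented data, the median lead time is 18 hours and one in three deployments caused a failure. As lagging indicators, these numbers are most useful as trends over many weeks rather than as a single snapshot.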

The SPACE framework for developer productivity offers another useful mental model for thinking about categories of metrics — as long as metrics in the "A" category (for "Activity") are not overused. GitHub's research shows an example of how to apply SPACE to answer questions about GitHub Copilot’s effectiveness.
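One way to use SPACE as a mental model is as a checklist for a set of metrics. The sketch below tags each metric with one of the five SPACE dimensions (Satisfaction and well-being, Performance, Activity, Communication and collaboration, Efficiency and flow) and flags gaps as well as an over-reliance on Activity metrics. The metric names, the `review_metric_mix` helper and the 25% threshold are hypothetical choices for illustration, not part of the framework itself.

```python
from collections import Counter
from enum import Enum


class Space(Enum):
    SATISFACTION = "Satisfaction and well-being"
    PERFORMANCE = "Performance"
    ACTIVITY = "Activity"
    COLLABORATION = "Communication and collaboration"
    EFFICIENCY = "Efficiency and flow"


def review_metric_mix(metrics: dict[str, Space], max_activity_share: float = 0.25) -> list[str]:
    """Return warnings about dimensions with no metrics and too many Activity metrics."""
    counts = Counter(metrics.values())
    warnings = []
    missing = [dim.value for dim in Space if counts[dim] == 0]
    if missing:
        warnings.append("no metrics for: " + ", ".join(missing))
    activity_share = counts[Space.ACTIVITY] / len(metrics) if metrics else 0.0
    if activity_share > max_activity_share:
        warnings.append(f"activity metrics make up {activity_share:.0%} of the set")
    return warnings


# Hypothetical metrics someone might propose for a coding assistant rollout.
rollout_metrics = {
    "developer survey: satisfaction with tooling": Space.SATISFACTION,
    "change failure rate": Space.PERFORMANCE,
    "suggestions accepted per day": Space.ACTIVITY,
    "lines of code generated": Space.ACTIVITY,
    "uninterrupted focus time per day": Space.EFFICIENCY,
}

for warning in review_metric_mix(rollout_metrics):
    print(warning)
```

For this example set, the check would point out that nothing covers communication and collaboration, and that two of the five metrics only count activity.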

Building the right thing

All of the above metrics focus on "building the thing right". It's even more important to measure if you're "building the right thing", and if your product value is actually manifesting itself. You can be as fast and effective at building as you want, but if what you are building is not valuable, you're just building something useless, but faster. So, think about how to measure the improvements and value you want to provide with your software, like the improvements in experience, in user efficiency, your general ability to provide a high quality service and, of course, profit and revenue.

Metrics in general

Across all metrics, the following are some of the general principles we apply to measuring:

Measure team effectiveness, not individual performance.

Measure outcomes over outputs.

Look at trends over time, instead of comparing absolutes across teams.

Dashboards are placeholders for conversations.

Measure what is useful, not what is easy to measure.
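The principle of looking at trends over time, rather than comparing absolutes across teams, also answers the earlier question of what to observe after introducing a tool. A minimal sketch, using invented weekly lead-time data and a hypothetical `relative_trend` helper, compares each team's median after a rollout week against that same team's own baseline:

```python
from statistics import median


def relative_trend(weekly_values: list[float], rollout_week: int) -> float:
    """Percentage change of the median after the rollout vs. the team's own baseline."""
    before = weekly_values[:rollout_week]
    after = weekly_values[rollout_week:]
    if not before or not after:
        raise ValueError("need data both before and after the rollout")
    baseline = median(before)
    return (median(after) - baseline) / baseline * 100


# Invented lead times in days, one value per week; the tool is introduced in week 3.
lead_time_days = {
    "team-a": [4.0, 5.0, 4.5, 3.5, 3.0, 3.2],
    "team-b": [12.0, 11.0, 13.0, 12.5, 12.0, 11.5],
}

for team, values in lead_time_days.items():
    print(team, round(relative_trend(values, rollout_week=3), 1))
```

Team A's median lead time drops by about 29% relative to its own baseline, while team B's stays flat. Comparing team A's 3.2 days directly with team B's 12 days would say little about either team's progress. A before/after median also doesn't prove that the tool caused the change; it is a starting point for the kind of conversation a dashboard should prompt.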

We also recommend you read Patrick Kua's timeless advice about the use of metrics, such as linking your metrics to goals and seeing them as drivers for change.

The GenAI technology trend should be viewed as an opportunity to revisit your approach to measuring software delivery effectiveness and to think about what you want to monitor when you introduce GenAI tooling.

Conclusions

Generative AI is currently creating a lot of hype; there’s a real fear of missing out across the industry. Under pressure, we tend to fall back on simplistic answers to complex questions. Three complex things will remain constant, though, regardless of this technology shift:

Building software is a design process that is exposed to a lot of variables; it isn’t a predictable assembly line of creating code. And the process of creating software will become even more focussed on design as GenAI coding assistants become more and more useful.

We still need to build software in collaboration and need to cater for the mechanics and complexities of human teamwork.

While some of the details of which metrics exactly to use might shift over time, the principles of measuring outcomes will remain the same.

The more we keep those constants in mind, the more effectively we can use GenAI in a way that makes things better, not just the same — or even worse.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.