Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.

In Part 1 of this post, we explained how an A/B test result can mislead you and how to interpret it correctly. In this second part, we’ll walk through an example that shows how to run an A/B test step by step.

How to apply this in practice?

Here I would like to give an example of how we do A/B testing.

We have a SaaS product called Mingle, an online collaboration tool for software teams. As an example, let’s say we want to A/B test two different signup forms. We put people into two groups: group A gets the current form while group B gets a new one (our hypothesis is that B will be more effective at generating signups). We would like to find out which one converts better.

First, we need to ask ourselves 2 questions:

1. How much improvement do we expect?

2. What sample size is sufficient?

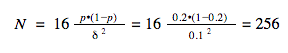

Our conversion baseline is 20% (p = 0.2). With the planned changes, we are expecting a 10% improvement (σ = 0.1). We can use this formula to calculate the sample size (N) we need:

In total, we need 516 trials across groups A and B. We get about 500 sign-ups every week, so collecting enough data for a statistically significant result would take about 1 week. This is a reasonable amount of time for us.
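The arithmetic can be sketched in a few lines of Python. This is only an illustration under stated assumptions: it uses a common rule of thumb, n = 16 · p(1 − p) / δ² per group (roughly 80% power at a 5% significance level), and it treats the 10% improvement as an absolute change of 0.1. The formula used in this post may differ slightly, which would explain why the sketch arrives at 512 rather than 516. The function name and the weekly traffic variable are hypothetical.

```python
import math


def sample_size_per_group(p: float, delta: float) -> int:
    """Rule-of-thumb sample size for ONE group.

    p     -- baseline conversion rate (0.2 in the example)
    delta -- absolute improvement we want to detect (assumed 0.1 here)
    """
    raw = 16 * p * (1 - p) / delta ** 2
    # Round first so floating-point noise does not push the ceiling up by one.
    return math.ceil(round(raw, 6))


baseline = 0.2
improvement = 0.1          # assumption: absolute change, 20% -> 30%
signups_per_week = 500     # traffic figure quoted in the text

per_group = sample_size_per_group(baseline, improvement)
total = 2 * per_group                      # groups A and B together
weeks_needed = total / signups_per_week

print(f"per group: {per_group}, total: {total}, weeks: {weeks_needed:.2f}")
```

Note how sensitive the result is to the expected improvement: halving it to 0.05 quadruples the required sample size, because the improvement is squared in the denominator.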

Then, a little dev work

We need to set up the A/B test infrastructure. On the Mingle team, we use a very nice analytics tool called Mixpanel. We send events to Mixpanel, which then helps us organize and display the results. It’s easy to set up.
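As a rough illustration of the dev work involved, the sketch below shows one common way to split visitors into two stable groups and tag each event with the group. It is not the Mingle team's actual code and it does not call Mixpanel's API: the function names, the experiment name and the event dictionary layout are all hypothetical, and the resulting dictionary would be handed to whatever analytics client you use.

```python
import hashlib


def assign_group(user_id: str, experiment: str = "signup-form") -> str:
    """Deterministically map a user to group "A" or "B".

    Hashing the experiment name together with the user id means the same
    user always sees the same form, and different experiments split users
    independently of each other.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    return "A" if int(digest, 16) % 2 == 0 else "B"


def build_event(user_id: str, event: str) -> dict:
    """Build a hypothetical event payload tagged with the user's group."""
    return {
        "distinct_id": user_id,
        "event": event,
        "properties": {"ab_group": assign_group(user_id)},
    }


# Example: the payloads for a visitor who views the form and then signs up.
viewed = build_event("visitor-42", "Signup Form Viewed")
signed_up = build_event("visitor-42", "Signup Completed")
print(viewed["properties"]["ab_group"] == signed_up["properties"]["ab_group"])
```

Assigning by hash rather than by a random draw on every page load avoids showing one visitor both forms, which would blur the comparison.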

Now, we just need to wait

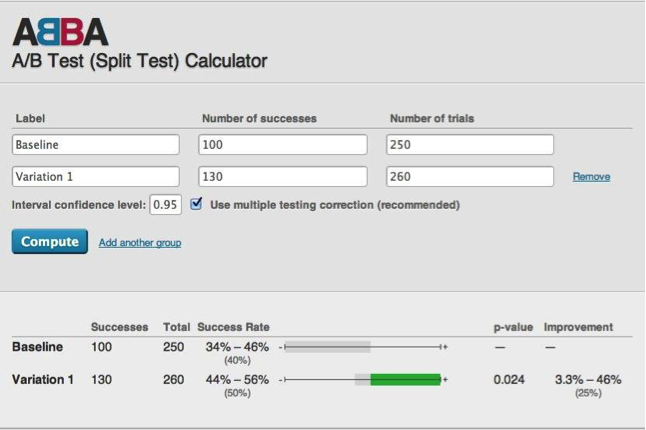

After 2 weeks, we get the following result:

Group A: 100 out of 250 succeed

Group B: 130 out of 260 succeed

Here, we can use a p-value calculator to interpret the result:

The p-value of this experiment is 0.024 (2.4%), which is lower (in a good way) than the commonly used threshold of 0.05 (5%). The calculator also estimates that the new form is a 3.3% to 46% improvement over the current one.
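If you want to check a calculator's output yourself, a two-proportion z-test is one standard way to compare the two groups. The sketch below is an assumption about the kind of test such a calculator runs, not a description of the specific tool used here, so small differences from its numbers are expected. It uses only the Python standard library, and the function names are hypothetical.

```python
import math


def two_proportion_z_test(success_a: int, n_a: int, success_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference B - A."""
    p_a = success_a / n_a
    p_b = success_b / n_b
    # Pooled rate: under the null hypothesis both groups share one rate.
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided tail probability of the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


def difference_interval(success_a: int, n_a: int, success_b: int, n_b: int):
    """Approximate 95% confidence interval for the absolute difference B - A."""
    p_a = success_a / n_a
    p_b = success_b / n_b
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    margin = 1.96 * se
    return (p_b - p_a) - margin, (p_b - p_a) + margin


# Results from the example: A = 100 of 250, B = 130 of 260.
z, p_value = two_proportion_z_test(100, 250, 130, 260)
low, high = difference_interval(100, 250, 130, 260)
print(f"z = {z:.2f}, p-value = {p_value:.3f}")
print(f"absolute difference between {low:.3f} and {high:.3f}")
```

For these numbers the p-value comes out a little above 0.02, below the usual 0.05 threshold. Dividing the interval bounds by group A's 40% conversion rate gives a crude relative-improvement range that is close to the one the calculator reports.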

With this number, we can confidently roll out the group B changes across the board, with good reason to expect higher conversions, and move on to other exciting work!

Share your A/B testing stories with us!

Now, hopefully this post has made you feel ready to start your journey of A/B testing. We would love to hear how you do that and what you learn. Please share your stories with us!

