How we used AI to modernize a custom-built app in six weeks vs. an estimate of six months

Even in the era of AI, many enterprises still rely on legacy systems that slow down the delivery of new products and services. Built on older technologies, these systems are typically poorly documented and difficult to maintain, and they carry significant security risks because few people still have the expertise to work on them. Although they remain at the core of critical business capabilities and data, they have become a major bottleneck for broader AI adoption.

While the need for legacy modernization is clear, organizations are hesitant to invest due to a history of costly, unsuccessful projects. This article details how we leveraged AI to overcome these common challenges and to modernize a custom-built app in six weeks vs. an estimate of six months.

Legacy modernization complexity

Legacy modernization is complex. Whether the “legacy system” under consideration is a mainframe system working as a core banking solution or an Angular v2.2 dashboard used to allocate funds across a portfolio of initiatives, there are certain common factors that make the task challenging.

Loss of historical context

Before we start the work of modernizing the legacy system, we first need to understand the business problem it solves. This implies a dependency on the individuals who built and operated the system. More often than not, even the team responsible for maintaining and running the system in production has only a limited understanding of it. Trying to replicate a system without understanding what it does is a big risk, and it is one reason why many modernization initiatives fail.

Limited automated tests

A good suite of automated functional tests helps in two ways, yet legacy systems often have few such tests. First, the tests can serve as documentation of the current codebase, assuming they have been written in a manner that makes them easy to read and understand. Second, the tests can be used to evaluate the correctness of a new system, particularly if the interfaces of the systems remain more or less the same. One can rely on these tests to act as guardrails, ensuring that the new modernized system works as per specification. Without them, the team loses both the documentation and the safety net.

Limited expertise in legacy tools, languages and frameworks

Even with good tests and context, the task can be challenging if the code is not readable. This can happen because of code quality issues or because the languages and frameworks are no longer in common use. Irrespective of the tests and documentation, code is the only true source of the system’s capabilities. Therefore, teams that have a better understanding of the code are more confident in their ability to change it.

Time investment

Time investment is a function of the team's understanding of the business capabilities and of what the underlying code does. While business leaders understand the need for legacy replacement, the estimates provided by engineering teams often become a reason not to invest. The engineering team is constrained by its limited understanding of the business-critical system it has to modernize, and the business is constrained by stretched timelines.

While the challenges are real, we believe that the usage of AI-assisted coding tools can significantly reduce the time and effort required for legacy modernization. In the next section, we talk about the challenge that was posed to us and how we came up with an answer.

Tackling legacy modernization with help from AI

Context

Our client relies on a critical dashboard, used daily by thousands of users, to track and allocate funds across various initiatives. This application, written in the outdated Angular v2.2 framework, had not been upgraded, leading to business complaints that even minor changes took months to implement. A proposal to modernize the application (either by upgrading Angular or completely rewriting it in React) stalled after the original team estimated a minimum of six months of work. Seeking an alternative, the client requested a second opinion to explore how AI tools could accelerate a solution.

Our approach

Our initial thought was to leverage Claude Code, which we were already using for code assessment in a different part of the business, to understand the Angular application. We operated under severe constraints: we could not contact the original team, nor did we have access to the application in any lower environment. Our only resources were the high-level application description and repository access provided by the CTO. Furthermore, our team was small: just two individuals, neither of whom had Angular experience and only one of whom could be considered a front-end developer. These internal limitations made the restrictive external constraints even more pronounced.

Claude to the rescue

We used Claude Code to understand the codebase, its business capabilities and its technical details in minutes. Claude also provided the step-by-step tasks to upgrade to the latest Angular version, advising against a direct jump in favor of small, incremental version updates.
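To illustrate what small, incremental version updates mean in practice, here is a minimal sketch that lists the major versions to step through one at a time. The `upgradePath` helper and the target version are our own illustrative assumptions, not output from Claude; the only Angular-specific fact encoded is that there was never a 3.x release.

```typescript
// Hypothetical helper: list the major versions to move through, one per step,
// instead of jumping straight from the current version to the target.
function upgradePath(from: number, to: number): number[] {
  const path: number[] = [];
  for (let major = from + 1; major <= to; major++) {
    if (major === 3) continue; // Angular went from 2.x straight to 4.x
    path.push(major);
  }
  return path;
}

// The target (6) is an arbitrary example, not the version chosen on the project.
const steps = upgradePath(2, 6);
console.log(steps.join(" -> ")); // 4 -> 5 -> 6
```

Each step would typically end with a green build and a passing test run before the next one starts, which keeps any breakage attributable to a single version bump.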

Since the organization was building new applications in React, a rewrite made sense, but we were blocked by not knowing what the original dashboard looked like. Nick then had a great idea: ask Claude to generate a static HTML view to see if it could produce one. Claude succeeded, creating the dashboard with mocked data. Although the look and feel differed slightly from the production dashboard (which we later confirmed), it provided crucial information for our work.

Next, we had to figure out how to rewrite the pages in React. To test the limits of Claude, we fed the static HTML pages back to it and asked it to generate the complete React codebase that would render an identical UI. Claude delivered again, generating the React codebase in no time, giving us a new, rewritten dashboard running on mocked data.

The initial codebase, while encouraging, was not optimal for long-term maintenance, and we needed to avoid hardcoding the mocked data. Nick, leveraging his expertise, took charge. Working in iterations with Claude as a pair, he improved the code quality and implemented a service worker to return the mocked data. We also found that Claude performed more efficiently when given prescriptive guidelines (guardrails) detailing requirements for clean code, automated tests and accessibility. Claude complied, for the most part.
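The service worker idea can be sketched roughly as follows. This is not the project's code: the route, the payload and the `resolveMock` name are hypothetical, chosen only to show how mocked data can live outside the React components. The lookup is kept as a pure function so it can be tested without a browser, and the service worker wiring is shown as a comment.

```typescript
// Hypothetical mock table: the route and payload are invented for illustration.
type MockResponse = { status: number; body: string };

const mocks: Record<string, unknown> = {
  "/api/initiatives": [{ id: 1, name: "Example initiative", allocated: 1000 }],
};

// Pure lookup: returns a mocked response for a known path, undefined otherwise.
function resolveMock(pathname: string): MockResponse | undefined {
  if (!Object.prototype.hasOwnProperty.call(mocks, pathname)) return undefined;
  return { status: 200, body: JSON.stringify(mocks[pathname]) };
}

// Inside the service worker file, the wiring would look roughly like this.
// Requests without a mock are left alone and go to the network as usual.
//
//   self.addEventListener("fetch", (event) => {
//     const mock = resolveMock(new URL(event.request.url).pathname);
//     if (mock) {
//       event.respondWith(new Response(mock.body, {
//         status: mock.status,
//         headers: { "Content-Type": "application/json" },
//       }));
//     }
//   });
```

Because the components only ever call ordinary HTTP endpoints, swapping the mocks for the real backend later does not require touching the UI code.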

After socializing our progress, we immediately secured business buy-in, added a developer and a business analyst, and began productionizing the new dashboard. We were also able to modify the high-level automated tests using Claude. Furthermore, we performed manual testing to soothe nerves and identified scenarios for further automation.

Ultimately, the entire effort was roughly 20% of the initial six-month estimate.

Outcomes

A demo of the new dashboard was delivered within two days.

Within the next four weeks, we plugged in some of the missing pieces (backend integration, authentication and authorization, and extensive testing).

A fully responsive, accessible dashboard was deployed to production in six weeks.

Our learnings

Understanding the code and the business context

We had all the challenges of a typical legacy modernization. This is where Claude outperformed our expectations. Within a couple of hours, we had a good enough understanding of the codebase and the capabilities that it provided to give us the confidence to go ahead.

Code generation, quality and guidelines

This is where we realized that while Claude is good, it performs significantly better when paired with a human expert. While Claude mostly complied with the guidelines, it occasionally ignored them. Nick had to continuously review the generated code and tweak the guidelines to help Claude better understand what we expected from it.

Guardrails are an important factor in enabling the developer to steer the AI in the right direction and to perform the actions that the developer intends. These guardrails come in two forms: implicit and explicit. Implicit guardrails are referenced by the AI each time a prompt is executed and typically include documentation and existing code. These provide comprehensive guidelines for the AI to follow and help ensure alignment to existing patterns and processes. However, it is unrealistic to expect implicit guardrails to provide coverage for every scenario and, additionally, the AI may not consistently interpret implicit guardrails as expected. This is where explicit guardrails yield tremendous value by providing quality gates that the AI must adhere to in order to proceed. These quality gates include automated tests and code quality configurations (linters).
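As a rough illustration of explicit guardrails acting as quality gates, the sketch below runs a list of checks and reports which ones failed. The `Gate` type, the `runGates` function and the hardcoded results are assumptions made for this example; on a real project each check would invoke the linter or the test runner and inspect its result.

```typescript
// A gate is any check that returns true when the change is acceptable.
type Gate = { name: string; check: () => boolean };

type GateReport = { passed: boolean; failures: string[] };

// Run every gate and collect the names of those that fail, so the developer
// (or the AI) sees the full list rather than only the first failure.
function runGates(gates: Gate[]): GateReport {
  const failures = gates.filter((gate) => !gate.check()).map((gate) => gate.name);
  return { passed: failures.length === 0, failures };
}

// Hypothetical gates with hardcoded outcomes, for illustration only.
const report = runGates([
  { name: "lint", check: () => true },
  { name: "unit tests", check: () => false },
]);

console.log(report.passed ? "proceed" : "blocked by: " + report.failures.join(", "));
// blocked by: unit tests
```

The point of the design is that the outcome is binary: generated code either clears every gate or it does not proceed, regardless of how the AI interpreted the implicit guidance.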

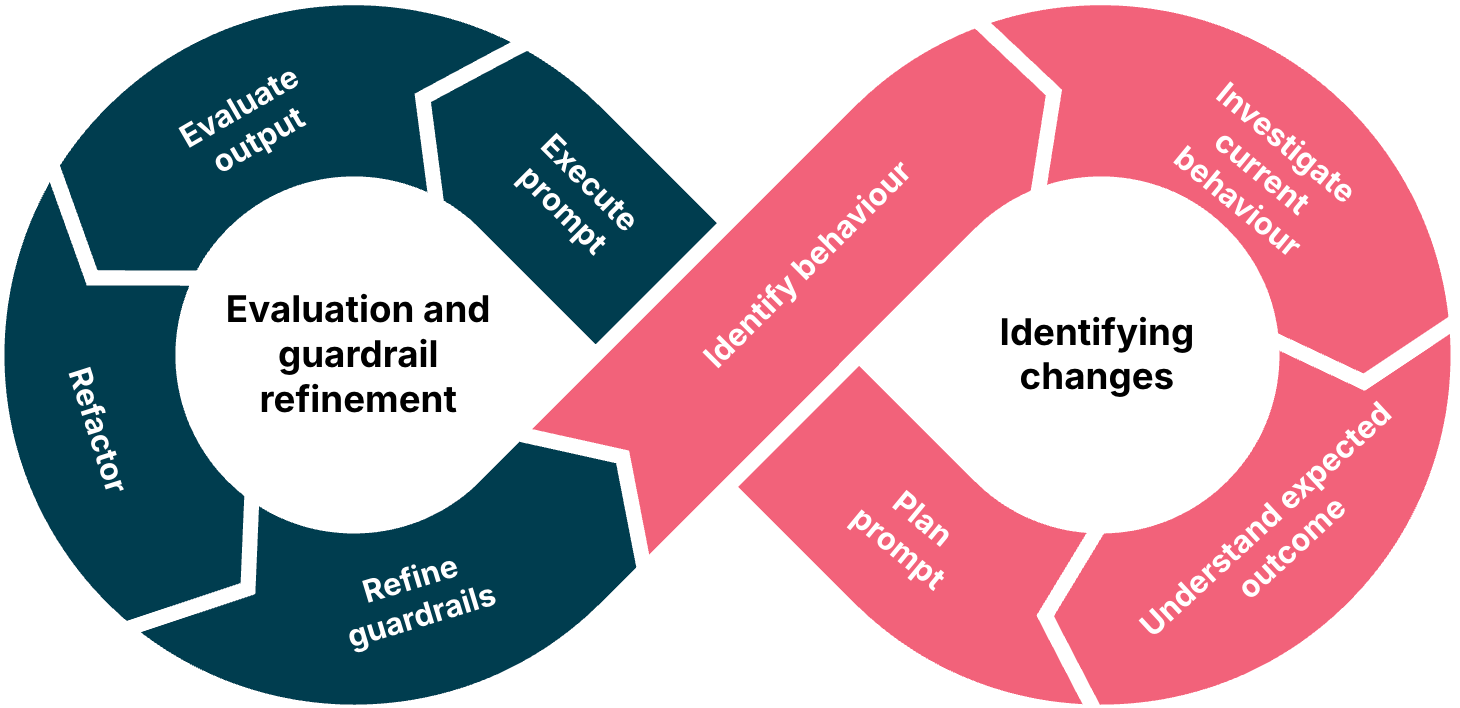

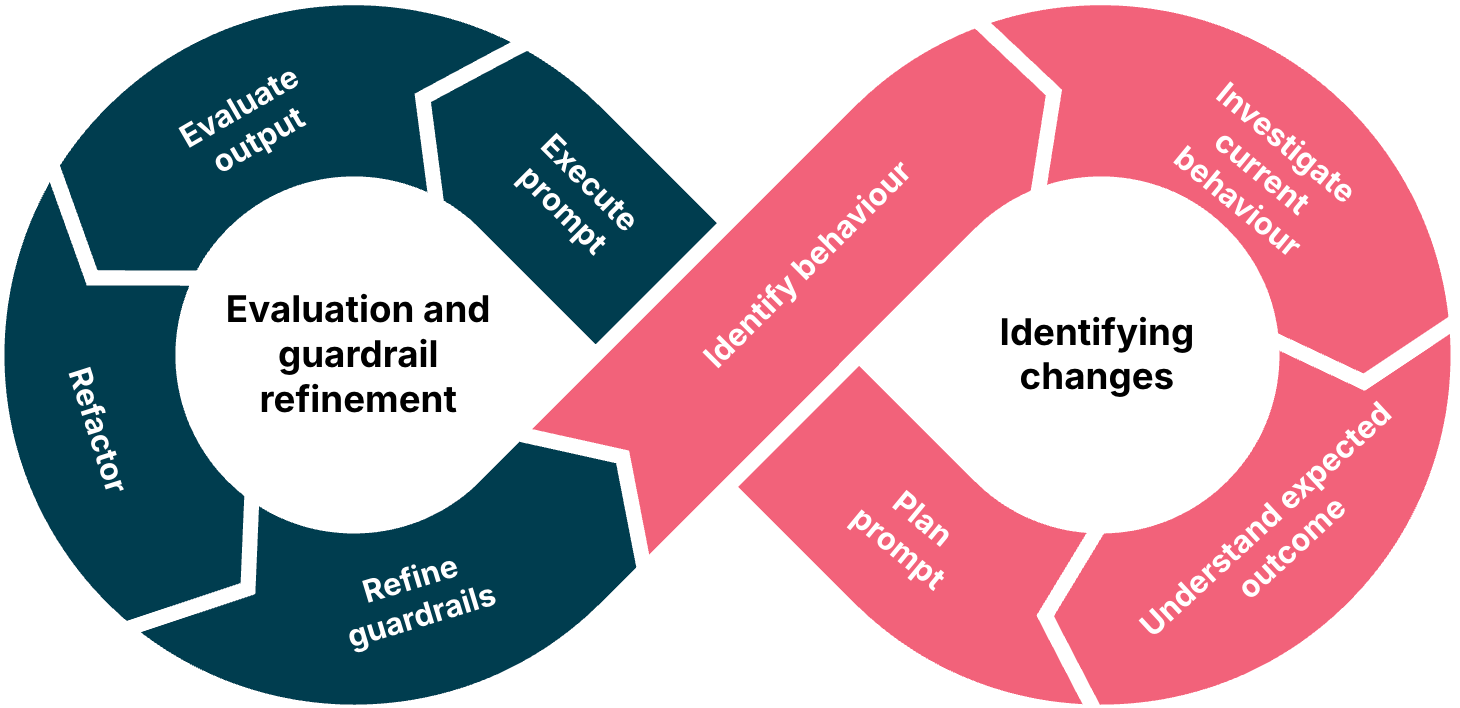

Creating a continuous development workflow is essential to effectively generating high-quality code with AI. The development loop empowers developers to clearly understand the behavior they wish to implement. This helps form the prompt that is fed to the AI. Once the AI begins actioning the prompt, the developer can leverage their expertise to evaluate the generated code against quality standards, architectural guidelines and ways of working. Typically, the outcome of this is a refactor and refinement of the guardrails before repeating the process. This feedback loop is crucial to ensuring a positive developer experience by reducing the number of manual changes needed after each prompt. Additionally, continuously refining implicit guardrails, such as documentation, is a great way of ensuring documentation is kept up to date!

This systematic integration of AI into the engineering loop is the foundation of AI-first software delivery, an approach that Thoughtworks is actively pioneering. In this model, AI agents and human experts collaborate throughout the entire development lifecycle to accelerate value delivery without compromising on quality or security. You can read more about our approach here.

Conclusion

We made remarkable progress in analyzing and rewriting a legacy system in a matter of weeks. We started with a team of two, and eventually had an effective team of three (two developers and one business analyst) who rewrote an entire application and pushed it into production in six weeks. This represents a significant reduction from the initial estimate of six months for a team of six.

We further understood that AI tools like Claude are powerful assets that organizations should consider as part of their toolkit, provided they retain skilled humans to guide them toward extraordinary results.

We were able to achieve a better user experience and a modern codebase in a matter of weeks. Our users loved the result, and this work has sparked strong interest within the organization to use AI more effectively to achieve both tactical and strategic goals.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.