It should come as no surprise that scientists and engineers have long been fascinated by the mind’s ability to recognize patterns rapidly and accurately, and much research has gone into recreating this ability in a machine as a demonstration of Artificial Intelligence (AI). One such ability is facial recognition, something humans do with relative ease but which has been exceedingly difficult to mimic using programming logic and advanced algorithms.

In his book How to Create a Mind, Ray Kurzweil argues that the human brain contains approximately 300 million ‘general pattern recognizers’ arranged in a hierarchy. Input signals feed through various layers, and concepts are learned in an increasingly abstract sense until a new pattern is learned or an existing pattern is recognized.

The closest parallel to this in machine learning is the artificial neural network (ANN). ANNs use mind-inspired mechanics, including simulated neurons and layering, to learn concepts from experience (exposure to data). ANNs have been applied to a number of pattern discovery problems, including facial recognition, but until recently they have had limited success in real-world facial recognition systems.

This is now changing with the use of Deep Learning, a set of machine learning algorithms that attempt to model high-level abstractions using an approach more closely aligned with how our minds recognize patterns. Using a large number of layers, so-called Deep Neural Networks (DNNs) can mimic human learning by adjusting the strength of connections between simulated neurons across many layers, just as the human mind is believed to strengthen its understanding of a concept. Each layer can model an increasingly abstract concept built from the more basic concepts learned at earlier layers.
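To make the idea of layers and adjustable connection strengths concrete, here is a minimal sketch in plain Python. It is not how any production DNN is built: the layer sizes, the sigmoid activation, the learning rate, and the helper names (`make_network`, `forward`, `train_step`) are all assumptions chosen for illustration, and the update rule is ordinary backpropagation on squared error.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def make_network(sizes, rng):
    """Build one (weights, biases) pair per layer, e.g. sizes=[2, 4, 1]."""
    return [
        ([[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)],
         [0.0] * n_out)
        for n_in, n_out in zip(sizes, sizes[1:])
    ]


def forward(layers, inputs):
    """Return the activations of every layer, starting with the raw inputs."""
    activations = [list(inputs)]
    for weights, biases in layers:
        prev = activations[-1]
        activations.append([
            sigmoid(sum(w * a for w, a in zip(row, prev)) + b)
            for row, b in zip(weights, biases)
        ])
    return activations


def train_step(layers, inputs, target, rate=0.5):
    """Adjust every connection once; return the squared error before the update."""
    activations = forward(layers, inputs)
    output = activations[-1]
    # Error signal at the output layer (derivative of squared error through sigmoid).
    deltas = [(o - t) * o * (1.0 - o) for o, t in zip(output, target)]
    for i in reversed(range(len(layers))):
        weights, biases = layers[i]
        prev = activations[i]  # the values that fed into layer i
        if i > 0:
            # Pass the error signal down one layer before the weights change.
            prev_deltas = [
                sum(weights[j][k] * deltas[j] for j in range(len(weights)))
                * prev[k] * (1.0 - prev[k])
                for k in range(len(prev))
            ]
        for j, row in enumerate(weights):
            for k in range(len(row)):
                row[k] -= rate * deltas[j] * prev[k]  # strengthen or weaken a connection
            biases[j] -= rate * deltas[j]
        if i > 0:
            deltas = prev_deltas
    return sum((o - t) ** 2 for o, t in zip(output, target)) / 2.0


if __name__ == "__main__":
    rng = random.Random(0)
    net = make_network([2, 4, 1], rng)  # 2 inputs, 4 hidden neurons, 1 output
    xor = [([0, 0], [0]), ([0, 1], [1]), ([1, 0], [1]), ([1, 1], [0])]
    for _ in range(5000):
        for x, t in xor:
            train_step(net, x, t)
    for x, _ in xor:
        print(x, round(forward(net, x)[-1][0], 3))
```

The demo at the bottom trains on the XOR function, a toy problem that a single layer cannot solve but a network with a hidden layer usually can. Real DNNs follow the same principle with far more layers, neurons, and data.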

Deep Learning is breathing life back into ANNs, and some researchers consider deep learning neural nets to be a small revolution in AI. What makes deep learning so attractive is that it gets better simply by having more data fed through its networks. More data to a DNN is like more experience to a child. Although these networks have been around for decades, only now do we have the volume and variety of data needed to expose them to enough information for a DNN’s “understanding” to rival that of humans.

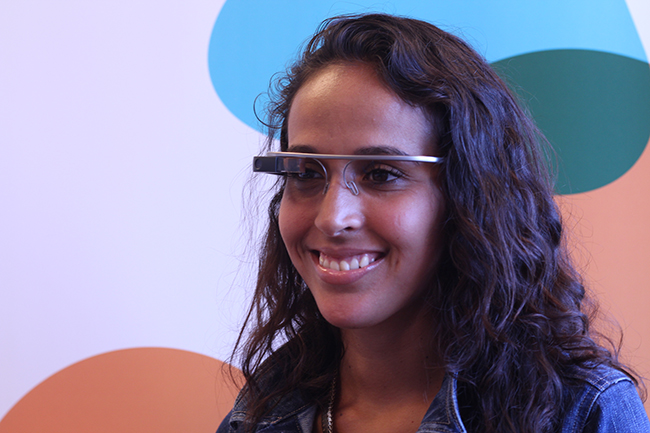

In science and industry, deep-learning computers are being used to search for potential drug candidates and to predict the functions of proteins. Companies like Google and Facebook are using their massive stores of images and text to push DNNs to never-before-seen levels of machine learning. A small start-up in Las Vegas called NameTag has developed a beta version of a facial recognition app for Google Glass. The app uses the FacialNetwork.com database to search through millions of photos, learn what features define a human face, and match them to facial features detected by the app. Exactly how this will be used in Google Glass is still unknown, and Google has yet to approve the use of facial recognition apps for Google Glass.

Facebook is moving forward with a facial recognition project called DeepFace. DeepFace can detect whether two faces in different photos belong to the same person, and it is reporting accuracies that rival a human’s ability to do the same. DeepFace uses DNNs to model highly complex data and multiple features. What makes DeepFace so exciting is its ability to recognize faces regardless of lighting or camera angle, two factors that have stumped most facial recognition algorithms to date. This could lead to new photo tagging applications and authentication technologies. DeepFace uses a 3-D modeling technique to rotate a single flat image in three dimensions, thereby allowing the algorithms to “see” different angles of the face. Using a DNN with over 100 million connections and its database of millions of photos, DeepFace teases out the features that can be used to recognize human faces, and uses this knowledge to discover very high-level similarities between two photos of the same person. Facebook has reached an accuracy of 97.35% on the Labeled Faces in the Wild (LFW) dataset, cutting the error rate of the previous state of the art by more than 27% and approaching human-level performance.
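Deciding whether two photos show the same person usually comes down to comparing the feature vectors a network produces for each face. The sketch below uses cosine similarity with a fixed threshold. This is a common stand-in and not necessarily the comparison DeepFace uses; the function names, the threshold of 0.8, and the three-number feature vectors are made up for illustration (real systems use vectors with thousands of values produced by the network itself).

```python
import math


def cosine_similarity(a, b):
    """Similarity of two feature vectors: 1.0 means they point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("feature vectors must be non-zero")
    return dot / (norm_a * norm_b)


def same_person(features_a, features_b, threshold=0.8):
    """Hypothetical decision rule: call it a match when similarity is high enough."""
    return cosine_similarity(features_a, features_b) >= threshold


# Made-up feature vectors standing in for the output of a trained network.
photo_1 = [0.9, 0.1, 0.4]
photo_2 = [0.8, 0.2, 0.5]   # similar to photo_1
photo_3 = [0.1, 0.9, 0.2]   # quite different from photo_1

print(same_person(photo_1, photo_2))  # True
print(same_person(photo_1, photo_3))  # False
```

In practice the threshold would be tuned on labeled pairs of photos, trading off false matches against missed matches.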

The advantage of DNNs over other learning approaches such as Support Vector Machines and Linear Discriminant Analysis (LDA) is their ability to scale to extremely large datasets, something required for problems in speech and facial recognition. This means large, inexpensive clusters of computers are required to process all the data and make learning with DNNs feasible. In the now famous Google Cat experiment, Google scientists used 16,000 processors and the internet as a data source to recognize the appearance of a cat. What makes the experiment so game-changing is that the algorithms were never told what a cat is or looks like. The DNN came up with the concept of a cat all by itself.

Many researchers and start-ups will be jumping onto the DNN bandwagon to see how far we can push machine learning. Any application that requires detecting the most important features among myriad variables is a potential candidate for DNNs. Neural networks have two big advantages: they deal well with many ill-defined features, and they scale efficiently, meaning extremely large datasets can be used. This is the latest approach to machine learning and the true point of Big Data: throwing more data at the problem bypasses the problems associated with traditional statistical analyses on small datasets. Although the somewhat black-box nature of DNNs takes us further from understanding true causality, they do offer the exciting opportunity to mimic human intelligence and automate more sophisticated tasks. More abstract areas like psychology may soon benefit from DNNs, both in targeted marketing and in helping us understand how the human mind works.