By David Tan and Mitchell Lisle

Approaching the development of data products as you would approach building software is a good starting point. But data products are typically more complicated than software applications because they are both software-intensive and data-intensive. Not only do teams have to navigate the many components and tools that are part and parcel of software development, they also need to grapple with the complexity of data. Given this additional dimension, it is all too easy for teams to get mired in cumbersome development processes and production deployments, leading to anxiety and release delays.

At Thoughtworks, we find that intentionally applying “sensible default” engineering practices allows us to deliver data products sustainably and at speed. In this article, we’ll dive into how this can be done.

Applying sensible defaults in data engineering

Many sensible default practices have their roots in continuous delivery (CD), a set of software development practices that enables teams to release changes to production safely, quickly and sustainably. This set of practices reduces the risk of error in releases, reduces time to market and costs, and ultimately improves product quality. Continuous delivery practices (such as automated build and deployment pipelines, infrastructure as code, continuous integration and trunk-based development) also correlate positively with an organization’s software delivery and business performance.

From development and deployment to operation, sensible default practices help us build the thing right. These practices include:

- Trunk-based development

- Test-driven development

- Pair programming

- Build security in

- Fast automated build

- Automated deployment pipeline

- Quality and debt effectively managed

- Build for production

As we will elaborate later in this chapter, these practices are essential for managing the complexity of modern data stacks and accelerating value delivery, because they give teams three characteristics that help them deliver quality at speed:

- Fast feedback: Find out whether a change has been successful in moments, not days. That applies whether you are checking that unit tests have passed, that you haven’t broken production, or that a customer is happy with what you’ve built.

- Simplicity: Build for what you need now, not what you think might be coming. This lets you limit complexity while still making choices that allow your software to change rapidly and meet upcoming requirements.

- Repeatability: Gain the confidence and predictability that come from removing manual tasks that might introduce inconsistencies, so you can spend time on what matters rather than on troubleshooting.

Engineering practices for modern data engineering

While there is a rich body of work detailing how you can apply continuous delivery when developing software solutions, much less is documented about how you can use these practices in modern data engineering. Here are three ways we’ve adapted these practices to build and deliver effective data products, fast.

1. Test automation and test data management

Test automation is the key to fast feedback, as it allows teams to evolve their solution without the bottlenecks that result from manual testing and production defects. In addition to the well-known practices of test-driven development (guiding software development by writing tests), it’s also important to consider data tests.

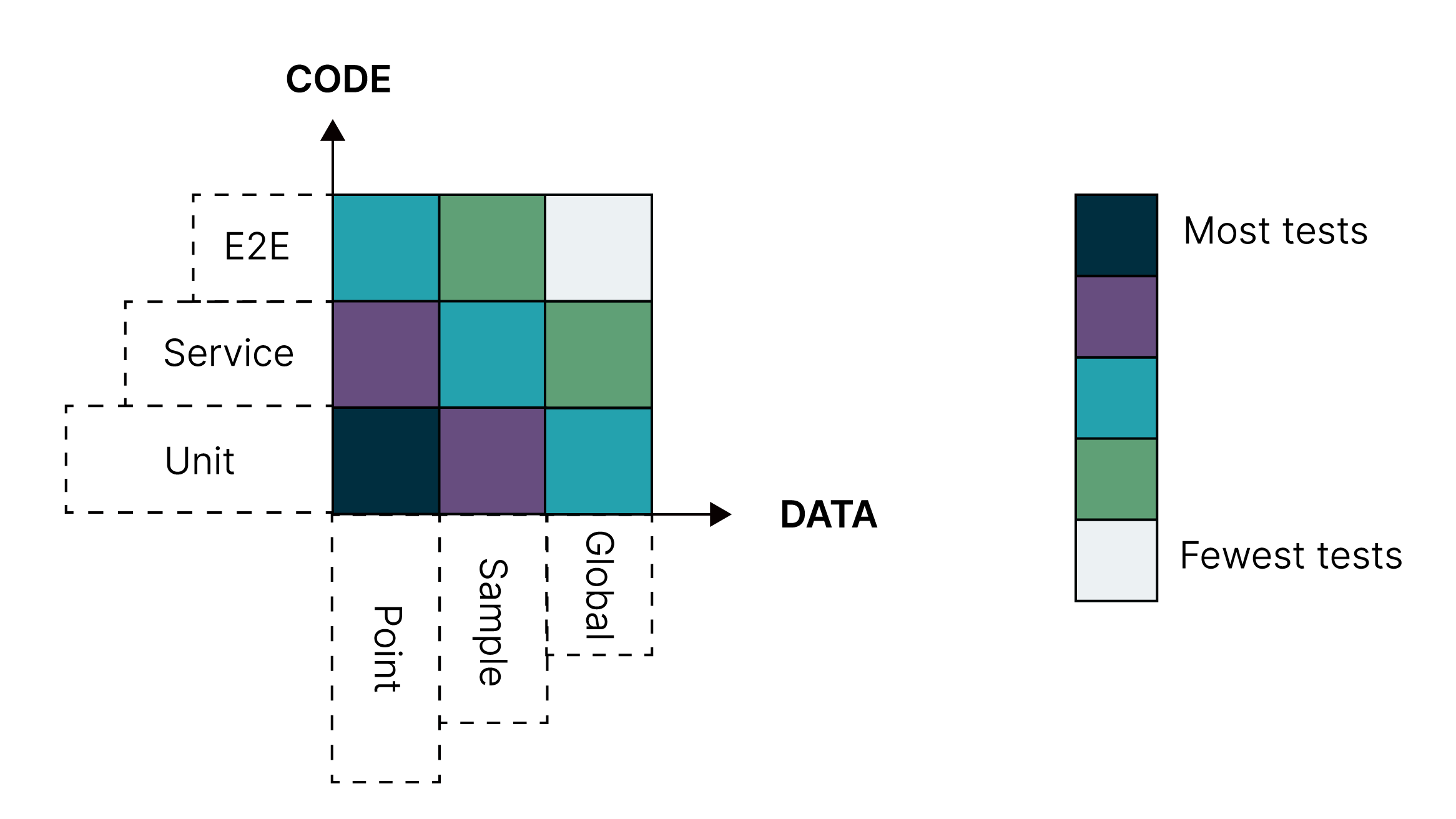

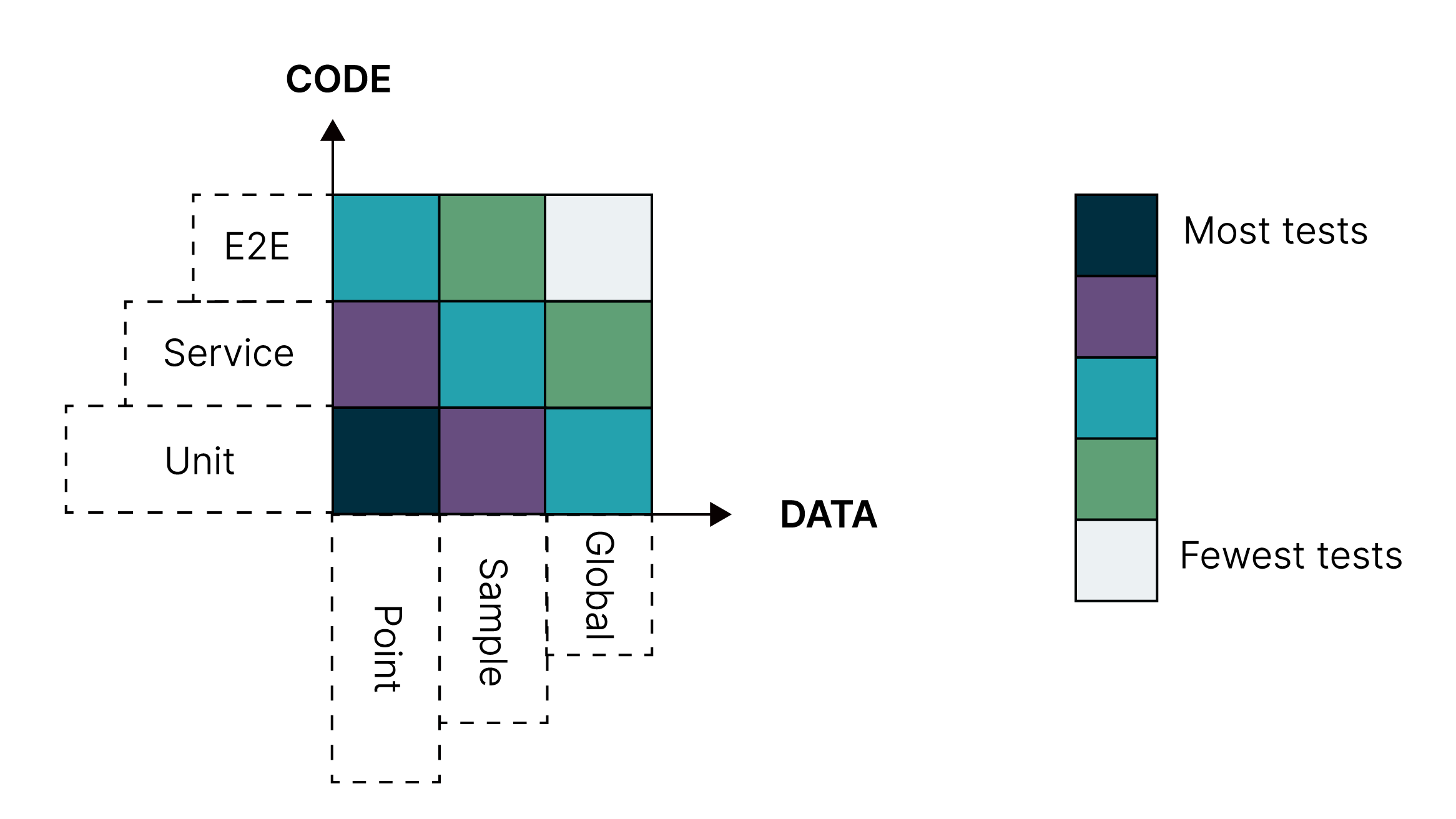

Similar to the practical test pyramid for software delivery, the practical data test grid (Figure 2) helps guide how and where you invest your effort to get a clear, timely picture of data quality, code quality, or both. The grid considers the following data-testing layers:

- Point data tests capture a single scenario which can be reasoned about logically, for instance a function that counts the number of words in a blog post. These tests should be cheap to implement and there should be many of them, to set expectations in a range of specific circumstances.

- Sample data tests provide valuable feedback about the data as a whole without processing large volumes. They allow you to understand fuzzier expectations and variation in data, especially over time. While they bring additional complexity and require some threshold tuning, they will uncover issues point tests don’t capture. Consider using synthetic samples for these tests.

- Global data tests uncover unanticipated scenarios by testing against all available data. They’re also the least targeted, most subject to outside changes, and most computationally expensive.

Figure 2: The practical data test grid

You can apply these tests to data alone or combine them with code tests to verify various stages of data transformation, in which case you would consider the two dimensions as a practical data test grid. This is not a prescription: you needn’t fill every cell, and the boundaries aren’t always precise. But the grid helps direct our testing and monitoring effort towards fast and economical feedback on quality in data-intensive systems.
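As a rough illustration of the three data-testing layers, here is a minimal sketch built around the word-count example above. The function names, the synthetic posts and the 3-to-2000-word band are assumptions made for illustration; they do not come from any project described in this article.

```python
import random
import statistics


def count_words(text: str) -> int:
    """Count whitespace-separated words in a blog post."""
    return len(text.split())


def point_test() -> None:
    # Point data test: hand-picked scenarios with exact expected answers.
    assert count_words("data products need tests") == 4
    assert count_words("") == 0


def sample_test(posts: list, sample_size: int = 100, seed: int = 7) -> None:
    # Sample data test: a fuzzier expectation checked on a random sample.
    rng = random.Random(seed)
    sample = rng.sample(posts, min(sample_size, len(posts)))
    median_length = statistics.median(count_words(p) for p in sample)
    # The 3..2000 band is an illustrative threshold that would need tuning.
    assert 3 <= median_length <= 2000, f"unexpected median length {median_length}"


def global_test(posts: list) -> None:
    # Global data test: an invariant checked against every available record.
    empty = [i for i, p in enumerate(posts) if count_words(p) == 0]
    assert not empty, f"{len(empty)} posts have no words"


def make_synthetic_posts(n: int = 500) -> list:
    # Synthetic stand-in for real data: post i contains i words.
    return [" ".join(["word"] * i) for i in range(1, n + 1)]
```

Point tests like `point_test` are cheap enough to run on every commit. The sample and global tests take the data as an argument, so the same checks could run against a synthetic sample locally and against a larger dataset on a schedule.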

A word on test data management

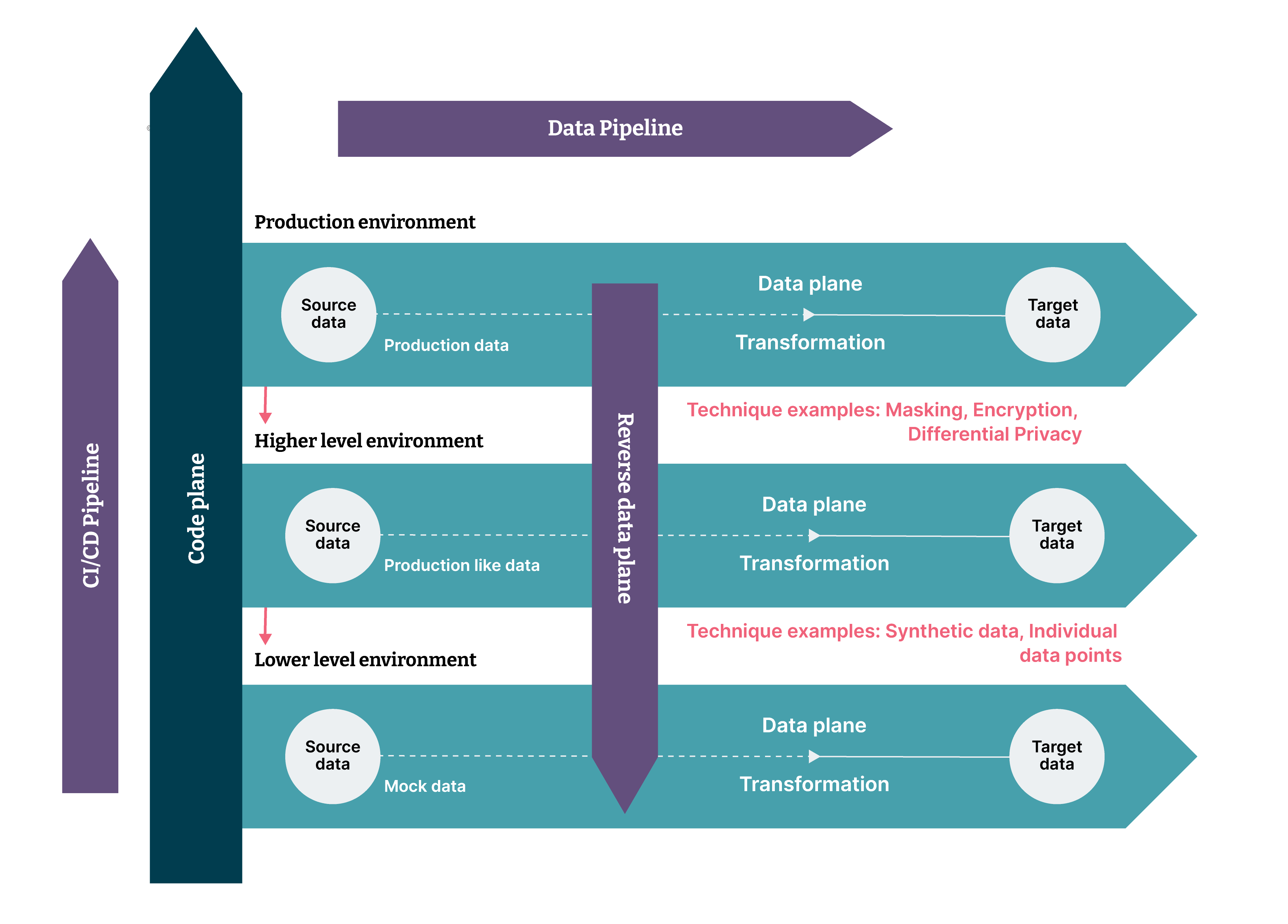

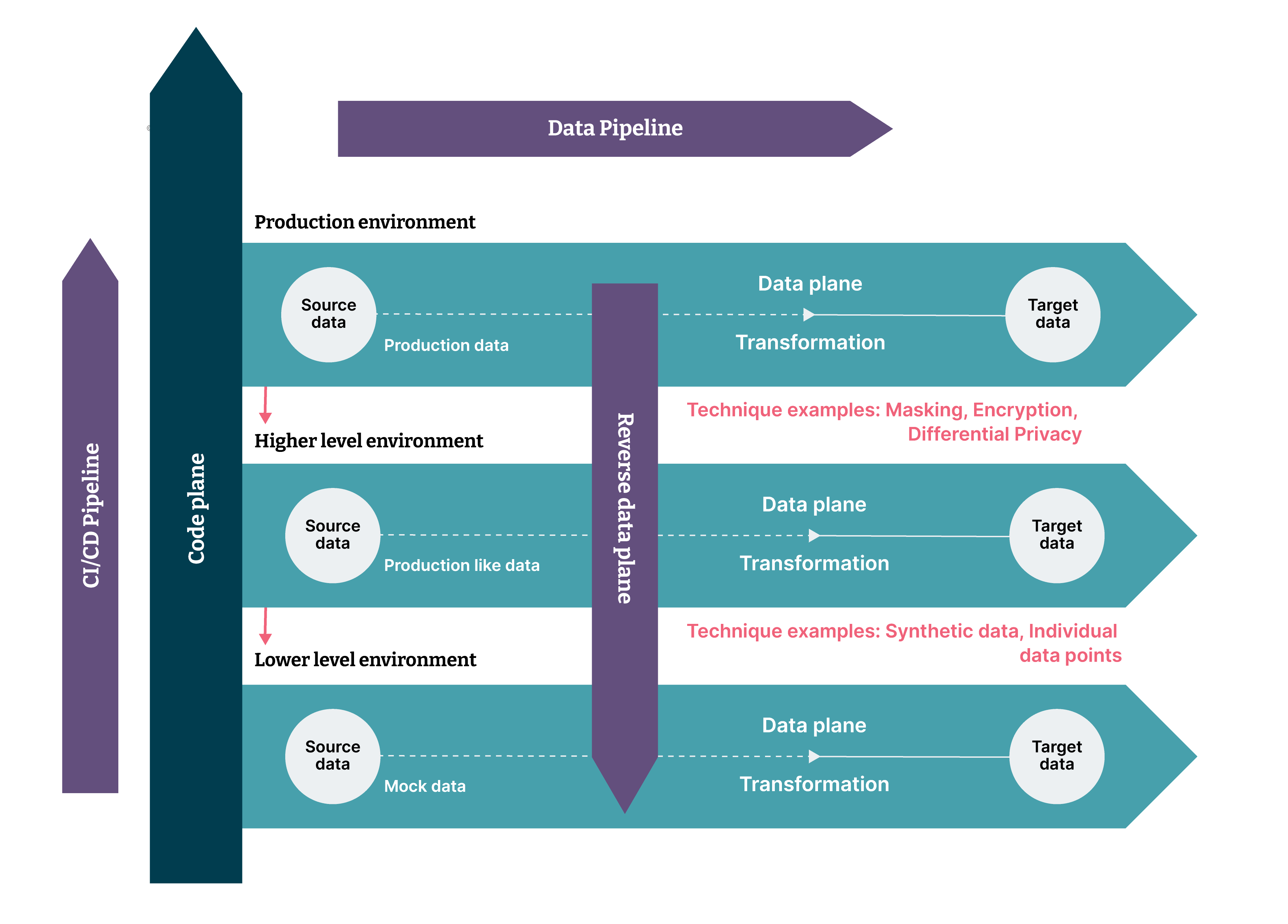

You will need production-like data to use the practical data test grid. Start by thinking about three planes of flow (Figure 3):

- In the code plane, code flows along the Y-axis, from bottom to top, between environments (e.g. development, test, production). In traditional software engineering terms it's a CI/CD pipeline – the flow that software engineers are typically familiar with.

- In the data plane, data flows along the X-axis from left to right in each environment where data is transformed from one form to another. This is a data pipeline and is something data experts understand very well.

- In the reverse data plane, data flows along the Y-axis in the opposite direction to the code plane. You can bring samples of production data into test environments using privacy-preserving or obfuscation techniques such as masking or differential privacy, or you can create purely synthetic data, using samples of production data.

Figure 3: Visualization of a reverse data plane in a data pipeline
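The reverse data plane can be sketched as a small job that samples production rows and masks sensitive fields before they reach a test environment. This is a minimal sketch of masking only (it is not differential privacy), and the row layout, field names and secret key are hypothetical.

```python
import hashlib
import hmac
import random


def mask_value(value: str, secret: bytes) -> str:
    """Replace a value with a keyed hash: stable for joins, not reversible without the key."""
    return hmac.new(secret, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def sample_and_mask(rows, fraction, sensitive_fields, secret, seed=0):
    """Keep roughly `fraction` of the rows and mask the sensitive fields in each."""
    rng = random.Random(seed)
    sampled = [row for row in rows if rng.random() < fraction]
    return [
        {
            key: mask_value(str(value), secret) if key in sensitive_fields else value
            for key, value in row.items()
        }
        for row in sampled
    ]


# Hypothetical production rows; in practice these would be read from the source store.
production_rows = [
    {"customer_email": "ana@example.com", "plan": "pro", "monthly_reports": 12},
    {"customer_email": "raj@example.com", "plan": "free", "monthly_reports": 3},
]

test_rows = sample_and_mask(
    production_rows,
    fraction=1.0,
    sensitive_fields={"customer_email"},
    secret=b"rotate-me",  # placeholder key; a real key would come from a secret store
)
```

Because the hash is keyed and deterministic, the same email always maps to the same masked token, so joins across masked tables keep working while the raw value stays out of the test environment.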

2. Case study: Accelerating delivery with sensible default engineering practices

Company Y is a large-scale software company whose customers use its software for reporting purposes. Company Y wanted to build a new feature that would let its users see historical as well as forecasted data, helping them make better-informed decisions.

However, the historical data needed to train machine learning models to create forecasts was locked up in operational databases, and the existing data store could not scale to serve this new use case.

We applied sensible default engineering practices to build a scalable streaming data architecture for Company Y. The pipelines ingested data from the operational data store to an analytical data store to service the new product feature.

Sensible defaults in action

We adopted pair programming, where two developers worked on the new feature together, giving each other real-time feedback and getting fast feedback from test automation. This involved unit, integration and end-to-end tests that ran locally.

Pairs accessed test data samples that represented the data we expected in production, and used git hooks that linted the code, checked for secrets and ran the test suites. If something was going to fail on continuous integration, the hooks shifted that feedback left and let the developers know before they pushed the code and caused a red build.
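A git hook of this kind can be as simple as a script that runs each check in order and stops at the first failure. The sketch below is an assumption about how such a hook might look, not the hook used on this project; the `make` targets are hypothetical placeholders for whatever linter, secret scanner and test runner a team uses.

```python
import subprocess
import sys

# Hypothetical commands: replace with your own lint, secret-scan and test commands.
CHECKS = [
    ("lint", ["make", "lint"]),
    ("secrets", ["make", "check-secrets"]),
    ("tests", ["make", "test"]),
]


def run_checks(checks) -> int:
    """Run each check in order; return the first non-zero exit code, or 0 if all pass."""
    for name, command in checks:
        print(f"[pre-push] {name}: {' '.join(command)}")
        result = subprocess.run(command)
        if result.returncode != 0:
            print(f"[pre-push] {name} failed; push aborted", file=sys.stderr)
            return result.returncode
    return 0


def main() -> int:
    # A hook file would end with: sys.exit(main())
    return run_checks(CHECKS)
```

Git aborts the push when a `pre-push` hook exits with a non-zero status, so returning the failing check's exit code is enough to keep a change that would break the build from leaving the developer's machine.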

We made sure code was always in a deployable state by adopting continuous integration (CI), a practice that requires developers to integrate code into a shared repository several times a day. This sped up deployment, while a fast automated build pipeline running the automated test suites on the CI server provided fast feedback on quality.

Our team built the artifacts once and deployed them to each environment through automated deployment. We applied infrastructure as code (i.e. application infrastructure, deployment configuration and observability were specified in code) and provisioned everything automatically. If the build broke in the pre-production environment, we either fixed it quickly (in 10 minutes or less) or rolled the change back.

Post-deployment tests made sure everything was working as expected, giving us the confidence to let the CI/CD pipeline automatically deploy the changes to production (i.e. continuous deployment).

Observability and proactive notifications allowed everyone on the team to know the health of our system at any time. Logging with correlation identifiers helped us trace an event through distributed systems and observe the data pipelines.

Where necessary, we refactored (rewriting small pieces of code in existing features) and managed tech debt as part of stories and iterations, not as an afterthought buried in the backlog.
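The correlation identifiers mentioned above can be illustrated with Python's standard `logging` module. This is a minimal sketch under assumed names: the event shape, the logger name and the log format are hypothetical, and a real pipeline would also pass the identifier along to downstream services.

```python
import contextvars
import io
import logging
import uuid

# Holds the identifier of the event currently being processed.
correlation_id = contextvars.ContextVar("correlation_id", default="-")


class CorrelationIdFilter(logging.Filter):
    """Attach the current correlation identifier to every log record."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.correlation_id = correlation_id.get()
        return True


def build_logger(stream) -> logging.Logger:
    logger = logging.getLogger("pipeline")
    logger.setLevel(logging.INFO)
    logger.handlers.clear()
    logger.propagate = False
    handler = logging.StreamHandler(stream)
    handler.setFormatter(
        logging.Formatter("%(levelname)s [%(correlation_id)s] %(message)s")
    )
    handler.addFilter(CorrelationIdFilter())
    logger.addHandler(handler)
    return logger


def handle_event(logger: logging.Logger, event: dict) -> str:
    """Process one event, reusing its correlation id or minting a new one."""
    cid = event.get("correlation_id") or str(uuid.uuid4())
    token = correlation_id.set(cid)
    try:
        logger.info("ingested event %s", event["id"])
        logger.info("wrote event %s to analytical store", event["id"])
    finally:
        correlation_id.reset(token)
    return cid
```

Every log line emitted while an event is being handled carries the same identifier, so searching the logs for that one value reconstructs the event's path through each stage.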

Performing at an elite level

In just 12 weeks, we delivered a set of end-to-end streaming pipelines that continually updated the analytical store and powered the forecast feature, which provided users with real-time insight. These practices helped the client team accelerate delivery and become an “elite” performer, as measured by the four key metrics:

- Deployment frequency: on-demand (multiple production deploys a day)

- Lead time for changes (time from code commit to code successfully running in production): 20 minutes

- Time to restore service: less than 1 hour

- Change failure rate: < 15%
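To make the four key metrics concrete, here is a minimal sketch of how they could be computed from a log of deployments. The `Deployment` record and its fields are hypothetical, and using the median for lead time and restore time is one reasonable choice among several, not the method behind the figures above.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    committed_at: datetime
    deployed_at: datetime
    failed: bool = False
    restored_at: Optional[datetime] = None


def minutes(delta: timedelta) -> float:
    return delta.total_seconds() / 60


def four_key_metrics(deployments, period_days: int) -> dict:
    """Summarise deployment frequency, lead time, failure rate and time to restore."""
    if not deployments or period_days <= 0:
        raise ValueError("need at least one deployment and a positive period")
    lead_times = [minutes(d.deployed_at - d.committed_at) for d in deployments]
    failures = [d for d in deployments if d.failed]
    restore_times = [
        minutes(d.restored_at - d.deployed_at) for d in failures if d.restored_at
    ]
    return {
        "deploys_per_day": len(deployments) / period_days,
        "median_lead_time_minutes": median(lead_times),
        "change_failure_rate": len(failures) / len(deployments),
        "median_restore_minutes": median(restore_times) if restore_times else None,
    }
```

Feeding this from the deployment pipeline's own records, rather than from manual reporting, keeps the numbers cheap to refresh after every release.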

For more complex changes, our team uses feature toggles, data toggles or blue-green deployment.
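A feature toggle, at its simplest, is a runtime check that decides whether a code path is active, which lets unfinished work be merged and deployed while staying switched off. The sketch below assumes an environment-variable convention (`FEATURE_<NAME>`) and a naive mean forecast purely for illustration; neither comes from the project described here.

```python
import os


def is_enabled(flag: str, env=None) -> bool:
    """Return True when the toggle FEATURE_<FLAG> is set to a truthy value."""
    env = os.environ if env is None else env
    return env.get(f"FEATURE_{flag.upper()}", "off").lower() in {"1", "true", "on"}


def naive_forecast(history):
    # Placeholder model: predict the mean of the historical values.
    return sum(history) / len(history)


def build_report(history, env=None) -> dict:
    """Always return historical data; add a forecast only when the toggle is on."""
    report = {"historical": list(history)}
    if history and is_enabled("forecast", env):
        report["forecast"] = naive_forecast(history)
    return report
```

For example, `build_report([1, 2, 3], env={"FEATURE_FORECAST": "on"})` includes a `forecast` entry, while the same call with an empty `env` returns only the historical values.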

A note on trunk-based development

Throughout delivery, we apply trunk-based development (TBD), which is a version control practice where all team members merge their changes to a main or master branch. When everyone works on the same branch, TBD increases visibility and collaboration, reduces duplicate effort and gives us much faster feedback than the alternative practice of pull request reviews.

If we are averse to committing code to the main branch, it’s usually a sign that we’re missing some of the essential practices mentioned above. For instance, if you’re afraid your changes might cause production issues, make your tests more comprehensive. And if you need another pair of eyes to review pull requests, consider pair programming to speed up feedback. It’s important to note that TBD is only possible if we have the safety net and quality gates provided by the preceding practices.

In our next chapter, we’ll look at how to build a team to help you build the right thing.