Using AWS with Security as a First Class Citizen

Setting up and structuring AWS accounts in a secure way is no easy feat. Amazon provides facilities such as multi-factor authentication (MFA), password policies, and cross-account credentials and role sharing, but setting all of those up correctly is still largely a task of combing through blog posts and best practice analyses. This document, with the help of the hardened concepts introduced at our client AutoScout24, illustrates how secure account setups on AWS are accomplished and what it takes to get from single-purpose, individual IAM accounts to a cross-account role sharing concept. It will also tell you about steps to take to ensure compliance with company guidelines.

AWS Account Setup

AWS accounts are pretty easy to set up: sign up with your email, assign a password to your root account user, and you’re done. However, this root account user is an all-capable god within your AWS setup and should never be used except for certain provisioning or billing tasks.

First steps

These are the core steps you must take in order to ensure the most basic level of security within your AWS account. Pretty much everyone should be running through these steps one by one, as they are a part of Amazon’s official documentation on how to secure IAM:

- The very first thing you should do is create additional IAM users and groups. There should be a dedicated account for every single user supposed to work with your environment. Do not use the root account user for anything but creating one additional admin user, and switch to that admin user immediately after. Afterwards, lock away the credentials to the root account somewhere safe and vow to never use them again.

- Most of the time you want to create groups for users and assign access policies to groups instead of users directly. That way you can easily delegate access to certain people without tying policies to their specific account.

Note: Even if there’s just a single set of permissions required for a single user, you should probably think about creating a group for them. In the end, you might want to assign the same permissions to somebody else (4-eyes principle) in the future.

- Enforce a strong password policy and require the use of password managers by the team.

- Monitor the lifetime of your AWS keys and rotate them frequently (<= 30 days). AWS keys in particular are an open door: they are easily recognizable and, once acquired, can be used without any knowledge of the system at hand. You can largely automate the process of rotating AWS keys, which makes it a fairly effortless requirement.

- Mandate the use of MFA for your user accounts. A second token for authentication is an absolute must if they are working with the web console directly.

Note: MFA will also be required for using access keys/tokens, but more on that later.

- Use the AWS CLI’s native profile integration and do not commit or enter your credentials into any application-specific configuration file. All of the AWS SDKs are capable of fetching credentials from multiple sources, including environment variables, profiles, instance credentials or role credentials. One of those should do, and no application should be trusted with your AWS access and secret keys.

- Use federated users with an existing Identity Provider. If your company already uses a central user directory, like Active Directory, LDAP or a managed identity provider service like OneLogin or Okta, integrate your AWS environment with this provider instead of using AWS IAM users. This enables you to facilitate lifecycle management of users in a single space.

AWS supports SAML and OpenID Connect. Should you require a more granular control mechanism you could think about implementing a federation proxy like AFP.
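The key rotation rule from the list above lends itself to a small automated check. The following is a minimal sketch, not a complete rotation tool: it assumes you have already fetched key metadata as a list of dictionaries shaped like the entries IAM returns when listing access keys (`AccessKeyId`, `Status`, `CreateDate`), and the function name `stale_access_keys` and the sample key IDs are our own illustration.

```python
from datetime import datetime, timedelta, timezone

# Rotation window suggested in the text (<= 30 days).
MAX_KEY_AGE = timedelta(days=30)


def stale_access_keys(keys, now=None, max_age=MAX_KEY_AGE):
    """Return the IDs of active access keys that are older than max_age.

    `keys` is assumed to be a list of dicts with the fields AccessKeyId,
    Status and CreateDate (a timezone-aware datetime).
    """
    now = now or datetime.now(timezone.utc)
    return [
        key["AccessKeyId"]
        for key in keys
        if key["Status"] == "Active" and now - key["CreateDate"] > max_age
    ]


if __name__ == "__main__":
    # Hypothetical sample data; the key IDs are placeholders.
    sample = [
        {
            "AccessKeyId": "AKIAEXAMPLEOLD",
            "Status": "Active",
            "CreateDate": datetime(2024, 1, 1, tzinfo=timezone.utc),
        },
        {
            "AccessKeyId": "AKIAEXAMPLENEW",
            "Status": "Active",
            "CreateDate": datetime(2024, 2, 20, tzinfo=timezone.utc),
        },
    ]
    reference = datetime(2024, 3, 1, tzinfo=timezone.utc)
    print(stale_access_keys(sample, now=reference))
```

Inactive keys are skipped on purpose, since they can no longer be used; you may still want to delete them in a separate clean-up step.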

Cross-account credentials

Sooner or later you will want to create multiple accounts to manage your resources on AWS, to improve overall security through separation of concerns. Having access to a user’s account credentials or tokens must not entail compromising all of your account’s resources or applications.

- Lock down the account holding your user accounts and groups information. None of the users should be able to run or use any resources other than IAM, and IAM only to manage their own keys and password. No other AWS service should be accessible in this account. This ensures we are not giving away the keys to the kingdom should an account be compromised.

- Create a second, distinct AWS account (Account 2) that has all the necessary roles and policies to access the resources you want to protect.

Instead of logging into Account 2 directly, log into Account 1 (the account holding your user and group information) and temporarily assume roles defined in Account 2.

This allows for working with least privilege, is seamless and merely requires you to gather credentials from Amazon’s Security Token Service before executing operations against their APIs (since tokens work in the scope of defined accounts). You can use a tool such as awstools for this. The roles you define should be relevant to the teams and their work, i.e. they should only include access to resources the team requires to do their job. If you want to compromise, you can also allow team members to assume a single generic role where they have read access to most services, but write access to only a select few. This will still ensure a reasonable amount of security while retaining a little more flexibility for day-to-day work.

- Enforce MFA authentication when assuming cross-account roles. This ensures that none of the access keys used to interact with resources on your accounts can be used by someone who has merely obtained an access key. An attacker always needs a valid MFA token as well to obtain temporary credentials. Should they get hold of a single token, the impact is limited to the validity period of the temporary security credentials issued for the assumed role.

- Deny write access to IAM resources and implement a 4-eyes or 6-eyes principle when modifying role, user and group policies in every account. Ideally, you should be able to manage any and all IAM related policies in a separate, version-controlled repository (e.g. Git) with the help of tools such as iamy, Chef or Terraform. Changes should then go through a review process, and only a very small, trusted subset of people should be able to assume a special, closely monitored role whose only purpose is to access IAM and update its policies. If you need to test your IAM policies, create unit tests for your frameworks/tools, use mocking facilities and the policy simulator, or create a “playground” account that is wiped and recreated each night.

- Monitor differences between the reviewed security configuration in source control and the currently active configuration. In order to ensure the review process is not circumvented by an attacker, alert on security configuration that is not reflected in the version control system.
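The last item above, comparing the reviewed configuration with what is actually active, could be sketched as follows. This is an illustration under assumptions: both sides are presumed to be available as dictionaries mapping a policy name to its parsed JSON document (how you export them from source control and from IAM is up to your tooling), and `find_drift` is a hypothetical helper name.

```python
import json


def normalize(policy):
    """Serialize a policy document canonically so that key order is ignored."""
    return json.dumps(policy, sort_keys=True, separators=(",", ":"))


def find_drift(reviewed, active):
    """Compare reviewed policies (version control) with the active ones.

    Both arguments map a policy name to its parsed JSON document.
    Returns the names of policies that exist only in the account
    ("unreviewed"), only in version control ("missing"), or in both
    places with different content ("modified").
    """
    drift = {"unreviewed": [], "missing": [], "modified": []}
    for name in sorted(set(reviewed) | set(active)):
        if name not in reviewed:
            drift["unreviewed"].append(name)
        elif name not in active:
            drift["missing"].append(name)
        elif normalize(reviewed[name]) != normalize(active[name]):
            drift["modified"].append(name)
    return drift
```

Anything reported as "unreviewed" or "modified" is a candidate for an alert. Note that this simple comparison treats the order of list entries (for example in an `Action` list) as significant, so a reordered but otherwise identical policy would be flagged as well.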

Logical account sharding

Once you have your two accounts talking to each other and Account 1’s users/groups are able to use Account 2 as their main driver for development, try to think of further logical sharding within your account structure.

Accounts like “Production”, “Development”, “Staging” or “Playground” are a good idea. Compromising one of them doesn’t mean you have access to the others. Communication between accounts should be avoided if possible, but can be enabled through VPC VPN endpoints or VPC peering. Use these features wisely and sparingly. The more you directly access resources across accounts, the greater your attack surface becomes. For most purposes it should also not be necessary.

Ideally, you have your services also assume roles with temporary credentials across accounts and use the official, audited and hardened APIs supplied by Amazon. Tools like an AWS signing proxy make it even easier to ensure IAM is your one source of truth regarding credentials while retaining a sufficiently streamlined development process, even across scattered environments.

We would advise against delegating direct IAM write access to a continuous delivery role or integration environment, as those credentials could theoretically be obtained without supervision and used to take over IAM by a rogue actor or developer via manipulated artifacts or build instructions.

Attack scenarios and mitigation

[Note: For the purposes of this listing password and MFA authentication in combination are considered secure and hard to compromise.]

To show you the advantages of the concepts we described earlier let’s take a look at a plausible (and usual) attack vector and how the steps we talked about earlier help to mitigate it:

- Losing your access key does not result in access to resources or vital information: This is the most important part. Should any attacker gain access to your key (for whatever reason) they may only create new access keys or change your password for the same account. That’s where their reign ends.

- To cause actual damage, an attacker would need to know which roles to assume in foreign accounts. An attack therefore requires knowledge of two arbitrary values: the account ID/name and the roles to assume. AWS does not allow listing existing roles/policies without prior access. Consequently, neither the account ID(s) nor the roles to assume should be public knowledge or documented publicly.

- Even then the attacker still needs to get ahold of a valid MFA token (or the token producer). Otherwise assuming a role in a different account will not be possible. The relevant temporary credentials will only be valid for a short amount of time, meaning they will have to re-authenticate and will not be able to re-use the compromised credentials on another system.
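The MFA requirement in this scenario is typically expressed in the trust policy of the role being assumed. The snippet below is a minimal sketch that builds such a policy document as a Python dictionary; the function name and the account ID are placeholders of ours, while `sts:AssumeRole` and the `aws:MultiFactorAuthPresent` condition key are standard IAM policy elements.

```python
import json


def mfa_trust_policy(identity_account_id):
    """Build a role trust policy that only allows MFA-authenticated callers.

    `identity_account_id` is the ID of the account holding your users
    (Account 1 in the text). The resulting document would be attached to
    a role in the account holding the resources (Account 2).
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {
                    "AWS": f"arn:aws:iam::{identity_account_id}:root"
                },
                "Action": "sts:AssumeRole",
                # Requests made without a valid MFA token do not match
                # this statement and are therefore not allowed.
                "Condition": {
                    "Bool": {"aws:MultiFactorAuthPresent": "true"}
                },
            }
        ],
    }


if __name__ == "__main__":
    # "111111111111" is a placeholder account ID.
    print(json.dumps(mfa_trust_policy("111111111111"), indent=2))
```

With a policy like this in place, a stolen access key alone is not enough to assume the role; the caller also has to present a current MFA code when requesting temporary credentials.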

Action items

- You are using a dedicated account to manage your users and groups.

- MFA is obligatory for all user accounts.

- Your access tokens (passwords, access keys) have strong security policies and are rotated frequently.

- You have multiple accounts set up with clear use cases and purposes.

- You have people assume roles in those relevant accounts to acquire temporary security credentials to work with resources. Furthermore these roles and the process of assuming them are secured by MFA.

Auditing and Monitoring

CloudTrail

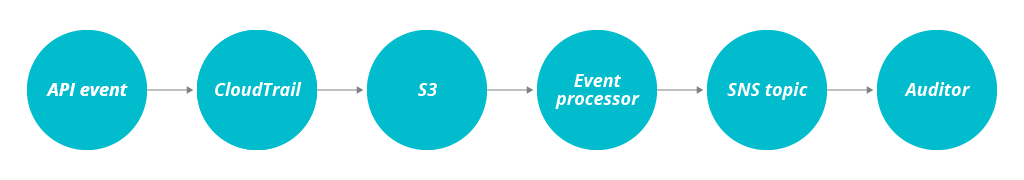

All security measures are for nothing if you are not diligent about auditing relevant calls to protected systems and monitoring the impact of their actions. AWS provides you with a powerful tool capable of auditing most calls made to its API called CloudTrail. CloudTrail also allows for log events to be aggregated and stored in S3, which makes is possible to do dynamic processing based upon the collected events. By keeping a whitelist of roles, services and access policies you can then set up an automated way of discovering possible security incidents and be notified almost instantly.

The general design would look like this:

Be sure to enable CloudTrail for all regions, otherwise it will only log in the region you originally provisioned it in. With the number of regions AWS is available in steadily growing, creating/using unsupervised and probably undetected resources in an obscure region is a common vector of attack.
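The whitelist-based processing of CloudTrail events described above could look roughly like this. It is a simplified sketch: it assumes the log files have already been fetched from S3 and parsed into a list of record dictionaries, and the allowed regions and role names are made-up examples. The fields it reads (`eventName`, `awsRegion`, `userIdentity.sessionContext.sessionIssuer.userName`) follow the CloudTrail record format.

```python
# Hypothetical allowlists; replace them with the regions and roles you use.
ALLOWED_REGIONS = {"eu-west-1"}
ALLOWED_ROLES = {"developer", "deployer"}


def role_name(record):
    """Return the name of the assumed role behind a record, or None."""
    issuer = (
        record.get("userIdentity", {})
        .get("sessionContext", {})
        .get("sessionIssuer", {})
    )
    return issuer.get("userName")


def suspicious(records, regions=ALLOWED_REGIONS, roles=ALLOWED_ROLES):
    """Return (event name, reasons) pairs for records outside the allowlists."""
    findings = []
    for record in records:
        reasons = []
        if record.get("awsRegion") not in regions:
            reasons.append("unexpected region")
        if role_name(record) not in roles:
            reasons.append("unexpected principal")
        if reasons:
            findings.append((record.get("eventName"), reasons))
    return findings
```

A function like this could run whenever a new log file arrives and hand its findings to your notification channel. Calls that were not made through an assumed role have no session issuer and are flagged as "unexpected principal", which matches the setup described earlier where all work happens through assumed roles.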

Extended auditing

There are a number of ways to extend this system:

- Automated alerting in case of suspicious activity. Amazon Simple Notification Service (SNS) gives you the ability to call pretty much any HTTP endpoint you want or send email to an aggregated list.

Triggering a series of events would also be possible, for example shutting down a certain part or even a whole service in case of detected intrusion patterns.

- Aggregate all event information into a single, dedicated account with limited user access. Log events can be highly sensitive and a gold mine for attackers. Giving direct access to CloudTrail for all users should be discouraged and only allowed under a 4-eyes paradigm.

- Detecting massive scaling/abuse operations in progress. To introduce arbitrary rate limiting on service APIs you can set upper boundaries for groups of accounts when it comes to creating, modifying and deleting certain resource types (e.g. EC2 instances, EBS volumes, RDS instances etc.). That way, you ensure that even with proper credentials the impact of a probable attack is limited to as few resources as possible.

- Visible security KPIs. How many breaches of policy? How many access attempts? How many suspicious or flagged events within a certain timeframe?

Visibility is always key when arguing in favor of security related provisions, and CloudTrail is giving you the ability to visualize, in (almost) real time even, security related incidents or issues.
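As an illustration of the "massive scaling/abuse" item above, the following sketch counts resource-creating calls per principal within a time window and reports anyone above a threshold. It only detects bursts; it does not enforce any limit. The watched event names are real EC2 and RDS API actions, whereas the window, the limit and the function name are arbitrary choices for this example. Records are again assumed to be parsed CloudTrail entries.

```python
from collections import Counter
from datetime import datetime, timedelta

# API calls that create billable resources; extend as needed.
WATCHED_EVENTS = {"RunInstances", "CreateVolume", "CreateDBInstance"}


def burst_offenders(records, window_end, window=timedelta(minutes=10), limit=20):
    """Return {principal ARN: count} for principals exceeding `limit`.

    Only watched events with an eventTime inside the window
    [window_end - window, window_end] are counted. `window_end` is a
    naive datetime interpreted as UTC, like CloudTrail's eventTime.
    """
    window_start = window_end - window
    counts = Counter()
    for record in records:
        if record["eventName"] not in WATCHED_EVENTS:
            continue
        # CloudTrail timestamps look like 2014-03-06T21:22:54Z.
        event_time = datetime.strptime(record["eventTime"], "%Y-%m-%dT%H:%M:%SZ")
        if window_start <= event_time <= window_end:
            counts[record["userIdentity"]["arn"]] += 1
    return {arn: count for arn, count in counts.items() if count > limit}
```

The same counts can double as input for the security KPIs mentioned above, for example as "number of flagged bursts per week".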

Security Monkey

Netflix is famous for pioneering a lot of open source tools they build to run their infrastructure on AWS. Among them is Security Monkey. It’s a part of the Simian Army concept of automated auditing and compliance tools, with, currently, Chaos Monkey, Janitor Monkey and Conformity Monkey being the other three available.

Security Monkey itself is still actively developed, albeit sparingly. It provides a service that continuously scans your account(s) for possible violations of predefined policies and lets you gradually address these violations, acknowledge/justify them, or even classify whole accounts as “friendly” to alleviate the severity of the issues raised.

Security Monkey is also able to send you either daily or per-result email notifications, keeping you informed of possible security related changes in your account setup.

Regular review of auditing events

The best monitoring and auditing measures are useless without a continuous cycle of reviewing and resolving possible incidents. You need to establish a regular rotation of engineers dedicated to keeping track of the findings, actively engaged in resolving the incidents highlighted.

Better still, make it a part of a regular on-call schedule, so everyone has to assume responsibility from time to time.

Awareness is one thing; accurate, coordinated and timely response is another.

Action items

- You are using CloudTrail to keep track of all AWS API related events.

- You are actively monitoring possibly harmful events for your most important accounts.

- Incidents are automatically propagated to a group or multiple rotating groups of responsible teams capable of reacting in real time.

Application secrets

Managing secrets in a distributed, heterogeneous environment can be very taxing. Luckily though, AWS provides you with a well integrated toolset for incorporating secret management into your workflow seamlessly and effortlessly. The service is called AWS KMS, or Key Management Service. The basics are pretty easy to explain: you can create a secret key within AWS, which then lets you encrypt secrets. The result is a base64 encoded binary blob, which contains both information about the key needed to decrypt it and the actual secret. You then need to explicitly allow users, groups and roles to access certain keys in KMS to encrypt/decrypt secrets.

This whole workflow allows you to use KMS to effectively manage secret credentials within AWS. KMS can be used to encrypt S3 buckets, RDS databases, CloudTrail logs and to use many more features of other services more securely.

As a best practice, apply separation of concerns to KMS keys as well, i.e. every team or even every application should get their own.
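To make the workflow more tangible, here is a minimal sketch of the encrypt/decrypt round trip. It assumes `kms` is a KMS client object as provided by the AWS SDK for Python (for example `boto3.client("kms")`), passed in by the caller so the functions stay testable; the helper names and the key alias in the usage note are hypothetical.

```python
import base64


def encrypt_secret(kms, key_id, plaintext):
    """Encrypt a secret with the given KMS key and return a base64 string.

    `kms` is assumed to be a KMS client whose encrypt call accepts
    KeyId and Plaintext and returns a dict with a CiphertextBlob.
    """
    response = kms.encrypt(KeyId=key_id, Plaintext=plaintext.encode("utf-8"))
    # The ciphertext blob is binary; base64 makes it safe to store as text.
    return base64.b64encode(response["CiphertextBlob"]).decode("ascii")


def decrypt_secret(kms, blob):
    """Decrypt a base64 encoded blob produced by encrypt_secret.

    For symmetric keys the blob itself identifies the key that was used,
    so no key ID has to be passed here.
    """
    response = kms.decrypt(CiphertextBlob=base64.b64decode(blob))
    return response["Plaintext"].decode("utf-8")
```

A team member with encrypt rights would call something like `encrypt_secret(kms, "alias/team-a", "db-password")` and commit the resulting string, while the application calls `decrypt_secret` at startup using the role it runs under.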

Scenarios

- You want to make sure only your applications are able to access their credentials.

- You could, for example, let a certain group of users only encrypt credentials, and only allow your application’s roles to decrypt them at a later point in time, effectively barring anyone, even with correct user credentials, from ever stealing any sort of confidential information available to your applications. (This assumes the attacker is unable to access the running application environment or add malicious code to soon-to-be-deployed artifacts.)

- You want to manage your infrastructure on AWS using common provisioning tools and this should include your secrets.

- Keep your keys in KMS and let your application decrypt them at runtime using the AWS SDK. This could be anywhere: an EC2 instance (using instance profile credentials), a Lambda function (using the role associated with the function), or your local debugging instance (through a role you assumed on AWS externally to your application; remember, your users should probably never have direct access to KMS to decrypt credentials). There are SDKs readily available for all major languages, and even if there isn’t one for your language of choice, chances are there is a package provided by the community to ease your burden. Lastly, if there are no credible community tools, Amazon’s API is well documented and therefore lets you write your own integration with ease.

- You want to frequently rotate keys for encrypting credentials.

- Just create a new key for encryption purposes and switch the old key to decryption-only mode (by retiring the corresponding encryption rights through IAM). Then have all your teams encrypt their credentials again using the newer key(s) and disable the old key entirely. You can even automate this process, or scan for encrypted secrets in your source code control system still using old, read-only keys.
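The split between "may encrypt" and "may decrypt" from the first scenario could be expressed as two IAM policy documents, sketched below as Python dictionaries. `kms:Encrypt` and `kms:Decrypt` are real KMS actions; the function names and the key ARN in the usage note are placeholders, and the sketch assumes the key’s own key policy allows access to be managed through IAM policies.

```python
def encrypt_only_policy(key_arn):
    """Policy for the user group: may encrypt with the key, nothing else."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": ["kms:Encrypt"], "Resource": key_arn}
        ],
    }


def decrypt_only_policy(key_arn):
    """Policy for the application role: may decrypt with the key, nothing else."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": ["kms:Decrypt"], "Resource": key_arn}
        ],
    }
```

You would attach the first document to the group of people who maintain secrets and the second to the role your application runs under, for example with a key ARN such as `arn:aws:kms:eu-west-1:111111111111:key/example-key-id`. The same split supports the rotation scenario: removing the encrypt-only policy for an old key leaves it usable for decryption only.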

Action items

- You are using KMS to provide your teams with separate encryption keys, either on a per-team or even per-application basis.

- All of your application secrets are stored encrypted, at rest (and persisted in source control).

- You are limiting access to KMS keys on a need-to-know or need-to-use basis using IAM policies and roles.

- KMS keys are rotated frequently and automated provisions are constantly checking for outdated encrypted credentials (via a blacklist or by querying the KMS API).

Security Within Your Organization

Not everyone in an organization is interested in or even shares the same beliefs concerning security. Some are outright negligent, some are zealots without regard for processes or business value. The key within an organization is to hit the right tone for everyone and find a fair compromise between “maximum security” and driving the business with security as an afterthought.

The security interest group

A successful model for addressing security concerns, especially with distributed, agile teams carrying individual responsibility for separate business goals, has been to formalize the process of sharing security concerns, security related ideas and efforts as well as responding to strategic security requests in the form of an interest group.

A proposed solution would be the formation of a security interest group consisting of a limited number of individuals interested in security and security related topics, with at least one member from each team within the organization. These individuals form a team outside the confines of their current team’s structure and meet on a regular (mostly weekly) basis to discuss possible topics in and around a shared subject (in this case: security). They are also the proponents and watchdogs when it comes to security within their teams.

You can extend this role concept by also assigning each member of this group a superset of rights, delegating some security related tasks (e.g. creating roles and policies, creating keys or even users and groups) to its members, with a set of strict rules (e.g. a 4-eyes/6-eyes principle for roles/policies) attached to them.

Make sure members of the group are rotating continuously to avoid overexposure and fatigue. This also gives you the ability to spread knowledge more efficiently and effectively.

Interest group members have to hold themselves accountable against their own expectations. Furthermore, they will have to share vital aspects of their group discussions with their relevant teams on a regular basis.

Structuring the interest group around these key objectives, i.e. building an internally rooted community around security and security related topics, with advocates in every team, while delegating key tasks requiring an elevated level of security creates a foundation for an integrated thinking process which relates closely to a security minded but value-driven business.

Security KPIs

It is essential that the organization gives itself and upholds a number of KPIs related to security. Examples could be “Outdated packages are updated within X days”, or “Security incidents are resolved within X hours”, or “A member of the security interest group acknowledges an open issue in X number of minutes”.

These KPIs are very important to combat negligence, which is otherwise hard to tackle (following the broken windows theory). However, rules and preventative measures are one thing. Making sure everybody sticks to them is another. Following up on KPIs in a regular and organized fashion (e.g. during stand-ups, incident reports and post-mortems) is just one of a few possible ways of implementing a culture with security as a first class citizen.

Post mortems

Should it come to the unfortunate event of a security related incident, conducting at least a post-mortem with the people or the team involved in the incident at hand should be a given. This means getting everybody into the same room and discussing the outcome of the incident, what went wrong, what needs to be improved, and preventative measures for the future.

Ideally, these post-mortems are made available to a much broader audience, be it on an organizational level, company wide or even involving your target audience or customers. GitLab, for example, regularly publishes public post-mortems on their blog, and it has served them well in terms of community building, raising awareness and creating trust amongst their customer base. Sharing your failures (and a security related incident most certainly is a failure most of the time) and describing your action items, next steps and/or how you dealt with them is an opportunity to solidify your standing as a proponent of security and secure environments.

This strategy favors transparency over secrecy and increases awareness while improving education within the affected team, your organization and, if discussed publicly, with your customers and consumers.

Action items

- You have formed a security interest group which consists of at least one (ideally security minded) member of each team.

- You have given these members an elevated level of rights, for effective task delegation and to be able to respond to incidents adequately.

- You are circulating the findings and points of discussion of the interest group’s meetings regularly.

- You have security minded KPIs in place, with strong and effective rules of enforcing penalties upon violation.

Closing Thoughts

Amazon Web Services provides a lot of facilities to help secure environments, but they need to be understood and set up correctly. Identity and access management systems are difficult to get right, and AWS is no exception. Amazon’s solution reflects the inherent conflict between security and pragmatism since it’s neither straightforward nor easy to implement.

If you are unable to implement every recommendation in this document, try to get as far as you can and pick up the pace at a later point in time. Focus on the AWS recommended steps first and move on to the cross-account processes later.

Each and every one of them is a leap forward when it comes to the level of security you should strive for with your AWS cloud deployment.

Being aware of the shortcomings and tackling the most important issues when securing your AWS accounts is paramount. We hope this article gives you a closer look at, and a better understanding of, what hardening your AWS account setup means, along with the incentive to drive your own process of securing your cloud infrastructure forward in a reasonable and effective way.

This is just the start.

Thoughtworkers Lisa Therese Junger, Ben Cornelius, Folker Bernitt, Cade Cairns, Daniel Somerfield and Johannes Müller (AutoScout24) have contributed to this article. Thank you!

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.