We all remember the SaaS vendors’ promises: tools that would put critical data at our fingertips. Most of us were left disappointed. These often cumbersome tools force users down labyrinthine paths to get to partial answers, at best.

Wouldn’t it be easier if you could just ask a simple question and get your answer back in a flash? That’s where PerformanceAI comes in.

What is PerformanceAI?

PerformanceAI is a cross-agent marketing insights application developed by Thoughtworks to make marketing analytics smarter, more accessible and actionable. Its purpose is simple but powerful: any marketer should be able to ask a natural-language question about performance data (for example, “Show me win rates for our campaigns in financial services for H1 2025”) and get an instant, reliable answer.

The application is built with Python, Flask and React. It runs on Google Cloud’s BigQuery and Vertex AI, integrating Claude and Gemini models for precision reasoning and summarization. Using PerformanceAI, marketers can instantly look up data for a specific opportunity or account through retrieval-augmented generation. They get insights on all marketing interactions, the campaigns used, the contacts who responded and whether the opportunity was inbound.

In short, our goal was to enable a marketer to go to the agent and ask a business question, instead of having to go to an analyst or manually pull the data from systems like Salesforce.

Why did Thoughtworks build it?

Before PerformanceAI, marketing teams had performance data, but it was difficult to navigate. Data was often fragmented across systems. Functional teams were producing their own reports, resulting in inconsistent metrics and no single source of truth.

Instead of marketers being able to spot opportunities early, they were left waiting for the numbers to be cleaned up and crunched. PerformanceAI makes that experience simpler, more human: marketers can go straight to insights and act faster to impact the pipeline — whether that means spotting an insufficiently active buyer group at the qualification stage, or gaps in mid-funnel acceleration, or opportunities to increase win rates during solution development.

How was PerformanceAI developed?

For enterprise AI to work, it needs the right data foundations. In 2023, we revamped our go-to-market KPI framework and invested in data architecture on Google Cloud. This included onboarding more than 20 critical sales and marketing data sources into BigQuery, freeing us from fragmented SaaS interfaces and aligning data with workflows. When GenAI arrived, our data was already AI-ready.

We built out custom agents on Vertex AI so they could access the data directly. When the project began, the PerformanceAI team restructured marketing data for large language models. Early experiments led to a crucial insight: AI performs best when data is organized in a way that’s also intuitive for humans. Once the team had relabeled and restructured the datasets, the model could generate accurate, contextually relevant responses.
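To illustrate the relabeling idea, here is a minimal sketch in Python. The cryptic source field names and their readable replacements are invented for illustration and are not taken from PerformanceAI’s actual schema.

```python
# Hypothetical mapping from cryptic source-system field names to
# human-readable labels. None of these names come from the real datasets.
READABLE_NAMES = {
    "opp_stg_cd": "opportunity_stage",
    "bk_amt_usd": "booking_amount_usd",
    "mkt_infl_flg": "is_marketing_influenced",
}


def relabel(row: dict) -> dict:
    """Return a copy of the row with readable column names where known."""
    return {READABLE_NAMES.get(key, key): value for key, value in row.items()}


raw = {"opp_stg_cd": "Qualification", "bk_amt_usd": 120000, "region": "EMEA"}
print(relabel(raw))
```

A column called `opportunity_stage` gives both a human analyst and a language model a far better hint about its contents than `opp_stg_cd` does, which is the point of the insight above.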

The first working version reached heads of marketing in mid-2025, just months after the initial concept. Within the application, users could ask questions about opportunity data, bookings, win rates or campaign impact and get validated answers immediately. The model evolved from handling 15 core questions to handling the entire spectrum of growth-related questions.

Inside PerformanceAI

Under the hood, PerformanceAI runs on a multi-agent architecture built using Python and Flask, with a React front-end. It connects directly to Google BigQuery and leverages Vertex AI, integrating models such as Claude and Gemini for precision and contextual synthesis.

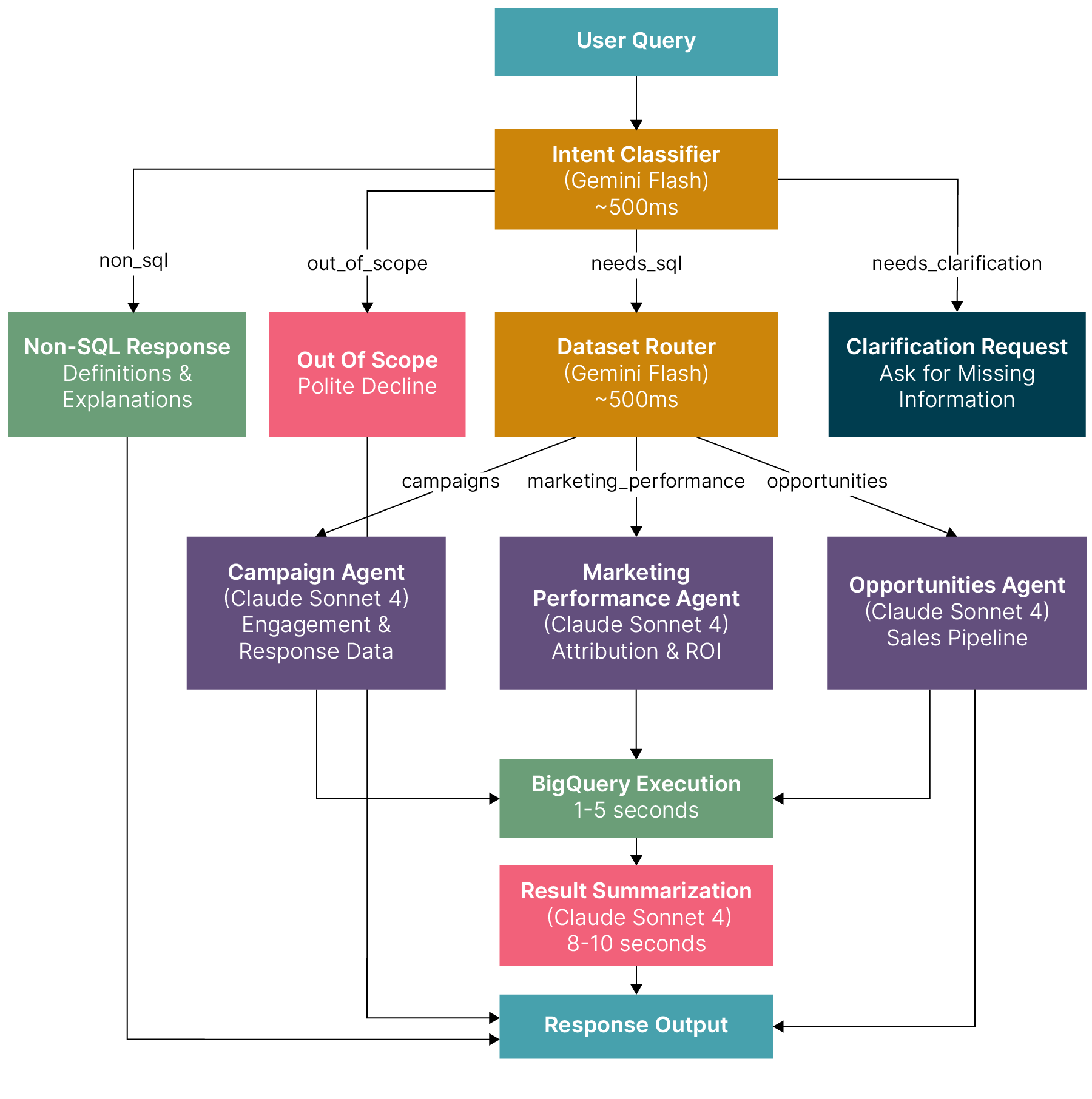

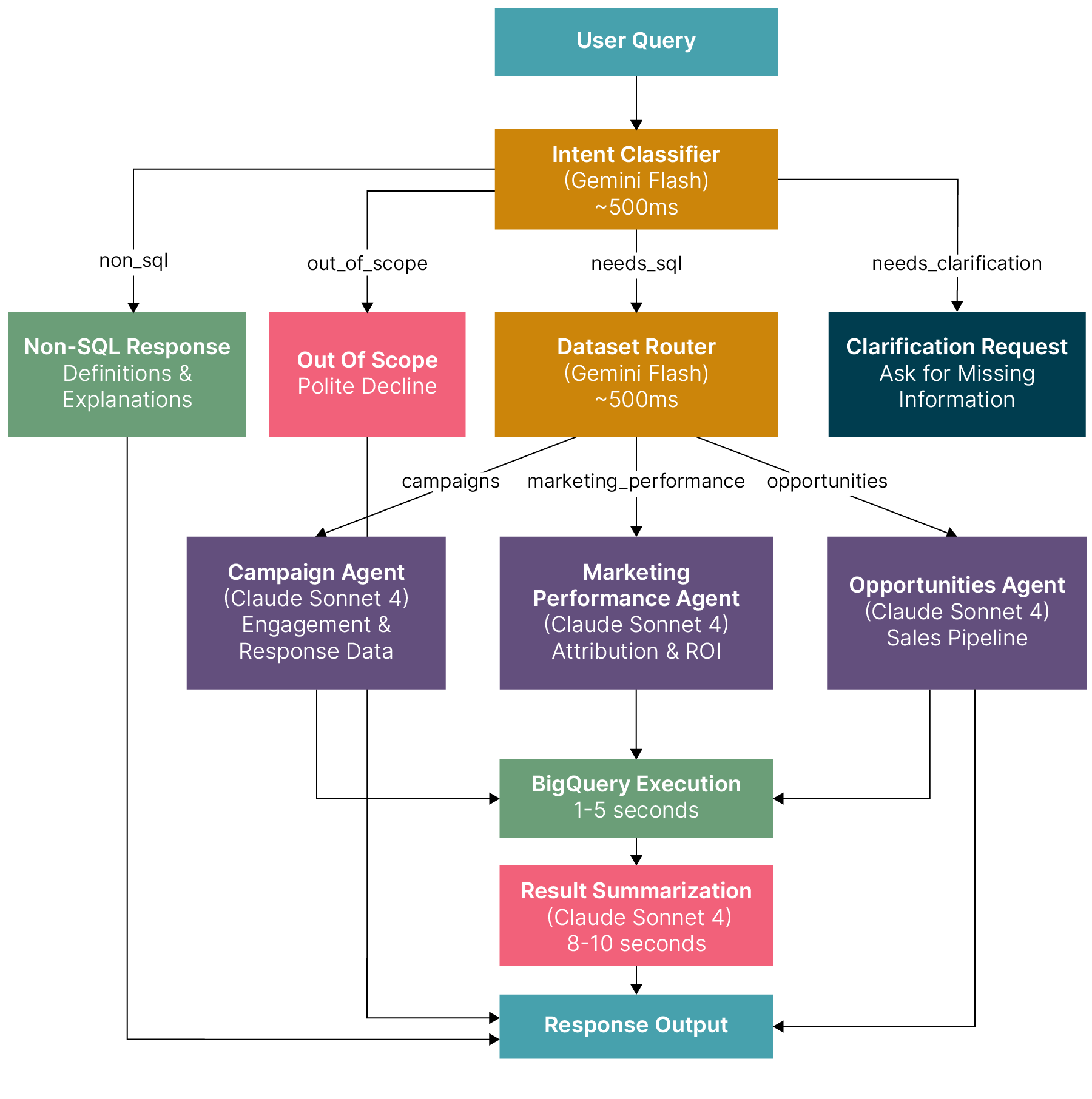

First, the user’s question goes to a routing agent, which decides what type of question is being asked and which dataset and agent to call next. It can call three agents, one for each of three datasets. Each agent has access to different business rules and fields in BigQuery.
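The routing step can be sketched roughly as follows. This is a simplified illustration, not the production design: a keyword score stands in for the model-based classification, and the agent names, dataset names, keywords and business rules are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    agent: str
    dataset: str
    business_rules: tuple[str, ...]


# Hypothetical routes: three agents, one per dataset. All names are invented.
ROUTES = {
    "opportunity": Route("opportunity_agent", "marketing.opportunities",
                         ("Count each opportunity once",)),
    "campaign": Route("campaign_agent", "marketing.campaign_touches",
                      ("Attribute touches within the opportunity window",)),
    "bookings": Route("bookings_agent", "sales.bookings",
                      ("Report amounts in USD",)),
}

# Keyword lists stand in for the LLM that classifies the question.
KEYWORDS = {
    "opportunity": ("opportunity", "win rate", "pipeline"),
    "campaign": ("campaign", "engagement", "influence"),
    "bookings": ("booking", "revenue"),
}


def classify(question: str, default: str = "opportunity") -> str:
    """Pick the label whose keywords appear most often in the question."""
    text = question.lower()
    scores = {label: sum(kw in text for kw in kws) for label, kws in KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else default


def route(question: str) -> Route:
    """Return the agent, dataset and rules for the classified question type."""
    return ROUTES[classify(question)]


print(route("What were total bookings last quarter?").agent)
```

Keeping the business rules attached to each route means the downstream agent only ever sees the rules and fields that apply to its own dataset.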

At the heart of the system is an autonomous prompt architect, which interprets a marketer’s question, fetches the relevant BigQuery schema, applies a 10-rule domain framework and builds a context-perfect prompt in real time. This is passed to Claude and Vertex AI, which convert natural language into enterprise-grade SQL queries.
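As a rough sketch of how such a prompt might be assembled, the function below combines a question, a table schema and a list of domain rules into one text-to-SQL prompt. The table, columns and rules shown are placeholders: the source does not list the ten rules, so only two invented ones appear here, and in a real system the schema would be fetched from BigQuery rather than hard-coded.

```python
from typing import Sequence, Tuple

Column = Tuple[str, str, str]  # (name, type, description)


def build_prompt(question: str, table: str,
                 schema: Sequence[Column], rules: Sequence[str]) -> str:
    """Assemble a text-to-SQL prompt from the question, schema and rules."""
    schema_lines = "\n".join(
        f"- {name} ({col_type}): {desc}" for name, col_type, desc in schema
    )
    rule_lines = "\n".join(f"{i}. {rule}" for i, rule in enumerate(rules, start=1))
    return (
        "You translate marketing questions into BigQuery Standard SQL.\n"
        f"Table: {table}\n"
        f"Columns:\n{schema_lines}\n"
        f"Business rules:\n{rule_lines}\n"
        f"Question: {question}\n"
        "Return a single SELECT statement and nothing else."
    )


# Hypothetical schema and rules, for illustration only.
schema = [
    ("opportunity_stage", "STRING", "Current sales stage"),
    ("industry", "STRING", "Account industry"),
    ("is_won", "BOOL", "True if the opportunity closed as won"),
]
rules = [
    "Win rate = won opportunities / closed opportunities.",
    "H1 means January through June of the stated year.",
]
print(build_prompt("Show me win rates in financial services for H1 2025",
                   "marketing.opportunities", schema, rules))
```

Building the prompt per question, rather than using one static template, lets the model see only the schema and rules relevant to the dataset the router selected.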

Each query passes through an AI-aware guardrail that validates and tunes it before execution, preventing heavy or unsafe calls. Once run, the results are distilled by an insight synthesiser, which summarizes full-dataset statistics and a smart sample for the user interface — typically within seconds.
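A guardrail of this kind could look something like the sketch below. It is an assumption about the general technique, not a description of PerformanceAI’s actual checks: it accepts a single read-only statement, rejects data-modifying keywords and appends a row limit when none is present. The function name, error class and default limit are all hypothetical.

```python
import re

# Keywords that indicate a statement could modify data or schema.
FORBIDDEN = re.compile(
    r"\b(insert|update|delete|drop|alter|create|merge|truncate|grant)\b",
    re.IGNORECASE,
)
READ_ONLY_START = re.compile(r"^(select|with)\b", re.IGNORECASE)
TRAILING_LIMIT = re.compile(r"\blimit\s+\d+\s*$", re.IGNORECASE)


class UnsafeQueryError(ValueError):
    """Raised when generated SQL fails a guardrail check."""


def guard_sql(sql: str, max_rows: int = 1000) -> str:
    """Validate generated SQL and cap the number of rows it can return."""
    cleaned = sql.strip().rstrip(";").strip()
    if ";" in cleaned:
        raise UnsafeQueryError("multiple statements are not allowed")
    if not READ_ONLY_START.match(cleaned):
        raise UnsafeQueryError("only SELECT queries are allowed")
    if FORBIDDEN.search(cleaned):
        raise UnsafeQueryError("query contains a data-modifying keyword")
    if not TRAILING_LIMIT.search(cleaned):
        cleaned = f"{cleaned}\nLIMIT {max_rows}"
    return cleaned


print(guard_sql("SELECT industry, COUNT(*) FROM marketing.opportunities GROUP BY industry;"))
```

The keyword check is deliberately conservative and would also reject a harmless query that mentions one of these words inside a string literal. BigQuery additionally offers dry-run queries and a maximum-bytes-billed setting, which could complement a text-level check like this one by bounding the cost of a query before it runs.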

Alongside this, PerformanceAI employs a retrieval-augmented generation (RAG) Search Agent, powered by Vertex AI embeddings and Gemini, to perform semantic searches across datasets. When a user asks a question, the system embeds it, performs millisecond-speed semantic lookup across indexed marketing and sales data, retrieves the most relevant records, and generates a contextual answer.
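The retrieval half of that flow can be illustrated with a small, self-contained sketch. To keep it runnable without cloud credentials, a toy bag-of-words vector stands in for the Vertex AI embeddings, and the records are invented; only the embed, compare and rank pattern reflects the description above.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: token counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def search(question: str, records: list, k: int = 2) -> list:
    """Return the k records most similar to the question."""
    query_vector = embed(question)
    ranked = sorted(records, key=lambda r: cosine(query_vector, embed(r)), reverse=True)
    return ranked[:k]


# Hypothetical indexed records, for illustration only.
records = [
    "Acme Bank opportunity: inbound, webinar campaign, 3 contacts responded",
    "Globex retail opportunity: outbound, email campaign",
    "Initech account: no marketing interactions recorded",
]
top = search("Which campaign touched the Acme Bank opportunity?", records, k=1)
print(top[0])
```

In the generation step, the retrieved records would be passed to the language model as context alongside the original question, so the answer is grounded in the matching data rather than in the model’s general knowledge.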

Together, these components make PerformanceAI capable of answering natural-language questions with real-time precision, surfacing insights like which buyer groups are under-engaged or which campaigns most influence mid-funnel progression.

What makes this innovation different?

What sets PerformanceAI apart isn’t just the technology but the collaboration behind it. The initiative was a joint effort among marketers, AI engineers embedded in marketing and the IT team: a practical expression of Thoughtworks’ principle of breaking silos.

It’s also part of a larger vision: to create “super agents” for every business function. PerformanceAI, itself a super agent, is evolving into a conversational assistant that not only reports but also acts, for example by updating buyer groups in the CRM or flagging unengaged opportunities to field marketers.

In essence, it’s a move toward AI-enabled marketing, where data doesn’t just inform decisions — it helps make them.

What results has PerformanceAI driven?

A simple but important test for any tech tool is: are people using it? On that basis, PerformanceAI hit the ground running. Field marketing leaders use it daily to track new opportunities, report on marketing-influenced deals and identify gaps in engagement.

The two regional heads of marketing who were first to trial the tool report that they can now instantly identify open opportunities and share insights and actions with their teams, a task that once took hours. The CMO refers to PerformanceAI as a ‘toothbrush’ product because she uses it at least twice a day.

From a business perspective, the project has strengthened alignment between marketing and sales. It’s now possible to monitor live performance metrics, identify unengaged buyer groups, and plan targeted outreach in one go — all powered by PerformanceAI.

What lessons were learned along the way?

Building an AI system for marketing wasn’t without challenges. The team had to adapt quickly as the technology evolved and rethink the architecture so that it could connect to other ‘super agents’.

From a leadership perspective:

Executive sponsorship is essential. Having the CMO and CRO involved from day one ensured adoption.

Cross-functional collaboration breaks silos. GTM Ops acted as a bridge across marketing, sales and IT.

Iterative delivery wins trust. Combining manual and automated reporting built confidence early.

Invest in AI engineers within the function. They own the intelligence layer, while IT provides the architecture, guardrails and support for scaling and AIOps.

What next for PerformanceAI?

The team is now training the model to handle a broader set of questions and automate recommendations. The vision is to connect PerformanceAI into Thoughtworks’ single intelligence workspace, so users can ask performance questions and have the system call the right super agent in the background. The aim is for all of this to be voice-enabled, with the ability to take actions without having to go to traditional CRMs to get things done.

PerformanceAI is no longer just an experiment — it’s becoming an everyday companion for marketers, a tangible example of how Thoughtworks applies its own AI expertise to improve performance, productivity and collaboration across teams.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.