Chances are you’ve worked with huge monolith systems that had to be broken down into an ecosystem of microservices. If so, you’ll also know this transition journey is hard but worthwhile.

This two-part blog series discusses how you could scale these microservices using either gRPC or Envoy Proxy. Part one covers gRPC, an open-source Remote Procedure Call (RPC) framework, and part two covers Envoy Proxy.

Network latency

Network latency refers to the delays in data communication over a network.

With monolithic systems, network latency would be the time delay in a client request reaching the server and the response from the server reaching the client.

With microservice architectures, different functionalities are served by different microservices, and each is deployed on a different node.

Imagine you're booking a ride on any ride-hailing application. This flow could depend on multiple services, each incurring its own network latency cost. Also, the larger the request and response in bytes, the higher the network latency incurred when transferring the data across the wire.
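As a rough illustration of how these costs add up, the sketch below sums a fixed per-call delay and a size-dependent transfer time over a chain of service calls. The struct, the function names and the simple bandwidth model are assumptions made for this example, not measurements from a real system.

```cpp
#include <cassert>
#include <vector>

// One service-to-service call in a request flow (all values are illustrative).
struct Hop {
    double roundTripMs;   // fixed network delay for the call
    double payloadBytes;  // request + response size on the wire
};

// Time to push a payload through a link of the given bandwidth.
double transferMs(double payloadBytes, double bytesPerMs) {
    assert(bytesPerMs > 0.0);
    return payloadBytes / bytesPerMs;
}

// Total network latency when the hops are made one after another.
double totalLatencyMs(const std::vector<Hop>& hops, double bytesPerMs) {
    double total = 0.0;
    for (const Hop& hop : hops) {
        total += hop.roundTripMs + transferMs(hop.payloadBytes, bytesPerMs);
    }
    return total;
}
```

Under this model, both extra hops and larger payloads push the total up, which is why the rest of this post looks at fewer blocked requests and smaller messages.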

gRPC helps reduce this network latency.

Let’s look at a few examples of how:

Comparing gRPC with HTTP 1.1

gRPC is a modern RPC protocol implemented on top of HTTP/2. HTTP/2 is a Layer 7 (application layer) protocol that runs on top of TCP (Layer 4, the transport layer), which in turn runs on top of IP (Layer 3, the network layer).

Since gRPC is built on top of HTTP 2, it offers all the advantages of HTTP 2 over HTTP 1.1.

Here’s how one can achieve better response times from gRPC:

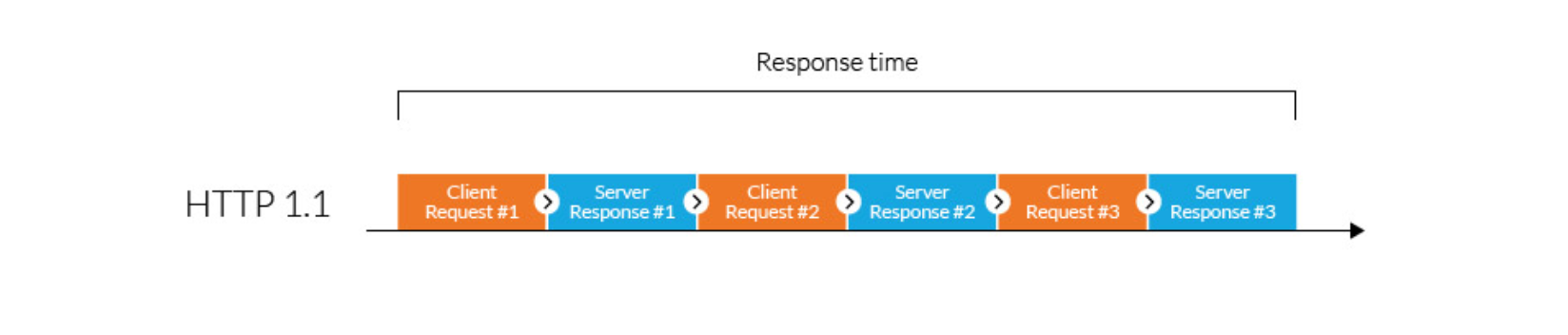

Multiplexing of requests. Let’s consider the situation where the client, the booking service (of the ride-hailing app mentioned earlier), is trying to validate user information using an authentication service.

The booking service makes the first request to the authentication service asking to validate user information. Let's call this Request #1. Before the client receives the response for Request #1, there may be another user who tries to book a ride and needs to be authenticated.

The booking service also has to make Request #2 to the authentication service, as shown in Fig.1. It need not create a new connection for Request #2, as HTTP 1.1 supports persistent connections, but it is still blocked until it receives the response to Request #1. This is due to head-of-line blocking, one of the disadvantages of HTTP 1.1.

Now, if there is another parallel connection to the authentication service, the booking service will not be blocked.

Therefore, by opening multiple HTTP connections and making concurrent requests, one could alleviate the head-of-line blocking issue.

But there are limits to the number of possible concurrent TCP connections between any client and server. Also, each new connection requires significant resources.

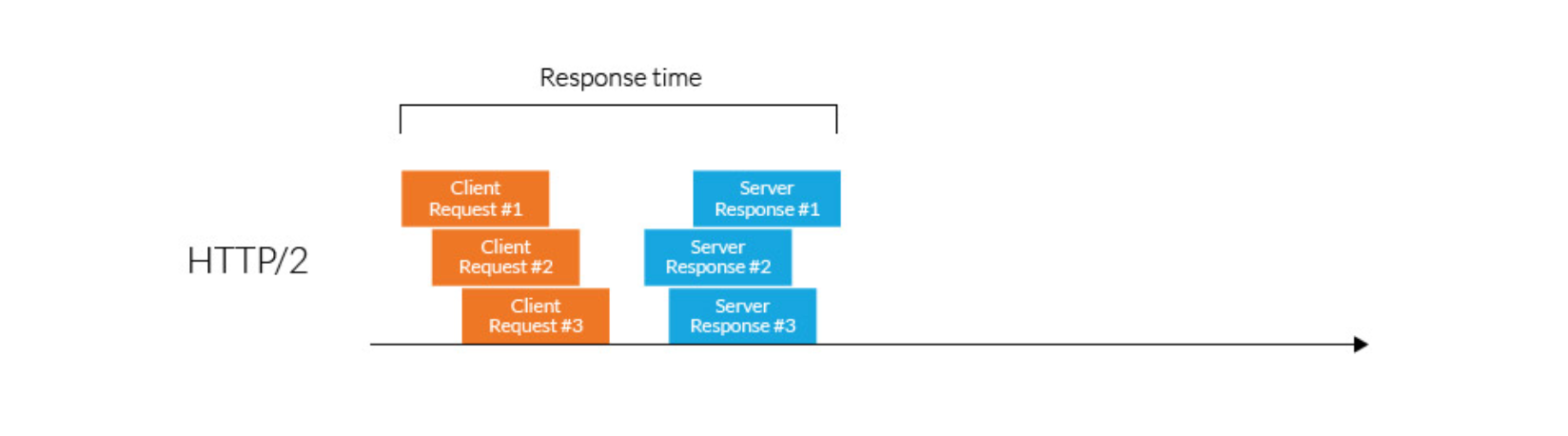

HTTP 2 solves this issue with a binary framing layer, which lets it multiplex requests to the server over a single connection.

HTTP/2 establishes a single connection object between the two machines, within which there are multiple streams of data. Each stream consists of multiple messages in the familiar request-response format.

Now, let's go ahead and replace the HTTP 1.1 connection between the booking service and authentication service with HTTP 2, as shown in Fig.2, below.

Request #1 is tagged to a particular stream, say Stream #1. When Request #2 needs to be sent to the authentication service, the booking service need not wait for Response #1. It could just tag Request #2 with a different stream identifier (say Stream #2) and send it.

The stream tags allow the connection to interweave these requests during transfer and reassemble them at the other end.
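The tagging and reassembly can be pictured with a small sketch. The `Frame` struct and the three functions below are hypothetical and heavily simplified: real HTTP/2 frames also carry a type, flags and a length, and real stream identifiers follow rules this example ignores.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <string>
#include <vector>

// A simplified frame: a chunk of one message, tagged with its stream.
struct Frame {
    std::uint32_t streamId;
    std::string chunk;
};

// Split one message into frames of at most chunkSize bytes.
std::vector<Frame> toFrames(std::uint32_t streamId, const std::string& message,
                            std::size_t chunkSize) {
    assert(chunkSize > 0);
    std::vector<Frame> frames;
    for (std::size_t pos = 0; pos < message.size(); pos += chunkSize) {
        frames.push_back({streamId, message.substr(pos, chunkSize)});
    }
    return frames;
}

// Sender side: alternate frames from two streams on the same connection.
std::vector<Frame> interleave(const std::vector<Frame>& a, const std::vector<Frame>& b) {
    std::vector<Frame> wire;
    std::size_t i = 0, j = 0;
    while (i < a.size() || j < b.size()) {
        if (i < a.size()) wire.push_back(a[i++]);
        if (j < b.size()) wire.push_back(b[j++]);
    }
    return wire;
}

// Receiver side: group chunks by stream id, keeping arrival order per stream.
std::map<std::uint32_t, std::string> reassemble(const std::vector<Frame>& wire) {
    std::map<std::uint32_t, std::string> messages;
    for (const Frame& frame : wire) {
        messages[frame.streamId] += frame.chunk;
    }
    return messages;
}
```

Because every frame names its stream, the receiver can rebuild Request #1 and Request #2 independently, even though their frames arrived mixed together.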

Fig.2 Impact of HTTP 2 Multiplexing on Response time

With HTTP 2, there is no need to create multiple parallel HTTP connections to make concurrent calls, which avoids the cost of extra connections and improves response times.
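A back-of-the-envelope model shows the effect on response time. The functions below are an idealised sketch: they assume the server can work on multiplexed requests concurrently and they ignore transfer and contention costs, so the numbers only illustrate the shape of the difference.

```cpp
#include <cassert>
#include <numeric>
#include <vector>

// Completion time of each request when responses come back one at a time
// on a single connection: every request waits for all earlier ones.
std::vector<double> completionSerial(const std::vector<double>& serviceMs) {
    std::vector<double> done;
    double clock = 0.0;
    for (double ms : serviceMs) {
        assert(ms >= 0.0);
        clock += ms;
        done.push_back(clock);
    }
    return done;
}

// Completion times when requests are multiplexed on one connection and
// handled concurrently (idealised): each request takes only its own time.
std::vector<double> completionMultiplexed(const std::vector<double>& serviceMs) {
    return serviceMs;
}

// Average of a non-empty list of completion times.
double averageMs(const std::vector<double>& values) {
    assert(!values.empty());
    return std::accumulate(values.begin(), values.end(), 0.0) /
           static_cast<double>(values.size());
}
```

If Request #1 takes 30 ms and Request #2 takes 10 ms, Request #2 completes at 40 ms when it is blocked behind Request #1, but at 10 ms in the multiplexed model.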

Here’s more information on HTTP/1.1 vs HTTP/2 for those interested.

Smaller packet size. The network latency incurred for every request-response also depends on the size of the data packets being transferred. The smaller the packets, the lower the latency.

Let's consider the authentication request from the previous example and say the request contains the user's name and email address.

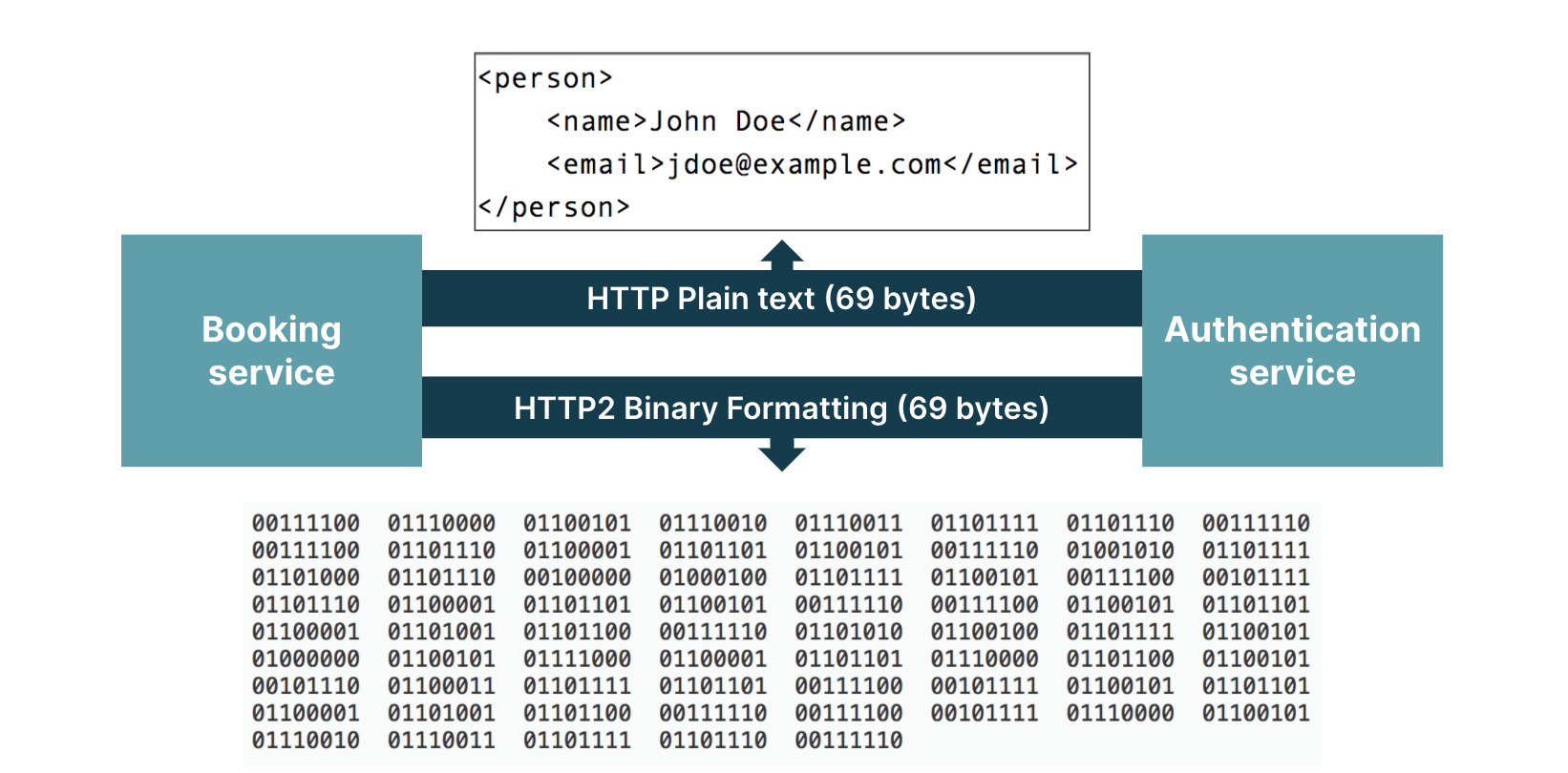

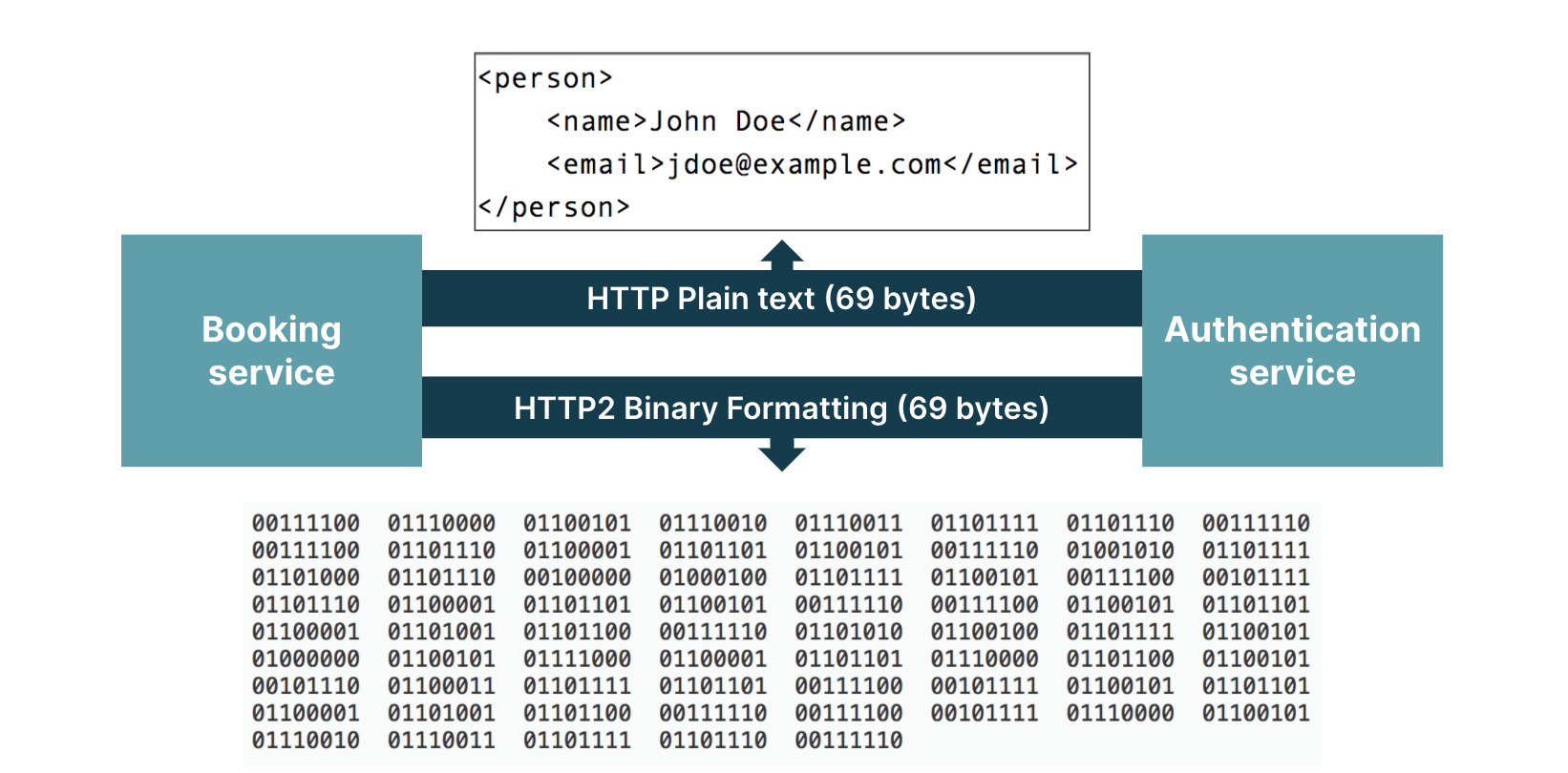

Consider this data being transferred as an XML document over HTTP 1.1. As shown in Fig.3, the payload in HTTP 1.1 plain-text format is 69 bytes. (Here’s the code used to generate the data on the wire and their sizes.)

In HTTP 2, the binary framing layer encodes requests and responses into binary frames.

This means that even if the total number of bytes being transferred is the same, as shown in Fig.3, the binary framing layer cuts them up into smaller units (frames) and multiplexes them, increasing the flexibility of the data transfer and lowering network latencies.

Here’s where one could read more about the advantages of the Binary framing layer.

Fig.3 Packet sizes using HTTP 1.1 and HTTP 2

(Whitespace in the HTTP plain text is added only for readability.)

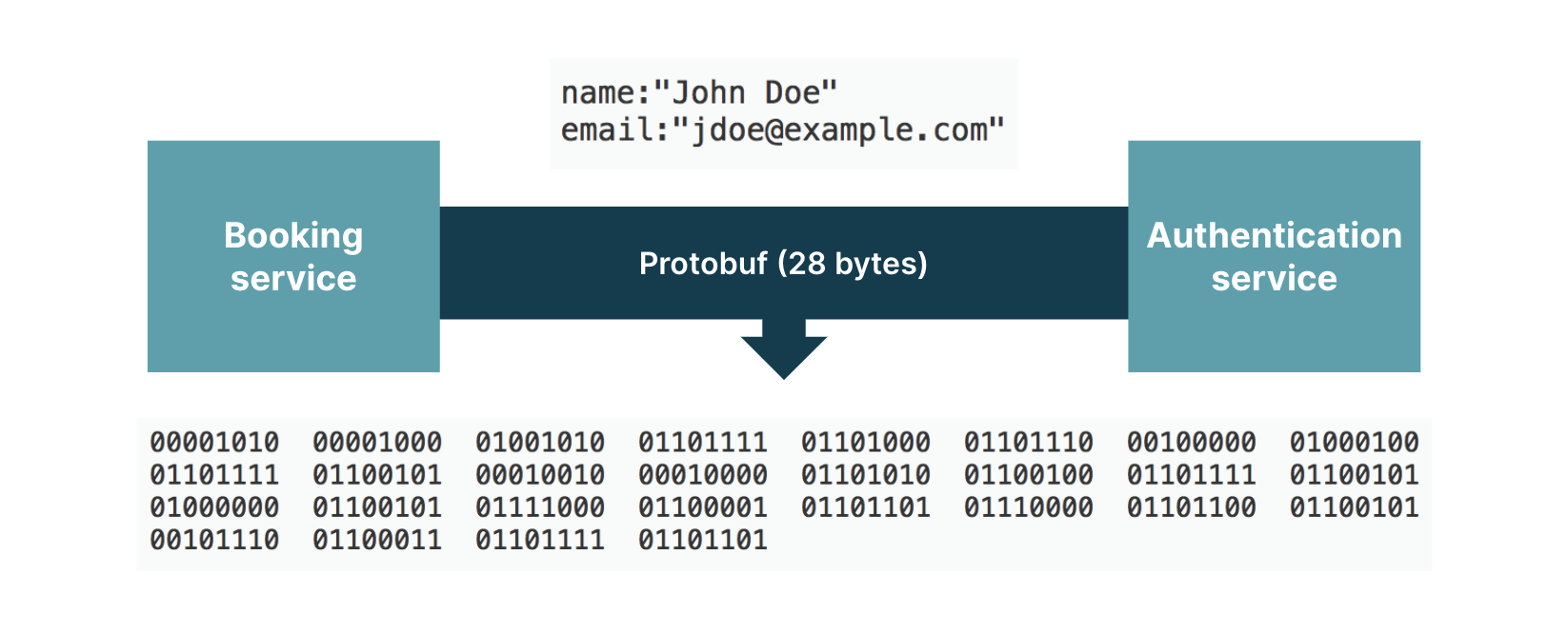

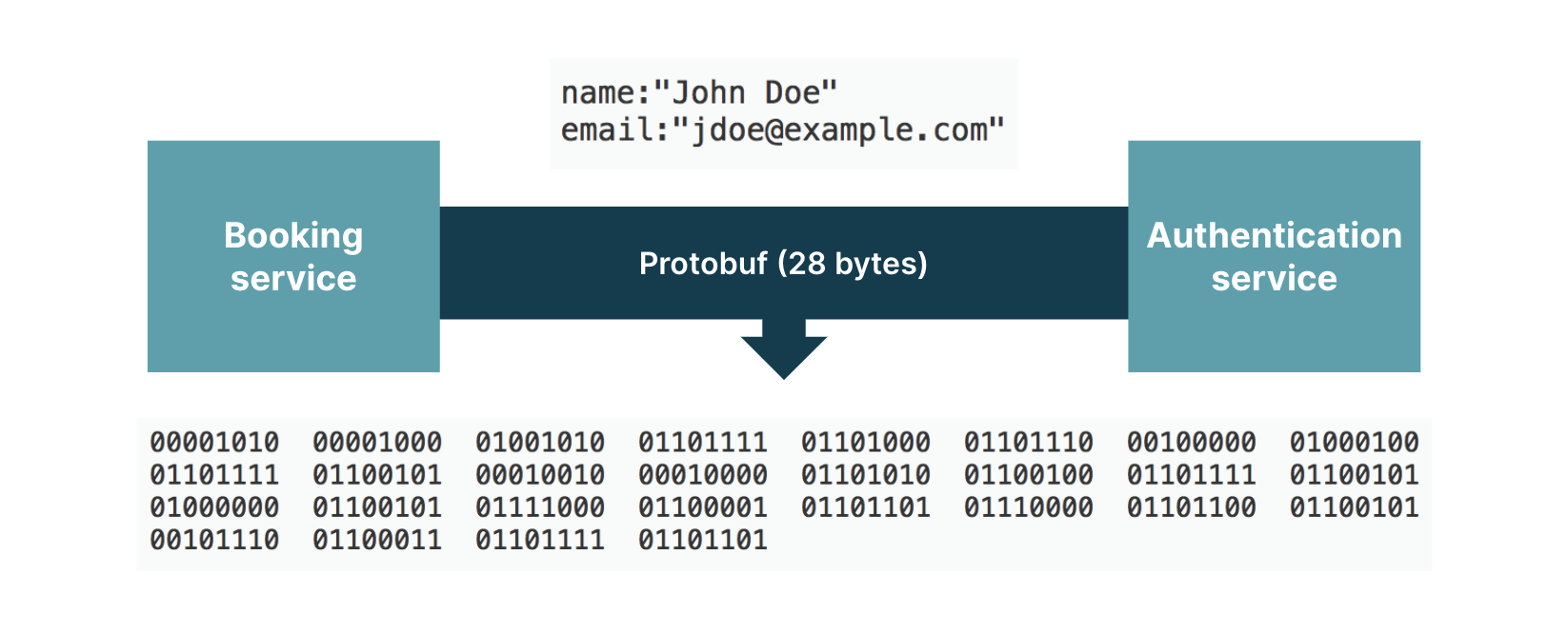

Using the same ride-hailing app example, let's say the booking and authentication services agree on a contract as shown in Fig.4, where ‘name’ will be the first field and ‘email’ the second.

    message Person {
        string name = 1;
        string email = 2;
    }

Fig.4 Contract decided between Booking service and Authentication service

The data to be sent across the wire is only the user's name and email, comprising just 28 bytes as shown in Fig.5.

This reduced packet size can be achieved using Protocol buffers. With Protocol buffers, the client and server agree on a contract: a proto message that gives every field in the request a type and a field number.

Thus the data sent on the wire consists of the field values, each prefixed by a compact tag derived from its field number, and not the field names. More on this in the next section.

Fig.5 Packet size using Protocol Buffer
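To make the size difference concrete, here is a minimal sketch that hand-encodes the Person message in the Protocol Buffers wire format and builds an equivalent XML string for comparison. It is not the Protobuf library: the function names are hypothetical, the XML element names are assumed, and the encoder only handles short string fields with small field numbers.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

// Append one length-delimited (wire type 2) string field.
// Assumes field numbers below 16 and values shorter than 128 bytes, so the
// tag and the length each fit in a single byte.
void appendStringField(std::string& out, std::uint32_t fieldNumber,
                       const std::string& value) {
    assert(fieldNumber >= 1 && fieldNumber < 16);
    assert(value.size() < 128);
    out.push_back(static_cast<char>((fieldNumber << 3) | 2));  // tag: field number + wire type
    out.push_back(static_cast<char>(value.size()));            // length prefix
    out += value;                                               // the value's raw bytes
}

// Hand-encode Person following the contract: name = 1, email = 2.
std::string encodePerson(const std::string& name, const std::string& email) {
    std::string out;
    appendStringField(out, 1, name);
    appendStringField(out, 2, email);
    return out;
}

// The same data as an XML document without whitespace (element names assumed).
std::string personAsXml(const std::string& name, const std::string& email) {
    return "<person><name>" + name + "</name><email>" + email + "</email></person>";
}
```

With the assumed values "John Doe" and "jdoe@example.com", `encodePerson` produces 28 bytes (24 bytes of text plus a one-byte tag and a one-byte length per field), while `personAsXml` produces 69 bytes.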

Protocol buffers

"Protocol buffers (a.k.a. Protobuf) are a flexible, efficient, automated mechanism for serialising structured data – think XML, but smaller, faster, and simpler."

You can specify how you want the information you’re serializing to be structured by defining Protobuf message types in .proto files.

Each Protobuf message is a small logical record of information, containing a series of name-value pairs. Fig.6. shows the Person.proto file from the previous example with name and email.

    message Person {
        string name = 1;
        string email = 2;
    }

Fig.6 Person.proto defining a Protobuf message

Once the messages have been defined, you run the Protobuf compiler for your application’s language on the .proto file to generate data access classes. These provide simple accessors for each field (like name() and set_name()) as well as methods to serialise and parse the whole structure to and from raw bytes.

For instance, if you have chosen to work with C++, running the compiler on the above example will generate a class called Person, which you can use to populate, serialise, and retrieve Person protocol buffer messages in your application, with code as shown in Fig.7.

    // Populate the generated Person class and write it to a file in binary form.
    Person person;
    person.set_name("John Doe");
    person.set_email("jdoe@example.com");
    fstream output("myfile", ios::out | ios::binary);
    person.SerializeToOstream(&output);

Fig.7 Sample code to populate and serialise protocol buffer messages
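The generated class hides the byte-level work of parsing. To give a feel for what reading a message back involves, here is a hand-rolled sketch that decodes a buffer of string fields into a map keyed by field number. It is an illustration under narrow assumptions (string fields only, single-byte tags and lengths) and not how the Protobuf library is implemented; `decodeStringFields` is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <string>

// Decode a buffer made only of length-delimited string fields.
// Assumes field numbers below 16 and values shorter than 128 bytes; a real
// parser handles multi-byte varints and every wire type.
std::map<std::uint32_t, std::string> decodeStringFields(const std::string& wire) {
    std::map<std::uint32_t, std::string> fields;
    std::size_t pos = 0;
    while (pos < wire.size()) {
        assert(pos + 2 <= wire.size());
        const auto tag = static_cast<unsigned char>(wire[pos++]);
        const auto length = static_cast<unsigned char>(wire[pos++]);
        assert((tag & 0x07) == 2);           // wire type 2: length-delimited
        assert(tag < 128 && length < 128);   // single-byte varints only
        assert(pos + length <= wire.size());
        fields[tag >> 3] = wire.substr(pos, length);  // upper bits hold the field number
        pos += length;
    }
    return fields;
}
```

The receiver then uses the shared .proto contract to map field 1 back to name and field 2 back to email, which is why the names themselves never need to travel over the wire.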

Here’s where one could read more about Protocol buffers and generating the classes.

Benefits of gRPC

gRPC has many advantages over traditional HTTP/REST/JSON mechanisms:

Binary protocol (HTTP/2)

Multiplexing many requests on one connection (HTTP/2)

Header compression (HTTP/2)

Strongly typed service and message definition (Protobuf)

Idiomatic client/server library implementations in many languages

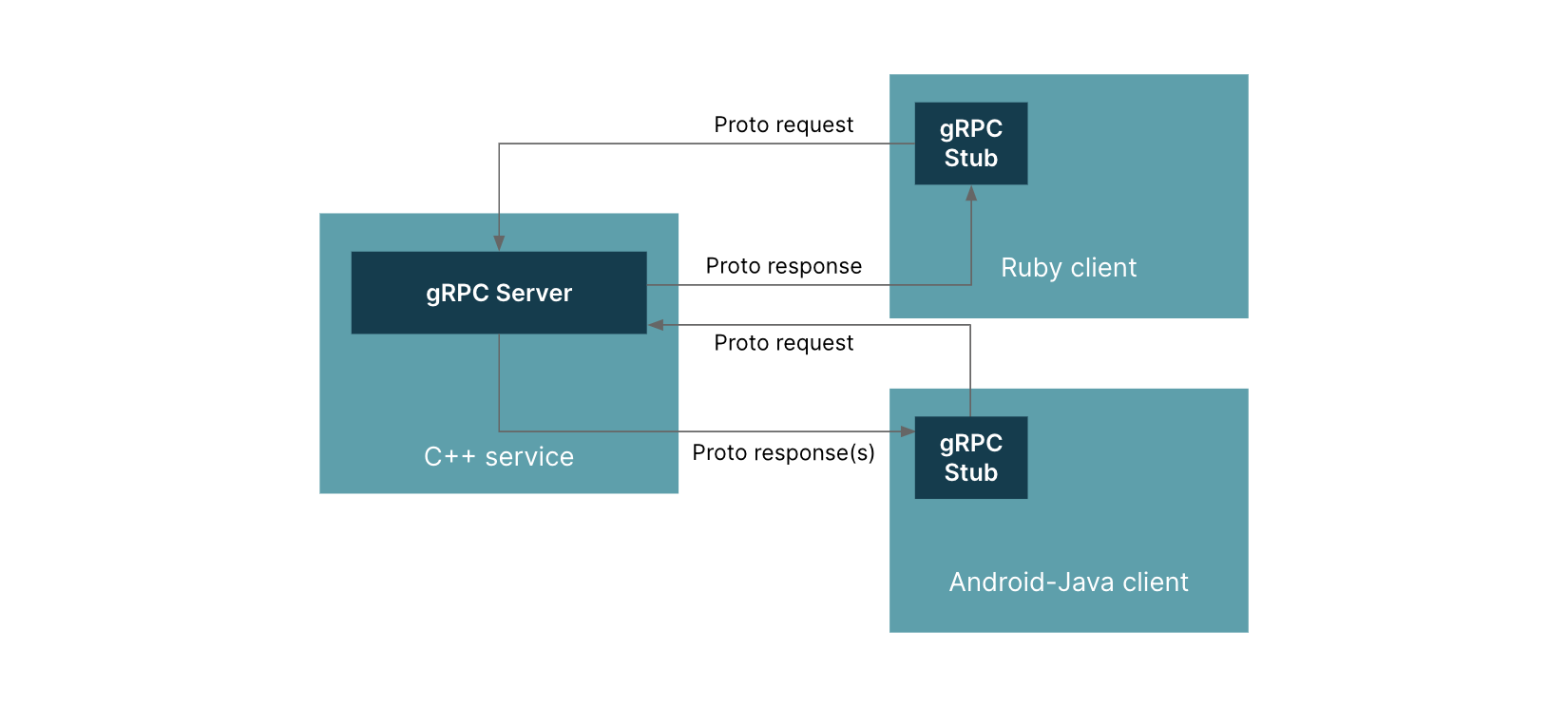

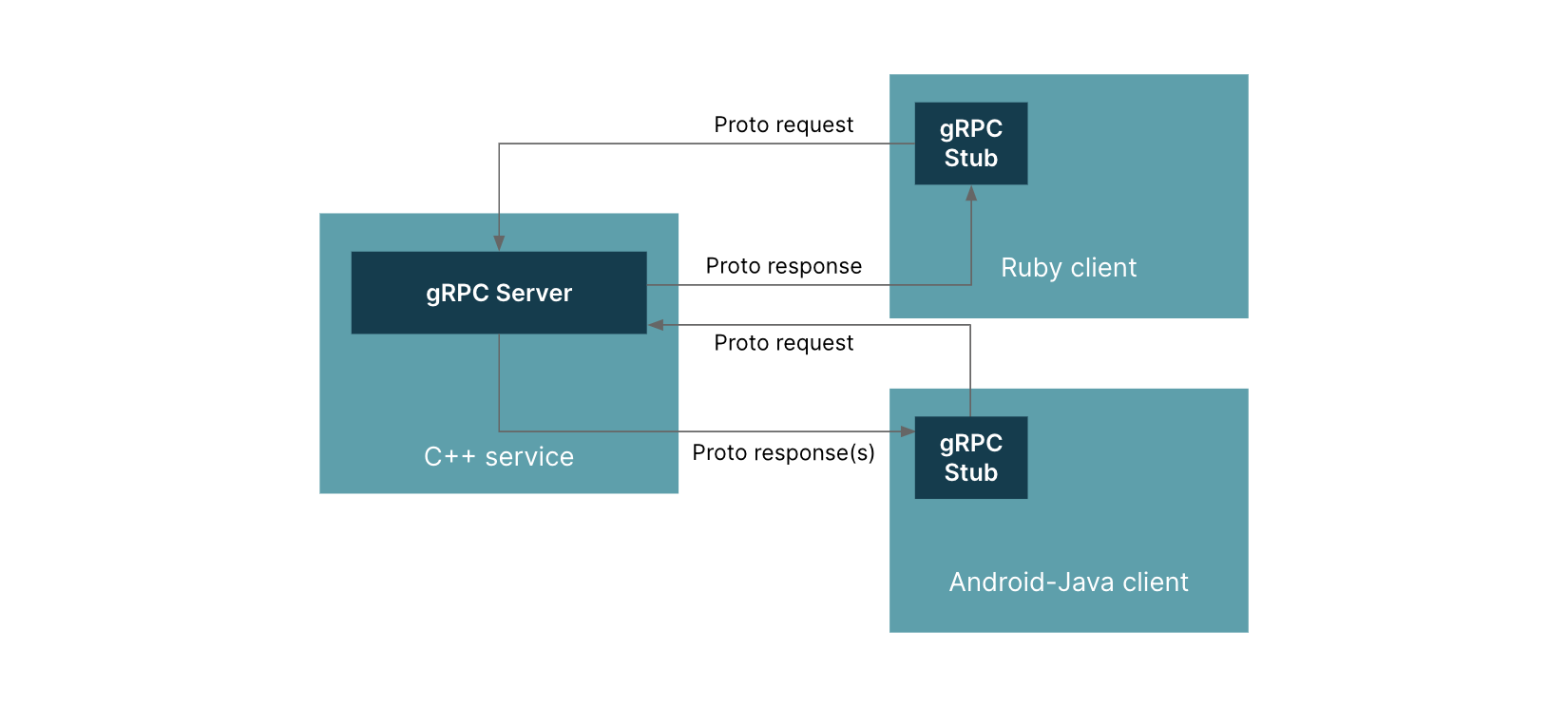

In gRPC, a client application can directly call a method on a server application on a different machine as if it were a local object, which makes it easier to create distributed applications and services.

gRPC is based on the idea of defining a service and specifying methods that can be called remotely with their parameters and return types.

On the server-side, the server implements this interface and runs a gRPC server to handle client calls. On the client-side, the client has a stub (client) that provides the same methods as the server.

Fig.8 gRPC architecture
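The stub idea can be sketched without the gRPC library at all. In the example below, the service interface, the toy validation rule and the in-process `Transport` function are all hypothetical stand-ins: with real gRPC, the stub and the service base class are generated from the .proto service definition, and the bytes travel over HTTP/2.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <utility>

// The service contract both sides agree on (hypothetical).
class AuthService {
public:
    virtual ~AuthService() = default;
    virtual bool validateUser(const std::string& email) = 0;
};

// Server side: the actual implementation of the contract.
class AuthServiceImpl : public AuthService {
public:
    bool validateUser(const std::string& email) override {
        return email.find('@') != std::string::npos;  // toy rule, not real authentication
    }
};

// Stands in for the network: takes request bytes, returns response bytes.
using Transport = std::function<std::string(const std::string&)>;

// Server-side dispatcher: reads the request, calls the implementation and
// encodes the reply as "1" or "0". The service must outlive the transport.
Transport serve(AuthService& service) {
    return [&service](const std::string& requestBytes) {
        return std::string(service.validateUser(requestBytes) ? "1" : "0");
    };
}

// Client side: the stub offers the same method but forwards it over the transport.
class AuthServiceStub : public AuthService {
public:
    explicit AuthServiceStub(Transport transport) : transport_(std::move(transport)) {}

    bool validateUser(const std::string& email) override {
        const std::string reply = transport_(email);
        assert(reply == "1" || reply == "0");
        return reply == "1";
    }

private:
    Transport transport_;
};
```

The booking service would only ever see `AuthServiceStub`, so calling `stub.validateUser(...)` reads like a local call even though the work happens on the other side of the transport.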

With gRPC, you can lower network latencies and serve more requests in less time. This also means the servers will now handle more requests per minute.

To avoid overloading the existing servers, we could spin up more servers to share the load. But that incoming load could still be distributed unevenly, causing a few servers to be over-utilised while the rest go under-utilised.

This is when you should probably introduce a load balancer to distribute the incoming traffic. Part two of this blog series expands on load balancing and Envoy Proxy.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.