In just over a decade, cloud has cemented itself as one of the foremost platforms in modern computing. That said, when it first emerged it was far from clear how powerful cloud would become, or how profound its implications for software development would be. In this article, I’ll explore some of the hidden story of cloud’s rise to prominence.

A modest start

Back in 2008, you would have had two primary choices when it came to cloud: Amazon EC2 and Google App Engine (GAE). Today, we’re well aware that the two products correspond to two cloud computing models, infrastructure as a service (IaaS) and platform as a service (PaaS). But back in 2008, they were simply what the two giants each called "cloud computing".

Both EC2 and GAE advocated making computing into a utility, like electricity: something accessible on demand, at low cost, and paid for based on usage. Where the services differed was in what they meant by on-demand computing power. EC2 defined it as hardware capability, while GAE defined it as “computing services in certain scenarios”. Computing services in certain scenarios were later divided further into PaaS and on-demand software, or SaaS. The three cloud computing models, IaaS, PaaS, and SaaS, then became widely known concepts.

In these early stages, it was hard to see anything revolutionary in these three computing models. IaaS was very similar to plain old server hosting in both concept and usage scenarios. GAE had flaws as a web framework and was completely different from the mainstream programming models of the time. As for SaaS, it had been around for nearly a decade without anything particularly revolutionary emerging from it.

As a pragmatic technologist, my personal view was that cloud computing was a poorly constructed gimmick. It was only as cloud gradually evolved that I began to appreciate its true potential and the far-reaching influence it would have on the software industry. Over the past decade, the cloud computing platform itself has steadily developed into the de facto standard for the industry.

Let's briefly review the key technical developments in cloud computing over the past 10 years and their impact.

Virtualization and IaC

Long before EC2 was released, we’d been using Ghost images to streamline server maintenance in our data centers. But from a software development perspective, the impact was limited: copying and transferring images between physical machines was time-consuming and laborious.

Virtualization changed things. Its strength is that it can boot a virtual machine directly from a virtual image, so managing virtual machines becomes no different from managing virtual images. That may sound like a small technical step, but conceptually it is a big change. Data center operators no longer maintain a set of different server environments and configurations; instead, they manage virtual images. All the servers can share the same environment, which is much easier to manage than before.

EC2 built on this and took it a step further: as a remote service, it provides comprehensive APIs for managing virtual machines and images. As a result, allocating, starting, and stopping machines and loading images onto them could be fully automated.

At this point, the true significance of the technology and the revolution it would foment in the industry starts to become clear.

Firstly, hardware is abstracted into a description of computing power.

Now, the specifications of individual machines, such as the CPU model, number of cores, and memory, matter little. This sea change radically alters how we think about, and purchase, hardware.

When we describe computing power rather than detailed hardware specifications, it’s as though the hardware is now defined by software. Take the EC2 platform as an example: by running scripts written against the EC2 API, together with AMIs (Amazon Machine Images), we can generate a hardware system in the cloud that meets our requirements.

This practice has dramatically changed the hardware procurement process. No longer are we thinking about the number of servers per rack or what processors our servers have. All we need to do is define our requirements for specific computing power.
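To make "define our requirements for specific computing power" concrete, here is a minimal sketch of the idea: we state how much CPU and memory we need, and a function picks the cheapest offering that satisfies it. The catalog, instance names, and prices below are hypothetical, not any provider's real offering, and real platforms weigh many more factors.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceType:
    name: str
    vcpus: int
    memory_gib: float
    hourly_cost: float


# Hypothetical catalog: names and prices are illustrative only,
# not any provider's real offering.
CATALOG = [
    InstanceType("small", 2, 4, 0.05),
    InstanceType("medium", 4, 8, 0.10),
    InstanceType("compute", 8, 16, 0.30),
    InstanceType("large", 8, 32, 0.40),
]


def cheapest_fit(vcpus: int, memory_gib: float, catalog=CATALOG) -> InstanceType:
    """Return the cheapest instance type providing at least the requested capacity."""
    candidates = [t for t in catalog if t.vcpus >= vcpus and t.memory_gib >= memory_gib]
    if not candidates:
        raise ValueError("no instance type satisfies the requested capacity")
    return min(candidates, key=lambda t: t.hourly_cost)


# We state what we need; the platform decides which hardware that maps to.
print(cheapest_fit(vcpus=6, memory_gib=12).name)  # compute
```

The caller never names a machine, rack, or processor; it only describes capacity, which is the shift in thinking the paragraph above describes.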

Moreover, this software-defined hardware can be managed like software.

Since the birth of the IT industry, software and hardware have existed as entirely separate entities. Hardware was relatively fixed, and any update or maintenance required considerable lead time. Software evolved and improved far more easily.

In software, we have efficient and automated change management processes: software configuration management (SCM), workspaces, build pipelines, continuous integration, and continuous delivery all play their part. Hardware, however, still relied on manual operations, which are error-prone and inefficient compared to the change management we see in software.

With EC2, startup scripts and the generation of virtual images can be done automatically. That meant that all the change management practices that apply to software could be applied to virtualized hardware as well.
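Once infrastructure is described as text, the ordinary software tooling for change management applies to it. The minimal sketch below assumes a hypothetical infrastructure definition stored as a Python dict (standing in for whatever definition format a team actually uses) and uses the standard library's `difflib` to produce a reviewable diff between two versions, just as we would for application code.

```python
import difflib
import json

# Hypothetical infrastructure definitions, kept in version control like any other code.
spec_v1 = {"web": {"image": "app-1.0", "count": 2, "cpu": 2, "memory_gib": 4}}
spec_v2 = {"web": {"image": "app-2.0", "count": 4, "cpu": 2, "memory_gib": 4}}


def render(spec: dict) -> list[str]:
    # Stable, key-sorted text rendering so that diffs only show real changes.
    return json.dumps(spec, indent=2, sort_keys=True).splitlines()


def changed_lines(old: dict, new: dict) -> list[str]:
    """Return only the added/removed lines of a unified diff between two specs."""
    diff = difflib.unified_diff(render(old), render(new), "infra-v1", "infra-v2", lineterm="")
    return [
        line for line in diff
        if line[:1] in ("-", "+") and not line.startswith(("---", "+++"))
    ]


for line in changed_lines(spec_v1, spec_v2):
    print(line)
```

Sorting the keys matters: without a stable rendering, two equivalent definitions could produce noisy diffs, which would undermine code review of infrastructure changes.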

This is what we now call Infrastructure as Code (IaC). IaC is undoubtedly a huge change, not only technically but also conceptually. It fundamentally changed how we understand software development and laid the groundwork for subsequent developments such as software-defined storage (SDS) and software-defined everything (SDE).

Elasticity and the DevOps revolution

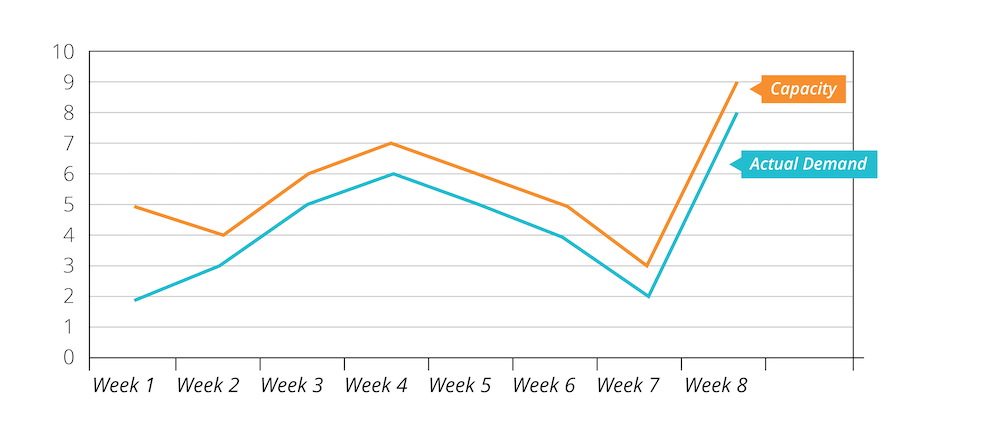

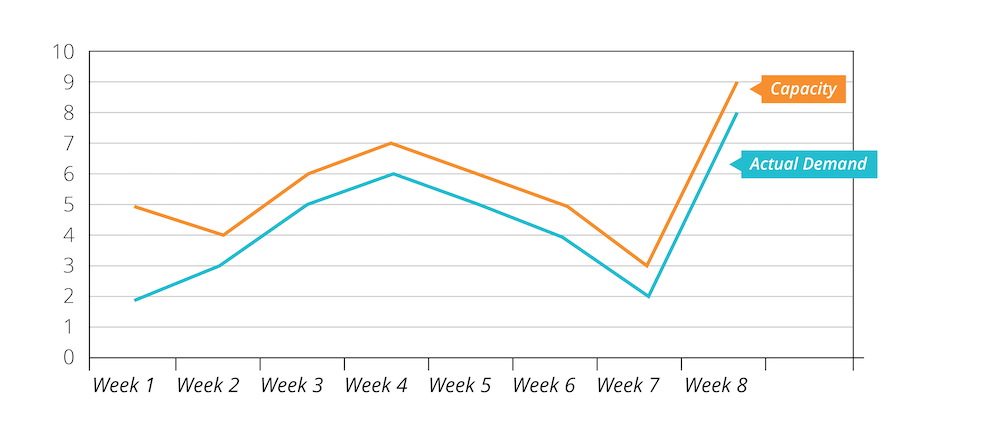

Another major benefit that cloud delivers is computing elasticity. Typically, computing elasticity is understood as the ability to adjust computing resources on demand. Need extra capacity for your busy season? Simply spin up 10,000 extra machines, and spin them down again when things quiet down. No more building over-provisioned data centers that sit largely idle outside peak periods.
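The arithmetic behind capacity on demand can be sketched in a few lines: divide the current load by what one machine can handle, round up, and clamp to a floor and ceiling. The parameter names and the 100-requests-per-second capacity below are hypothetical; Kubernetes' Horizontal Pod Autoscaler, for comparison, uses a similar ratio-based rule (current replicas scaled by current metric over target metric, rounded up).

```python
import math


def desired_instances(load: float, capacity_per_instance: float,
                      min_instances: int = 1, max_instances: int = 10_000) -> int:
    """Instances needed to serve `load`, clamped to the allowed range."""
    needed = math.ceil(load / capacity_per_instance)
    return max(min_instances, min(max_instances, needed))


# Busy season: 950 requests/s at 100 requests/s per instance, so scale out.
print(desired_instances(950, 100))  # 10
# Quiet period: scale back in, but keep a floor of one instance.
print(desired_instances(0, 100))  # 1
```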

Elasticity delivers more than just capacity on demand

That particular facet of elasticity is easy to understand. However, to truly appreciate how revolutionary it is, we need to consider more specific scenarios.

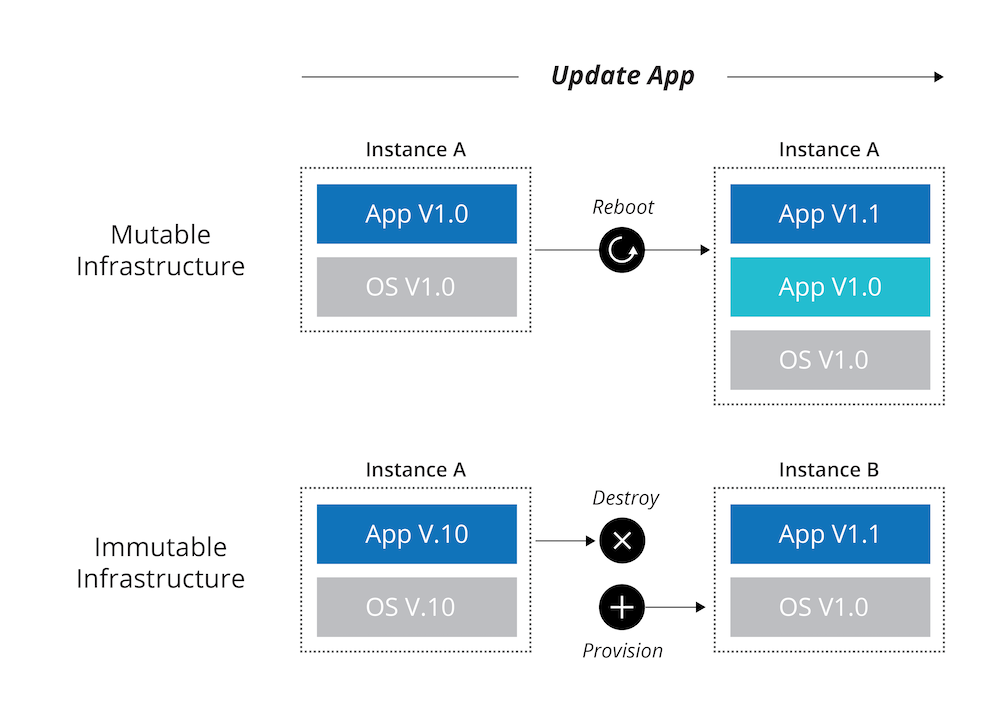

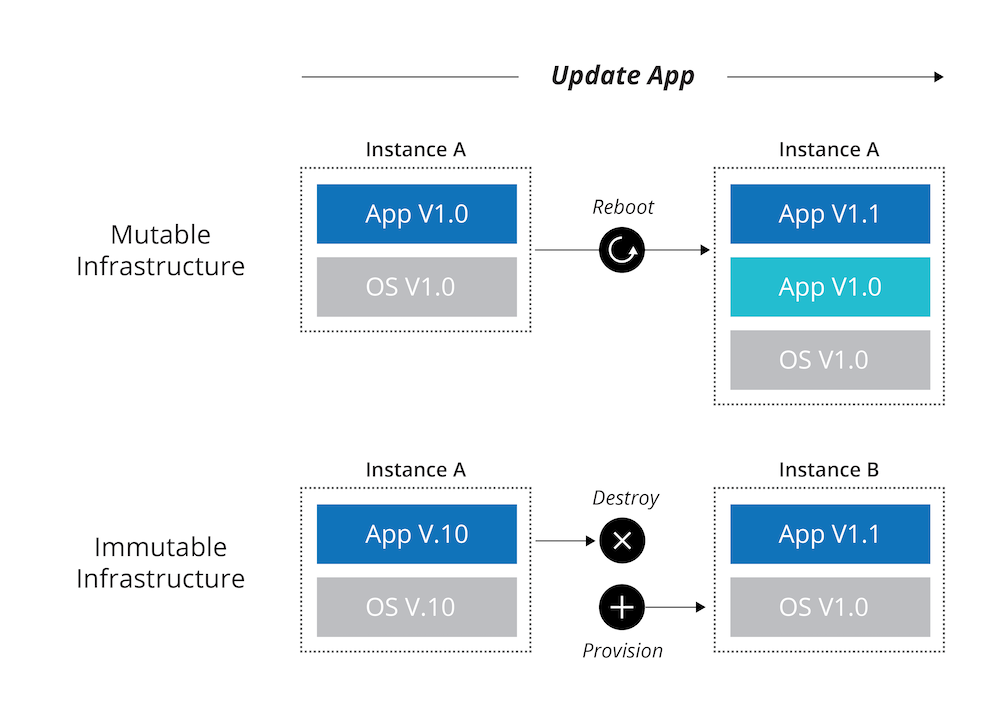

Let's look at a seemingly unrelated scenario: we run version 1.0 of software A on one machine and need to upgrade it to 2.0. What should we do in a cloud environment to achieve this?

From the perspective of machine management, the common practice would be to log into the machine, typically via a jump server, and run scripts to upgrade it.

From the elasticity perspective, we can describe the upgrade as three phases of elastic demand:

- Only one machine uses A 1.0;

- Two computing resources are needed, one using A 1.0 and the other using A 2.0;

- Scale back to one machine using A 2.0.
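The three phases above can be modelled as a minimal sketch of upgrade-by-replacement. Here a `Machine` (a hypothetical type for illustration) is a frozen dataclass, so a running machine can never be modified in place; the upgrade instead scales out with machines built from the new image and then retires the old ones.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Machine:
    id: int
    image: str  # frozen: a running machine is never modified in place


def upgrade_by_replacement(pool: list[Machine], new_image: str,
                           next_id: int) -> list[list[Machine]]:
    """Return the machine pool at each elastic-demand phase of an upgrade."""
    phase1 = list(pool)
    # Phase 2: scale out with fresh machines launched from the new image.
    fresh = [Machine(next_id + i, new_image) for i in range(len(pool))]
    phase2 = phase1 + fresh
    # Phase 3: scale back in by retiring the machines built from the old image.
    phase3 = fresh
    return [phase1, phase2, phase3]


phases = upgrade_by_replacement([Machine(1, "A-1.0")], "A-2.0", next_id=2)
for n, machines in enumerate(phases, start=1):
    print(n, [m.image for m in machines])
```

Because old machines are discarded rather than edited, no machine accumulates hand-applied changes over time.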

These two practices rest on fundamentally different assumptions. The “updating via jump server” approach assumes that the environment has no elasticity, which was, of course, historically true. Because we were long convinced that computing environments have limited or even no elasticity, we may fail to make good use of it even when a cloud platform provides it.

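With elasticity, we can treat servers as immutable: instead of modifying an existing server, we replace it with a new one launched from a different image (an approach sometimes described as phoenix servers). The advantage is that you avoid snowflake servers, whose configurations drift through manual changes until each one is unique and impossible to reproduce.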

If we apply this practice to the entire environment, the "updating via jump server" approach is no longer needed: we achieve the same result by replacing the environment as a whole.

While we’ve grown to understand more about the elasticity of cloud computing platforms, we’ve also made many mistakes and been distracted by short-term rewards such as efficiency.

Getting to grips with configuration management

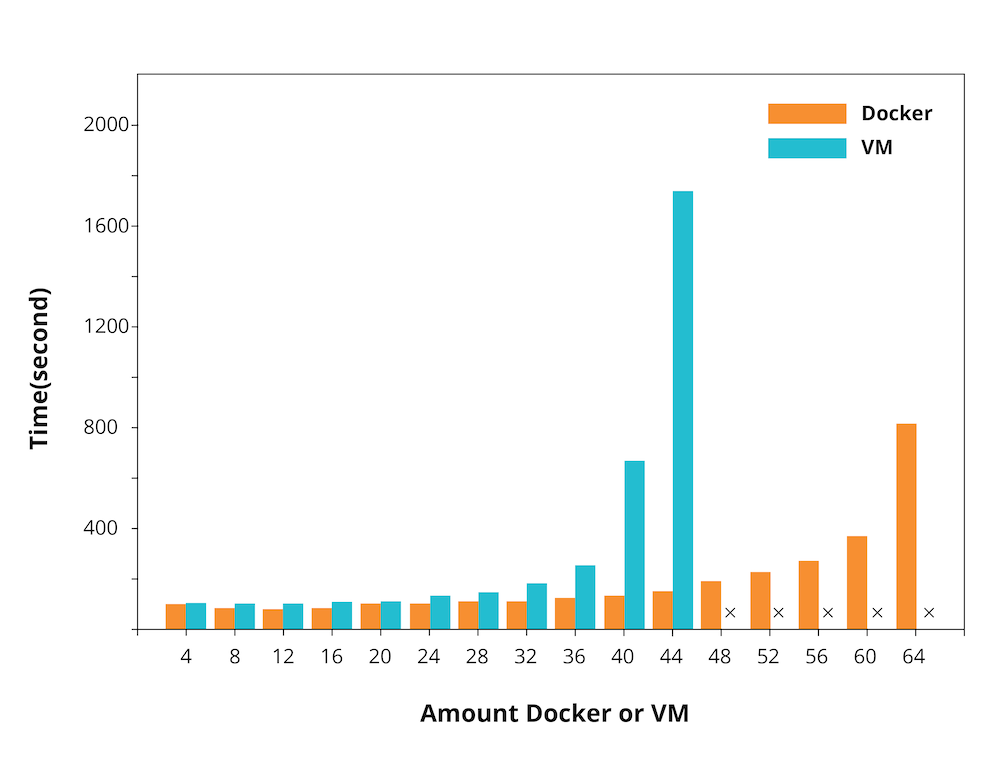

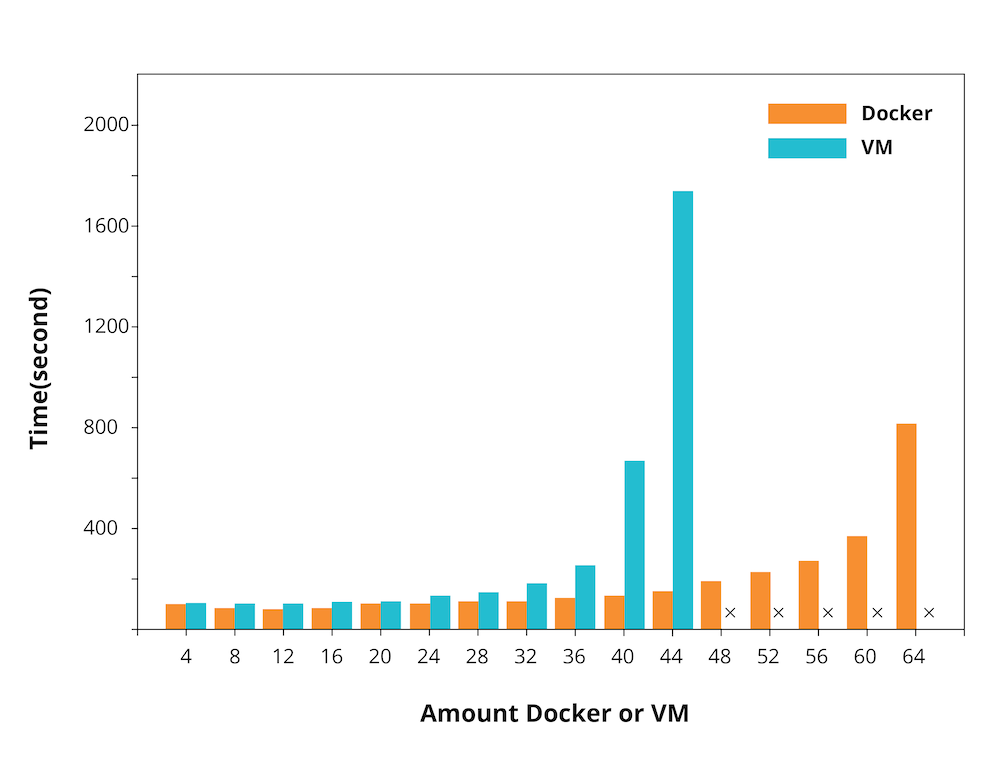

If you control 3,000 EC2 virtual machines, upgrading versions by rebuilding a phoenix environment is quite time-consuming, while logging into each machine and modifying its configuration in an automated fashion can be faster. Two pioneering DevOps products, Puppet and Chef, chose to ignore the elasticity of cloud platforms, partly because cloud was not yet mainstream at the time. Their recommended deployment practice was to update machines in production through a central configuration management server.

History has shown that when simplicity competes with efficiency, there is always an inflection point where efficiency eventually loses its appeal.

As our experience with cloud platforms grows, we understand more about elasticity. The simplicity, repeatability, and consistency that elasticity brings have gradually proven their worth. Our recommendation of Masterless Chef/Puppet on the Radar reflects this thinking.

Elasticity provides a unified model for software deployment and upgrades. It greatly simplifies both operations, eliminating the need for software deployment professionals. Through software definition and elasticity, IaC unified how hardware is deployed, which eventually led to the DevOps movement, an ongoing revolution in software delivery.

Virtual Image Management

Software developers have long understood the importance of change management. So when we began to think about managing hardware like software, as IaC suggests, we also needed to think about managing changes to the virtual images we use to deploy artifacts. Here, things were more complicated: virtual images are binary, so detecting changes isn’t straightforward.

Two traditional ways of managing virtual images

In traditional software, change management can be done through text comparison. For example, a version control system can compare source code and detect textual differences. Binary virtual images don’t allow such comparisons easily.

To get around this, a variety of practices were developed to manage images. In general, they can be divided into two categories: a basic image with scripts; and a final image with verification.

The first method kept the base image as minimal as possible. When a virtual machine started, a startup hook executed a script that installed the required software, and initialization was complete once the script finished.
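A minimal sketch of this first approach, under the simplifying assumption that an "install" just records a package name: the base image never changes, the versioned init script is the thing under change management, and every boot pays the cost of running the whole script. The command format and names (`base-minimal`, `app-2.0`) are hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical init script kept under version control; only this text
# changes between releases, while the base image stays the same.
INIT_SCRIPT = [
    "install jdk",
    "install app-2.0",
    "start app",
]


@dataclass
class VirtualMachine:
    image: str
    installed: set[str] = field(default_factory=set)
    steps_run: int = 0


def boot(image: str, init_script: list[str]) -> VirtualMachine:
    """Boot from a minimal base image, then let the startup hook run the script."""
    vm = VirtualMachine(image=image)
    for command in init_script:  # runs on every boot of every new VM
        action, _, target = command.partition(" ")
        if action == "install":
            vm.installed.add(target)
        vm.steps_run += 1
    return vm


vm = boot("base-minimal", INIT_SCRIPT)
print(sorted(vm.installed), vm.steps_run)
```

The `steps_run` counter stands in for startup latency: it grows with the script, which is exactly the slow-initialization trade-off of this approach.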

There are many advantages to this approach. Change management of the images was transformed into management of the image initialization scripts, so good practices such as version control and build pipelines could easily be applied to them. Only a few base images needed to be maintained, with all customization done in scripts. The approach struck a clear balance between centralized management and development team autonomy.

But it wasn’t a perfect approach, because virtual machine initialization was slow. The base image is minimal and lacks the software, tooling, and libraries needed to run the service, so a script has to install them and deploy the service at startup. Provisioning an environment this way takes time, which hurts most when you are scaling dynamically.

When fast startup was required, the second approach was used. It went as follows:

- create a new version of the service

- get a base image

- get a virtual machine running with the base image

- install everything needed in the virtual machine

- deploy the service

- create a new image from the virtual machine

- deliver the new image as the artifact
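The baking steps above can be sketched as a single function, assuming a deliberately simplified model in which an image is just the set of things installed on it. Recording the commit of the build code in the image (the hypothetical `source_commit` field) is one way to keep some traceability once the image is stored as an opaque binary.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Image:
    name: str
    contents: frozenset
    source_commit: str  # which commit of the build code produced this image


def bake_image(version: str, dependencies: list[str], source_commit: str,
               base: str = "base-minimal") -> Image:
    """Sketch of the baking steps: boot a base image, install, deploy, snapshot."""
    vm_contents = {base}                    # start a VM from the base image
    vm_contents.update(dependencies)        # install everything needed
    vm_contents.add(f"service-{version}")   # deploy the new service version
    # Snapshot the VM into a new, immutable image: the delivered artifact.
    return Image(f"service-{version}", frozenset(vm_contents), source_commit)


image = bake_image("2.0", ["jdk"], source_commit="abc123")
print(image.name, sorted(image.contents), image.source_commit)
```

All the installation work happens at bake time, so booting from the resulting image needs no further setup; the cost moves into managing many baked images instead.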

Since most installation and configuration work is done while building the image, no additional waiting is required at startup, which improves response speed and reduces the cost of elasticity.

The disadvantage of this practice was that it greatly increased the number of images to be managed. This is especially problematic when the entire IT department shares the same cloud platform: each team could have dozens of different images, resulting in hundreds of images that need to be managed simultaneously. These binary images cannot be compared using the diff command, so it was difficult to tell exactly how the images differed.

In addition, once an image is stored as a separate binary, the code used to build it is difficult to trace. In theory, tagging each image with the version of the configuration management code that built it provides some traceability. But beware: that information is likely to be incomplete, for example because of accidental manual modifications.

It’s also difficult to get an intuitive picture of what an image contains. Ultimately, we had to take a different route and manage images through test code: a server testing tool such as Serverspec can verify the contents of a binary image. As a result, the specification of the virtual machine became software code that can be managed.

These two practices have very different elasticity and management costs, so you need to consider your specific use case to decide which suits you best. Take Travis CI, a continuous integration service provider: it isn’t very sensitive to elasticity costs for its CI customers, but it is extremely sensitive to image management costs. Therefore, Travis CI would not adopt the second practice.

In terms of practical implementation, the first practice takes a code-centric view: the image is represented by the code that constructs it and is managed through that code. The second practice is image-centric, but it offers little support for managing the code behind each image.

A unified approach to image management

For a while, some thought was given to a third alternative, which would be the ideal way to manage images: keeping images, component code, and test code together in a version control tool. The idea was to provide a clear image format that could be stored in version control, which would let us extract diffs between images just as we do for code.

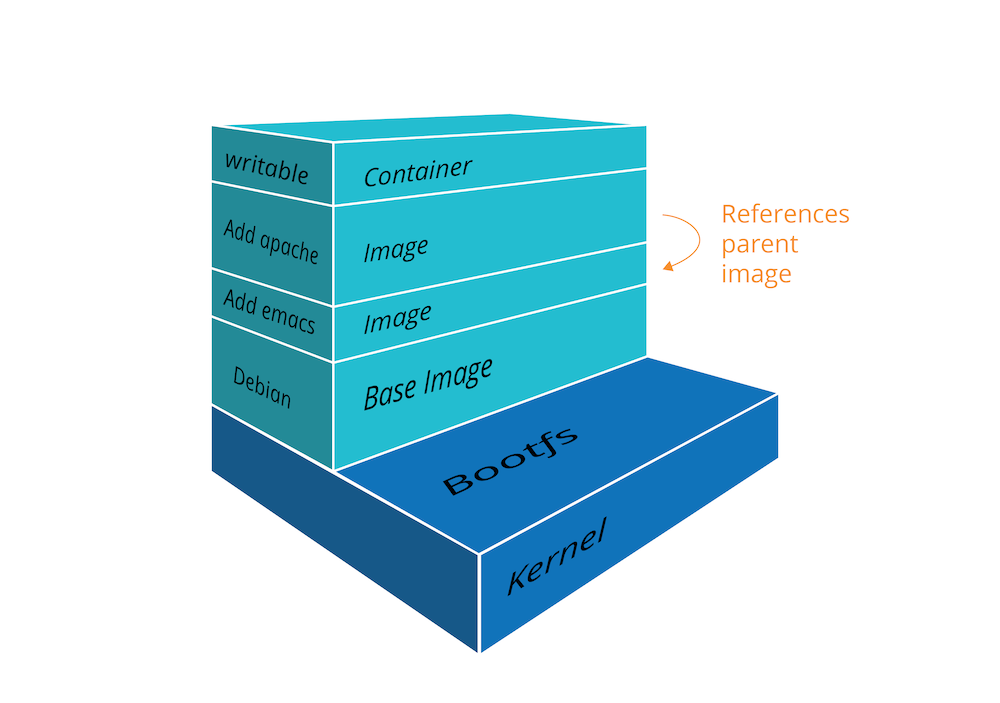

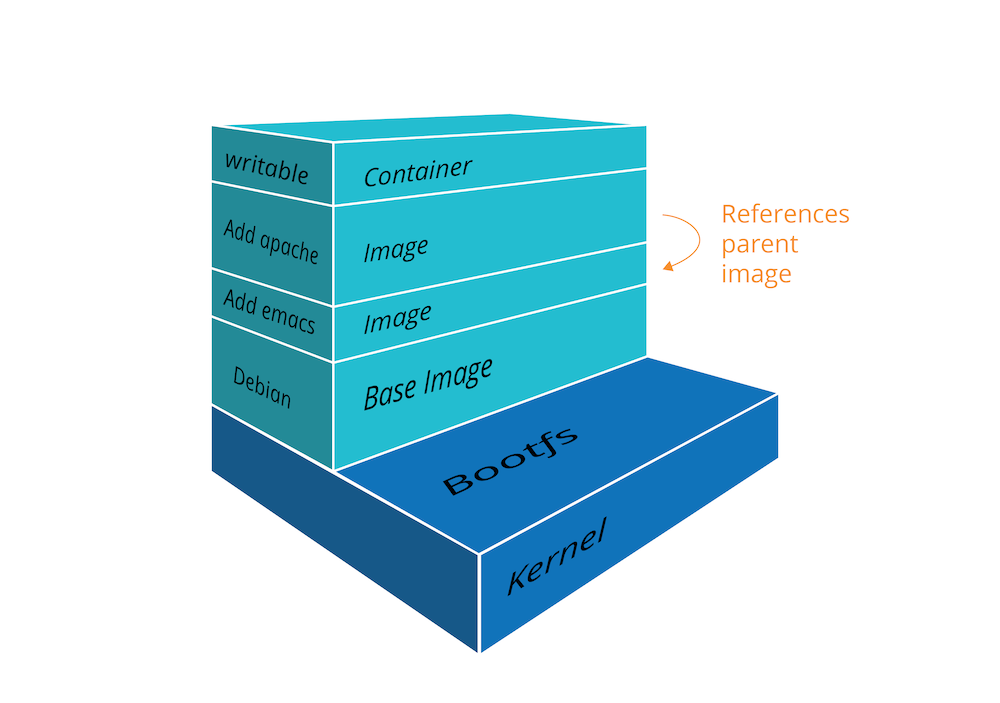

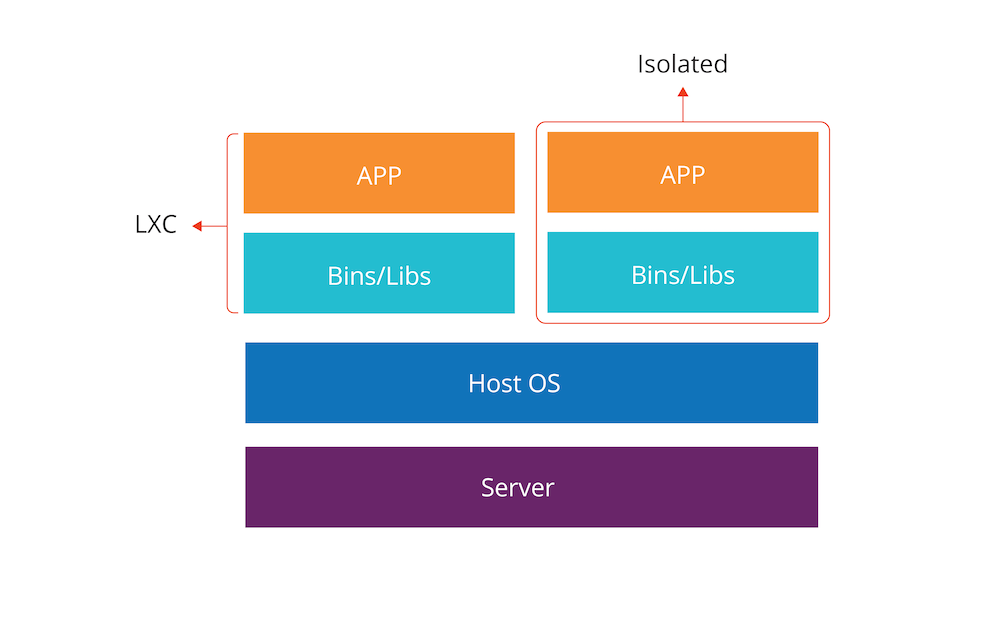

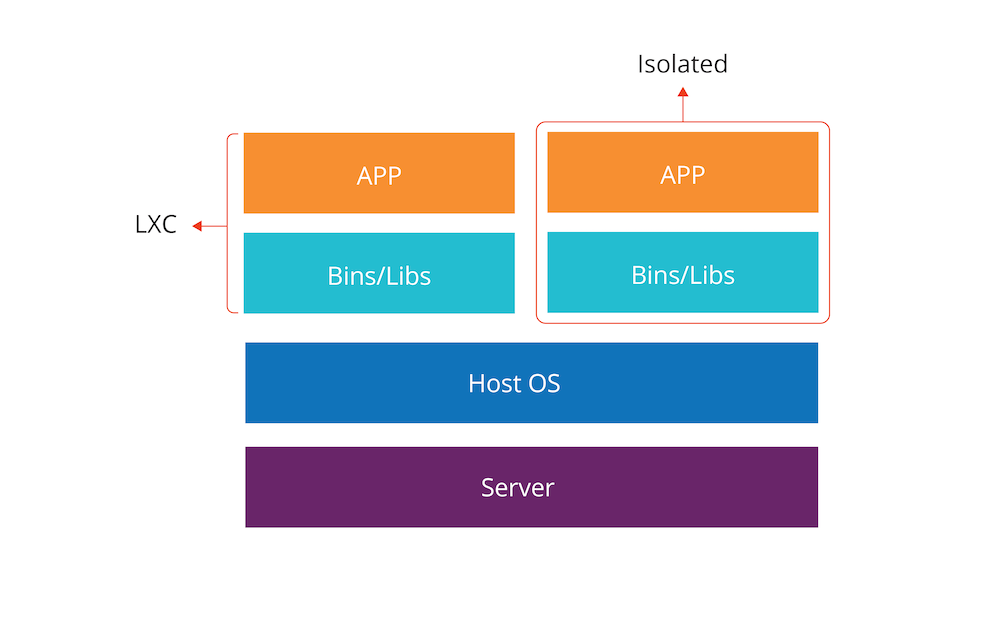

As it turns out, such a tool was never built, because Docker emerged. Originally built on Linux Containers (LXC), Docker was the final piece of the IaC puzzle: a version management tool that starts from an image or, seen the other way round, an image repository with version control capabilities.
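Why does a layered, text-defined image behave like version-controlled code? The sketch below is a simplified model, not Docker's actual digest algorithm (real layer digests hash the layer's filesystem contents). It chains each instruction to its parent so that two image definitions sharing a prefix share layer ids, loosely similar to how Docker's build cache reuses layers. The Dockerfile-like instructions are hypothetical.

```python
import difflib
import hashlib


def layer_ids(instructions: list[str]) -> list[str]:
    """Chain each instruction to its parent layer so identical prefixes share ids."""
    ids, parent = [], ""
    for instruction in instructions:
        parent = hashlib.sha256(f"{parent}\n{instruction}".encode()).hexdigest()[:12]
        ids.append(parent)
    return ids


# Hypothetical Dockerfile-like image definitions for two releases.
v1 = ["FROM base:1", "RUN install-jdk", "COPY app-1.0.jar /app.jar"]
v2 = ["FROM base:1", "RUN install-jdk", "COPY app-2.0.jar /app.jar"]

ids1, ids2 = layer_ids(v1), layer_ids(v2)
print("shared layers:", sum(a == b for a, b in zip(ids1, ids2)))

# Because the definition is text, ordinary diff tools work on it.
diff = list(difflib.unified_diff(v1, v2, "v1", "v2", lineterm=""))
print("\n".join(diff))
```

Only the last layer differs between the two releases, and the textual diff shows exactly which instruction changed, which is the property that binary VM images lacked.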

Rethinking containerization and cloud

Docker and LXC both bring a new perspective to the well-established practice of virtualization.

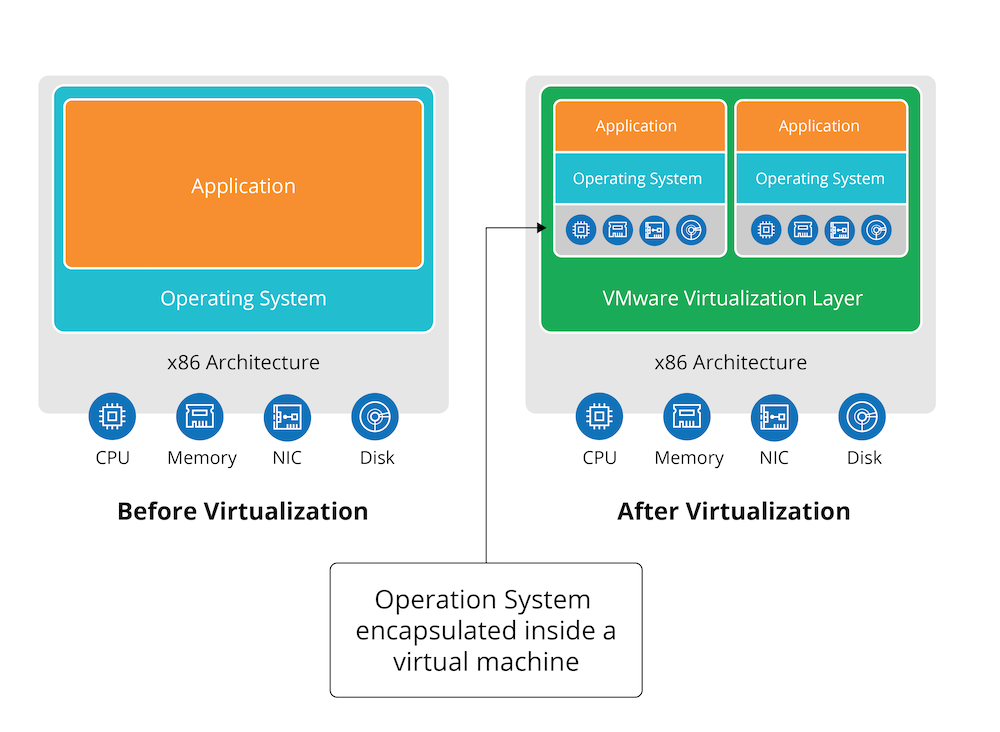

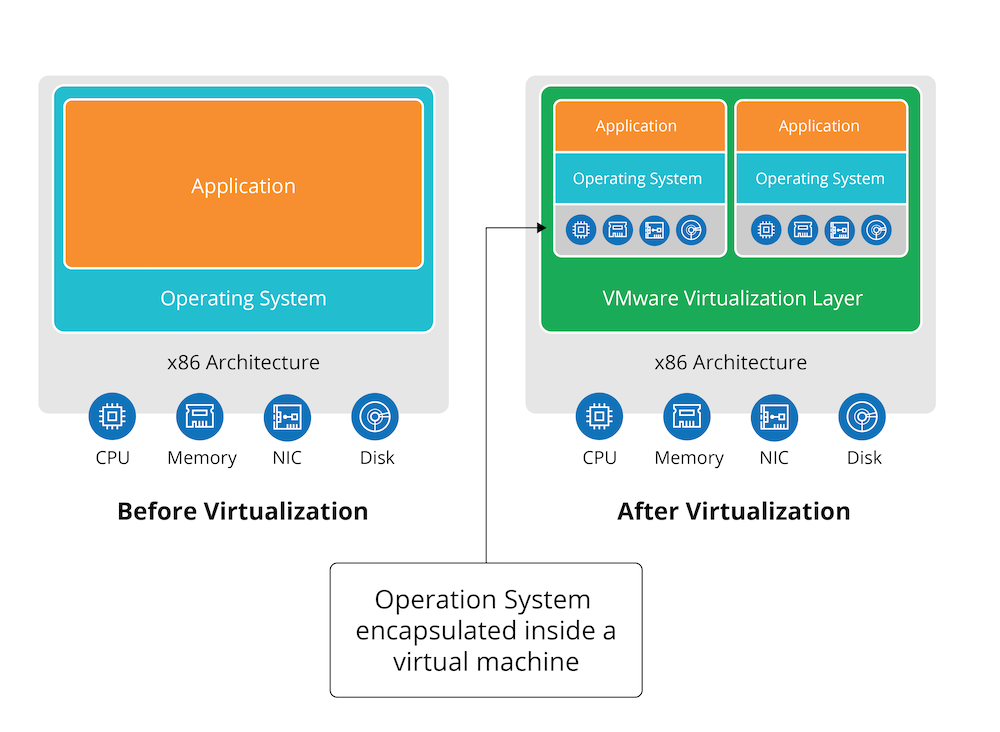

Virtualization and a new wave of containerization

Virtualization creates a complete machine through hardware simulation and runs an operating system on that machine. This inevitably incurs significant performance overhead. Although techniques such as paravirtualization let virtual machines bypass parts of the simulation and work more directly with the host, some additional overhead remains unavoidable.

Ultimately, virtual machine technology provides two things: independent deployment units and an isolation sandbox. The isolation sandbox means that a program or operating system crash in one virtual machine won’t spread to other virtual machines, and that one virtual machine's hardware device settings won’t affect the others.

LXC adopts this perspective: if equivalent isolation can be provided, it doesn’t matter whether a virtual machine is used. Containerization uses the operating system itself (on Linux, kernel features such as namespaces and cgroups) to provide containers whose sandbox isolation is comparable to that of a virtual machine, and builds lightweight virtualization on top of them.

Containerization heralds the cloud-native era

Containerization is in some ways similar to the SaaS model of cloud computing. We can treat each container as one of multiple tenants on the same computer. The isolation mechanisms provided by the operating system keep the containers isolated from each other, while each application running in a container can use all the computing resources available to it as if they were its own.

Through containerization, even a single machine can support the SaaS model of cloud computing. This blurs the difference between the cloud and a single server. Whether we have a network of hardware servers or a single server running multiple applications in multiple containers (or multiple instances of the same application), conceptually it hardly makes any difference.

For most of its history, cloud was seen as a derivative concept: you build a cloud on top of a set of servers, whereas servers are a native concept. But through the lens of IaC and elasticity, a cloud and a single server are the same concept; the only difference is the extent of their capabilities. At this point, cloud was no longer a derivative concept but a native one. Looking back from a cloud native perspective, a data center or a server is simply a cloud with limited elasticity. This marked our full entry into the cloud era: the cloud native era.

DCOS helps pave the way for successful private cloud

In the cloud native era, we can define and understand any computing platform from the perspective of cloud, that is, in terms of IaC (the software-based description of computing power and infrastructure), elastic capacity, and cost. Under this framework, a single server, a data center, or a public cloud platform can each be seen as a computing platform with a certain degree of elasticity.

In particular, lightweight containers, of which Docker containers are the best-known example, have huge advantages in terms of IaC and computing costs. In a very short time, they were adopted in many fields as the foundation of a new generation of cloud computing platforms. Organizations began revisiting the idea of private cloud data centers built on Docker, with data center operating systems (DCOS) at the core.

Before talking about DCOS, we need to understand port binding, a seemingly simple technology.

Port binding earns its stripes

Docker containers are isolated sandboxes, but to do useful work they need to collaborate, so they must be connected. For instance, your purchase management system will probably need to talk to your supplier API. How do we make configuration visible across sandboxes without compromising their isolation? There are three common approaches: a distributed configuration store such as etcd or ZooKeeper; dynamic DNS; and the simplest and most elegant solution, port binding.

Port binding relies on a locality assumption: all required external resources are conceptually local services, exposed to applications inside the container through local ports. For example, when accessing a database, the applications in the container don’t need to know the database's IP address or port number. Instead, they simply assume that the database is available on a local port, such as 8051, and connect to that port. When the container starts, a controller binds this port to another local container or to a server.

The advantage of port binding is that all backing services appear local, and the container scheduler is responsible for mapping each port to a specific service. This makes applications deployed in containers resemble processes in an operating system, and scheduling across physical machine boundaries resembles operating system scheduling.
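The division of responsibility in port binding can be shown with a toy model. In practice, traffic to the local port is forwarded by a proxy or network rules; here that forwarding is reduced to a lookup table owned by a hypothetical `Controller`, so we can see that application code only ever knows port 8051. The container name and addresses are made up for illustration.

```python
from dataclasses import dataclass, field

DB_PORT = 8051  # the only thing the application knows about its database


@dataclass
class Controller:
    """Hypothetical container controller that owns every container's port bindings."""
    bindings: dict = field(default_factory=dict)

    def bind(self, container: str, local_port: int, target: tuple[str, int]) -> None:
        self.bindings[(container, local_port)] = target

    def resolve(self, container: str, local_port: int) -> tuple[str, int]:
        return self.bindings[(container, local_port)]


def connect_to_database(controller: Controller, container: str) -> tuple[str, int]:
    # Application code: it only ever asks for its local DB_PORT.
    return controller.resolve(container, DB_PORT)


controller = Controller()
# Database running on the same machine, in another container.
controller.bind("orders-app", DB_PORT, ("127.0.0.1", 5432))
print(connect_to_database(controller, "orders-app"))
# Database moved to another machine: only the binding changes, not the app.
controller.bind("orders-app", DB_PORT, ("10.0.0.7", 5432))
print(connect_to_database(controller, "orders-app"))
```

Moving the database changes one binding in the controller and nothing in `connect_to_database`, which is the transparency that the DCOS discussion below builds on.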

Towards an OS for the data center

Can we treat the container as an enhanced process space, and the container scheduler as an operating system working on a group of physical machines? ("Containers are processes, PaaS is a machine, and microservice architecture is the programming model.") This is the origin of the data center operating system (DCOS).

DCOS offers transparent elastic scaling. For example, if the database and applications running on a single machine are separated onto two machines, the containers for the applications and the database don’t need to change. All that is needed is to bind the application's database port to the remote port when the container starts. This approach scales easily from a few machines to an entire data center, and a cloud computing platform can supply additional computing resources for DCOS to manage.

In addition, DCOS is a progressive solution, as it doesn’t require planning the capacity of the entire platform up front. It manages one machine and one hundred machines in the same way, and the deployment method remains the same from the first to the 500th day of operation. Some even claimed that "mesos/k8s (DCOS) is worthwhile even if you only have one machine".
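"It manages one machine and one hundred machines in the same way" can be illustrated with a toy scheduler. The sketch below uses first-fit placement, one of the simplest strategies; real schedulers such as those in Mesos or Kubernetes use far richer filtering and scoring. The `Node` type and the resource figures are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    free_cpu: float
    free_mem_gib: float
    containers: list[str] = field(default_factory=list)


def schedule(requests: list[tuple[str, float, float]], nodes: list[Node]) -> dict[str, str]:
    """First-fit placement of (name, cpu, mem_gib) container requests onto nodes."""
    placement = {}
    for name, cpu, mem in requests:
        for node in nodes:
            if node.free_cpu >= cpu and node.free_mem_gib >= mem:
                node.free_cpu -= cpu
                node.free_mem_gib -= mem
                node.containers.append(name)
                placement[name] = node.name
                break
        else:  # no node had room for this container
            raise RuntimeError(f"no node has capacity for {name}")
    return placement


requests = [("app", 1, 2), ("db", 2, 4)]
# The same call works for a one-machine "cluster"...
print(schedule(requests, [Node("node-0", 4, 8)]))
# ...and for a hundred machines.
print(schedule(requests, [Node(f"node-{i}", 2, 4) for i in range(100)]))
```

The deployment request is identical in both calls; only the pool of nodes differs, which is what makes the approach progressive.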

DCOS solved the problems of elastic scaling and high switching costs that plagued the first wave of private cloud. Enterprises could adopt DCOS as the basic deployment platform for specific applications and, once the system went live, gradually expand capacity as their elasticity needs grew.

This time, enterprises no longer pursued the cloud platforms with general-purpose computing capabilities that Amazon provides, but paid more attention to actual elasticity, and built private clouds based on key applications. This process is also known as private PaaS, or domain-specific PaaS.

Rise of PaaS platforms

Looking back on the development of cloud computing over the past decade, it may be reasonable to conclude that GAE, the pioneer of early cloud computing platforms, hasn’t received the attention it deserves. That’s partly because Google positioned GAE for developing specific types of web applications and offered no corresponding private deployment option, forcing companies into the dilemma of choosing either GAE or nothing. PaaS vendors such as Heroku have faced the same dilemma. Private PaaS solutions, such as Deis and Cloud Foundry, are rapidly maturing; even so, not all enterprises have the maturity to embrace private cloud.

Underpinned by the great success of DCOS in recent years, Mesos and Kubernetes have emerged as the prime choice of basic platform for the enterprise. Building PaaS platforms around applications has become the primary focus of cloud computing development, and the already thriving public cloud platforms have also introduced containerized elasticity solutions.

Today, we’ve reached the point where enterprises have finally found a satisfactory way to implement private clouds using third-party data center resources, while cloud offerings have evolved from basic computing resources to platform computing capabilities.

Along the way, cloud providers have developed their own areas of expertise. For example, Google Cloud Platform offers advanced machine learning capabilities through TensorFlow; Microsoft’s Azure platform offers image and language recognition; and AWS Lambda and serverless architecture promise continuously falling elasticity costs.

Hybrid cloud and polycloud have increasingly become essential strategies for IT organizations. Cloud computing is fulfilling its promise: making computing a basic utility, like water and electricity, accessible at low cost at any time. The way we deliver and operate software, and even our understanding of software and hardware, have changed dramatically in the process.

We hope that the brief review of cloud computing can help you better understand where this trend came from and where it will lead.