Serverless architecture and microservices are both very popular concepts these days, but can they go together? Could a microservice be deployed as a serverless function, or is it better to always use containers or dedicated virtual machines (VMs)?

The right answer, of course, is 'it depends' (on a number of variables) and 'it evolves' (over time, based on usage and performance). This article, part one of a two-part series, explores serverless functions as a deployment model: why you should consider them and when you should use them.

What are serverless functions?

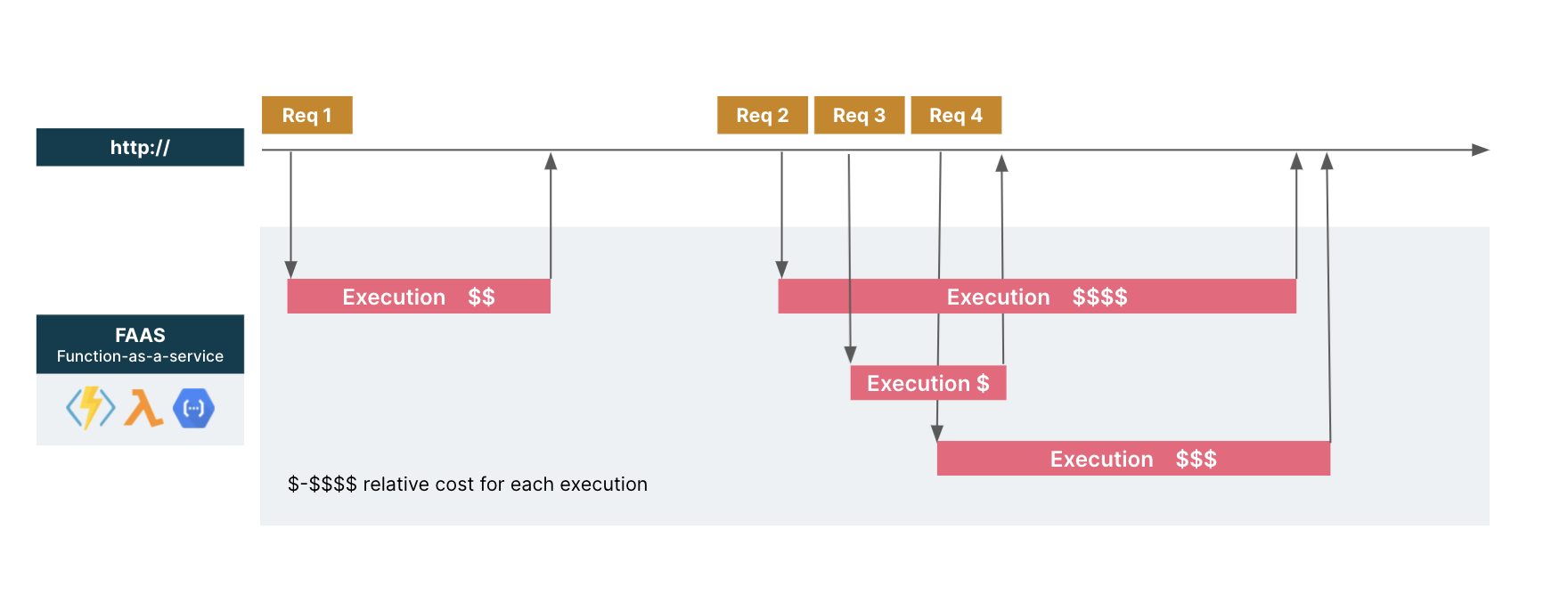

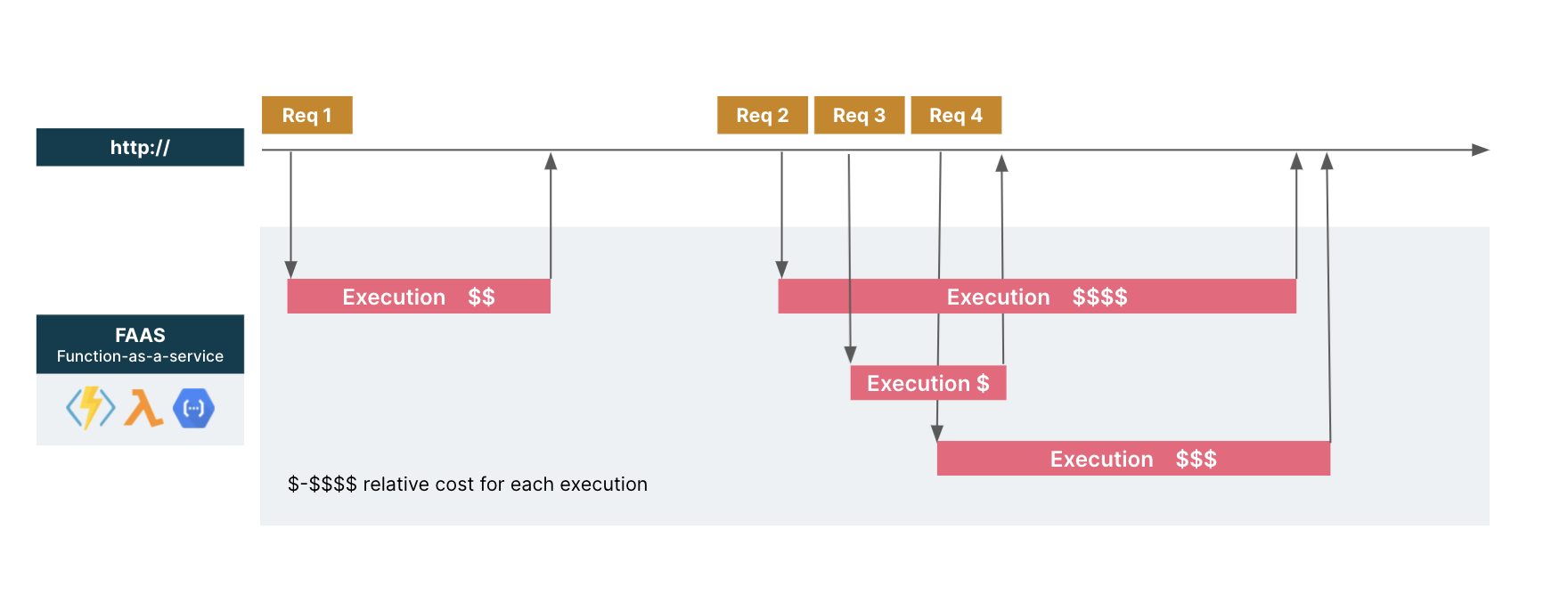

Serverless functions (also known as functions-as-a-service or FaaS) are units of logic that are instantiated and executed in response to configured events, such as HTTP requests or messages arriving on a Kafka topic. Once execution completes, the function disappears (at least logically) and its cost drops to zero.
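To make the event-driven model concrete, here is a minimal sketch of an HTTP-triggered function in Python, written against AWS Lambda's `(event, context)` handler convention. The `lambda_handler` name and the greeting logic are illustrative, and the event and response fields assume an API Gateway proxy integration. Note that the function owns no server or port: the platform invokes it once per event and may run many copies in parallel.

```python
import json


def lambda_handler(event, context):
    """Handle one HTTP request delivered by the platform as an event.

    Assumes the API Gateway proxy-integration event shape, in which query
    string parameters arrive under "queryStringParameters" (None if absent).
    """
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    # Return the response shape a proxy integration expects; the platform
    # turns it into the HTTP response, and then the execution ends.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local smoke test: in the cloud, the platform builds the event and calls the handler.
    print(lambda_handler({"queryStringParameters": {"name": "Ada"}}, None))
```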

FaaS responding to HTTP events: multiple parallel executions and a 'pay-per-use' model

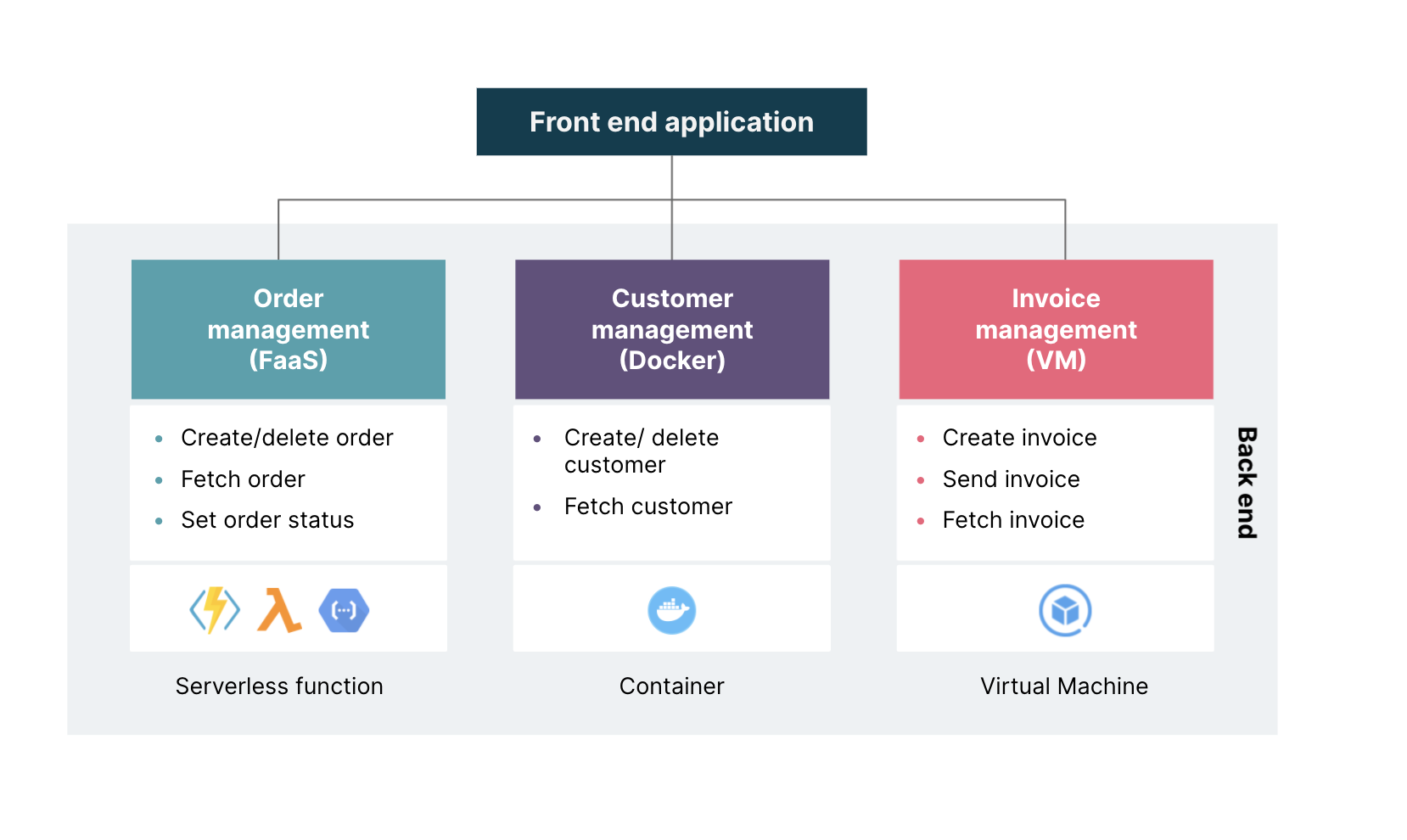

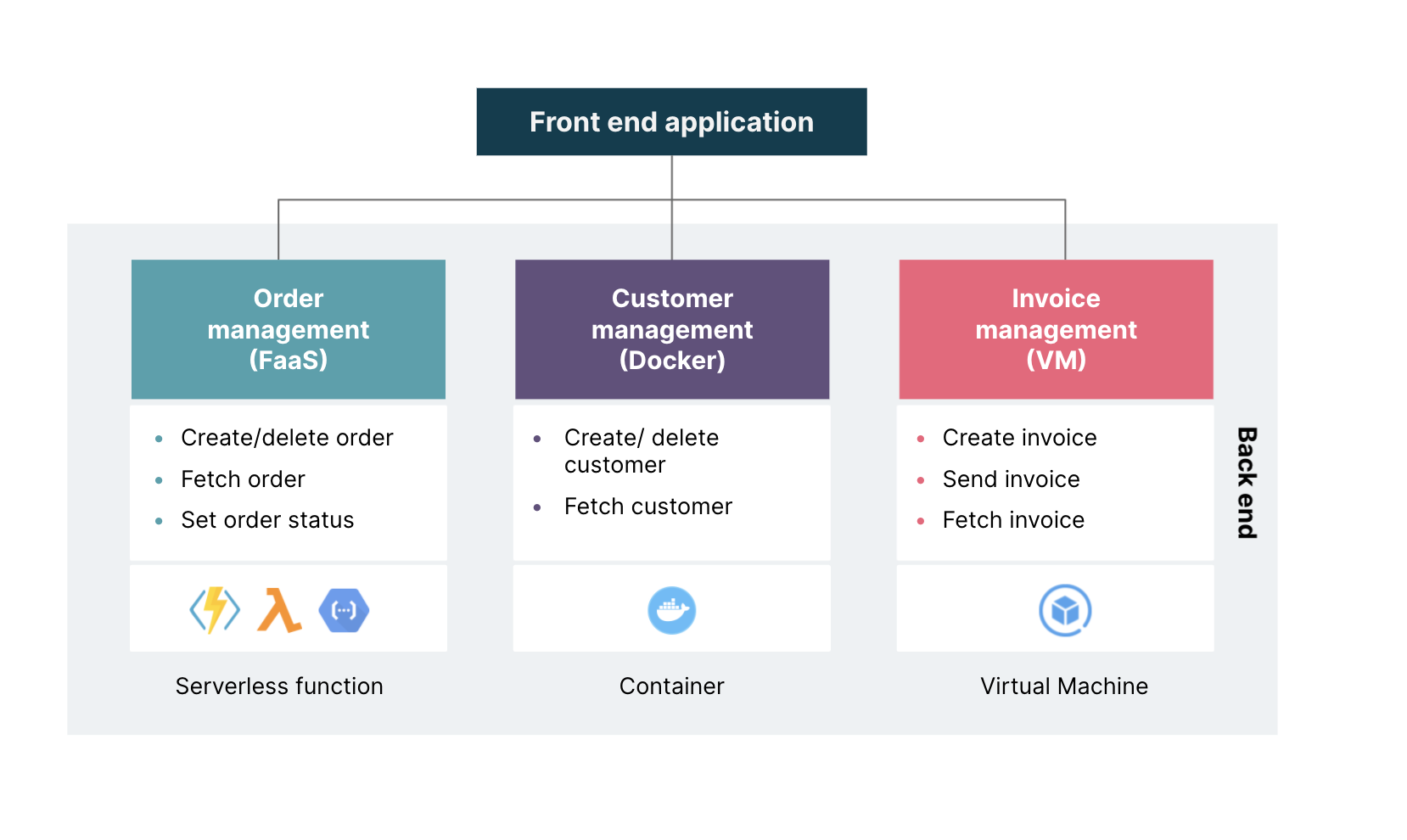

All major public clouds have FaaS offerings (AWS Lambda, Azure Functions, Google Cloud Functions, Oracle Cloud Functions), and FaaS can also be made available on premises with frameworks like Apache OpenWhisk. These offerings have resource limits (on AWS Lambda, for instance, a maximum of 10 GB of memory and 15 minutes of execution time), but they can support many use cases of modern applications at varying levels of granularity.

They can handle anything from a single, narrowly focused responsibility, such as sending an SMS message when an order is confirmed, to a full microservice running side by side with other microservices deployed on containers or virtual machines. For more on how FaaS and microservices relate, see Sam Newman's overview.

A front-end application served by microservices implemented with different deployment models

Why should app deployment teams consider FaaS?

The two main benefits of serverless functions or FaaS are elasticity and cost savings.

If a serverless function is called by 10 clients at the same time, 10 instances of it are spun up almost immediately (in most cases). Provisioning and managing the infrastructure, high availability (up to a certain level), and scaling (from zero up to the limits you configure) are all provided out of the box by specialists working behind the scenes. This is elasticity on steroids, and it lets you focus on what differentiates your business!
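The scale-out behaviour described above can be illustrated with a toy model: every in-flight request occupies its own instance, an instance is reused once its request finishes, and requests arriving while the concurrency limit is reached are throttled. `simulate_scale_out` and its `concurrency_limit` parameter are illustrative names; real platforms also keep idle instances warm for a while and apply their own burst rules, which this sketch ignores.

```python
import heapq


def simulate_scale_out(requests, concurrency_limit):
    """Return (peak_instances, throttled) for requests given as (start_s, duration_s).

    Toy model: each in-flight request needs its own instance, an instance is
    freed as soon as its request finishes, and a request arriving while
    `concurrency_limit` instances are busy is rejected (throttled).
    """
    running = []  # min-heap of end times of in-flight executions
    peak = 0
    throttled = 0
    for start, duration in sorted(requests):
        # Free every instance whose execution finished by this arrival time.
        while running and running[0] <= start:
            heapq.heappop(running)
        if len(running) >= concurrency_limit:
            throttled += 1
            continue
        heapq.heappush(running, start + duration)
        peak = max(peak, len(running))
    return peak, throttled


if __name__ == "__main__":
    burst = [(0.0, 0.5)] * 10                      # 10 clients at the same moment
    spread = [(float(i), 0.5) for i in range(10)]  # one request per second
    print(simulate_scale_out(burst, concurrency_limit=1000))   # (10, 0)
    print(simulate_scale_out(burst, concurrency_limit=5))      # (5, 5)
    print(simulate_scale_out(spread, concurrency_limit=1000))  # (1, 0)
```

The burst scales straight out to 10 instances, while the same 10 requests spread over 10 seconds never need more than one.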

When serverless functions are idle, they cost nothing (because you pay per use), potentially delivering significant cost savings over containers or VMs. We say 'potentially' because not all situations are the same: adopting serverless functions across the board without careful consideration might end up costing you more money.

When should app teams use (or not use) FaaS?

When launching a new service, a FaaS model is likely the best choice you have because:

Serverless functions can be set up quickly, minimizing infrastructure work.

The pay-per-use model needs no upfront investment.

Automatic scaling keeps response times consistent under varying load conditions.

If, over time, the load becomes more stable and predictable, you should reconsider serverless functions: for stable, predictable workloads, they can be expensive compared with the alternatives.

Let us explore this using the AWS price list at the time of writing.

Consider an application that receives 3,000,000 requests per month. Each request takes 500 ms to be processed by a Lambda function with 4 GB of memory (Lambda allocates CPU automatically in proportion to the memory configured). Under FaaS pay-per-use pricing, the monthly cost depends only on the number and duration of requests, not on the shape of the load curve (peaky or flat): $100.60.
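The $100.60 figure can be reproduced from Lambda's two pricing dimensions: compute time, billed in GB-seconds, and the number of requests. The prices below are assumed x86 list prices ($0.0000166667 per GB-second, $0.20 per million requests), and the sketch ignores the free tier and volume discounts, so check the current AWS price list before relying on it. `lambda_monthly_cost` is an illustrative name. Note that it takes no parameter describing *when* the requests arrive: under pay-per-use, peaky and flat loads cost the same.

```python
# Assumed AWS Lambda x86 list prices; verify against the current price list.
PRICE_PER_GB_SECOND = 0.0000166667
PRICE_PER_MILLION_REQUESTS = 0.20


def lambda_monthly_cost(requests, duration_s, memory_gb):
    """Monthly Lambda cost in USD, ignoring the free tier and volume tiers."""
    compute = requests * duration_s * memory_gb * PRICE_PER_GB_SECOND
    invocations = requests / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    return compute + invocations


if __name__ == "__main__":
    # 3,000,000 requests x 0.5 s x 4 GB = 6,000,000 GB-seconds
    print(f"${lambda_monthly_cost(3_000_000, 0.5, 4):.2f}")  # $100.60
```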

With VMs or containers, by contrast, the price depends heavily on the shape of the load curve. Let's explore two scenarios.

With load peaks. If the load is characterized by peaks and we want to guarantee consistently good response times for our clients, the infrastructure must be able to sustain the peak workload. Let's assume that at peak we receive 10 requests per second (possible if the 3,000,000 requests are concentrated within certain hours of the day, or on certain days such as month end).

In this case, you might need a VM (AWS EC2) with 8 CPUs and 32 GB of memory to match Lambda's performance. All else being equal, that VM would cost $197.22 per month, roughly double what you would pay with FaaS.

With a flat load and no peaks, the load is easy to sustain: a VM with 2 CPUs and 8 GB of memory would suffice. The monthly cost in this case would be $31.73, less than a third of the cost of Lambda.
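Why the two scenarios call for such different VMs can be estimated with Little's law: the average number of requests in flight equals the arrival rate times the time each request spends in the system. The sketch assumes a 30-day month and the 500 ms processing time from the example; `in_flight` is an illustrative name.

```python
SECONDS_PER_MONTH = 30 * 24 * 3600  # assumes a 30-day month
DURATION_S = 0.5                    # processing time per request, from the example


def in_flight(rate_per_s, duration_s):
    """Little's law: average requests in flight = arrival rate x time in system."""
    return rate_per_s * duration_s


if __name__ == "__main__":
    flat_rate = 3_000_000 / SECONDS_PER_MONTH  # ~1.16 requests/s if spread evenly
    peak_rate = 10                             # requests/s at the assumed peak
    print(f"flat load: {in_flight(flat_rate, DURATION_S):.2f} requests in flight")  # 0.58
    print(f"peak load: {in_flight(peak_rate, DURATION_S):.2f} requests in flight")  # 5.00
```

Sizing for the peak means provisioning for roughly 8.6 times the average load, capacity that sits idle for most of the month. Pay-per-use pricing avoids paying for that idle headroom.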

A realistic business case is much more complex and needs a thorough analysis. But even these simplified scenarios make it clear that a FaaS model can be very attractive under one load profile and a burden under another, and that the right choice can change as expectations about the load evolve.
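One way to make this trade-off concrete is a break-even calculation: the monthly request volume at which Lambda's usage-based bill equals a VM's fixed monthly price. The sketch reuses the assumed Lambda list prices and the VM prices quoted above (`break_even_requests` is an illustrative name), and it assumes the VM can actually sustain the load at every volume considered, which real capacity limits will not always allow.

```python
PRICE_PER_GB_SECOND = 0.0000166667  # assumed Lambda x86 list price
PRICE_PER_MILLION_REQUESTS = 0.20   # assumed Lambda request price


def lambda_cost_per_request(duration_s, memory_gb):
    """USD per invocation: compute (GB-seconds) plus the per-request charge."""
    return duration_s * memory_gb * PRICE_PER_GB_SECOND + PRICE_PER_MILLION_REQUESTS / 1_000_000


def break_even_requests(vm_monthly_cost, duration_s, memory_gb):
    """Requests per month above which a fixed-price VM is cheaper than Lambda."""
    return vm_monthly_cost / lambda_cost_per_request(duration_s, memory_gb)


if __name__ == "__main__":
    for label, vm_cost in [("2 CPU / 8 GB VM", 31.73), ("8 CPU / 32 GB VM", 197.22)]:
        n = break_even_requests(vm_cost, 0.5, 4)
        print(f"{label}: break-even at about {n / 1e6:.2f} million requests/month")
```

The break-even points come out at roughly 0.95 million and 5.9 million requests per month. At 3,000,000 requests, the small flat-load VM is past its break-even point, so it is cheaper than Lambda, while the peak-sized VM is well below its break-even point, so it is more expensive, which matches the two scenarios above.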

This means that application teams need to build in the flexibility to change the deployment model as the context evolves. As with everything else, flexibility comes at a price.

In the second part of this two-part series, we explore how you can achieve this flexibility without driving up your costs.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.