Recently we wrote about the importance of explainability in machine learning projects. In this post we delve deeper and explore how it can actually be done by data scientists, researchers, and others.

The use of algorithms to automate or delegate decision-making is increasing across a diverse range of domains. Sometimes this is driven by a desire to cut costs; occasionally it comes from a belief that algorithms are more "objective" and "unbiased" than people. This belief is false: algorithms are only as good and fair as the data used to train them and the cost functions they were taught to optimize.

As we increasingly delegate important tasks to machines, it’s important to remain sensitive to what algorithms can and cannot do. This matters most when they are used to make decisions that could seriously affect the lives of many people. If we create a learning algorithm that blindly copies historic data, it’s highly likely to replicate the biases and unjust practices of the environment from which the data emerged.

What can we do in practice to avoid these issues? How can a team of data scientists or machine learning engineers, perhaps with limited data and domain knowledge, make sure this doesn’t happen?

There are multiple aspects to creating a fair model:

- Data: Make sure the sample data is an accurate representation of the population. In cases where the actual population itself is biased due to pre-existing unfair practices, consider altering the data or the sampling technique to remove the bias. For example, if you are automating a hiring process and the people hired historically reflect biased decisions, the data needs to be altered so that this bias isn’t transferred into the new automated hiring system.

- Problem formulation: Make sure the mathematical problem statement being solved matches the actual business problem and not an unfair or inaccurate proxy of it. A good example of this is IMPACT, a teacher assessment tool developed in 2007 in Washington, D.C. It was supposed to use data to weed out low-performing teachers. But ‘low-performing’ was initially defined by the grades of the students taught by these teachers. This led to many unfair instances of good teachers of students with special educational needs being fired.

- Explainability: Even if a model is created with due consideration to the two aspects above, stakeholders and consumers can verify if the model is robust and unbiased only if it is explainable — in other words, only if they understand it can accountability be established and clarified.
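As a rough illustration of the data point above, the sketch below compares how often each group appears in a sample with its share of the population. The group names, the sample, and the population shares are all hypothetical. Note that a check like this only catches sampling mismatch; it cannot detect bias that already exists in the population itself.

```python
from collections import Counter


def group_shares(groups):
    """Share of each group label in a list of labels."""
    counts = Counter(groups)
    total = len(groups)
    return {group: count / total for group, count in counts.items()}


def representation_gaps(sample_groups, population_shares):
    """Sample share minus population share, per group (positive = over-represented)."""
    sample_shares = group_shares(sample_groups)
    return {
        group: sample_shares.get(group, 0.0) - share
        for group, share in population_shares.items()
    }


# Hypothetical data: 70 records from group_a and 30 from group_b,
# drawn from a population that is assumed to be split evenly.
sample = ["group_a"] * 70 + ["group_b"] * 30
population_shares = {"group_a": 0.5, "group_b": 0.5}

gaps = representation_gaps(sample, population_shares)
for group, gap in gaps.items():
    print(f"{group}: {gap:+.2f}")
```

A large gap is a prompt to revisit how the sample was collected, not proof of unfairness on its own.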

Although all three are important, the final point - explainability - is often overlooked. One of the reasons for this is that it is the most challenging as it places additional demands on people using algorithms. This article focuses on explainability; first it provides a brief recap before then exploring how we can incorporate explainability into a machine learning model.

What is Explainability?

Explainability is the extent to which humans can understand (and thus explain) the output of a model. This is in contrast to the concept of ‘black box’ models, where even the designers do not understand the inner workings of a trained model, and thus cannot explain the results produced by it.

Models can be inherently easy-to-explain/transparent or difficult-to-explain/opaque. Linear regression, logistic regression, K-means clustering and decision trees are examples of inherently easy-to-explain/transparent models. In contrast, deep learning, random forests, and topic modeling are difficult-to-explain/opaque models.
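To make "transparent" concrete, here is a minimal sketch of a logistic regression prediction worked through step by step. The feature names, coefficients, and applicant values are hypothetical and chosen only for illustration; the point is that every step is simple arithmetic a person could reproduce by hand.

```python
import math

# Hypothetical coefficients of an already-trained logistic regression.
INTERCEPT = -1.0
COEFFICIENTS = {"years_experience": 0.5, "num_certifications": 0.25}


def explain_prediction(features):
    """Return the predicted probability and each feature's contribution to the log-odds."""
    contributions = {
        name: COEFFICIENTS[name] * value for name, value in features.items()
    }
    log_odds = INTERCEPT + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-log_odds))
    return probability, contributions


applicant = {"years_experience": 4, "num_certifications": 2}
probability, contributions = explain_prediction(applicant)

for name, value in contributions.items():
    print(f"{name}: {value:+.2f} to the log-odds")
print(f"predicted probability: {probability:.3f}")
```

Because each contribution is just a coefficient multiplied by a feature value, a reviewer can see exactly which inputs pushed the prediction up or down.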

Making inherently opaque models explainable

For transparent models, it is possible to directly understand the mathematical steps involved in computing the output, so that the computation can be simulated by a human. For inherently opaque models, additional techniques are needed to improve explainability. The results of these techniques can be evaluated using the following criteria:

- Comprehensibility: The extent to which they are comprehensible or understandable to humans.

- Fidelity: The extent to which they accurately represent the original opaque models.

- Accuracy: Their ability to make accurate predictions on previously unseen cases.

- Scalability: Their ability to scale to models with high complexity, for example in the dimensionality of the input and output spaces.

- Generality: The extent to which the technique applies to a broad set of models rather than only a specific subset.
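The difference between the fidelity and accuracy criteria above can be sketched in a few lines. The labels and predictions here are made up for illustration: fidelity compares the explanation (surrogate) model with the opaque model, while accuracy compares it with the true labels.

```python
def agreement(predictions_a, predictions_b):
    """Fraction of cases on which two sequences of labels agree."""
    matches = sum(a == b for a, b in zip(predictions_a, predictions_b))
    return matches / len(predictions_a)


# Hypothetical held-out cases: the true labels, the opaque model's predictions,
# and the predictions of a simpler surrogate model built to explain it.
true_labels           = [1, 0, 1, 1, 0, 0, 1, 0, 1, 0]
opaque_predictions    = [1, 0, 1, 1, 0, 1, 1, 0, 1, 0]
surrogate_predictions = [1, 0, 1, 0, 0, 1, 1, 0, 1, 0]

fidelity = agreement(surrogate_predictions, opaque_predictions)
accuracy = agreement(surrogate_predictions, true_labels)

print(f"fidelity to the opaque model: {fidelity:.2f}")
print(f"accuracy on true labels:      {accuracy:.2f}")
```

A surrogate can score well on one measure and poorly on the other, which is why the two are listed as separate criteria.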

The techniques used to improve explainability of opaque models are called post-hoc explainability techniques. These techniques use various means to improve explainability:

- Textual explanations address the explainability problem by learning to generate text that helps illustrate the results of the model, or by generating symbols that represent how the model functions. For example: explaining the outputs of a visual system.

- Visual explanations aim to visualize the model’s behavior. Many of the visualization methods in the literature are paired with dimensionality reduction techniques, which allow for a simple, human-interpretable visualization. Visualizations may be coupled with other techniques to make them easier to understand, and are considered the most suitable way to introduce complex interactions between the model’s variables to users who are not acquainted with ML modeling.

- Local explanations tackle explainability by segmenting the solution space and explaining less complex solution subspaces that are relevant for the whole model.

- Explanations by example center on extracting representative examples that capture the inner relationships and correlations found by the model being analyzed.

- Explanations by simplification are techniques in which a whole new system is built based on the trained model to be explained. This new, simplified model usually tries to resemble the behavior of the original as closely as possible while reducing complexity and keeping a similar performance score. A good example of this is LIME.

- Feature relevance explanations explain the inner functioning of a model by computing a relevance score for each of its input variables. These scores quantify how sensitive the model’s output is to each feature. Comparing the scores across variables reveals how much importance the model gives to each of them when producing its output.
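As one simple instance of a feature relevance explanation, the sketch below uses permutation importance: shuffle one feature's values and measure how much the model's accuracy drops. This is only one of several possible scoring techniques, and the model, feature names, and data are hypothetical stand-ins.

```python
import random


def model(row):
    """Hypothetical opaque model: in practice we could only call it, not read it."""
    income, shoe_size = row
    return 1 if income > 50 else 0


def accuracy(rows, labels):
    """Fraction of rows for which the model's prediction matches the label."""
    return sum(model(row) == label for row, label in zip(rows, labels)) / len(rows)


def permutation_importance(rows, labels, feature_index, repeats=5, seed=0):
    """Average drop in accuracy when one feature's column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(rows, labels)
    drops = []
    for _ in range(repeats):
        column = [row[feature_index] for row in rows]
        rng.shuffle(column)
        shuffled_rows = [
            row[:feature_index] + (value,) + row[feature_index + 1:]
            for row, value in zip(rows, column)
        ]
        drops.append(baseline - accuracy(shuffled_rows, labels))
    return sum(drops) / repeats


# Hypothetical data: (income, shoe_size) rows, labelled with whether a loan was granted.
rows = [(20 + 5 * i, 36 + i % 10) for i in range(20)]
labels = [1 if income > 50 else 0 for income, _ in rows]

importance_income = permutation_importance(rows, labels, feature_index=0)
importance_shoe_size = permutation_importance(rows, labels, feature_index=1)

print(f"income:    {importance_income:.2f}")
print(f"shoe_size: {importance_shoe_size:.2f}")
```

Shuffling a feature the model ignores leaves accuracy unchanged, so its score is zero, while shuffling a feature the model relies on makes accuracy fall.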

Explainability in the case of unsupervised algorithms

Though explainable ML has become a very hot research topic, the majority of the work has been focused on supervised machine learning methods. However, often due to a lack of labeled data, or due to the nature of the problem itself, unsupervised approaches are required.

There is a further challenge: in the current literature, very little work has been done on explainable unsupervised ML algorithms. Explaining the results of unsupervised models is mostly limited to visual explanations, for example pyLDAvis for topic modeling, or dimensionality reduction plus a scatter plot for clustering problems. There is some interesting ongoing work on improving the explainability of unsupervised learning.

Accuracy vs explainability

If explainability is necessary for the application, as is the case with applications that could possibly negatively affect a large number of people, it is best to stick to models that are inherently transparent. But if these models are unusable due to very low accuracy, or if acceptable accuracy can be achieved only by increasing the complexity (to a level where it is not transparent anymore), then opaque models can be considered, along with some of the above techniques to improve explainability.

Explainability in the case of ensemble models — a sample scenario

In this section, we’ll look at an example scenario in which a data scientist (Neha), who was working with the NGO Women at the Table, used explainability in their machine learning research project. Neha wanted to explore the content of speeches made in United Nations conferences to understand what topics were covered and by whom. To do this, Neha converted speech audio to text, and then performed topic modeling on the text data. Once this was done she then used the most relevant words of each topic to map/classify the topic to one of the k user-given themes.

As you might be able to see, there are two critical steps in the model’s decision-making process: first a topic modeling step and then a classification of each topic to one of the themes. Since the “decision” of the model wasn’t going to have any large-scale negative impact, Neha decided to give priority to model performance. Based on a combination of manual verification of performance and quantitative measures like coherence score, Neha decided to use Latent Dirichlet Allocation (LDA) for topic modeling, and a simple cosine similarity between word embeddings for classification. The word embeddings were generated using a pre-trained natural language processing model like BERT.
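The classification step can be sketched as follows. This is not Neha's actual code: the three-dimensional vectors are made-up stand-ins for real embeddings (which typically have hundreds of dimensions), the theme names are invented, and representing a topic by a single vector, such as the mean of its top words' embeddings, is an assumption made here for illustration.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Hypothetical embeddings for three user-given themes.
theme_embeddings = {
    "climate": [0.9, 0.1, 0.0],
    "trade": [0.1, 0.9, 0.1],
    "health": [0.0, 0.2, 0.9],
}

# Hypothetical embedding for one topic (assumed: mean of its top words' embeddings).
topic_embedding = [0.8, 0.3, 0.1]

scores = {
    theme: cosine_similarity(topic_embedding, embedding)
    for theme, embedding in theme_embeddings.items()
}
best_theme = max(scores, key=scores.get)

for theme, score in sorted(scores.items(), key=lambda item: -item[1]):
    print(f"{theme}: {score:.3f}")
print(f"assigned theme: {best_theme}")
```

Keeping the full table of scores, rather than only the winning theme, is what later makes it possible to show an audience why a topic landed where it did.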

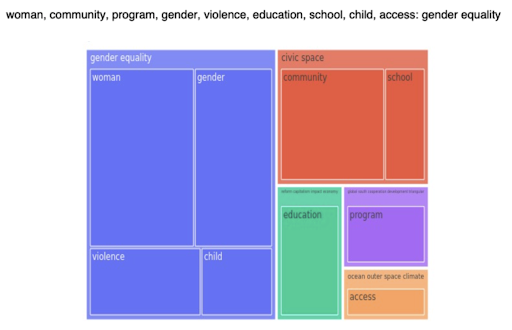

Even though this is a very simple task, it’s nevertheless unclear how the final output (the proportion of speaking time in each topic) is generated. This makes it difficult to evaluate the model and gather feedback from the audience. For example, in some instances, conference organizers might expect ‘gender equality’ to show up as a topic: they know that many speakers will have covered it because it is an agenda item. Despite this, gender isn’t a topic in the final graph. It’s only by making each step of the process visible that it becomes possible to appreciate why this might be the case.

Since the audience isn’t technical and there are multiple steps involved — including unsupervised modeling — Neha uses visual explanations to break down the process and show intermediate results. She uses pyLDAvis (a Python library that helps you create interactive visualizations for clusters that are created through LDA) for topic modeling and tree maps (which are useful for visualizing data trees, or hierarchical data) to illustrate how topic words are mapped to user-given conference themes.

Cheat sheet (of methods/tools to improve transparency of ML tasks)

As we’ve discussed elsewhere, the techniques needed for explainability — and the effort needed to implement it — vary by the type of algorithm you are using.

Below you’ll find a cheat sheet that provides an overview of what is needed depending on the algorithm being used.

| Algorithm | Tools/techniques | Comments |
| --- | --- | --- |
| Linear/logistic regression | Variables and dimensionality should be fairly simple here, which means there's no need for additional explainability work. | Transparent provided similarity measures are simple enough to be understood by humans. |
| Decision trees | As above. | As above. |
| K-nearest neighbors | As above. | As above. |
| Generalized additive models | As above. | As above. |
| Bayesian networks | As above. | As above. |
| Tree ensembles (random forest; gradient boosting) | inTrees; rule extraction | inTrees works through simplification; rule extraction is about making explicit the rules the algorithm has learned (e.g. for 80% of training data, income > 300k implies loan granted). |
| Multi-layer neural networks | DeepRED | Through simplification. |
| Model agnostic | LIME; SHAP; Anchors | Local explanation using linear approximation (LIME); feature relevance explanations (SHAP); local explanation (Anchors). |
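To give a feel for what an extracted rule looks like in practice, here is a small sketch that checks a candidate rule of the form "income > threshold implies loan granted" against a set of records. The records and the threshold are hypothetical, and this is not how inTrees or any specific rule extraction library works internally; it only shows how the coverage and reliability of a single rule might be reported.

```python
# Hypothetical records: (income in thousands, whether the model granted the loan).
records = [
    (350, True), (420, True), (310, True), (500, True), (305, False),
    (120, False), (90, False), (250, True), (280, False), (60, False),
]


def evaluate_rule(records, threshold):
    """Check the rule 'income > threshold implies loan granted' against the records."""
    covered = [granted for income, granted in records if income > threshold]
    coverage = len(covered) / len(records)  # share of records the rule applies to
    # Share of covered records for which the rule's conclusion holds.
    precision = sum(covered) / len(covered) if covered else 0.0
    return coverage, precision


coverage, precision = evaluate_rule(records, threshold=300)
print(f"rule applies to {coverage:.0%} of records and holds for {precision:.0%} of those")
```

Reporting both numbers matters: a rule that always holds but covers only a handful of cases explains very little of the model's behavior.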

Final thoughts

Explainability is a very important aspect of machine learning, especially in scenarios where the decisions made by the model could negatively affect many people. In such situations, it is best to use inherently transparent models if they provide acceptable performance. If they perform too poorly, or if they must become so complex (for example, because of a growing number of features) that they are no longer transparent, other models can be considered, provided that explainability is maintained by using one or more of the methods described above.

It’s interesting to note that the methods used to improve explainability have also become a target for adversarial attacks. Some of these methods, though easy to explain, can be sensitive to small changes in the data, so an adversarial attack could compromise the explanations they produce. Making sure that both the ML algorithm and the methods used to explain it are safe from such attacks is an interesting area of research.

Finally, we should remember that even with good tools and techniques to help explain a model, communicating these explanations to an audience is an open challenge. The best way to do this will vary based on the expertise of the audience, and the kind of actions expected from them.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.