Introducing Agile Analytics

A Value-Driven Approach to Business Intelligence and Data Warehousing

This article is the first chapter from the book Agile Analytics: A Value-Driven Approach to Business Intelligence and Data Warehousing.

Like Agile software development, Agile Analytics is established on a set of core values and guiding principles. It is not a rigid or prescriptive methodology; rather it is a style of building a data warehouse, data marts, business intelligence applications, and analytics applications that focuses on the early and continuous delivery of business value throughout the development lifecycle. In practice, Agile Analytics consists of a set of highly disciplined practices and techniques, some of which may be tailored to fit the unique data warehouse/business intelligence (DW/BI) project demands found in your organization.

Agile Analytics includes practices for project planning, management, and monitoring; for effective collaboration with your business customers and management stakeholders; and for ensuring technical excellence by the delivery team. This article is the first chapter from my book Agile Analytics: A Value-Driven Approach to Business Intelligence and Data Warehousing. It outlines the tenets of Agile Analytics and establishes the foundational principles behind each of the practices and techniques that are introduced in the successive chapters of the book.

Agile is a reserved word when used to describe a development style. It means something very specific. Unfortunately, "agile" occasionally gets misused as a moniker for processes that are ad hoc, slipshod, and lacking in discipline. Agile relies on discipline and rigor; however, it is not a heavyweight or highly ceremonious process despite the attempts of some methodologists to codify it with those trappings. Rather, Agile falls somewhere in the middle between just enough structure and just enough flexibility. It has been said that Agile is simple but not easy, describing the fact that it is built on a simple set of sensible values and principles but requires a high degree of discipline and rigor to properly execute. It is important to accurately understand the minimum set of characteristics that differentiate a true Agile process from those that are too unstructured or too rigid. This article is intended to leave you with a clear understanding of those characteristics as well as the underlying values and principles of Agile Analytics. These are derived directly from the tried and proven foundations established by the Agile software community and are adapted to the nuances of data warehousing and business intelligence development.

Alpine-Style Systems Development

I'm a bit of an armchair climber and mountaineer. I'm fascinated by the trials and travails of climbing high mountains like Everest, Annapurna, and others that rise to over 8,000 meters above sea level. These expeditions are complicated affairs involving challenging planning and logistics, a high degree of risk and uncertainty, a high probability of death (for every two climbers who reach the top of Annapurna, another one dies trying!), difficult decisions in the face of uncontrollable variables, and incredible rewards when success is achieved. While it may not be as adventuresome, building complex business intelligence systems is a lot like high-altitude climbing. We face lots of risk and uncertainty, complex planning, difficult decisions in the heat of battle, and the likelihood of death! Okay, maybe not that last part, but you get the analogy. Unfortunately the success rate for building DW/BI systems isn't very much better than the success rate for high-altitude mountaineering expeditions.

Climbing teams first began successfully "conquering" these high mountains in the 1950s, '60s, and '70s. In those early days the preferred mountaineering style was known as "siege climbing," which had a lot of similarities to a military excursion. Expeditions were led in an autocratic command-and-control fashion, often by someone with more military leadership experience than climbing experience. Climbing teams were supported by the large numbers of porters required to carry massive amounts of gear and supplies to base camp and higher. Mounting a siege-style expedition takes over a year of planning and can take two months or more to execute during the climbing season. Siege climbing is a yo-yo-like affair in which ropes are fixed higher and higher on the mountain, multiple semipermanent camps are established at various points along the route, and loads of supplies are relayed by porters to those higher camps. Finally, with all this support, a small team of summit climbers launches the final push for the summit on a single day, leaving from the high camp and returning to the same. Brilliant teams have successfully climbed mountains for years in this style, but the expeditions are prohibitively expensive, time-consuming to execute, and fraught with heavyweight procedures and bureaucracy.

Traditional business intelligence systems development is a lot like siege climbing. It can result in high-quality, working systems that deliver the desired capabilities. However, these projects are typically expensive, exhibiting a lot of planning, extensive design prior to development, and long development cycles. Like siege-style expeditions, all of the energy goes into one shot at the summit. If the summit bid fails, it is too time-consuming to return to base camp and regroup for another attempt. In my lifetime (and I'm not that old yet) I've seen multiple traditional DW/BI projects with budgets of $20 million or more, and timelines of 18 to 24 months, founder. When such projects fail, the typical management response is to cancel the project entirely rather than adjust, adapt, and regroup for another "summit attempt."

In the 1970s a new mountaineering method called "alpine-style" emerged, making it feasible for smaller teams to summit these high peaks faster, more cheaply, and with less protocol. Alpine-style mountaineering still requires substantial planning, a sufficient supporting team, and enough gear and supplies to safely reach the summit. However, instead of spending months preparing the route for the final summit push, alpine-style climbers spend about a week moving the bare essentials up to the higher camps. In this style, if conditions are right, summits can be reached in a mere ten days. Teams of two to three climbers share a single tent and sleeping bag, fewer ropes are needed, and the climbers can travel much lighter and faster. When conditions are not right, it is feasible for alpine-style mountaineers to return to base camp and wait for conditions to improve to make another summit bid.

Agile DW/BI development is much like alpine-style climbing. It is essential that we have a sufficient amount of planning, the necessary support to be successful, and an appropriate amount of protocol. Our "summit" is the completion of a high-quality, working business intelligence system that is of high value to its users. As in mountaineering, reaching our summit requires the proper conditions. We need just the right amount of planning—but we must be able to adapt our plan to changing factors and new information. We must prepare for a high degree of risk and uncertainty—but we must be able to nimbly manage and respond as risks unfold. We need support and involvement from a larger community—but we seek team self-organization rather than command-and-control leadership.

Agile Analytics is a development "style" rather than a methodology or even a framework. The line between siege-style and alpine-style mountaineering is not precisely defined, and alpine-style expeditions may include some siege-style practices. Each style is best described in terms of its values and guiding principles. Each alpine-style expedition employs a distinct set of climbing practices that support a common set of values and principles. Similarly, each Agile DW/BI project team must adapt its technical, project management, and customer collaboration practices to best support the Agile values and principles.

Premier mountaineer Ed Viesturs has a formula, or core value, that is his cardinal rule in the big mountains: "Getting to the top is optional. Getting down is mandatory." (Viesturs and Roberts 2006) I love this core value because it is simple and elegant, and it provides a clear basis for all of Ed's decision making when he is on the mountain. In the stress of the climb, or in the midst of an intensely challenging project, we need just such a basis for decision making—our "North Star." In 2000, a group of the most influential application software developers convened in Salt Lake City and formed the Agile Alliance. Through the process of sharing and comparing each of their "styles" of software development, the Agile Manifesto emerged as a simple and elegant basis for project guidance and decision making. The Agile Manifesto reads:

Manifesto for Agile Software Development

We are uncovering better ways of developing software by doing it and helping others do it. Through this work we have come to value:

Individuals and interactions over processes and tools

Working software over comprehensive documentation

Customer collaboration over contract negotiation

Responding to change over following a plan

That is, while there is value in the items on the right, we value the items on the left more.

With due respect to the Agile Alliance, of which I am a member, I have adapted the Agile Manifesto just a bit in order to make it more appropriate to Agile Analytics:

Manifesto for Agile Analytics Development

We are uncovering better ways of developing data warehousing and business intelligence systems by doing it and helping others do it. Through this work we have come to value:

Individuals and interactions over processes and tools

Working DW/BI systems over comprehensive documentation

End-user and stakeholder collaboration over contract negotiation

Responding to change over following a plan

That is, while there is value in the items on the right, we value the items on the left more.

I didn't want to mess with the original manifesto too much, but it is important to acknowledge that DW/BI systems are fundamentally different from application software. In addition to dealing with large volumes of data, our efforts involve systems integration, customization, and programming. Nonetheless, the Agile core values are very relevant to DW/BI systems development. These values emphasize the fact that our primary objective is the creation of high-quality, high-value, working DW/BI systems. Every activity related to any project either (a) directly and materially contributes to this primary objective or (b) does not. Agile Analytics attempts to maximize a-type activities while acknowledging that there are some b-type activities that are still important, such as documenting your enterprise data model.

What Is Agile Analytics?

In this article, I will introduce you to a set of Agile DW/BI principles and practices. These include technical, project management, and user collaboration practices. I will demonstrate how you can apply these on your projects, and how you can tailor them to the nuances of your environment. However, the title of this section is "What Is Agile Analytics?" so I should probably take you a bit further than the mountaineering analogy.

Here's What Agile Analytics Is

So here is a summary of the key characteristics of Agile Analytics. This is simply a high-level glimpse at the key project traits that are the mark of agility, not an exhaustive list of practices. Throughout the remainder of this book I will introduce you to a set of specific practices that will enable you to achieve agility on your DW/BI projects. Moreover, Agile Analytics is a development style, not a prescriptive methodology that tells you precisely what you must do and how you must do it. The dynamics of each project within each organization require practices that can be tailored appropriately to the environment. Remember, the primary objective is a high-quality, high-value, working DW/BI system. These characteristics simply serve that goal:

Iterative, incremental, evolutionary. Foremost, Agile is an iterative, incremental, and evolutionary style of development. We work in short iterations that are generally one to three weeks long, and never more than four weeks. We build the system in small increments or "chunks" of user-valued functionality. And we evolve the working system by adapting to frequent user feedback. Agile development is like driving around in an unfamiliar city; you want to avoid going very far without some validation that you are on the right course. Short iterations with frequent user reviews help ensure that we are never very far off course in our development.

Value-driven development. The goal of each development iteration is the production of user-valued features. While you and I may appreciate the difficulty of complex data architectures, elegant data models, efficient ETL scripts, and so forth, users generally couldn't care less about these things. What users of DW/BI systems care about is the presentation of and access to information that helps them either solve a business problem or make better business decisions. Every iteration must produce at least one new user-valued feature in spite of the fact that user features are just the tip of the architectural iceberg that is a DW/BI system.

Production quality. Each newly developed feature must be fully tested and debugged during the development iteration. Agile development is not about building hollow prototypes; it is about incrementally evolving to the right solution with the best architectural underpinnings. We do this by integrating ruthless testing early and continuously into the DW/BI development process.3 Developers must plan for and include rigorous testing in their development process. A user feature is "Done" when it is of production quality, it is successfully integrated into the evolving system, and developers are proud of their work. That same feature is "Done! Done!" when the user accepts it as delivering the right value.

Barely sufficient processes. Traditional styles of DW/BI development are rife with a high degree of ceremony. I've worked on many projects that involved elaborate stage-gate meetings between stages of development such as the transition from requirements analysis to design. These gates are almost always accompanied by a formal document that must be "signed off" as part of the gating process. In spite of this ceremony many DW/BI projects struggle or founder. Agile DW/BI emphasizes a sufficient amount of ceremony to meet the practical needs of the project (and future generations) but nothing more. If a data dictionary is deemed important for use by future developers, then perhaps a digital image of a whiteboard table or a simple spreadsheet table will suffice. Since our primary objective is the production of high-quality, high-value, working systems, we must be able to minimize the amount of ceremony required for other activities.

Automation, automation, automation. The only way to be truly Agile is to automate as many routine processes as possible. Test automation is perhaps the most critical. If you must test your features and system manually, guess how often you're likely to rerun your tests? Test automation enables you to frequently revalidate that everything is still working as expected. Build automation enables you to frequently build a version of your complete working DW/BI system in a demo or preproduction environment. This helps establish continuous confidence that you are never more than a few hours or days away from putting a new version into production. Agile Analytics teams seek to automate any process that is done more than once. The more you can automate, the more you can focus on developing user features.

Collaboration. Too often in traditional projects the development team solely bears the burden of ensuring that timelines are met, complete scope is delivered, budgets are managed, and quality is ensured. Agile business intelligence acknowledges that there is a broader project community that shares responsibility for project success. The project community includes the subcommunities of users, business owners, stakeholders, executive sponsors, technical experts, project managers, and others. Frequent collaboration between the technical and user communities is critical to success. Daily collaboration within the technical community is also critical. In fact, establishing a collaborative team workspace is an essential ingredient of successful Agile projects.

Self-organizing, self-managing teams. Hire the best people, give them the tools and support they need, then stand aside and allow them to be successful. There is a key shift in the Agile project management style compared to traditional project management. The Agile project manager's role is to enable team members to work their magic and to facilitate a high degree of collaboration with users and other members of the project community. The Agile project team decides how much work it can complete during an iteration, then holds itself accountable to honor those commitments. The Agile style is not a substitute for having the right people on the team.

Guiding Principles

The core values contained in the Agile Manifesto motivate a set of guiding principles for DW/BI systems design and development. These principles often become the tiebreaker when difficult trade-off decisions must be made. Similarly, the Agile Alliance has established a set of principles for software development. The following Agile Analytics principles borrow liberally from the Agile Alliance principles:

- Our highest priority is to satisfy the DW/BI user community through early and continuous delivery of working user features.

- We welcome changing requirements, even late in development. Agile processes harness change for the DW/BI users' competitive advantage.

- We deliver working software frequently, providing users with new DW/BI features every few weeks.

- Users, stakeholders, and developers must share project ownership and work together daily throughout the project.

- We value the importance of talented and experienced business intelligence experts. We give them the environment and support they need and trust them to get the job done.

- The most efficient and effective method of conveying information to and within a development team is face-to-face conversation.

- A working business intelligence system is the primary measure of progress.

- We recognize the balance among project scope, schedule, and cost. The data warehousing team must work at a sustainable pace.

- Continuous attention to the best data warehousing practices enhances agility.

- The best architectures, requirements, and designs emerge from self-organizing teams.

- At regular intervals, the team reflects on how to become more effective, then tunes and adjusts its behavior accordingly.

Take a minute to reflect on these principles. How many of them are present in the projects in your organization? Do they make sense for your organization? Give them another look. Are they realistic principles for your organization? I have found these not only to be commonsense principles, but also to be effective and achievable on real projects. Furthermore, adherence to these principles rather than reliance on a prescriptive and ceremonious process model is very liberating.

Myths and Misconceptions

There are some myths and misconceptions that seem to prevail among other DW/BI practitioners and experts that I have talked to about this style of development. I recently had an exchange on this topic with a seasoned veteran in both software development and data warehousing who is certified at the mastery level in DW/BI and data management and who has managed large software development groups. His misunderstanding of Agile development made it evident that myths and misconceptions abound even among the most senior DW/BI practitioners. Agile Analytics is not:

A wholesale replacement of traditional practices. I am not suggesting that everything we have learned and practiced in the short history of DW/BI systems development is wrong, and that Agile is the new savior that will rescue us from our hell. There are many good DW/BI project success stories, which is why DW/BI continues to be among the top five strategic initiatives for most large companies today. It is important that we keep the practices and methods that work well, improve those that allow room for improvement, and replace those that are problematic. Agile Analytics seeks to modify our general approach to DW/BI systems development without discarding the best practices we've learned on our journey so far.

Synonymous with Scrum or eXtreme Programming (XP). Scrum is perhaps the Agile flavor that has received the most publicity (along with XP) in recent years. However, it is incorrect to say that "Agile was formerly known as eXtreme Programming," as one skeptic told me. In fact, there are many different Agile development flavors that add valuable principles and practices to the broader collective known as Agile development. These include Scrum, Agile Modeling, Agile Data, Crystal, Adaptive, DSDM, Lean Development, Feature Driven Development, Agile Project Management (APM), and others.5 Each is guided by the core values expressed in the Agile Manifesto. Agile Analytics is an adaptation of principles and practices from a variety of these methods to the complexities of data-intensive, analytics-based systems integration efforts like data warehousing and data mart development.

Simply iterating. Short, frequent development iterations are an essential cornerstone of Agile development. Unfortunately, this key practice is commonly misconstrued as the definition of agility. Not long ago I was asked to mentor a development team that had "gone Agile" but wasn't experiencing the expected benefits of agility. Upon closer inspection I discovered that they were planning in four-week "iterations" but didn't expect to have any working features until about the sixth month of the project. Effectively they had divided the traditional waterfall model into time blocks they called iterations. They completely missed the point. The aim of iterative development is to demonstrate working features and to obtain frequent feedback from the user community. This means that every iteration must result in demonstrable working software.

For systems integration; it's only for programming. Much of our effort in DW/BI development is focused on the integration of multiple commercial tools, thereby minimizing the volume of raw programming required. DW/BI tool vendors would have us believe that DW/BI development is simply a matter of hooking up the tools to the source systems and pressing the "Go" button. You've probably already discovered that building an effective DW/BI system is not that simple. A DW/BI development team includes a heterogeneous mixture of skills, including extraction, transformation, load (ETL) development; database development; data modeling (both relational and multidimensional); application development; and others. In fact, compared to the more homogeneous skills required for applications development, DW/BI development is quite complex in this regard. This complexity calls for an approach that supports a high degree of customer collaboration, frequent delivery of working software, and frequent feedback—aha, an Agile approach!

An excuse for ad hoc behavior. Some have mistaken the tenets of Agile development for abandonment of rigor, quality, or structure, in other words, "hacking." This misperception could not be farther from the truth. Agility is a focus on the frequent delivery of high-value, production-quality, working software to the user community with the goal of continuously adapting to user feedback. This means that automated testing and quality assurance are critical components of all iterative development activities. We don't build prototypes; we build working features and then mature those features in response to user input. Others mistake the Agile Manifesto as disdain of documentation, which is also incorrect. Agile DW/BI seeks to ensure that a sufficient amount of documentation is produced. The keyword here is sufficient. Sufficiency implies that there is a legitimate purpose for the document, and when that purpose is served, there is no need for additional documentation.

In my work with teams that are learning and adopting the Agile DW/BI development style, I often find that they are looking for a prescriptive methodology that makes it very clear which practices to apply and when. This is a natural inclination for new Agile practitioners, and I will provide some recommendations that may seem prescriptive in nature. In fact you may benefit initially by creating your own "recipe" for the application of Agile DW/BI principles and practices. However, I need to reemphasize that Agile Analytics is a style, not a methodology and not a framework. Figuratively, you can absorb agility into your DNA with enough focus, practice, and discipline. You'll know you've reached that point when you begin applying Agile principles to everything you do such as buying a new car, remodeling a bathroom, or writing a book.

Data Warehousing Architectures and Skill Sets

To ensure that we are working from a common understanding, here is a very brief summary of data warehouse architectures and requisite skill sets. This is not a substitute for any of the more comprehensive technical books on data warehousing but should be sufficient as a baseline for the remainder of the book.

Data Warehousing Conceptual Architectures

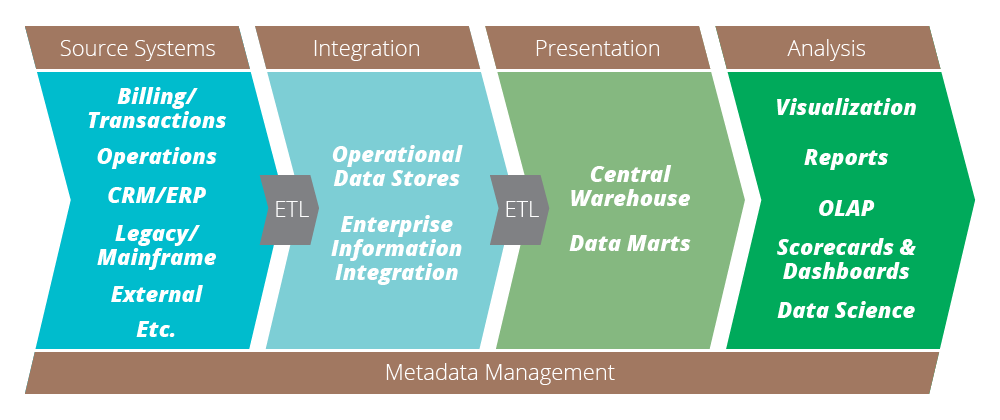

Figure 1.1 depicts an abstracted classical data warehousing architecture and is suitable to convey either a Kimball-style (Kimball and Ross 2002) or an Inmon-style (Inmon 2005) architecture. This is a high-level conceptual architecture containing multiple layers, each of which includes a complex integration of commercial technologies, data modeling and manipulation, and some custom code.

Figure 1.1 Classical data warehouse architecture

The data warehouse architecture includes one or more operational source systems from which data is extracted, transformed, and loaded into the data warehouse repositories. These systems are optimized for the daily transactional processing required to run the business operations. Most DW/BI systems source data from multiple operational systems, some of which are legacy systems that may be several decades old and reside on older technologies.

Data from these sources is extracted into an integration tier in the architecture that acts as a "holding pen" where data can be merged, manipulated, transformed, cleansed, and validated without placing an undue burden on the operational systems. This tier may include an operational data store or an enterprise information integration (EII) repository that acts as a system of record for all relevant operational data. The integration database is typically based on a relational data model and may have multiple subcomponents, including pre-staging, staging, and an integration repository, each serving a different purpose relating to the consolidation and preprocessing of data from disparate source systems. Common technologies for staging databases are Oracle, IBM DB2, Microsoft SQL Server, and NCR Teradata. The DW/BI community is beginning to see increasing use of the open-source database MySQL for this architectural component.

Data is extracted from the staging database, transformed, and loaded into a presentation tier in the architecture that contains appropriate structures for optimized multidimensional and analytical queries. This system is designed to support the data slicing and dicing that define the power of a data warehouse. There are a variety of alternatives for the implementation of the presentation database, including normalized relational schemas and denormalized schemas like star, snowflake, and even "starflake." Moreover, the presentation tier may include a single enterprise data warehouse or a collection of subject-specific data marts. Some architectures include a hybrid of both of these. Presentation repositories are typically implemented in the same technologies as the integration database.

Finally, data is presented to the business users at the analysis tier in the architecture. This conceptual layer in the system represents the variety of applications and tools that provide users with access to the data, including report writers, ad hoc querying, online analytical processing (OLAP), data visualization, data mining, and statistical analysis. BI tool vendors such as Pentaho, Cognos, MicroStrategy, Business Objects, Microsoft, Oracle, IBM, and others produce commercial products that enable data from the presentation database to be aggregated and presented within user applications.

This is a generalized architecture, and actual implementations vary in the details. One major variation on the Kimball architecture is the Inmon architecture (Inmon 2005), which inserts a layer of subject-specific data marts that contain subsets of the data from the main warehouse. Each data mart supports only the specific end-user applications that are relevant to the business subject area for which that mart was designed. Regardless of your preferences for Kimball- versus Inmon-style architectures, and of the variations found in implementation detail, Figure 1.1 will serve as reference architecture for the discussions in this book. The Agile DW/BI principles and practices that are introduced here are not specific to any particular architecture.

Diverse and Disparate Technical Skills

Inherent in the implementation of this architecture are the following aspects of development, each requiring a unique set of development skills:

Data modeling. Design and implementation of data models are required for both the integration and presentation repositories. Relational data models are distinctly different from dimensional data models, and each has unique properties. Moreover, relational data modelers may not have dimensional modeling expertise and vice versa.

ETL development. ETL refers to the extraction of data from source systems into staging, the transformations necessary to recast source data for analysis, and the loading of transformed data into the presentation repository. ETL includes the selection criteria to extract data from source systems, performing any necessary data transformations or derivations needed, data quality audits, and cleansing.

Data cleansing. Source data is typically not perfect. Furthermore, merging data from multiple sources can inject new data quality issues. Data hygiene is an important aspect of data warehouse that requires specific skills and techniques.

OLAP design. Typically data warehouses support some variety of online analytical processing (HOLAP, MOLAP, or ROLAP). Each OLAP technique is different but requires special design skills to balance the reporting requirements against performance constraints.

Application development. Users commonly require an application interface into the data warehouse that provides an easy-to-use front end combined with comprehensive analytical capabilities, and one that is tailored to the way the users work. This often requires some degree of custom programming or commercial application customization.

Production automation. Data warehouses are generally designed for periodic automated updates when new and modified data is slurped into the warehouse so that users can view the most recent data available. These automated update processes must have built-in fail-over strategies and must ensure data consistency and correctness.

General systems and database administration. Data warehouse developers must have many of the same skills held by the typical network administrator and database administrator. They must understand the implications of efficiently moving possibly large volumes of data across the network, and the issues of effectively storing changing data.

Why Do We Need Agile Analytics?

In my years as a DW/BI consultant and practitioner I have learned three consistent truths: Building successful DW/BI systems is hard; DW/BI development projects fail very often; and it is better to fail fast and adapt than to fail late after the budget is spent.

First Truth: Building DW/BI Systems Is Hard

If you have taken part in a data warehousing project, you are aware of the numerous challenges, perils, and pitfalls. Ralph Kimball, Bill Inmon, and other DW/BI pioneers have done an excellent job of developing reusable architectural patterns for data warehouse and DW/BI implementation. Software vendors have done a good job of creating tools and technologies to support the concepts. Nonetheless, DW/BI is just plain hard, and for several reasons:

- Lack of experience. Most organizations don't build multiple DW/BI systems, and therefore development processes don't get a chance to mature through experience.

- Ambitious goals. Organizations often set out to build an enterprise data warehouse, or at least a broad-reaching data mart, which makes the process more complex.

- Domain knowledge versus subject matter expertise. DW/BI practitioners often have extensive expertise in business intelligence but not in the organization's business domain, causing gaps in understanding. Business users typically don't know what they can, or should, expect from a DW/BI system.

- Unrealistic expectations. Business users often think of data warehousing as a technology-based plug-and-play application that will quickly provide them with miraculous insights.

- Educated user phenomenon. As users gain a better understanding of data warehousing, their needs and wishes change.

- Shooting the messenger. DW/BI systems are like shining a bright light in the attic: You may not always like what you find. When the system exposes data quality problems, business users tend to distrust the DW/BI system.

- Focus on technology. Organizations often view a DW/BI system as an IT application rather than a joint venture between business stakeholders and IT developers.

- Specialized skills. Data warehousing requires an entirely different skill set from that of typical database administrators (DBAs) and developers. Most organizations do not have staff members with adequate expertise in these areas.

- Multiple skills. Data warehousing requires a multitude of unique and distinct skills such as multidimensional modeling, data cleansing, ETL development, OLAP design, application development, and so forth.

These unique DW/BI development characteristics compound the already complex process of building software or building database applications.

Second Truth: DW/BI Development Projects Fail Often

Unfortunately, I'm not the only one who has experienced failure on DW/BI projects. A quick Google search on "data warehouse failure polls" results in a small library of case studies, postmortems, and assessment articles. Estimated failure rates of around 50 percent are common and are rarely disputed.

When I speak to groups of business intelligence practitioners, I often begin my talks with an informal survey. First I ask everyone who has been involved in the completion of one or more DW/BI projects to stand. It varies depending on the audience, but usually more than half the group stands up. Then I ask participants to sit down if they have experienced projects that were delivered late, projects that had significant budget overruns, or projects that did not satisfy users' expectations. Typically nobody is left standing by the third question, and I haven't even gotten to questions about acceptable quality or any other issues. It is apparent that most experienced DW/BI practitioners have lived through at least one project failure.

While there is no clear definition of what constitutes "failure," Sid Adelman and Larissa Moss classify the following situations as characteristic of limited acceptance or outright project failure (Moss and Adelman 2000):

- The project is over budget.

- The schedule has slipped.

- Some expected functionality was not implemented.

- Users are unhappy.

- Performance is unacceptable.

- Availability of the warehouse applications is poor.

- There is no ability to expand.

- The data and/or reports are poor.

- The project is not cost-justified.

- Management does not recognize the benefits of the project.

In other words, simply completing the technical implementation of a data warehouse doesn't constitute success. Take another look at this list. Nearly every situation is "customer"-focused; that is, primarily end users determine whether a project is successful.

There are literally hundreds of similar evaluations of project failures, and they exhibit a great deal of overlap in terms of root causes: incorrect requirements, weak processes, inability to adapt to changes, project scope mismanagement, unrealistic schedules, inflated expectations, and so forth.

Third Truth: It Is Best to Fail Fast and Adapt

Unfortunately, the traditional development model does little to uncover these deficiencies early in the project. As Jeff DeLuca, one of the creators of Feature Driven Development (FDD), says, "We should try to break the back of the project as early as possible to avoid the high cost of change later downstream." In a traditional approach, it is possible for developers to plow ahead in the blind confidence that they are building the right product, only to discover at the end of the project that they were sadly mistaken. This is true even when one uses all the best practices, processes, and methodologies.

What is needed is an approach that promotes early discovery of project peril. Such an approach must place the responsibility of success equally on the users, stakeholders, and developers and should reward a team's ability to adapt to new directions and substantial requirements changes.

As we observed earlier, most classes of project failure are user-satisfaction-oriented. If we can continuously adapt the DW/BI system and align with user expectations, users will be satisfied with the outcome. In all of my past involvement in traditional DW/BI implementations I have consistently seen the following phenomena at the end of the project:

Users have become more educated about BI. As the project progresses, so does users' understanding of BI. So, what they told you at the beginning of the project may have been based on a misunderstanding or incorrect expectations.

User requirements have changed or become more refined. That's true of all software and implementation projects. It's just a fact of life. What they told you at the beginning is much less relevant than what they tell you at the end.

Users' memories of early requirements reviews are fuzzy. It often happens that contractually speaking, a requirement is met by the production system, but users are less than thrilled, having reactions like "What I really meant was . . ." or "That may be what I said, but it's not what I want."

Users have high expectations when anticipating a new and useful tool. Left to their own imaginations, users often elevate their expectations of the BI system well beyond what is realistic or reasonable. This only leaves them disappointed when they see the actual product.

Developers build based on the initial snapshot of user requirements. In waterfall-style development the initial requirements are reviewed and approved, then act as the scoping contract. Meeting the terms of the contract is not nearly as satisfying as meeting the users' expectations.

All these factors lead to a natural gap between what is built and what is needed. An approach that frequently releases new BI features to users, hears user feedback, and adapts to change is the single best way to fail fast and correct the course of development.

Is Agile Really Better?

There is increasing evidence that Agile approaches lead to higher project success rates. Scott Ambler, a leader in Agile database development and Agile Modeling, has conducted numerous surveys on Agile development in an effort to quantify the impact and effectiveness of these methods. Beginning in 2007, Ambler conducted three surveys specifically relating to IT project success rates.6 The 2007 survey explored success rates of different IT project types and methods. Only 63 percent of traditional projects and data warehousing projects were successful, while Agile projects experienced a 72 percent rate of success. The 2008 survey focused on four success criteria: quality, ROI, functionality, and schedule. In all four areas Agile methods significantly outperformed traditional, sequential development approaches. The 2010 survey continued to show that Agile methods in IT produce better results.

I should note here that traditional definitions of success involve metrics such as on time, on budget, and to specification. While these metrics may satisfy management efforts to control budgets, they do not always correlate to customer satisfaction. In fact, scope, schedule, and cost are poor measures of progress and success. Martin Fowler argues, "Project success is more about whether the software delivers value that's greater than the cost of the resources put into it." He points out that XP 2002 conference speaker Jim Johnson, chairman of the Standish Group, observed that a large proportion of features are frequently unused in software products. He quoted two studies: a DuPont study, which found that only 25 percent of a system's features were really needed, and a Standish study, which found that 45 percent of features were never used and only 20 percent of features were used often or always (Fowler 2002). These findings are further supported by a Department of Defense study, which found that only 2 percent of the code in $35.7 billion worth of software was used as delivered, and 75 percent was either never used or was canceled prior to delivery (Leishman and Cook 2002).

Agile development is principally aimed at the delivery of high-priority value to the customer community. Measures of progress and success must focus more on value delivery than on traditional metrics of on schedule, on budget, and to spec.

Jim Highsmith points out:

This statement reflects the notion that incrementally evolving a system by frequently seeking and adapting to customer feedback will result in building the right solution, but it may not be the solution that was originally planned.

The Difficulties of Agile Analytics

Applying Agile methods to DW/BI is not without challenges. Many of the project management and technical practices I introduce in this book are adapted from those of our software development colleagues who have been maturing these practices for the past decade or longer. Unfortunately, the specific practices and tools used to custom-build software in languages like Java, C++, or C# do not always transfer easily to systems integration using proprietary technologies like Informatica, Oracle, Cognos, and others. Among the problems that make Agile difficult to apply to DW/BI development are the following:

Tool support. There aren't many tools that support technical practices such as test-driven database or ETL development, database refactoring, data warehouse build automation, and others that are introduced in this book. The tools that do exist are less mature than the ones used for software development. However, this current state of tool support continues to get better, through both open-source as well as commercial tools.

Data volume. It takes creative thinking to use lightweight development practices to build high-volume data warehouses and BI systems. We need to use small, representative data samples to quickly build and test our work, while continuously proving that our designs will work with production data volumes. This is more of an impediment to our way of approaching the problem rather than a barrier that is inherent in the problem domain. Impediments are those challenges that can be eliminated or worked around; barriers are insurmountable.

"Heavy lifting." While Agile Analytics is a feature-driven (think business intelligence features) approach, the most time-consuming aspect of building DW/BI systems is in the back-end data warehouse or data marts. Early in the project it may seem as if it takes a lot of "heavy lifting" on the back end just to expose a relatively basic BI feature on the front end. Like the data volume challenge, it takes creative thinking to build the smallest/simplest back-end data solution needed to produce business value on the front end.

Continuous deployment. The ability to deploy new features into production frequently is a goal of Agile development. This goal is hampered by DW/BI systems that are already in production with large data volumes. Sometimes updating a production data warehouse with a simple data model revision can require significant time and careful execution. Frequent deployment may look very different in DW/BI from the way it looks in software development.

The nuances of your project environment may introduce other such difficulties. In general, those who successfully embrace Agile's core values and guiding principles learn how to effectively adapt their processes to mitigate these difficulties. For each of these challenges I find it useful to ask the question "Will the project be better off if we can overcome this difficulty despite how hard it may be to overcome?" As long as the answer to that question is yes, it is worth grappling with the challenges in order to make Agile Analytics work. With time and experience these difficulties become easier to overcome.

Introducing FlixBuster Analytics

Now seems like a good time to introduce the running DW/BI example that I'll be revisiting throughout this book to show you how the various Agile practices are applied. I use an imaginary video rental chain to demonstrate the Agile Analytics practices. The company is FlixBuster, and they have retail stores in cities throughout North America. FlixBuster also offers video rentals online where customers can manage their rental requests and movies are shipped directly to their mailing address. Finally, FlixBuster offers movie downloads directly to customers' computers.

FlixBuster has customers who are members and customers who are nonmembers. Customers fall into three buying behavior groups: those who shop exclusively in retail stores, those who shop exclusively online, and those who split their activity across channels. FlixBuster customers can order a rental online or in the store, and they can return videos in the store or via a postage-paid return envelope provided by the company.

Members pay a monthly subscription fee, which determines their rental privileges. Top-tier members may rent up to three videos at the same time. There is also a membership tier allowing two videos at a time as well as a tier allowing one at a time. Members may keep their rentals indefinitely with no late charges. As soon as FlixBuster receives a returned video from a member, the next one is shipped. Nonmembers may also rent videos in the stores following the traditional video rental model with a four-day return policy.

Approximately 75 percent of the brick-and-mortar FlixBuster stores across North America are corporately owned and managed; the remaining 25 percent are privately owned franchises. FlixBuster works closely with franchise owners to ensure that the customer experience is consistent across all stores. FlixBuster prides itself on its large inventory of titles, the rate of customer requests that are successfully fulfilled, and how quickly members receive each new video by mail.

FlixBuster has a complex partnership with the studios producing the films and the clearinghouses that provide licensed media to FlixBuster and manage royalty payments and license agreements. Each title is associated with a royalty percentage to be paid to the studio. Royalty statements and payments are made on a monthly basis to each of the clearinghouses.

Furthermore, FlixBuster sales channels (e-tail and retail) receive a percentage of the video rental revenue. Franchise owners receive a negotiated revenue amount that is generally higher than for corporately owned retail outlets. The online channel receives still a different revenue percentage to cover its operating costs.

FlixBuster has determined that there is a good business case for developing an enterprise business intelligence system. This DW/BI system will serve corporate users from finance, marketing, channel sales, customer management, inventory management, and other departments. FlixBuster also intends to launch an intranet BI portal for subscription use by its clearinghouse partners, studios, franchisees, and possibly even Internet movie database providers. Such an intranet portal is expected to provide additional revenue streams for FlixBuster.

There are multiple data sources for the FlixBuster DW/BI system, including FlixBackOffice, the corporate ERP system; FlixOps, the video-by-mail fulfillment system; FlixTrans, the transactional and point-of-sale system; FlixClear, the royalty management system; and others.

FlixBuster has successfully completed other development projects using Agile methods and is determined to take an Agile Analytics approach on the development of its DW/BI system, FlixAnalysis. During high-level executive steering committee analysis and reviews, it has been decided that the first production release of FlixAnalysis will be for the finance department and will be a timeboxed release cycle of six months.

Wrap-Up

This article has laid the foundation for an accurate, if high-level, understanding of Agile Analytics. Successive chapters in the book serve to fill in the detailed "how-to" techniques that an Agile Analytics team needs to put these concepts into practice. You should now understand that Agile Analytics isn't simply a matter of chunking tasks into two-week iterations, holding a 15-minute daily team meeting, or retitling the project manager a "scrum master." Although these may be Agile traits, new Agile teams often limit their agility to these simpler concepts and lose sight of the things that truly define agility. True agility is reflected by traits like early and frequent delivery of production-quality, working BI features, delivering the highest-valued features first, tackling risk and uncertainty early, and continuous stakeholder and developer interaction and collaboration.

Agile Analytics teams evolve toward the best system design by continuously seeking and adapting to feedback from the business community. Agile Analytics balances the right amount of structure and formality against a sufficient amount of flexibility, with a constant focus on building the right solution. The key to agility lies in the core values and guiding principles more than in a set of specific techniques and practices—although effective techniques and practices are important. Mature Agile Analytics teams elevate themselves above a catalog of practices and establish attitudes and patterns of behavior that encourage seeking feedback, adapting to change, and delivering maximum value.

If you are considering adopting Agile Analytics, keep these core values and guiding principles at the top of your mind. When learning any new technique, it is natural to look for successful patterns that can be mimicked. This is a valuable approach that will enable a new Agile team to get on the right track and avoid unnecessary pitfalls. While I have stressed that Agile development is not a prescriptive process, new Agile teams will benefit from some recipe-style techniques. Therefore, many of the practices introduced in this book may have a bit of a prescriptive feel. I encourage you to try these practices first as prescribed and then, as you gain experience, tailor them as needed to be more effective. But be sure you're tailoring practices for the right reasons. Be careful not to tailor a practice simply because it was difficult or uncomfortable on the first try. Also, be sure not to simply cherry-pick the easy practices while ignoring the harder ones. Often the harder practices are the ones that will have the biggest impact on your team's performance.

Enjoyed this first chapter? Read more and order a copy of Agile Analytics: A Value-Driven Approach to Business Intelligence and Data Warehousing.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.