When to Automate and Why

In any project lifecycle, our goal should be to automate every activity that is repeated while creating and maintaining the system. The practice of ‘ruthless automation’ targets exactly such tasks.

Yet during a project, usually because of tight deadlines or simply a lack of awareness, we tend to overlook the manual, repeated tasks that could be automated.

Let me share how I identified and automated some tasks that are otherwise taken for granted, thereby reducing my manual, error-prone work and raising the bar.

The project I was working on was a content-rich marketing website that demanded complex, interactive data and high availability.

Here are a few situations that I came across, where I tried to automate some drudgery:

Setting up test data, again and again!

The Pain

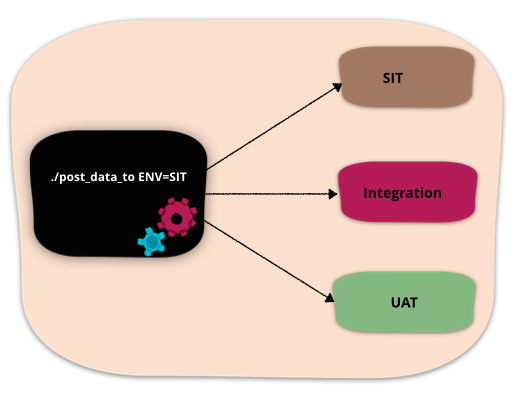

We had several types of environments (SIT, Integration, UAT, etc.). With every new feature, we had to validate the functionality across all of these environments, progressively.

Every time a new feature was released, I had to set up the test data on multiple environments. Though I could use a REST client such as Postman, it was difficult to keep track of all the test data for a feature. Each environment also had a provision to clear its data, and after every clean-up I needed to set the data up again. On top of this, uploading multiple variations of the same data was becoming very difficult. This was one thing I never looked forward to.

The Remedy

I wrote a small script that solved these problems. With it, I could upload many variants of the data to any supported environment, and I could easily track which version of the data was present on each environment.
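The original script is not shown here, so what follows is only a minimal sketch of the idea, under stated assumptions. The environment URLs, the `/content` endpoint, and the `_feature` and `_variant` tags are all hypothetical names chosen for illustration.

```python
import json
import urllib.request

# Hypothetical base URLs: the real environments and endpoints are not given in this article.
ENVIRONMENTS = {
    "sit": "https://sit.example.com/api",
    "integration": "https://integration.example.com/api",
    "uat": "https://uat.example.com/api",
}


def build_upload_requests(env, feature, variants):
    """Build one POST request per data variant for the chosen environment."""
    base_url = ENVIRONMENTS[env]  # raises KeyError for an unsupported environment
    requests = []
    for name, payload in variants.items():
        # Tag each record (assumed convention) so it is easy to see which
        # version of the data is present on an environment.
        body = dict(payload, _feature=feature, _variant=name)
        requests.append(
            urllib.request.Request(
                url=f"{base_url}/content",  # hypothetical endpoint
                data=json.dumps(body).encode("utf-8"),
                headers={"Content-Type": "application/json"},
                method="POST",
            )
        )
    return requests


def upload(env, feature, variants):
    """Send every variant and return the HTTP status codes."""
    statuses = []
    for request in build_upload_requests(env, feature, variants):
        with urllib.request.urlopen(request) as response:
            statuses.append(response.status)
    return statuses
```

Keeping the variants in a file under source control and calling something like `upload("uat", "banner", variants)` after each clean-up would restore the data in one step. Separating request building from sending also makes the script easy to check without touching a real environment.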

Different data formats!

Since this was a marketing site, content creation was a major, routine task, and it was distributed across different teams. To support the different content formats, we had an adapter layer in between for data transformation.

The Pain

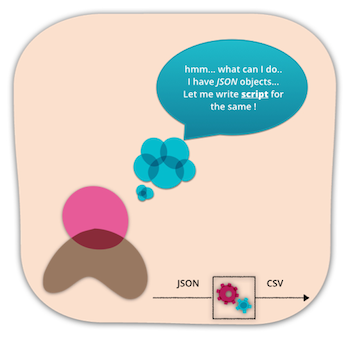

Content creators typically used different tools, and the data formats varied across these tools. They always preferred data in spreadsheets. Getting the data into a format they could consume meant manually fetching JSON from the system and formatting it for Excel, which was tedious.

The Remedy

A small script to convert data between formats was all I needed. With it, I could convert JSON into CSV, which saved a lot of time and let me serve content authors’ requests quickly.
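As an illustration only, a JSON-to-CSV conversion along these lines could look like the sketch below. It assumes the input is a JSON array of objects and flattens nested objects into dotted column names. Both are assumptions, since the real data shape is not described here.

```python
import csv
import io
import json


def flatten(record, prefix=""):
    """Flatten nested objects into dotted keys, e.g. {"meta": {"title": 1}} -> {"meta.title": 1}."""
    flat = {}
    for key, value in record.items():
        name = f"{prefix}{key}"
        if isinstance(value, dict):
            flat.update(flatten(value, prefix=f"{name}."))
        else:
            flat[name] = value
    return flat


def json_to_csv(json_text):
    """Convert a JSON array of objects into CSV text that opens in a spreadsheet."""
    records = [flatten(record) for record in json.loads(json_text)]
    # Collect column names in first-seen order so the output is stable.
    columns = []
    for record in records:
        for key in record:
            if key not in columns:
                columns.append(key)
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=columns, restval="", lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)  # fields missing from a record become empty cells
    return buffer.getvalue()
```

Writing the returned text to a `.csv` file gives content authors something they can open directly in Excel.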

Debugging with Preview and Live stacks

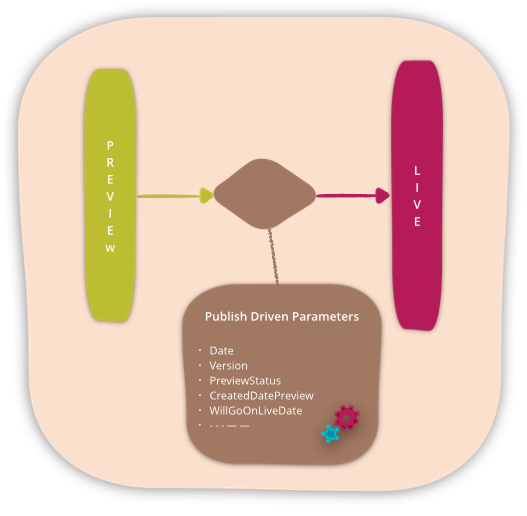

As mentioned earlier, content creation was a routine task, and content authors needed a way to preview a page before it actually went live. We had Preview and Live environments. For each piece of content, a completeness check decided whether the page would go live.

The Pain

Preview and Live environments played a vital role in content creation. Only a few parameters in a huge JSON object, along with several dates, drove the transition from Preview to Live. Big launches involved creating a lot of data, and content creators would often run into issues where data was shown on the Preview environment but not on Live.

While debugging, I needed to inspect the JSON data to reach a conclusion. Manually going through each parameter in every response was time-consuming, and I would sometimes miss some of the important publish-driving parameters (refer to the diagram). Also, the date format was not readable.

The Remedy

I noted down the repetitive steps and wrote a script for them. Refer to the gist for the script, which produced a JSON file listing the publish-driving parameters and their status in a friendly format.
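The gist itself is not reproduced here, so the following is a rough sketch of the approach rather than the actual script. The field names (`status`, `publishDate`, `expiryDate`), the `approved` value, the go-live rule, and the epoch-millisecond date format are all assumptions made for illustration.

```python
import json
from datetime import datetime, timezone

# Hypothetical field names: the real publish-driving parameters are not listed in this article.
PUBLISH_FIELDS = ("status", "publishDate", "expiryDate")
DATE_FIELDS = ("publishDate", "expiryDate")


def readable(epoch_ms):
    """Convert epoch milliseconds (an assumed format) to an ISO 8601 UTC string."""
    return datetime.fromtimestamp(epoch_ms / 1000, tz=timezone.utc).isoformat()


def publish_report(page, now_ms):
    """Pick out only the publish-driving parameters and show them in a friendly form."""
    report = {}
    for field in PUBLISH_FIELDS:
        value = page.get(field)
        if value is None:
            report[field] = "MISSING"
        elif field in DATE_FIELDS:
            report[field] = readable(value)
        else:
            report[field] = value
    start, end = page.get("publishDate"), page.get("expiryDate")
    # Assumed rule: a page is live when it is approved and "now" falls inside its publish window.
    report["live"] = (
        page.get("status") == "approved"
        and start is not None
        and end is not None
        and start <= now_ms < end
    )
    return report
```

Running `json.dumps(publish_report(page, now_ms), indent=2)` on a response from each stack puts the few relevant values side by side, which is much quicker than scanning the full JSON object by hand.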

These were just some of my experiences. But you get the idea. Don’t you?

Summary

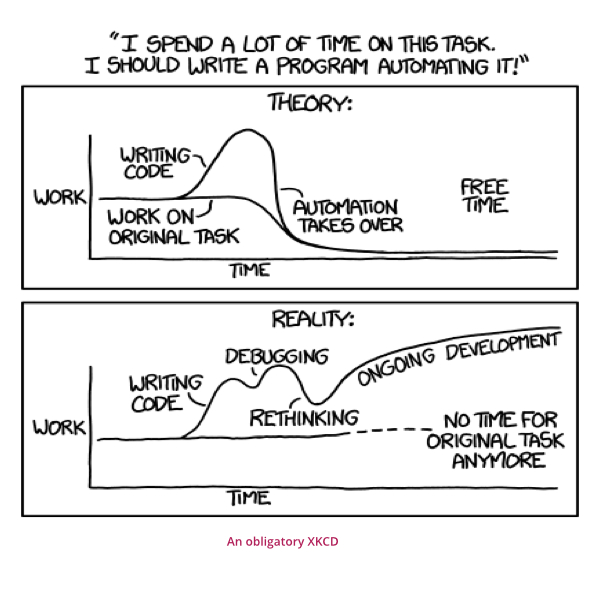

Quite a few of us hesitate to try these kinds of things because we want to finish the task at hand quickly. But in my experience, a little extra time spent on automating the things we do over and over can save a lot of time and effort in the long run.

Certainly there is a cost involved in creating and maintaining such scripts. But I favour automating a task over risking human error.

There are a lot of things in a project that we could try to automate. Each one takes time to build and effort to maintain, but it also brings benefits. A cost-value analysis of everything you would want to automate is therefore very important: it helps in prioritising all the automation work that is possible for the product under test.

It shouldn’t have to be said – check the automation scripts you write into source control. Share the love – make sure all of your team members use the same process to perform routine tasks, and that they can contribute fixes and improvements.

To summarize, here are the takeaways:

Think before you repeat!

If you are doing the same thing over and over again, stop and think, “Do I really need to repeat the same steps again?” That task could be a good candidate for automation.

Don’t try to automate everything at once!

When you are writing a script, take baby steps. Think, “What is the minimal thing I need right away?” Keep building on top of it as the tasks get more complicated.

Be patient!

You will struggle to find what you need, so be patient and keep working until you get it right! Don’t give up; there will be light at the end of the long tunnel. ;)

Automate all the things!

Well, it’s fun! You reduce the time you spend on repetitive tasks, speed things up and learn along the way! :)

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.