Moving to the Phoenix Server Pattern - Part 1: Introduction

This is part 1 of a series. Read part 2 and part 3.

Snowflakes Aren’t Always Beautiful

‘Snowflake’ servers are long-running servers that have evolved from their first configured state. As a result of being modified over time to keep up with system updates and other configuration changes, they can become quite unique and difficult to reproduce. After a while, nobody is quite sure what the exact state of the servers is, and changing one thing breaks another, until, quite frankly, they become a brittle mess no team wants to deal with.

The Thoughtworks digital team had been running on snowflake servers for a while and ran into all the issues described above. Security updates were applied weekly to each snowflake server individually, and after a while things began to break as a result of the configuration changes. The more we tried to fix them, the more things broke. We spent a lot of time fixing servers.

One example of security updates and other factors combining to cause us pain was when an update triggered a Ruby upgrade. Upgrades like this usually shouldn’t be a problem, but for our team it caused a lot of pain and cost a lot of time.

Our application code required an older version of Ruby. The update, which was applied directly on the live servers, replaced that older version with a newer one. The application broke because the older Ruby version was no longer on our servers, and our PPA provider had deleted its copy of it. We had to switch over to our disaster recovery server, which is usually one application version behind the live servers, while we fixed the dependencies between the application code and the servers’ Ruby version.

While we spent time fixing servers and changing configuration, people were rolling off the team and others were joining, which meant the team was losing context on the overall state of the project’s servers. No one on the team had confidence in their knowledge of the state of the servers. We got to a point where the servers had drifted from their original configuration in our Puppet files, because of the security updates and a bad habit we had developed of applying fixes for issues directly on the boxes instead of through our automated configuration tools.

One day an outage on our live servers required us to replace them with new servers. We feared one of our servers had been compromised: it was under attack and was dying while we watched and probed, running into issues the other live servers were not facing. We couldn’t understand why this was happening, partly because all the live servers were so different. We were not very confident in our ability to recreate our production servers.

After spending a lot of time and effort creating new servers just like the old ones, the team decided that the Phoenix Server Pattern was the way to go. After several months of hard work, delivering outwardly visible value for the business while changing the project’s infrastructure, we switched over to phoenix servers, which we build as immutable servers.

Phoenix to the Rescue

According to legend, the phoenix dies in a great blaze of fire and is reborn from its ashes. As Martin Fowler recommends, “A server should be like a phoenix, regularly rising out of the ashes.” The digital team doesn’t literally burn its servers on a regular basis, especially since they are cloud servers, but we regularly create new servers for our website from a known state and delete the old ones.

Our Process

On the digital team, we use Puppet as our automated configuration tool. Whenever we want to make configuration changes on our servers, we make those changes in Puppet and push them through to our continuous integration server. This triggers a ‘create base image’ step, which builds a base image using Packer and provisions it using Puppet. Security updates from the OS’s package manager are then applied to the base image, and our first pre-production environment pipeline is kicked off.

In its first step, the pipeline creates a new cloud server based on the latest base image. On that server, we deploy the latest version of the website application, environment-specific configuration, and our in-house content service, Hacienda. We then verify through automated tests that the build is viable, and replace the current server under our load balancer with the verified server.
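The pipeline steps above can be sketched in a few lines of Python. This is a minimal, in-memory illustration, not the team’s actual pipeline code: `Server`, `LoadBalancer` and `run_pipeline` are hypothetical names, no real cloud or load balancer API is called, and `verify` stands in for the automated test suite. The property it tries to show is that every build gets a brand-new server from the latest base image, and that server only goes live after verification.

```python
from dataclasses import dataclass, field
from itertools import count
from typing import Callable, Optional

_server_ids = count(1)  # hypothetical stand-in for IDs assigned by a cloud provider


@dataclass
class Server:
    """A hypothetical cloud server created from a base image."""
    image_id: str
    server_id: int = field(default_factory=lambda: next(_server_ids))
    deployed: dict = field(default_factory=dict)


@dataclass
class LoadBalancer:
    """A hypothetical load balancer fronting a single live server."""
    active: Optional[Server] = None

    def swap(self, new: Server) -> Optional[Server]:
        """Put `new` behind the balancer and return the server it replaced."""
        old, self.active = self.active, new
        return old


def run_pipeline(latest_image: str, app_version: str, env_config: dict,
                 lb: LoadBalancer, verify: Callable[[Server], bool]) -> Optional[Server]:
    # 1. Create a brand-new server from the latest base image (never patch the old one).
    server = Server(image_id=latest_image)
    # 2. Deploy the website, environment-specific configuration and the content service.
    server.deployed = {"website": app_version, "config": env_config,
                       "content_service": "Hacienda"}
    # 3. Automated tests decide whether this build is viable.
    if not verify(server):
        raise RuntimeError(f"server {server.server_id} failed verification")
    # 4. Swap the verified server in and hand the replaced server back to the caller.
    return lb.swap(server)


lb = LoadBalancer()
run_pipeline("base-image-7", "1.0", {"env": "staging"}, lb, verify=lambda s: True)
old = run_pipeline("base-image-7", "1.1", {"env": "staging"}, lb, verify=lambda s: True)
```

Returning the replaced server, rather than deleting it inside the pipeline, leaves room for a different deletion policy in each environment. A failed verification raises before the swap, so the current live server stays in place.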

When we make application changes and push them through our pipelines, we do not create a new base image. We do, however, create a new cloud server based on the latest base image, then run through the deployment cycle described above. In pre-production environments, old servers are deleted automatically once they have been replaced with new servers under the load balancer. In production, deletion is automated but has to be triggered manually, to make the deletion step deliberate.
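The rules in this paragraph can be written as a small planning function. It is a hypothetical sketch: `plan_build` and the step names are illustrative labels rather than stages of a real CI tool, and it assumes, as described above, that a base image is rebuilt only when configuration changes.

```python
def plan_build(configuration_changed: bool, environment: str) -> list[str]:
    """Return the (hypothetical) steps a push goes through in one environment."""
    steps = []
    # Only configuration changes produce a new base image; application-only
    # changes reuse the latest image that already exists.
    if configuration_changed:
        steps += ["build base image with Packer", "provision image with Puppet",
                  "apply OS security updates"]
    # Every build, of either kind, gets a fresh server from the latest image.
    steps += ["create server from latest base image", "deploy", "verify",
              "swap under load balancer"]
    # Pre-production deletes the replaced server automatically; production runs
    # the same automated deletion only when someone deliberately triggers it.
    if environment == "production":
        steps.append("wait for manual trigger, then delete old server")
    else:
        steps.append("delete old server")
    return steps


print(plan_build(configuration_changed=False, environment="staging"))
```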

The process I have described is the ‘Phoenix Server Pattern’: creating a new server each time a new build is triggered. The new server is created from a base image, which is rebuilt every time the servers’ configuration changes. As a result, there is no need to apply configuration changes after a server is created.

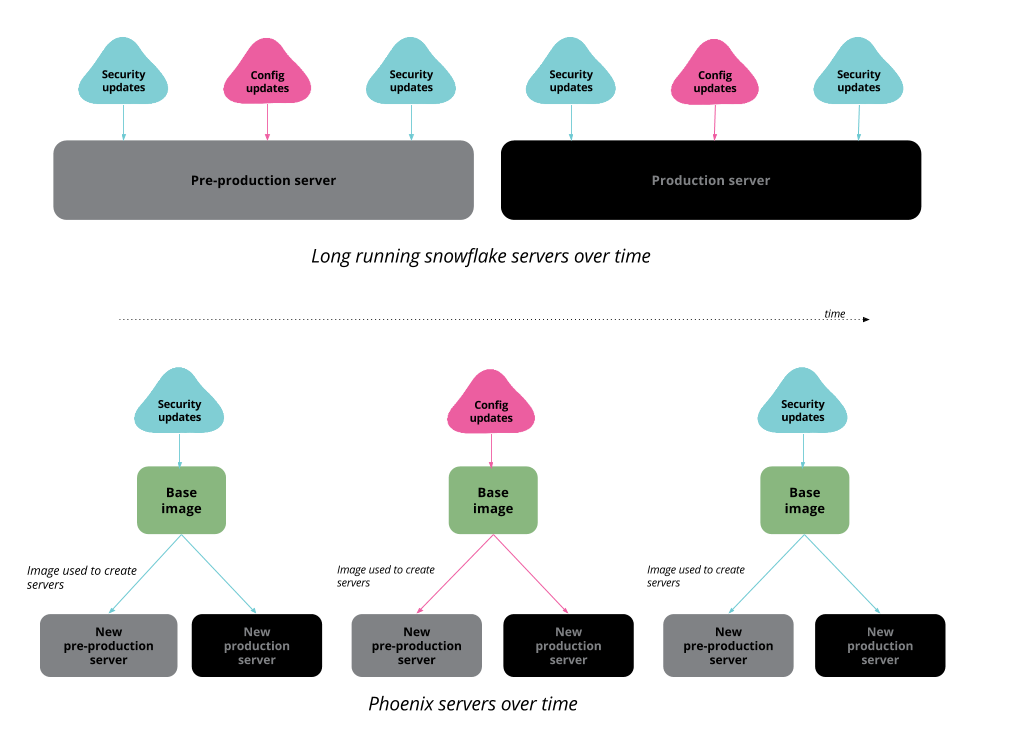

A simplified view of how we used to apply updates to our snowflake application servers over time, compared with how we do the same in our current phoenix process.

Why You Would Use Phoenix Servers

Using phoenix servers is not a cure-all for everything ops-related. However, they do help a team control configuration drift. In theory, when the state of a system is known, that knowledge is a reliable foundation for adding new infrastructure changes without breaking existing functionality. Of course, this can also be achieved by using automated configuration tools such as Puppet or Ansible to re-apply or add configuration, a process known as configuration synchronization.

The drawback of using only these tools to control drift is that only the state of files within the tools’ control can be guaranteed. Changes to files that are not managed by the configuration tools can become long-lived and may lead to drift. The Phoenix Server Pattern, on the other hand, boosts confidence in the state of the system: servers are built from scratch, so any ad-hoc changes are blown away and replaced with a known state from the automated configuration tool. Using immutable servers through the Phoenix Server Pattern gives our team much more confidence when adding configuration updates.
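The difference between configuration synchronization and rebuilding can be shown with a deliberately simplified model in which a server is just a dictionary of file paths and contents. The paths, versions and function names are hypothetical. Real tools such as Puppet can be configured to manage more than this model suggests; the sketch only illustrates the default case where files outside the tool’s control are left untouched.

```python
# Hypothetical model: a server's state is a mapping from file path to contents.
MANAGED = {  # files under the configuration tool's control (hypothetical paths)
    "/etc/nginx/nginx.conf": "v2",
    "/etc/app/app.yml": "v5",
}


def configuration_sync(server_state: dict, managed: dict) -> dict:
    """Re-apply the managed files; files the tool does not manage are left alone."""
    synced = dict(server_state)
    synced.update(managed)
    return synced


def phoenix_rebuild(managed: dict) -> dict:
    """Create a new server from the known state; nothing on the old box carries over."""
    return dict(managed)


# A snowflake with a hand-edited managed file and an ad-hoc fix applied on the box.
drifted = {**MANAGED, "/etc/app/app.yml": "hand-edited", "/opt/hotfix.sh": "ad-hoc fix"}

synced = configuration_sync(drifted, MANAGED)  # managed files restored, hotfix survives
rebuilt = phoenix_rebuild(MANAGED)             # exactly the known state
```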

Applying security updates is now quite easy. The weekly security updates to every snowflake server that gave us so many problems are a thing of the past. With phoenix servers, the security updates are applied to the base image. As a result, every server we create, from our pre-production environments to production, has the same versions of the updates, and any breaking changes are more likely to surface earlier in the delivery cycle rather than later (in production).

Applying security updates from the OS’s package manager means we may still see some drift from the original configuration files. We do not control the effects these updates have on our servers, and we do not know about any knock-on effects until they happen. In the snowflake world, we could not predict how security updates would affect a particular server, as the servers were all different. With phoenix servers, however, we are better able to mitigate the effects of updates, if any, since all the servers have the same configuration, including security updates.

Before we moved to the Phoenix Server Pattern, we could not simply ask for a server that looked exactly like the one in our showcase environment, for example to spike out a new feature for our application. In the phoenix server world, servers are a disposable commodity. It is easy to use an older server for a spike, or to create a new one configured just like the others, without worrying about disrupting the current workflow or bringing down the entire project operation.

Disaster recovery is so beautiful with phoenix servers. Imagine a critical bug somehow makes it to a production environment despite all of a team’s tests. While the team works on a forward fix, it is easy to replace the buggy server with the previous known-good server, so users get the best experience possible.

In the past, we had a disaster recovery server that was always one build behind the live servers. But as a snowflake server, it was quite different from the production servers, because of the various updates applied to it over time. Swapping in phoenix servers rather than snowflake servers when a rollback is needed gives the team more confidence about the state of the live servers. Rolling back with phoenix servers is easy and painless, because the Phoenix Server Pattern is all about swapping servers in and out.
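Keeping the previous known-good server around is what makes this kind of rollback cheap. The sketch below assumes a hypothetical `LiveSlot` that records the live server and the servers it replaced; rolling back swaps the most recent previous server back in. It does not model a real load balancer or cloud API.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Release:
    """Hypothetical record of a verified phoenix server and the build it runs."""
    server_id: str
    build: int


@dataclass
class LiveSlot:
    """Tracks the live server and the known-good servers it replaced."""
    live: Optional[Release] = None
    previous: List[Release] = field(default_factory=list)

    def release(self, new: Release) -> None:
        # Keep the outgoing server (do not delete it yet) as a rollback target.
        if self.live is not None:
            self.previous.append(self.live)
        self.live = new

    def rollback(self) -> Optional[Release]:
        # Swap the most recent known-good server back in; return the buggy one.
        if not self.previous:
            raise RuntimeError("no previous server to roll back to")
        buggy, self.live = self.live, self.previous.pop()
        return buggy


slot = LiveSlot()
slot.release(Release("server-a", build=41))
slot.release(Release("server-b", build=42))  # build 42 turns out to be buggy
slot.rollback()                              # server-a (build 41) is live again
```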

Why You Might Not Want Phoenix Servers

The phoenix pattern is a beautiful thing, but we have had to deal with some cons. Practicing continuous integration alongside continuous delivery means that we create several phoenix servers every day. Depending on how often older servers are deleted, creating many servers daily means more resource usage, such as CPU and memory, on our cloud provider, which increases cost. Keeping both snowflake and phoenix servers running while we moved over to phoenix servers, in addition to keeping old phoenix servers around, tripled our cloud provider bill.
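How much the extra servers cost depends mostly on how long each one is kept. One way to reason about this, using a general queueing result (Little’s law) rather than anything the team measured, is that in steady state the average number of servers alive equals the rate at which they are created multiplied by how long each one lives. The function names and numbers below are made up for illustration, and a flat per-server daily price is assumed.

```python
def average_live_servers(created_per_day: float, lifetime_days: float) -> float:
    """Little's law (steady state): average number alive = creation rate x average lifetime."""
    return created_per_day * lifetime_days


def daily_server_cost(created_per_day: float, lifetime_days: float,
                      cost_per_server_day: float) -> float:
    """Approximate daily spend on servers, assuming a flat per-server daily price."""
    return average_live_servers(created_per_day, lifetime_days) * cost_per_server_day


# Hypothetical numbers: six phoenix servers created per day.
print(average_live_servers(6, 0.5))  # deleted after half a day: 3.0 live on average
print(average_live_servers(6, 5))    # kept for five days: 30 live on average
```

Under these assumptions, keeping old servers ten times longer means paying for roughly ten times as many live servers, which is why the deletion policy matters as much as the build frequency.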

Creating servers for every build (instead of just applying changes) increases the time it takes a change to get from a local development machine to our production stage. If we had to push a quick change to our live servers, it would take about five extra minutes per pipeline with phoenix servers compared with snowflake servers. The team is currently working on ways to reduce the time it takes a change to go live. In the meantime, this is a small price to pay compared with having developer pairs spend a major part of their days managing snowflake servers instead of writing application code, which is what was happening to us.

Acknowledgements: Thanks to Laura Ionescu, Pablo Porto Veloso, Ben Earlham, Dan Moore, Matt Chamberlain, and Darren Haken for contributions to the article. Our next article discusses things to consider before switching over to the Phoenix Server Pattern.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.