In part two of this blog series, we dive into the architecture of the machine learning methods we apply in the RegretsReporter analysis. To get the most out of this blog, you may need to already understand the fundamentals of machine learning and linear algebra, but we’ll do our best to make this interesting for everyone.

In our previous post (posted on both the Mozilla and Thoughtworks blogs), we discussed our experimental study to investigate user control on the YouTube platform. Measuring the degree to which YouTube respects your feedback depends on our ability to determine how similar any two YouTube videos are to each other. We need to do this for hundreds of millions of pairs of videos, so we rely first on research assistants to make this determination for a smaller number of video pairs, and then on a machine learning model to handle the rest.

Defining similarity

As you can imagine, there are many variables to consider to gauge whether two videos are similar or not. To someone uninterested in video games, two videos about different video games may appear similar, while to a fan the difference is easily recognizable. There are many subtleties, so we are not able to precisely describe what we mean by “similar”, but we developed a policy to guide our research assistants in classifying pairs. The policy defines classes of similarities, such as similar subject matter or similar political or social opinions, and explains how to assess whether video pairs are similar in each class. This policy helps ensure that the classification is consistent.

Research Assistants

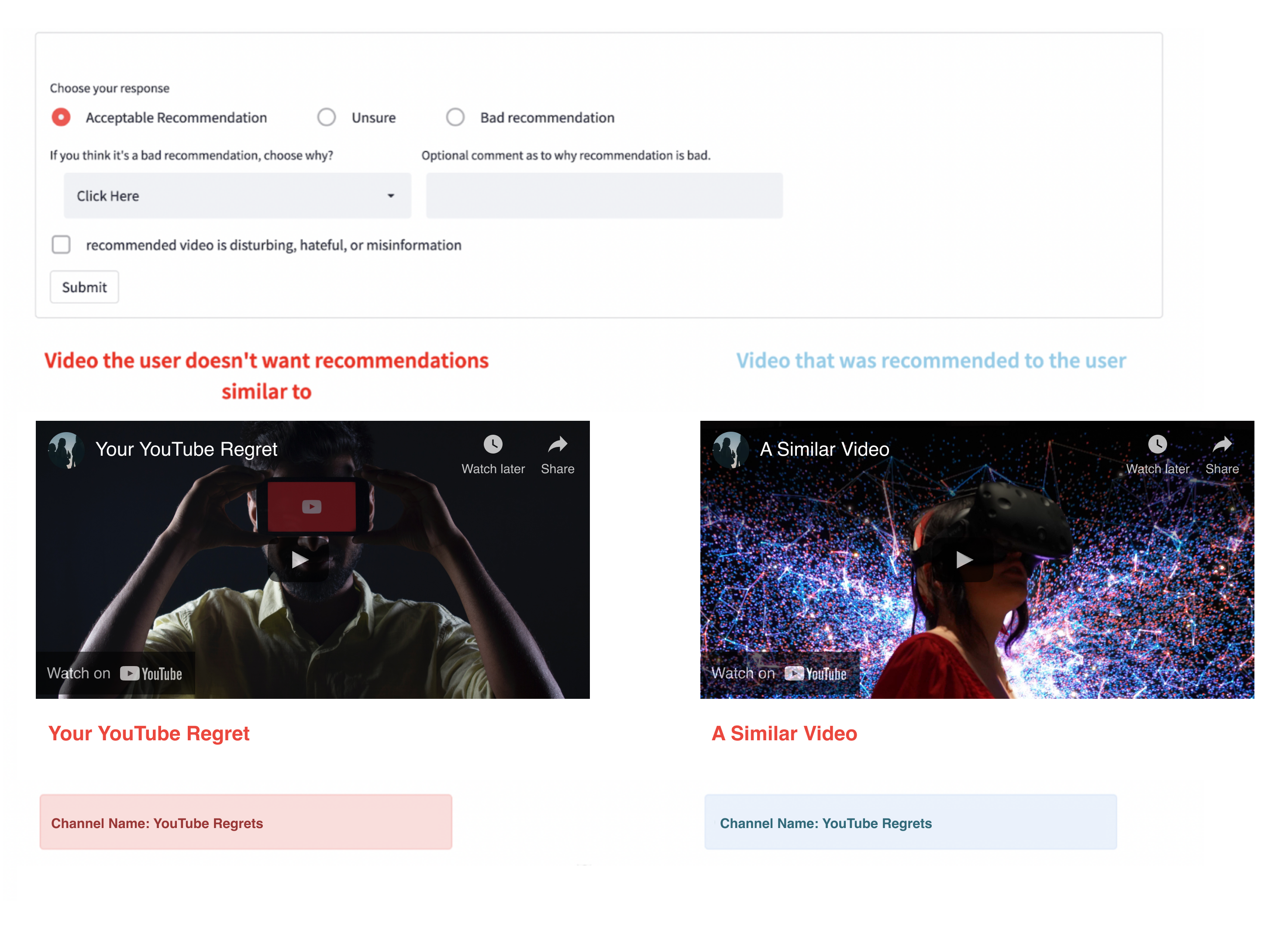

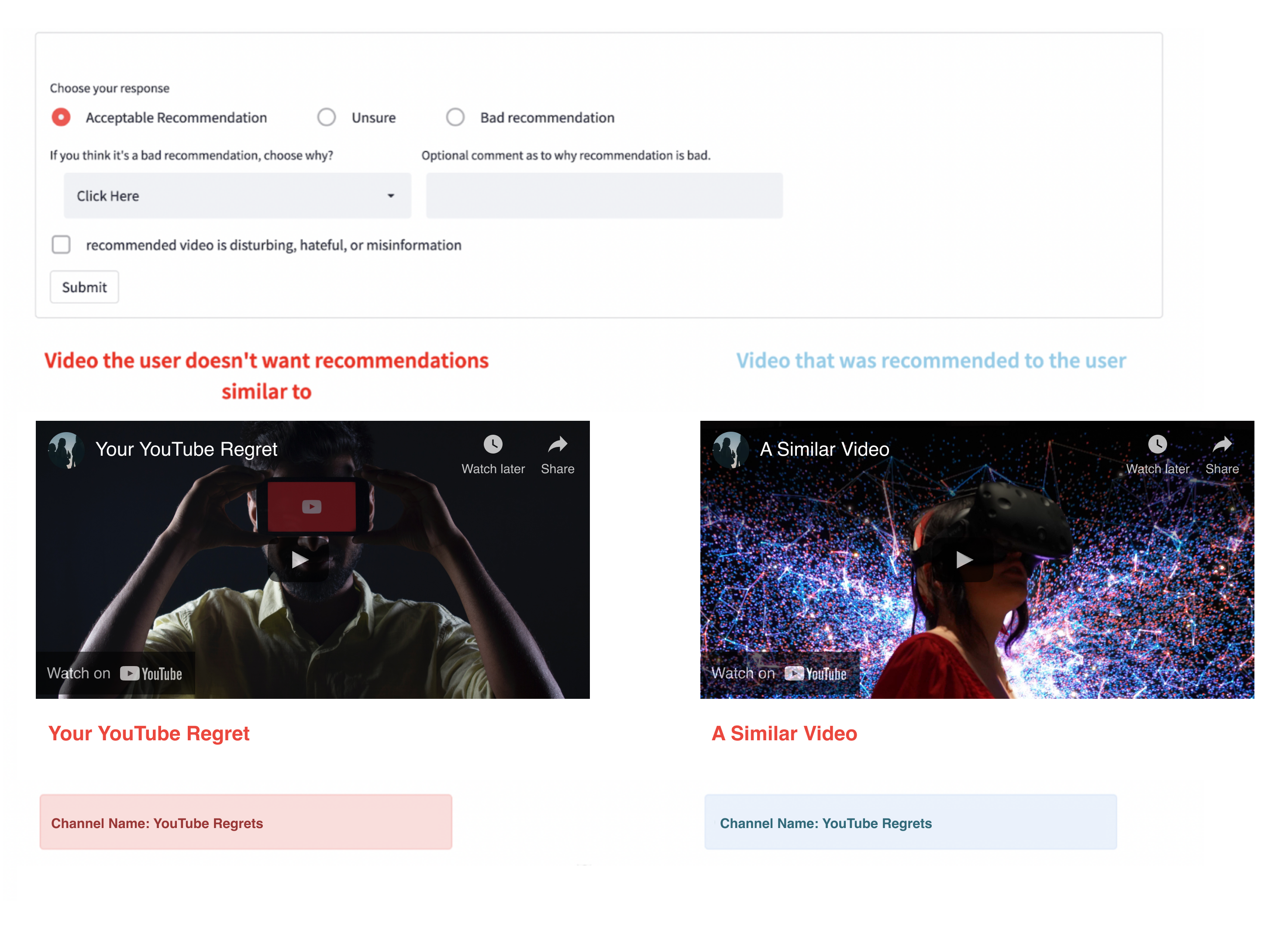

We contracted 24 research assistants, all graduate students at Exeter University, to each spend 20 hours classifying video pairs using a classification tool that we developed. The tool displays the thumbnail, title and other metadata for each video. The thumbnails are clickable for cases where the research assistant needs to watch either or both videos to make an assessment. Radio buttons then allow the research assistant to specify whether the pair is similar or not and, if so, in what ways. As discussed below, these research assistants are partners in our research and will be named for their contributions in our final report. They all read the classification policy and worked with Dr. Chico Camargo, who ensured they had all the support they needed to contribute to this work. They classified a total of 44,434 pairs sampled from the rejected videos from our YouTube Regrets participants and their subsequent recommendations.

Due to the global reach of the experiment, our dataset contains videos in a number of different languages and we wanted to make sure that our similarity model worked across different languages. In fact, we used the gcld3 model to automatically detect video language from the description, and we found 102 different languages! To allow for classification across languages, we sought out research assistants with varied language skills, although we also allowed them to classify pairs in languages they didn’t understand by using translation tools or in cases where language understanding was not necessary to make a classification.

General modeling approach

Next, we use this labeled data to train a model that will be able to predict whether two YouTube videos are similar or not. Thus the input for the model is a set of two videos and the output is a single binary value indicating whether the videos are similar or not. However, we allow our model to express uncertainty, and as a result it actually outputs a similarity probability.

If it outputs close to 1, it is very confident that the videos are similar

If it outputs close to 0, it is very confident that the videos are not similar

If it outputs close to 0.5, it is uncertain

We said above that the input of the model is a pair of videos. It is certainly possible to build a model that runs directly on the full audio and video tracks, but such models are extremely computationally intensive. The computational resources required, as well as the time from data scientists and data engineers, would be expensive, not to mention the concern about carbon emissions.

In many cases, a simpler approach can be adequate. We started with a simpler model, which turned out to be extremely effective. It is very unlikely that a more complex model would significantly improve on the model we used.

In our model, we represented each video as a set of elements we extract:

The video title

The video channel

The video description

The video transcript (as provided by the content creator, or auto-generated by YouTube)

The video thumbnail

Training the model

Thanks to our research assistants, we had 44,434 labeled video pairs to train our model (although about 3% of these were labeled “unsure” and so unused). For each of these pairs, the research assistant determined whether the videos are similar or not, and our model is able to learn from these examples.

Since this project operates without YouTube’s support, we are limited to the publicly available data we can fetch from YouTube. The extension that our participants used would send us video IDs for all videos that the participant rejected or that were recommended to the participant. When possible, the extension also provides some of the information that our model uses, such as video title and channel, but this data is not consistently available. Thus, for each video that we analyze, we use automated methods to acquire the needed data (title, channel, description, transcript, and thumbnail) from the YouTube site directly.

With the features data acquired from YouTube and the labels provided by our research assistants, we were ready to train the model.

Language models

At the core of most modern natural language processing (NLP) techniques are “language models”, which are often based on the “transformer” architecture. Language models are trained by processing enormous volumes of text to produce a statistical understanding of the language(s) they were trained on. There are diverse applications for such language models, but in our case we apply them for semantic similarity: they can determine to what degree two texts are about the same thing.

There are two fundamental approaches to semantic similarity in NLP: bi-encoders and cross-encoders. Bi-encoders take a text as input and produce a vector embedding - you can think of this as a mathematical specification of a point in space. The model is designed such that similar texts will correspond to points in space that are close to each other. The word2vec model is one of the first and simplest examples of this idea.

The alternative approach is the cross-encoder, which adds a classification layer on top. A cross-encoder takes two texts as input and directly produces an estimate of how similar those texts are. While bi-encoders can be more computationally efficient for certain applications, cross-encoders tend to be more accurate and are easy to fine-tune for a new application.
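To make the efficiency trade-off concrete, here is a minimal sketch that only counts model forward passes. The function names and toy titles are hypothetical and not part of our pipeline. It assumes bi-encoder embeddings are cached and reused across pairs, while a cross-encoder has to read both texts together for every pair.

```python
from itertools import combinations

def bi_encoder_passes(pairs):
    """A bi-encoder embeds each distinct text once; the embedding is then reused."""
    return len({text for pair in pairs for text in pair})

def cross_encoder_passes(pairs):
    """A cross-encoder reads both texts together, so it runs once per pair."""
    return len(pairs)

# Hypothetical toy data: every pairing of five titles.
titles = ["title A", "title B", "title C", "title D", "title E"]
all_pairs = list(combinations(titles, 2))

print(len(all_pairs), bi_encoder_passes(all_pairs), cross_encoder_passes(all_pairs))
```

With a bi-encoder, each pair still needs a distance computation, but that is far cheaper than a forward pass through a language model.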

Fine-tuning is important as there are often differences between the sorts of text that a language model is trained on and the sort of text that must be analyzed for a specific application. For example, a model might be trained on Wikipedia articles, but then used to analyze transcripts of YouTube videos. Using fine-tuning, we can teach our language models to better function with the sorts of text on which we apply them.

It’s worth noting that language models can be trained to work in a single language, or in multiple languages. In our work, we use multilingual models that cover the languages of the vast majority of the videos we analyze. See the ethics section below for more discussion of this.

Basic model

As a simple first approach, we used a bi-encoder to obtain vector embeddings for the title, description, and transcript of each video in our data. We use a similar model that works on images to obtain a vector embedding for the video thumbnail. Then, for each video pair, we compute a set of features:

The cosine similarity (a measure of how close they are) of the title embeddings

The cosine similarity of the description embeddings

The cosine similarity of the transcript embeddings

The cosine similarity of the thumbnail embeddings

A boolean feature indicating whether the two videos are from the same channel

We then train a logistic regression model using these five features to predict whether the pair of videos was similar or not. We also tried xgboost as an alternative to logistic regression but did not find any significant performance improvement.
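The feature construction and prediction step described above can be sketched roughly as follows. This is an illustration in plain Python, not our actual code: the 2-dimensional embeddings, the weights and the bias are all made-up values (real embeddings have hundreds of dimensions, and real weights come from training).

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # convention chosen for this sketch (an assumption)
    return dot / (norm_u * norm_v)

FIELDS = ("title", "description", "transcript", "thumbnail")

def pair_features(video_a, video_b):
    """Four cosine similarities plus a same-channel flag."""
    feats = [cosine_similarity(video_a[f], video_b[f]) for f in FIELDS]
    feats.append(1.0 if video_a["channel"] == video_b["channel"] else 0.0)
    return feats

def predict_similarity(features, weights, bias):
    """Logistic regression: the sigmoid of a weighted sum of the features."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical toy embeddings for two videos from the same channel.
video_a = {"title": [1.0, 0.0], "description": [0.6, 0.8],
           "transcript": [0.0, 1.0], "thumbnail": [1.0, 1.0], "channel": "chan-1"}
video_b = {"title": [1.0, 0.0], "description": [0.8, 0.6],
           "transcript": [1.0, 0.0], "thumbnail": [1.0, 1.0], "channel": "chan-1"}

# Hypothetical parameters; in practice these are learned from the labeled pairs.
HYPOTHETICAL_WEIGHTS = [2.0, 1.0, 1.5, 0.5, 1.0]
HYPOTHETICAL_BIAS = -3.0

features = pair_features(video_a, video_b)
probability = predict_similarity(features, HYPOTHETICAL_WEIGHTS, HYPOTHETICAL_BIAS)
print(features, round(probability, 3))
```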

As a note, transcripts are unavailable for a portion of our videos, so we actually have two versions of the model - one for pairs in which both videos have transcripts and one for pairs in which at least one video is missing the transcript.
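Choosing between the two versions could look something like the sketch below. The dictionary layout and the model keys are hypothetical names used only for illustration.

```python
def select_model_key(video_a, video_b):
    """Pick the model version based on whether both videos have a transcript."""
    both_have_transcripts = bool(video_a.get("transcript")) and bool(video_b.get("transcript"))
    return "with_transcripts" if both_have_transcripts else "without_transcripts"

# Hypothetical videos: the second one has no transcript available.
video_with = {"title": "A", "transcript": "some transcript text"}
video_without = {"title": "B", "transcript": None}

print(select_model_key(video_with, video_with))
print(select_model_key(video_with, video_without))
```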

This model functioned and was able to determine if two videos are similar or not. It was better than a random guess, but the accuracy was quite poor. So we looked for ways to improve the model.

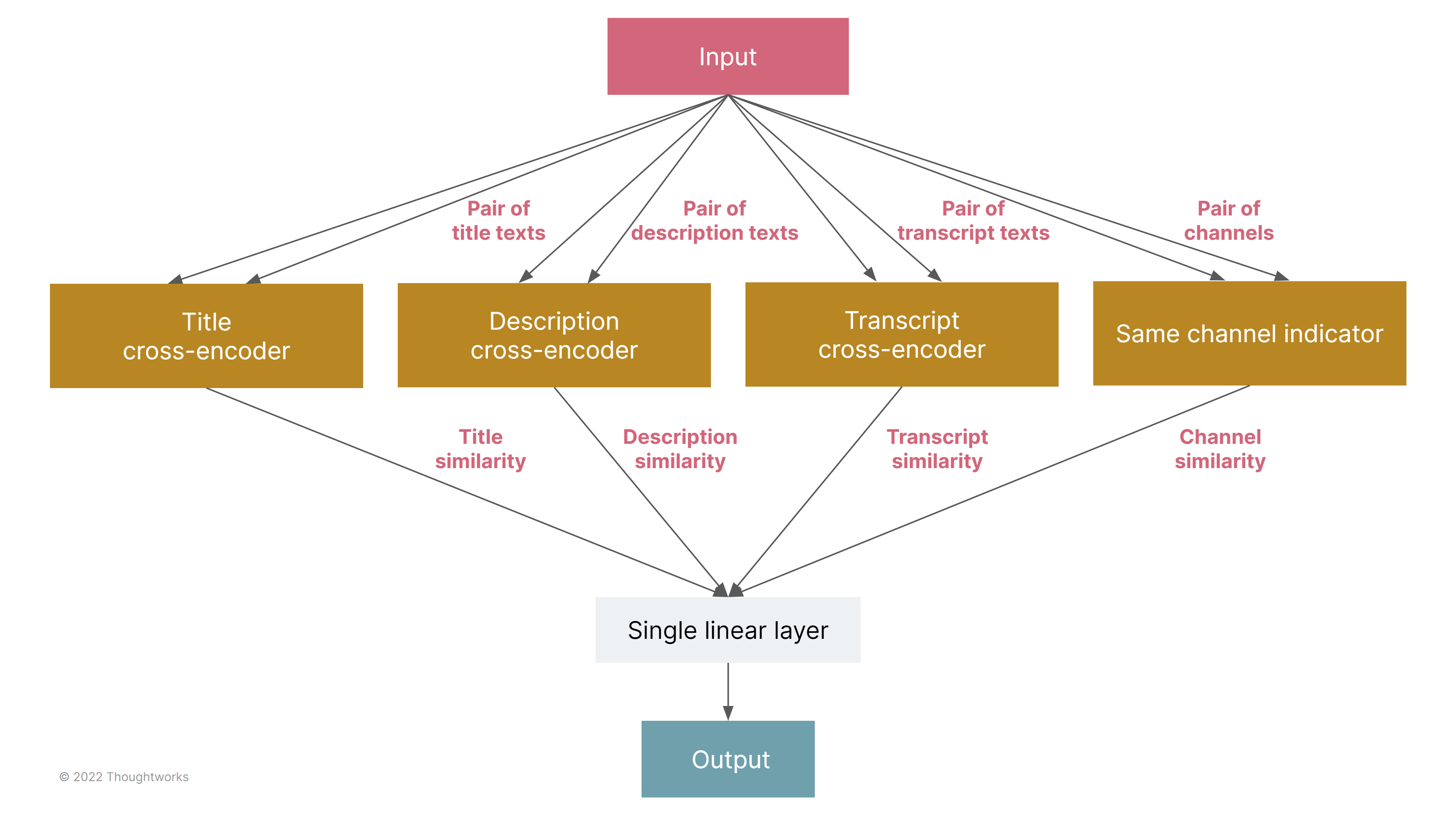

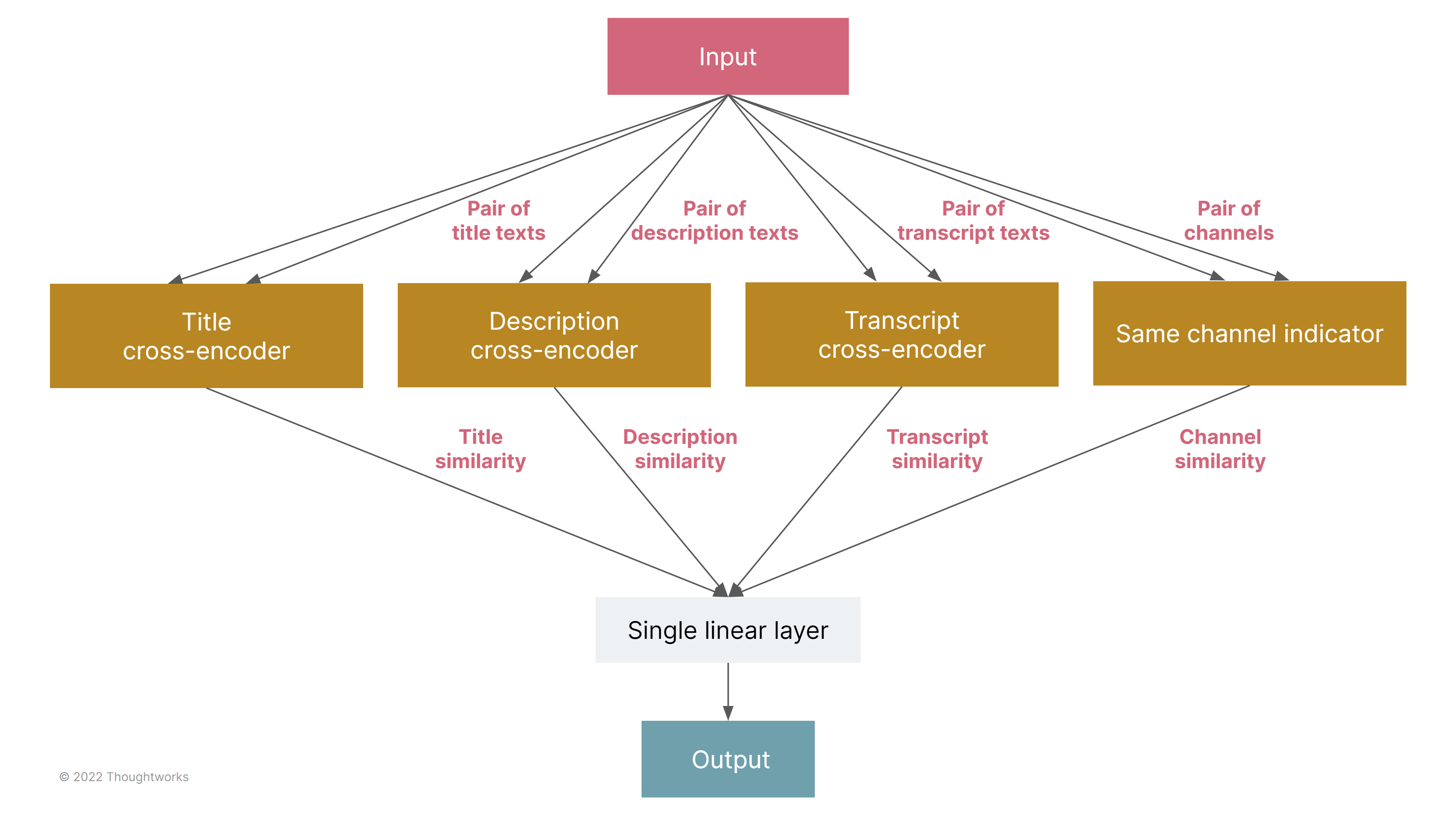

Unified model

As illustrated below, the unified model replaces the bi-encoders from the first model with a set of cross-encoders for determining the similarity of each of the text components of the videos, which are then connected directly, along with a channel-equality signal into a single linear layer with a sigmoid output. This final layer is equivalent to the logistic regression from our first model.
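One way to write the final layer down, under our own choice of notation: let $s_t$, $s_d$ and $s_r$ be the cross-encoder outputs for the two titles, descriptions and transcripts, and let $c \in \{0, 1\}$ indicate whether the channels match. The symbols and the exact form of the cross-encoder outputs are assumptions made for illustration.

$$
p(\text{similar}) = \sigma\!\left(w_t\, s_t + w_d\, s_d + w_r\, s_r + w_c\, c + b\right), \qquad \sigma(z) = \frac{1}{1 + e^{-z}}
$$

The weights $w_t, w_d, w_r, w_c$ and the bias $b$ are learned together with the cross-encoders. This is the same functional form as a logistic regression over the inputs $(s_t, s_d, s_r, c)$. For the version of the model without transcripts, the $w_r\, s_r$ term would presumably be dropped.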

The motivation for the unified model was twofold:

Cross-encoders tend to have better accuracy than bi-encoders, so we wanted to switch from bi-encoders to cross-encoders

We wanted to individually fine-tune the cross-encoders, as video titles, descriptions, and transcripts tend to each have different sorts of text and are all probably quite different from the sort of text that most language models are trained on.

Fine-tuning is most effective with labeled data. If we have a set of text pairs that are labeled as similar or dissimilar, we can use them for fine-tuning. In our case, we have labeled data, but we have labeled video pairs, not labeled text pairs. We may know that two videos are similar, but that does not necessarily mean that the titles of those two videos are similar. This means that we can’t directly fine-tune a cross-encoder. But with our unified model, we can train the whole model based on the labeled video pairs and the training process will fine-tune the cross-encoder for each text type simultaneously as part of the larger model. That’s why we call the whole model the “unified model”.

Model Evaluation

The model was evaluated for accuracy, precision, recall, and AUROC. Accuracy tells you the percentage of correct predictions over all the predictions, and it's a useful metric when all classes are equally important and your data is balanced. Precision shows the proportion of positive predictions that are truly positive. Conversely, recall measures the model's capability to detect positive samples: it is the proportion of actual positive examples that are classified as positive. Generally, the most important metric or metrics will depend on the application.
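As a small worked illustration of these three definitions, the following sketch computes them from a confusion matrix. The labels and predictions are made-up toy values, not our data.

```python
def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives for binary labels (1 = similar)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, fp, tn, fn

def classification_metrics(y_true, y_pred):
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if (tp + fp) else 0.0  # correct among predicted positives
    recall = tp / (tp + fn) if (tp + fn) else 0.0     # found among actual positives
    return {"accuracy": accuracy, "precision": precision, "recall": recall}

# Toy example: 4 similar pairs and 4 dissimilar pairs.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 0, 1, 1, 1]

metrics = classification_metrics(y_true, y_pred)
print(metrics)  # accuracy 0.625, precision 0.6, recall 0.75
```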

To get a better view of the overall performance of the model, we can also use a metric called AUROC. AUROC stands for Area Under ROC curve, while ROC stands for Receiver Operating Characteristic. This curve shows how well your model can separate positive and negative examples across all possible thresholds, and it can also help identify a good threshold for the separation. An excellent model has an area under the ROC curve close to 1.0, meaning it separates the samples well.
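AUROC has a useful equivalent reading: it is the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one, with ties counted as half. The sketch below computes it that way on made-up scores. It is quadratic in the number of examples, so it is only meant as an illustration of the definition, not as how one would compute it at scale.

```python
def auroc(labels, scores):
    """AUROC as the fraction of (positive, negative) pairs ranked correctly."""
    positives = [s for label, s in zip(labels, scores) if label == 1]
    negatives = [s for label, s in zip(labels, scores) if label == 0]
    if not positives or not negatives:
        raise ValueError("AUROC needs at least one positive and one negative example")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half
    return wins / (len(positives) * len(negatives))

# Toy example: one dissimilar pair (score 0.7) outranks one similar pair (score 0.6).
labels = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.3, 0.2]

print(auroc(labels, scores))  # 8 of the 9 positive/negative pairs are ordered correctly
```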

In the end, we mostly used AUROC for evaluating our models, both during training (on each epoch, with the validation dataset) and after training, with a separate test dataset. We did not see validation loss increase or validation AUROC decrease during training, so overfitting didn’t seem to be much of a problem. We also used automatic early stopping, which would have halted training if the model had started overfitting. On the final test set of 10% of the labeled training data, our models achieved the scores presented in the table below. It is difficult to compare these metrics to other methods, as most other work is designed for video retrieval rather than directly assessing similarity, but these metrics by far surpassed our expectations. However, they were still not quite accurate enough for analysis of the subtle differences in recommendation patterns in our study, limiting the model’s usefulness for that application.
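For readers unfamiliar with early stopping, a common patience-based variant can be sketched as follows. The monitored quantity (validation loss), the patience value and the loss numbers are all assumptions for illustration. We do not describe our actual stopping configuration here.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the epoch index at which training would stop, or None if it never stops.

    Training stops once the validation loss has failed to improve on its best
    value for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return None

# Hypothetical validation losses per epoch.
overfitting_run = [0.60, 0.45, 0.40, 0.41, 0.43, 0.50]
healthy_run = [0.60, 0.45, 0.40, 0.38, 0.37, 0.36]

print(early_stopping_epoch(overfitting_run))  # stops at epoch index 4
print(early_stopping_epoch(healthy_run))      # None: never triggered
```

The second run mirrors what we observed: validation loss kept improving, so the stopping rule never had to intervene.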

| Metric | Model with transcripts | Model without transcripts |
| --- | --- | --- |
| Accuracy | 91% | 91% |
| Precision | 92% | 95% |
| Recall | 91% | 87% |
| AUROC | 0.968 | 0.967 |

As mentioned earlier, transcripts are unavailable for a portion of our videos, so we actually had two versions of the model — one for pairs in which both videos have transcripts and one for pairs in which at least one video is missing the transcript.

Because our evaluation data excludes the approximately 3% of pairs rated as unsure, actual performance may be a few percent lower.
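A rough bound illustrates the size of this effect. Suppose, purely for illustration, that the unsure pairs did have a definite correct answer and that the model reached accuracy $a_u$ on them. Using the 91% accuracy measured on the remaining 97% of pairs:

$$
\text{acc}_{\text{all}} = 0.97 \times 0.91 + 0.03 \times a_u, \qquad 0 \le a_u \le 1
$$

$$
0.883 \approx 0.97 \times 0.91 \;\le\; \text{acc}_{\text{all}} \;\le\; 0.97 \times 0.91 + 0.03 \approx 0.913
$$

So even if the model got every unsure pair wrong, overall accuracy under this assumption would drop by about three percentage points, to roughly 88%.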

Performance by similarity type

As our research assistants provided a reason when they classified a video pair as similar, we can evaluate the model’s performance on different types of similarity. Below are the recall metrics by reason. Note that this is on all training and test data; however, as above, there is no evidence of overfitting. The model performs noticeably worse for “Same type of undesirable content”, probably because there are many ways in which video pairs can be similar in that way. The exceptionally high performance for “Same controversy” is notable as well and is probably attributable to consistent language being used when discussing a particular controversy.

| Similarity reason | Recall |
| --- | --- |
| Same controversy | 97% |
| Same persons | 90% |
| Same type of undesirable content | 79% |
| Similar opinion | 94% |
| Similar subject | 92% |
| Other | 56% |

Possible Model Enhancements

Additional Features

There are many possible features that could be added to the model:

The thumbnail image was not included in our final model as we had limited time and its value seemed too limited to justify the effort. This has not been thoroughly explored

There are methods (such as this example) which use data external to YouTube to get more information about YouTube videos. The linked example uses Twitter references to YouTube videos, combined with information about the ideology of a set of reference Twitter accounts, to estimate the ideological slant of a YouTube video

Instead of simply using channel equality as a feature, we considered developing (and still plan to develop) a channel embedding model using the channel co-recommendation data we’ve collected in our study

Our analysis shows that the time between reporting of the two videos in our data is a predictor of their similarity. This could be included in the model, at the cost of decreasing the generalizability of its application

Model architecture

Our experimentation suggested that our architecture is effective. However, it’s possible that a more complex model architecture, perhaps replacing the single linear layer with something more sophisticated, could improve performance.

Ethical concerns

Bias in language models

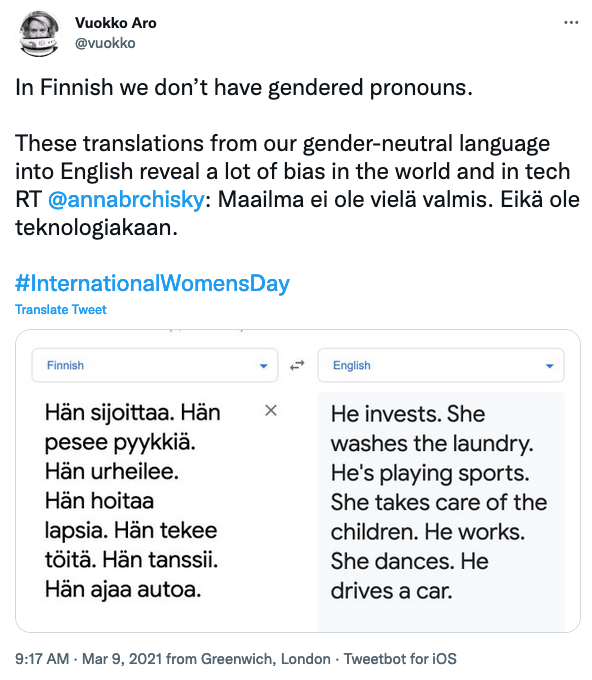

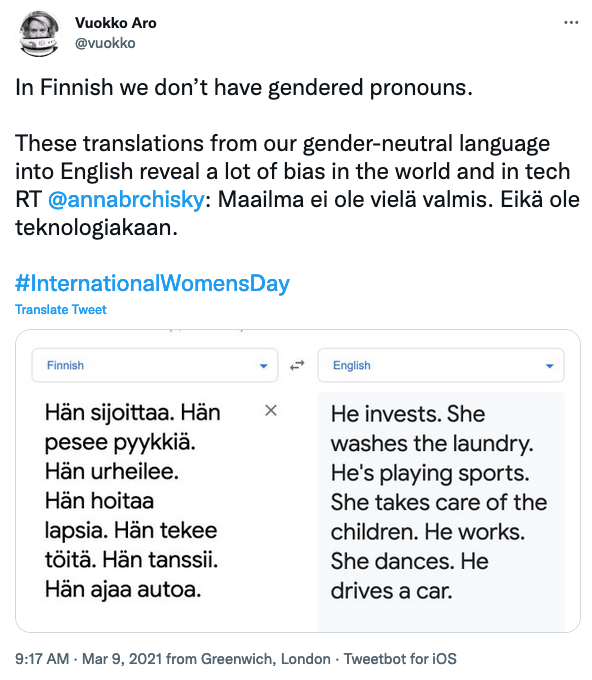

It has long been understood that AI language models encode the social biases that are reflected in the texts they are trained on. For example, when translating from languages with gender neutral pronouns to a language with gendered pronouns, Google Translate used to choose male pronouns for words that have a male bias and vice versa. For example:

In our case, the cross-encoders that we use to determine similarity of texts are also trained on texts that inevitably reflect social bias. To understand the implications of this, we need to consider the application of the model: to determine if videos are semantically similar or not. So the concern is that our model may, in some systematic way, think certain kinds of videos are more or less similar to each other.

For example, it’s possible that the models have encoded a social bias that certain ethnicities are more often involved in violent situations. If this were the case, it is possible that videos about people of one ethnicity may be more likely to be rated similar to videos about violent situations. This could be evaluated by applying the model to synthetic video pairs crafted to test these situations. There is also active research in measuring bias in language models, as part of the broader field of AI fairness.

In our case, we have not analyzed the biases in our model as, for our application, potential for harm is extremely low. Due to the randomized design of our experiment, any biases will tend to balance out between experiment arms and so not meaningfully affect our metrics. Where there is a likelihood of bias impacting our results is in the examples we share of similar video pairs. The pairs that our model helps us discover are selected in a possibly biased way. However, as we are manually curating such pairs, we feel confident that we can avoid allowing such biases to be expressed in our findings.

A more difficult issue is the multilingual nature of our data. We use the “mmarco-mMiniLMv2-L12-H384-v1” model, which supports the set of 100 languages covered by the original mMiniLMv2 base model, including English, German, Spanish and Chinese. However, it is reasonable to expect that the model's performance varies among the languages that it supports. The impact can vary: the model may fail either with false positives, in which it thinks a dissimilar pair is similar, or with false negatives, in which it thinks a similar pair is dissimilar. We performed a basic analysis to evaluate the performance of our model in different languages, and it suggested that our model performs well across languages, but potential differences in the quality of our labels between languages reduced our confidence in that result.

We do expect that bad YouTube recommendation rates may vary between different languages and geographic regions. Our previous findings showed that YouTube users in countries where English is not a primary language have a disproportionately high rate of unwanted recommendations. We have chosen not to report any analysis of differences in bad recommendation rates between languages and countries, as we are not confident that any such findings are due to real differences in YouTube’s recommender system rather than a product of our model’s more subtle inconsistent behavior between different languages.

Involvement of research assistants

Our research assistants performed labeling work that is often devalued in machine learning development and rarely adequately compensated. Our research assistants are partners in our research, were compensated at standard research assistant rates for students at Exeter University, and had support resources available in case of exposure to upsetting content.

Sharing

We intend to make the trained model publicly available in the near future. All code is available here.

Acknowledgments

We are grateful to Ranadheer Malla of the University of Exeter for his leadership in the development of the classification tool, Glenda Leonard of the Mozilla Corporation for her work in developing and executing the methods to acquire the needed data from YouTube, and our team of research assistants from Exeter that performed the classification work.
