Whenever I read, I have a habit of spotting parallels between the insights of a given book and some of my own daily practices. This happened recently when I was revisiting Kent Beck’s Extreme Programming Explained. Extreme Programming (XP), Beck writes, is based on five core values: Communication, Simplicity, Feedback, Courage and Respect. These are all, of course, important values, but I was particularly surprised to realize that each one correlates with automated test practice.

In this blog post I’ll demonstrate what I mean and show that each XP value has something important to say about tests and clean code.

Communication

Communication is an integral part of XP. When you’re dealing with complex systems, communicating intention and meaning is crucial. This is true in all aspects of your work, code included. One of the reasons for this is that developers spend more time reading code than writing it. This is where tests can be particularly powerful: they are often the quickest way to actually understand code. The wider benefits can be significant. If a developer has to spend time puzzling over a test to figure out what it means and what it does, they will have less time to spend on features and fixing bugs. In other words, their velocity drops.

One means of communication is how you name tests. Names can convey a rule or a behavior to the person reading them, which is particularly useful if you have a new team member. The name of a test should give a developer a clear clue about what exactly is being tested and how the target object is supposed to behave. It should be descriptive and should define the behavior: what should happen rather than how.

`should trigger mail alert job` --> does not make clear when the job should be triggered and when it should not.

`should trigger a mail alert job when time reaches threshold` --> defines clear intent.
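As a rough illustration of the difference, the two behaviors could be written as two separately named tests. This is only a sketch: `MailAlertPolicy`, its 30-minute threshold and the method names are hypothetical, and framework annotations such as `@Test` are left out so that the snippet stays self-contained.

```java
import java.time.Duration;

// Hypothetical policy, invented for illustration: an alert job is due
// once the elapsed time reaches the configured threshold.
class MailAlertPolicy {
    private final Duration threshold;

    MailAlertPolicy(Duration threshold) {
        this.threshold = threshold;
    }

    boolean shouldTriggerAlert(Duration elapsed) {
        return elapsed.compareTo(threshold) >= 0;
    }
}

// Each method name states the behavior and the condition under which it holds.
class MailAlertPolicyTest {
    private final MailAlertPolicy policy = new MailAlertPolicy(Duration.ofMinutes(30));

    void shouldTriggerMailAlertJobWhenTimeReachesThreshold() {
        check(policy.shouldTriggerAlert(Duration.ofMinutes(30)));
    }

    void shouldNotTriggerMailAlertJobWhenTimeIsBelowThreshold() {
        check(!policy.shouldTriggerAlert(Duration.ofMinutes(29)));
    }

    private static void check(boolean condition) {
        if (!condition) {
            throw new AssertionError("behavior described by the test name did not hold");
        }
    }
}
```

Read together, the two names document the rule from both sides, so a reader does not need to open the test bodies to learn when the job fires.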

The way we arrange test code should also improve readability. Two common patterns are Arrange, Act, Assert and Setup, Execute, Verify, Teardown. These guidelines make code more readable by dividing its structure in a way that makes it easier to know what is happening and where. Some languages and frameworks also provide DSLs (Given, When, Then) whose sole purpose is to give tests a skeleton.

    @Test
    void shouldReturnInterestBasisForLeapYear() {
        var clock = Clock.fixed(Instant.parse("2000-12-03T10:15:30.00Z"), ZoneOffset.UTC);
        var basisProvider = new LeapYearInterestBasisProvider(clock);
        // 1

        var currentInterestBasis = basisProvider.getCurrentInterestBasis();
        // 2

        assertThat(currentInterestBasis).isEqualTo(366);
    }

At markers 1 and 2, blank space separates the Arrange, Act and Assert steps. It helps to structure the code so that it can be read at a glance.
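The test above does not show `LeapYearInterestBasisProvider` itself. One possible shape, assumed here only so that the example can run, is a class that returns the number of days in the current year according to the injected clock. The `LeapYearInterestBasisProviderDemo` helper is likewise hypothetical.

```java
import java.time.Clock;
import java.time.Instant;
import java.time.LocalDate;
import java.time.ZoneOffset;

// Assumed implementation: the interest basis is the number of days
// in the year reported by the injected clock (365 or 366).
class LeapYearInterestBasisProvider {
    private final Clock clock;

    LeapYearInterestBasisProvider(Clock clock) {
        this.clock = clock;
    }

    int getCurrentInterestBasis() {
        return LocalDate.now(clock).lengthOfYear();
    }
}

class LeapYearInterestBasisProviderDemo {
    static int basisAt(String instant) {
        // Arrange: a fixed clock keeps the result independent of the real date
        var clock = Clock.fixed(Instant.parse(instant), ZoneOffset.UTC);
        var basisProvider = new LeapYearInterestBasisProvider(clock);

        // Act
        return basisProvider.getCurrentInterestBasis();
    }
}
```

Because the clock is passed in rather than read from the system, the same test can cover a leap year and a regular year just by changing the instant.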

Simplicity

Kent Beck calls simplicity “the most intensely intellectual of the XP values.” This is because it requires the most thought and consideration — ironically, simplicity is incredibly hard.

We tend to think about simplicity when we decide the scope of a project, saying things like “what's the simplest thing we can do right now that would possibly work?” This isn’t always straightforward, of course, and can lead to some debate. I have seen decisions change drastically when a team challenged what the “simplest thing” actually was! Just as simplicity makes project scope manageable, keeping test code simple makes it more manageable and more readable. To keep test code simple and clean, there are a number of principles that should be followed:

The test name should be simple. It should communicate the business domain with clear intent.

Sometimes test data setup can make tests bulkier. It’s good practice to extract test helpers to reduce duplication and bring discipline across the test suite.

We often see helpers such as FakeServer or AcceptanceTestDriver, which set up the common structure needed for test code. Sometimes, though, test helpers alone aren’t useful. Another technique when test data setup gets bulky is to use test data builders. For a class that requires a complex setup, we can create a test data builder that has a field for each constructor parameter, initialized to a safe value. The builder has chainable public methods for overwriting the values, and its build method should return a new instance each time.

    data class CountryCode(val code: String, val country: String)

    data class PhoneNumber(val countryCode: CountryCode, val number: String)

    data class Address(val streetCode: Int, val block: Int, val unit: Int, val pin: String)

    data class Person(
        val name: String,
        val age: Int,
        val address: Address,
        val phoneNumber: PhoneNumber
    )

Imagine creating test data for the Person class: you repeat the setup in every test that needs it and have to find creative names for each instance. With builders, it becomes easier to hide this complexity and remove the repetition.

    class PersonBuilder(
        val name: String = aRandomName(),
        val age: Int = aRandomNumber(),
        val address: Address = anAddress(),
        val phoneNumber: PhoneNumber = aPhoneNumber()
    ) {
        fun build(): Person = Person(name, age, address, phoneNumber)
    }

    val person = PersonBuilder(name = "Alice", age = 30).build()

    /* This creates a Person with the name Alice and the age 30; all other data is created by the builder under the hood. Each test can therefore override only the parameters that matter in its own context. */
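The chainable style described earlier can be sketched in Java as well. The following is a minimal illustration under stated assumptions, not a definitive implementation: the `with...` method names, the `aPerson()` factory and the default values are made up, `PhoneNumber` is omitted for brevity, and records require Java 16 or later.

```java
// Simplified value objects mirroring the data classes above (PhoneNumber omitted)
record Address(int streetCode, int block, int unit, String pin) {}

record Person(String name, int age, Address address) {}

class PersonBuilder {
    // Safe defaults; the concrete values are arbitrary placeholders
    private String name = "Any Name";
    private int age = 42;
    private Address address = new Address(1, 1, 1, "000000");

    static PersonBuilder aPerson() {
        return new PersonBuilder();
    }

    // Each method overrides one value and returns the builder for chaining
    PersonBuilder withName(String name) {
        this.name = name;
        return this;
    }

    PersonBuilder withAge(int age) {
        this.age = age;
        return this;
    }

    PersonBuilder withAddress(Address address) {
        this.address = address;
        return this;
    }

    // Returns a new Person on every call
    Person build() {
        return new Person(name, age, address);
    }
}
```

A test would then state only what it cares about, for example `PersonBuilder.aPerson().withName("Alice").withAge(30).build()`, and leave the address to the defaults.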

Some time ago, I was of the opinion that every input should be mocked. I was wrong. We shouldn’t mock value objects (if the setup is complex, create test data builders); we should only mock entities whose behavior needs to be controlled. There are also some layers that we cannot mock: an external API, for example, can’t be mocked for the simple reason that we don’t own it. In these instances, we should write an adapter layer.
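One way to picture such an adapter layer is sketched below. Every name in it (`VendorRatesClient`, `ExchangeRates`, `VendorExchangeRates`, `PriceConverter`) is hypothetical; the vendor client is only a stand-in for a third-party type that we do not own.

```java
import java.math.BigDecimal;

// Stand-in for a third-party client that we do not own (hypothetical API).
class VendorRatesClient {
    String fetchRate(String pair) {
        throw new UnsupportedOperationException("would call the external service");
    }
}

// The interface our own code depends on; we own it, so tests can substitute it.
interface ExchangeRates {
    BigDecimal rate(String from, String to);
}

// Adapter: the only place that knows about the vendor's types and formats.
class VendorExchangeRates implements ExchangeRates {
    private final VendorRatesClient client;

    VendorExchangeRates(VendorRatesClient client) {
        this.client = client;
    }

    @Override
    public BigDecimal rate(String from, String to) {
        return new BigDecimal(client.fetchRate(from + "/" + to));
    }
}

// Domain code, written against the interface only.
class PriceConverter {
    private final ExchangeRates rates;

    PriceConverter(ExchangeRates rates) {
        this.rates = rates;
    }

    BigDecimal convert(BigDecimal amount, String from, String to) {
        return amount.multiply(rates.rate(from, to));
    }
}
```

In a unit test, `PriceConverter` can be given a stub such as `(from, to) -> new BigDecimal("2")` in place of the real adapter, while the adapter itself is better covered by a small number of integration or contract tests against the real service.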

Feedback

We apply feedback at every stage of XP. We hold retrospectives to reflect and look at where we could improve. After all, no fixed direction can be valid for long. We should incorporate the feedback from these sessions and make changes accordingly.

Tests give us feedback: they tell us instantly whether our code is working. When the smallest unit of code fails, we get feedback quickly; this allows us to act in time and remove issues before they become more significant and costly.

However, while all tests give feedback, they don’t all do it in the same way. Unit tests help to drive our code, integration tests make sure that different components work together correctly and contract tests validate the communication boundaries between different systems. Infra tests, meanwhile, allow us to ensure configurations are correct.

- Unit test - helps to design the code and define its contract

- Integration test - verifies that integration with other components works correctly

- Contract test - verifies that components can talk to each other

- Infra test - verifies that configurations are correct

- Acceptance test - verifies that the system meets user expectations

Even though each testing layer provides us with feedback, every test has a cost in terms of maintenance and how much time each layer takes to run. This is why the test pyramid is so important: it helps us to acknowledge and manage the various trade-offs that are an inevitable part of the testing process. More tests might be desirable, but they require more time, and setting up higher-level tests costs more than setting up unit tests. Because of this, teams need to be clear and deliberate about the tests they choose. Although decisions might change and evolve, any new testing layer needs to be well-defined, with its purpose and the feedback it will offer clearly understood and articulated.

Given the major purpose of adding tests is to provide rapid feedback, test failures need to be clear — i.e. it should be obvious what exactly has happened and what should be done to fix them. This is why tests need to be simple and well-defined.
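To make the point about clear failures concrete, here is a small sketch of an assertion helper whose message names the input, the expected value and the actual value. `InterestAssertions` and `assertInterestBasis` are hypothetical names; assertion libraries usually offer their own ways of attaching a description to a failure.

```java
class InterestAssertions {
    // Hypothetical helper: fails with a message that names the input,
    // the expectation and the actual value.
    static void assertInterestBasis(int year, int expected, int actual) {
        if (expected != actual) {
            throw new AssertionError(
                "Interest basis for year " + year
                    + " should be " + expected + " days but was " + actual);
        }
    }
}
```

A failure then reads as a sentence about the domain rather than a bare comparison of two numbers, which shortens the path from a red build to a fix.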

Courage

Writing code and making changes to code requires courage (and confidence). Tests enable this sense of courage — without proper test coverage, developers may lack the courage needed to make the changes to the code that they believe they should make. Caution can ultimately have negative consequences, opening up the possibility that not only will code not be properly improved but it will also be left with bugs and flaws.

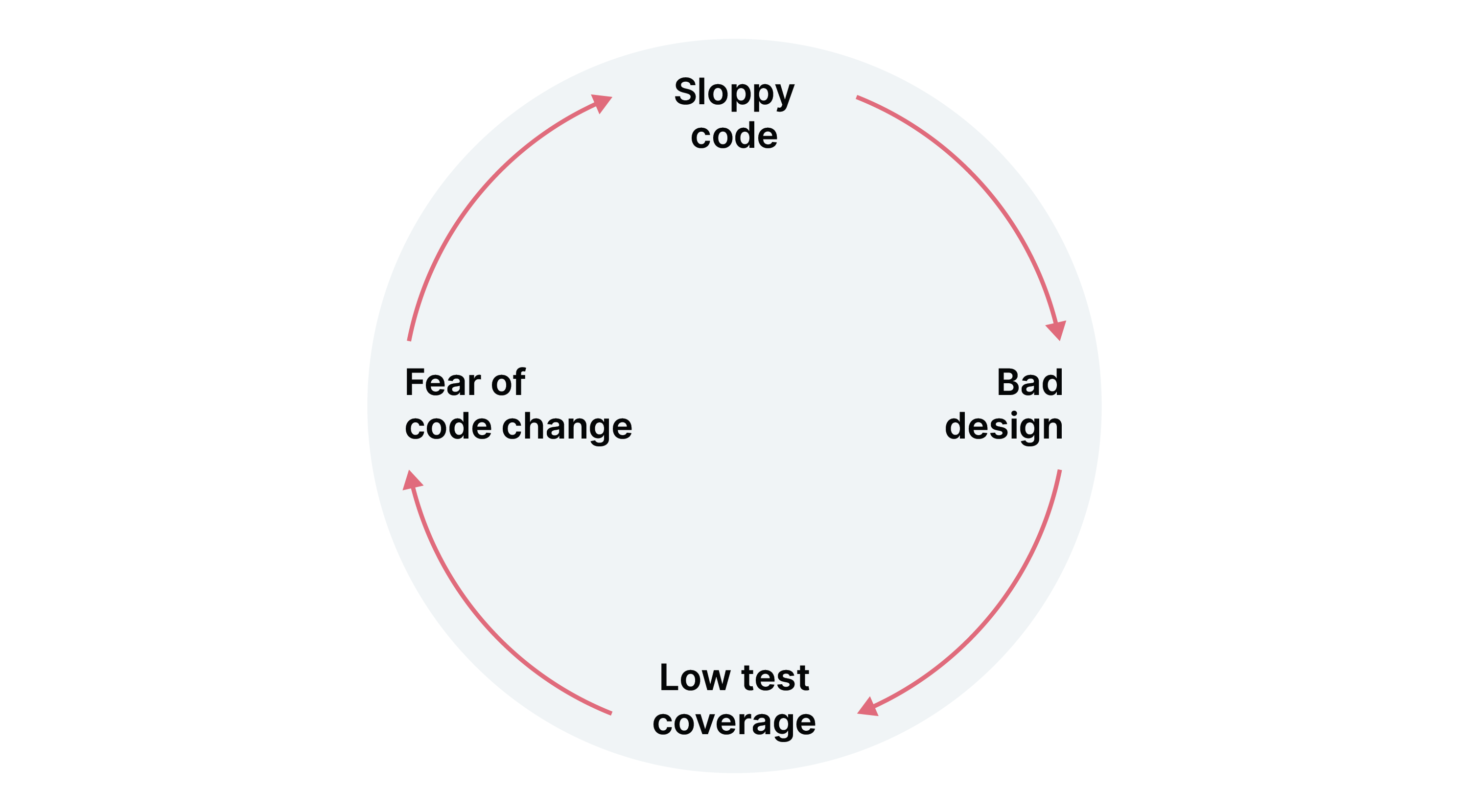

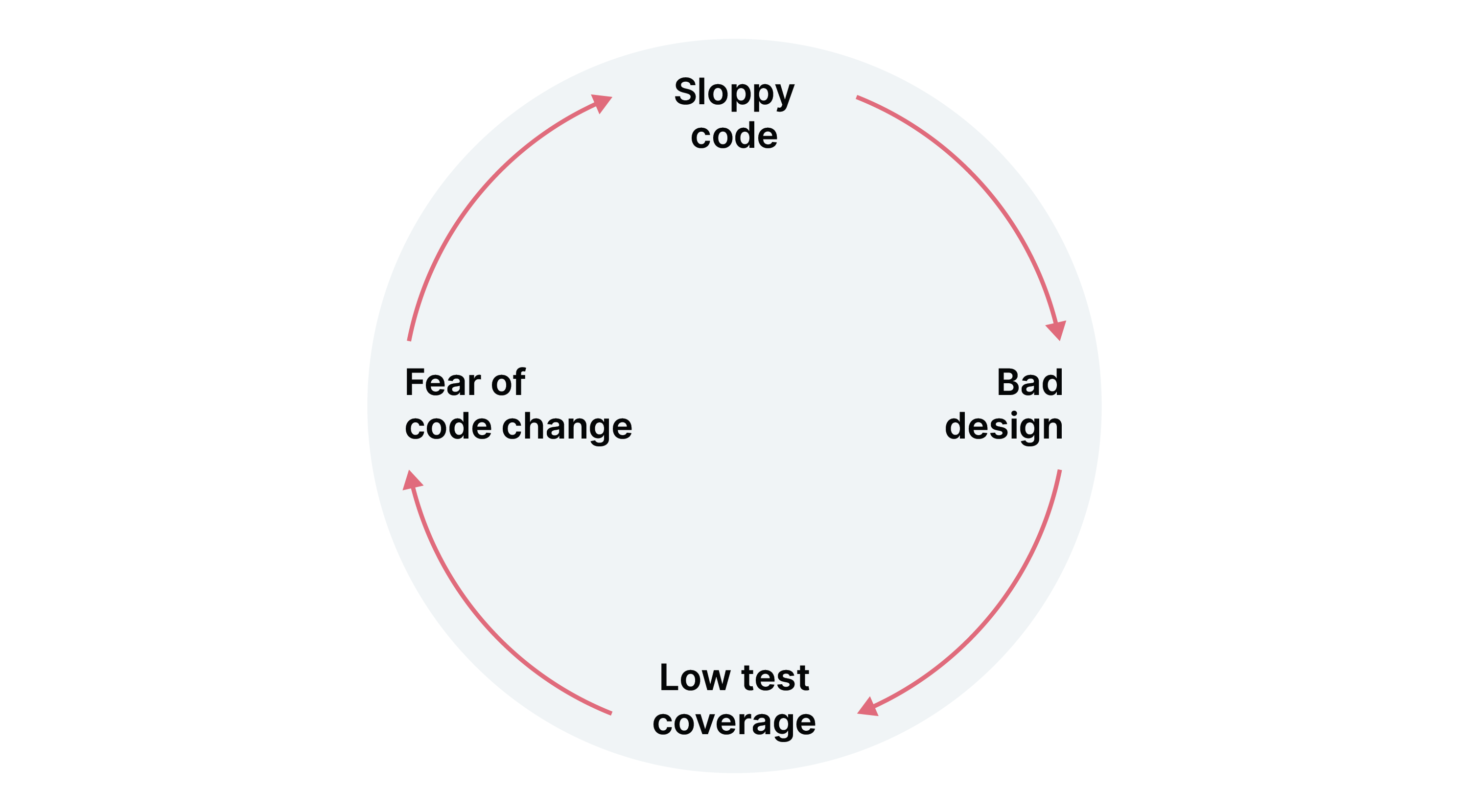

If there is a bug fix or feature that needs to be updated, the code may have already become a big ball of mud which no one wants to touch. At this point, even though the team wants to refactor, they can easily fall into a vicious cycle of not refactoring due to a fear of updating code or test design — in other words, a lack of courage.

If a team inherits legacy code without any test suite and has to work on it, they can start by adding tests around the existing code. These are known as characterization tests.

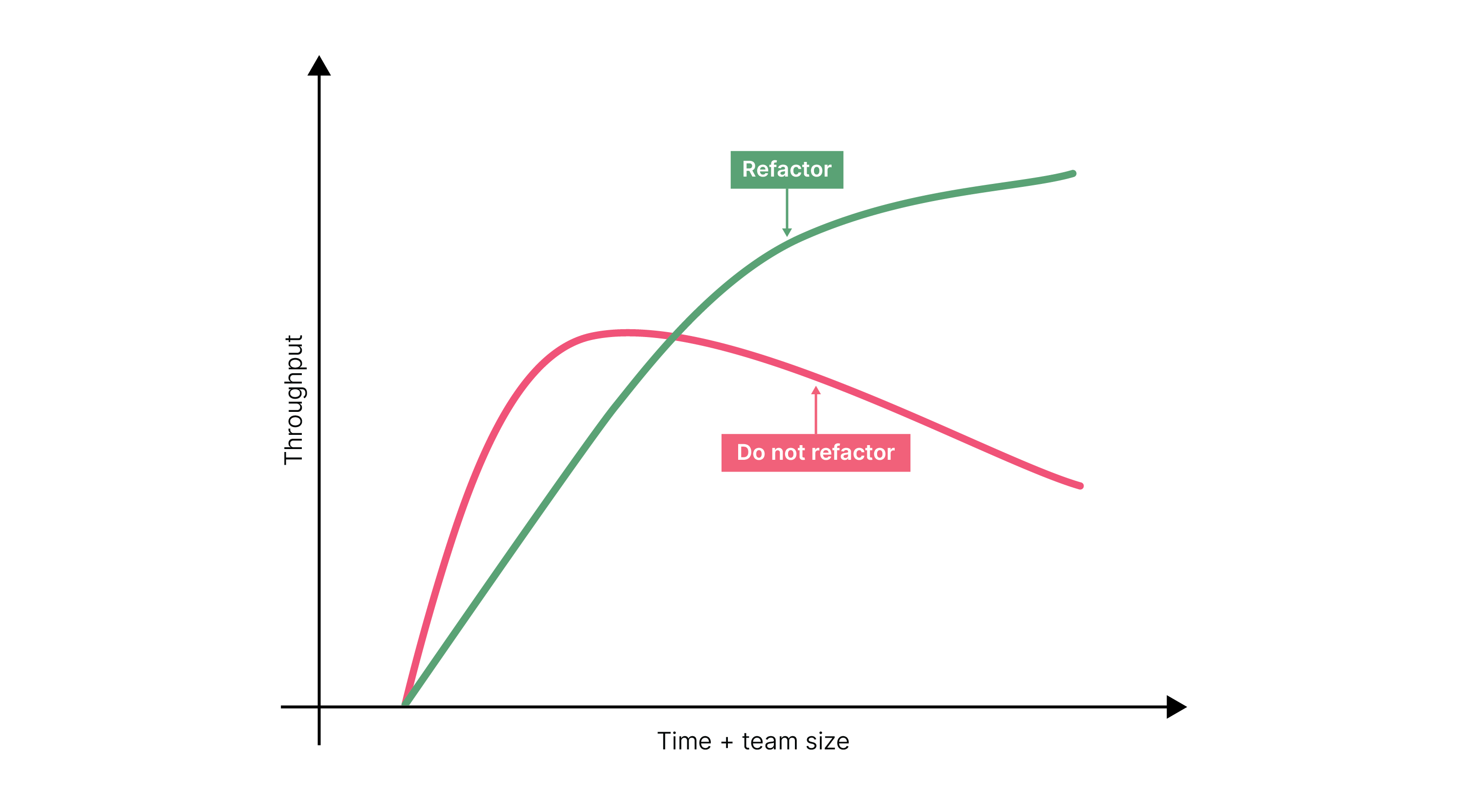

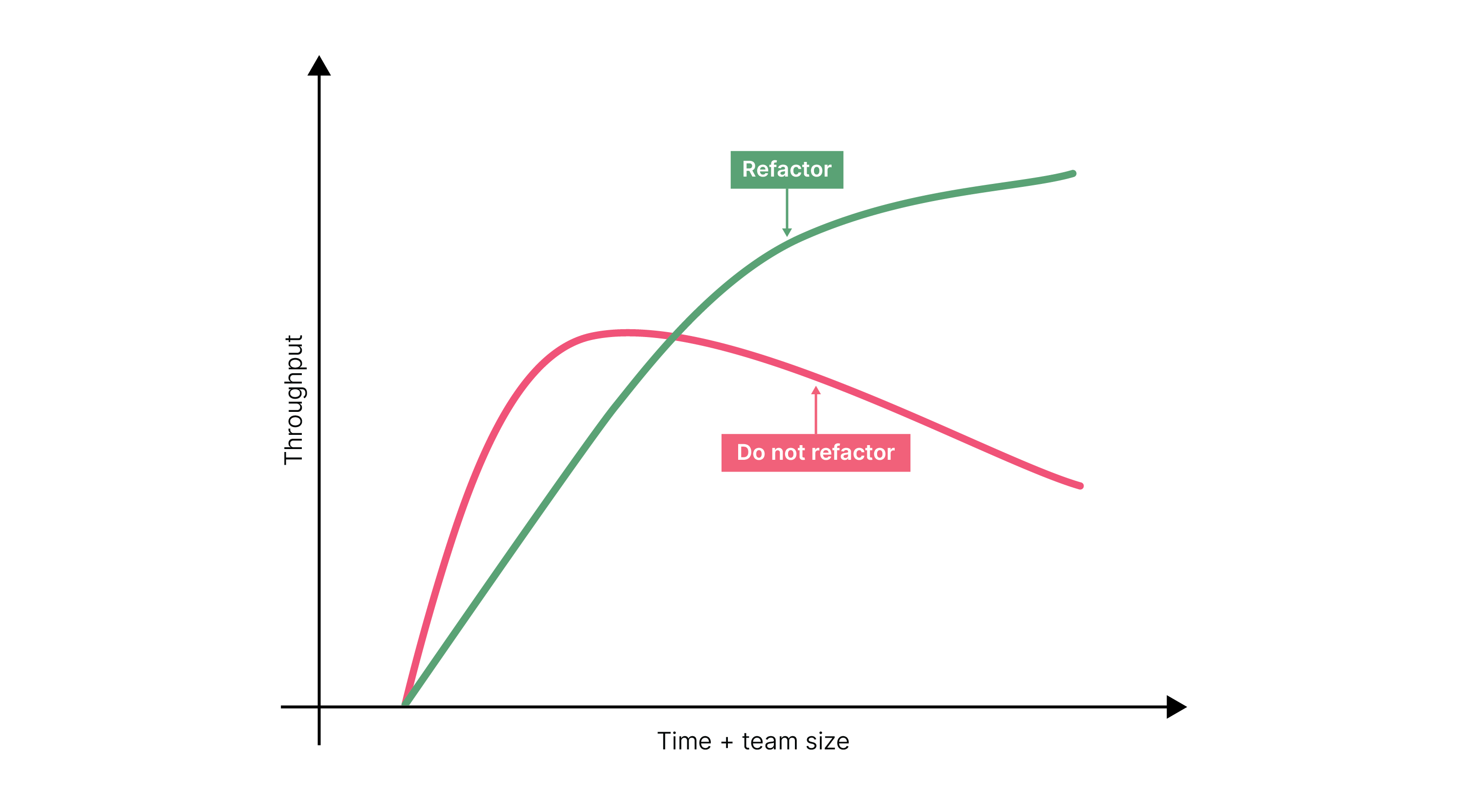
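A characterization test records what legacy code currently does, not what it ought to do. The sketch below uses an invented `LegacyShippingCalculator` as a stand-in for undocumented legacy code; the expected values were obtained by running the code and writing down the results.

```java
// Stand-in for legacy code whose rules are undocumented (hypothetical).
class LegacyShippingCalculator {
    static int cost(int weightKg, boolean express) {
        int cost = weightKg * 3;
        if (weightKg > 10) {
            cost += 7;
        }
        if (express) {
            cost = cost * 2 - 1;
        }
        return cost;
    }
}

class LegacyShippingCalculatorCharacterization {
    // Pins the current behavior so that later refactoring can be checked against it
    static void pinsCurrentBehavior() {
        expect(15, LegacyShippingCalculator.cost(5, false));
        expect(29, LegacyShippingCalculator.cost(5, true));
        expect(43, LegacyShippingCalculator.cost(12, false));
        expect(85, LegacyShippingCalculator.cost(12, true));
    }

    private static void expect(int recorded, int actual) {
        if (recorded != actual) {
            throw new AssertionError(
                "Recorded behavior was " + recorded + " but the code now returns " + actual);
        }
    }
}
```

Once these checks are in place, the team can refactor the calculator and rely on the tests to flag any unintended change in behavior.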

In these instances, refactoring tests should be a continuous process, unlike refactoring code. Delaying it might create the perception that the team is starting to move faster, but that time will likely be spent on refactoring later. Think of it this way: if you let the tests rot, your code will rot too. Keep your tests clean.

Respect

Respect for all team members is crucial for the success of any project. This ensures a healthy working environment, of course, but it also means better decisions can be made and you work together more effectively.

We should have respect for our code too. This is often overlooked when it comes to test code, as there can be a tendency to write tests in a “quick and dirty” way, without the effort and attention to detail that one would give to production code. This approach is invariably the start of wider and more disruptive failures, leading to a loss of confidence in the test suite.

So, teams need to respect test code in the way they do production code. If there is a decision from the beginning to keep the testing code clean, that makes it a habit; that should mean that adding new tests is much easier, with little temptation to write something “quick and dirty.”

Along with keeping tests clean, it’s also important that the tests are deterministic. If they fail, they should fail for the correct reason and fail every time, regardless of the machine, the time or an external dependency. Tests that pass or fail unpredictably are flaky, and it’s very important to address them before the team loses trust in the suite. Fortunately, there are ways to tackle these types of flaky tests; you can find out more here.
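Time is one of the most common sources of non-determinism. A minimal sketch of one way to remove it, assuming a hypothetical `Session` class, is to pass a `java.time.Clock` into the code instead of reading the system time inside it:

```java
import java.time.Clock;
import java.time.Duration;
import java.time.Instant;

// Hypothetical class, invented for illustration.
class Session {
    private final Instant expiresAt;

    Session(Instant createdAt, Duration timeToLive) {
        this.expiresAt = createdAt.plus(timeToLive);
    }

    // The caller supplies the clock, so a test can pin "now" to any instant
    // and the result no longer depends on when or where the test runs.
    boolean isExpired(Clock clock) {
        return !clock.instant().isBefore(expiresAt);
    }
}
```

A test can then build a clock with `Clock.fixed(...)` just before and exactly at the expiry instant and get the same answer on every run, while production code passes `Clock.systemUTC()`.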

In short, start treating your test code as production code. Tests are not second-class citizens. They require the same thought, design and care as production code because, in the end, tests signal whether code can be deployed to production.

XP and automated tests

XP's values create a baseline to be followed for a given project. They all help you become more disciplined in your approach. Similarly, tests help team members learn about the domain; they could be seen as talking artifacts. If tests are written in a timely and clean manner, they will provide feedback, give teams the courage they need to refactor, and keep code maintainable and readable.

Tests and XP

- Communication - Tests talk to teams

- Simplicity - Tests should be well structured and readable to ensure simplicity

- Feedback - Tests turn red to green with feedback about the code

- Courage - Tests give courage to refactor

- Respect - Tests should be treated with the same respect as code

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.