In recent years, agile teams have started practicing the shift-left testing approach to speed up product deliveries and remove testing bottlenecks. While this makes sense, the approach is often undermined when only the functional tests are shifted left and the cross-functional requirements (CFRs) are left to be addressed at the end of the delivery.

This often stems from poorly designed release roadmaps, which slot operational must-haves (like performance and security tests) into the last few weeks of delivery. Teams then end up testing CFRs in a hurry to meet release deadlines, which leads to risky releases and compromised quality. This is the least desirable approach but, surprisingly, it is still common across organizations.

Let’s say you have two weeks left to release your product; all the functional tests have been completed as per the release plan. Now your focus is on the cross-functional tests, with the main one being performance. While running performance tests, you discover one of the key endpoints has a major performance decline; it's critical that it’s fixed before the product goes live. Will you be confident enough to release your product at the agreed time?

Worse still, you discover that the fix will require a major revamp of your existing approach. In such a situation:

Will you be happy to make the changes with just a few days left for the release?

Will you release the product on the release date?

Are you confident in the quality of delivery?

If a product satisfies its functional requirements but fails to meet just one CFR (be it performance, capacity, speed, security, availability, scalability, etc.), the product will falter and fail to deliver for users.

It’s important to strike the right balance and give cross-functional requirements equal weight. This article explores how we can do that by adopting processes and practices that allow us to shift CFR tests left, building them into the product from inception through to delivery.

The following chart illustrates a shift-left testing strategy for CFRs. It lists the activities performed at each phase to move CFRs to the left of the delivery cycle.

Requirements

Identify and prioritize the CFRs

Gather detailed requirements from the owner of each CFR

Design

Define the Definition of Done (DoD) for the product

Include CFRs in acceptance criteria for a user story

Include the effort for CFRs in the story estimates

Have a 'CFRs by design' mindset

Develop

Meet all the KPIs mentioned in the acceptance criteria

Integrate linters and static code analyzers into the developer environment for faster feedback

Automate the CFR tests and integrate them into CI

Test

Execute manual exploratory testing for CFRs

Carry out all CFR tests mentioned in story DoD and capture results

Release

Test in production

Let's take a closer look at each of these activities.

Identify your CFRs

The first step towards shifting CFRs left is to identify and gather cross-functional requirements during the inception/planning phase.

During inception, in addition to discussing product features, planning user journeys, and system architecture, the delivery team should also focus on identifying and gathering cross-functional requirements of the product. The team should discuss and align on priority CFRs with stakeholders. Upfront clarity on CFRs will help the team keep them at the forefront of their mind when brainstorming and ideating in design conversations. For example, a 'performance by design' mindset lends itself to good performance, or at least the agility to change or reconfigure an application to cope with unanticipated performance challenges.

This is an important first step to prevent design-related operational problems from occurring within the system.
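As a minimal sketch of what a prioritized CFR list from inception might look like, the Python below records each CFR with its owner and a MoSCoW-style priority, then sorts the list so the must-haves come first. The `Cfr` class, the priority levels, the owners and the requirement values are all hypothetical illustrations, not taken from the article.

```python
from dataclasses import dataclass
from enum import IntEnum

class Priority(IntEnum):
    # Lower value sorts first; MoSCoW-style levels are an assumption.
    MUST = 1
    SHOULD = 2
    COULD = 3

@dataclass(frozen=True)
class Cfr:
    name: str
    owner: str          # stakeholder who provides the detailed requirement
    priority: Priority
    requirement: str    # hypothetical, measurable statement of the CFR

def prioritized(cfrs):
    """Return CFRs ordered by priority, then by name for a stable order."""
    return sorted(cfrs, key=lambda c: (c.priority, c.name))

register = [
    Cfr("Accessibility", "UX lead", Priority.SHOULD, "WCAG 2.1 AA on all pages"),
    Cfr("Performance", "Product owner", Priority.MUST, "p95 API latency under 300 ms"),
    Cfr("Security", "Security team", Priority.MUST, "No high-severity scan findings"),
]

for cfr in prioritized(register):
    print(f"{cfr.priority.name:<6} {cfr.name:<13} owner={cfr.owner}: {cfr.requirement}")
```

Keeping an owner next to each CFR makes it clear who to go back to when the detailed requirements are gathered.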

Define your DoD

The next step is to document the Definition of Done (DoD) for a story.

The DoD for a story is a shared understanding of the expectations a story must meet before it can be released to production.

The delivery team should collectively identify and document the different types of tests (both functional and cross-functional) that will be carried out for the product. These tests should be documented as the DoD for the team to follow for every story. The team can also discuss the ownership of each test, that is, which tests will be written by devs and which by QAs, so they can agree on a Dev DoD and a QA DoD.

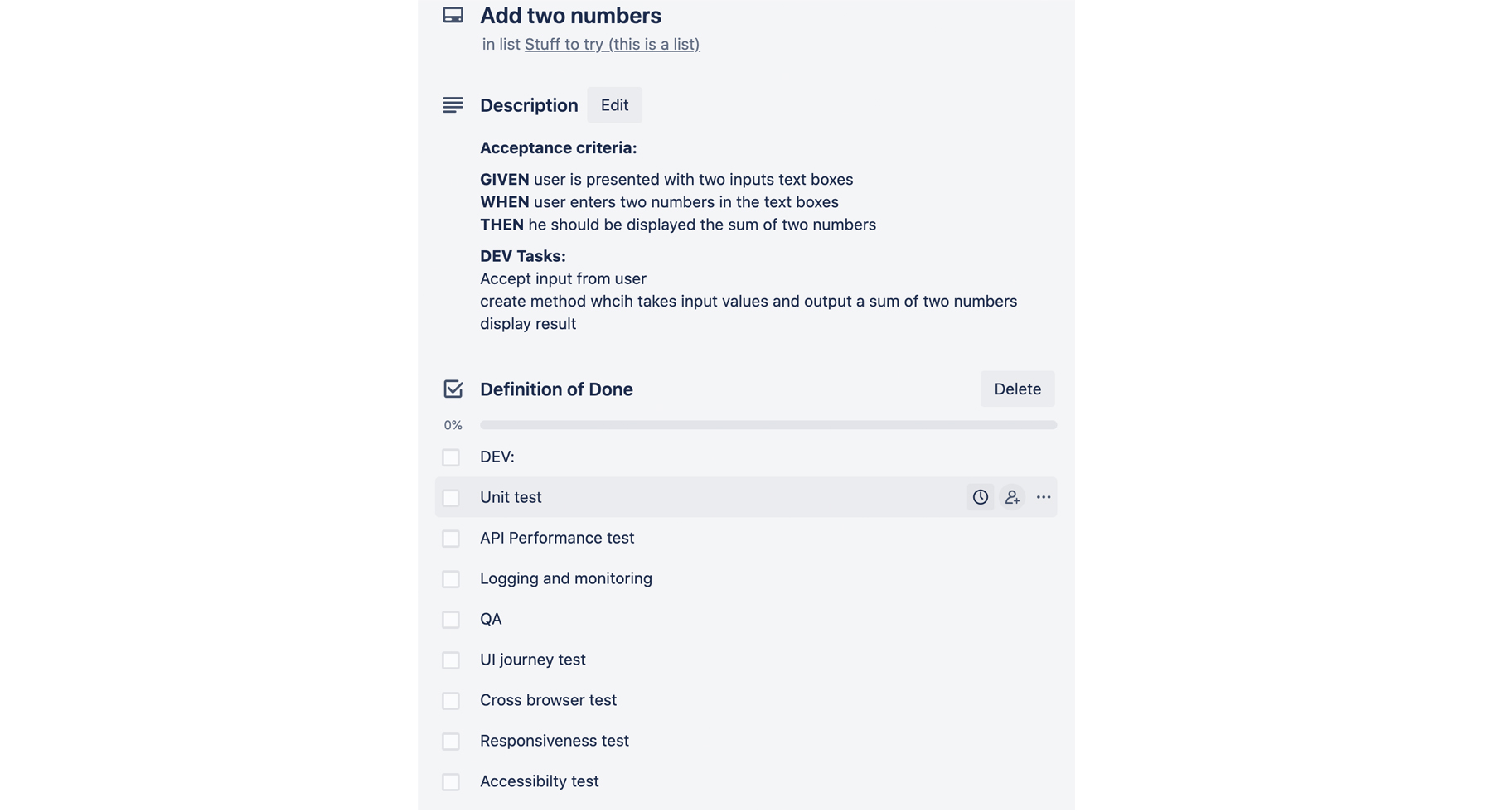

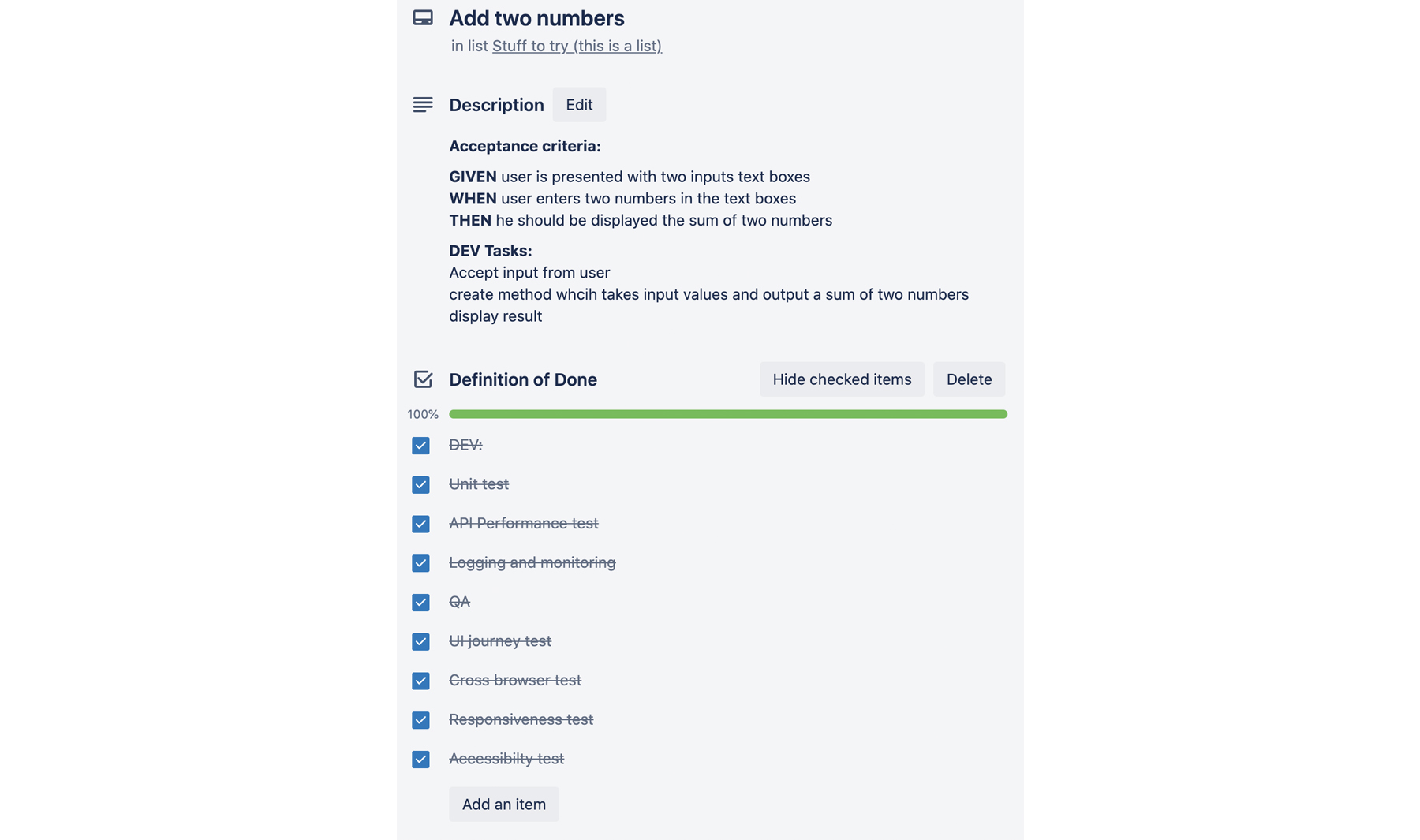

The example below shows what a basic story-level DoD document might look like.

| Tests | Ownership |
|---|---|
| Unit tests | Dev |
| E2E journey tests | QA |
| UI performance tests | QA |
| API performance tests | Dev |
| Accessibility | QA |
| ... | |

Definition of Done (at a story level)
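If the team keeps its DoD in a machine-readable form, splitting it into a Dev DoD and a QA DoD is straightforward. The sketch below assumes the DoD is a simple list of (test, owner) pairs mirroring the story-level table above; `STORY_DOD` and `split_by_owner` are hypothetical names.

```python
from collections import defaultdict

# Story-level DoD as (test type, owner) pairs, mirroring the table above.
STORY_DOD = [
    ("Unit tests", "Dev"),
    ("E2E journey tests", "QA"),
    ("UI performance tests", "QA"),
    ("API performance tests", "Dev"),
    ("Accessibility", "QA"),
]

def split_by_owner(dod):
    """Group DoD entries into per-owner lists (a Dev DoD and a QA DoD)."""
    groups = defaultdict(list)
    for test, owner in dod:
        groups[owner].append(test)
    return dict(groups)

dod = split_by_owner(STORY_DOD)
print("Dev DoD:", dod["Dev"])
print("QA DoD:", dod["QA"])
```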

Track your CFRs

Once your DoD is identified and agreed on by the team, it is important to ensure the team actually follows it. There’s a relatively simple way of doing this: adding and tracking the tests as part of the story card.

During story breakdown and detailing

After the inception stage, while creating user stories, all the cross-functional requirements the story has to meet should be captured in the story card along with its functional requirements.

During estimations

During estimation meetings, the team should discuss the different types of tests the story will cover and add them to the story card. The story points should factor in the effort for the CFR tests as well as the functional work. For example, whenever a story involves developing a new endpoint, the CFRs that apply to this endpoint, such as performance, security, audit and logging, should also be covered in the story and reflected in its estimate.
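One way to make sure CFR tests show up on the card before estimation is to derive them from the kind of change a story makes. The sketch below follows the article's endpoint example (performance, security, audit and logging); the `new_page` rule, the `StoryCard` class and the change labels are assumptions made for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical mapping from the kind of change in a story to the CFR tests it
# should carry. "new_endpoint" follows the article's example; "new_page" is
# an illustrative assumption.
CFR_CHECKLIST_BY_CHANGE = {
    "new_endpoint": ["Performance", "Security", "Audit", "Logging"],
    "new_page": ["Accessibility", "UI performance"],
}

@dataclass
class StoryCard:
    title: str
    changes: list
    functional_tests: list
    cfr_tests: list = field(default_factory=list)

    def __post_init__(self):
        # Add every CFR test implied by the story's changes, without duplicates,
        # so the CFR work is visible on the card when the story is estimated.
        for change in self.changes:
            for cfr in CFR_CHECKLIST_BY_CHANGE.get(change, []):
                if cfr not in self.cfr_tests:
                    self.cfr_tests.append(cfr)

card = StoryCard(
    title="Expose order history API",
    changes=["new_endpoint"],
    functional_tests=["Unit tests", "API tests"],
)
print(card.cfr_tests)
```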

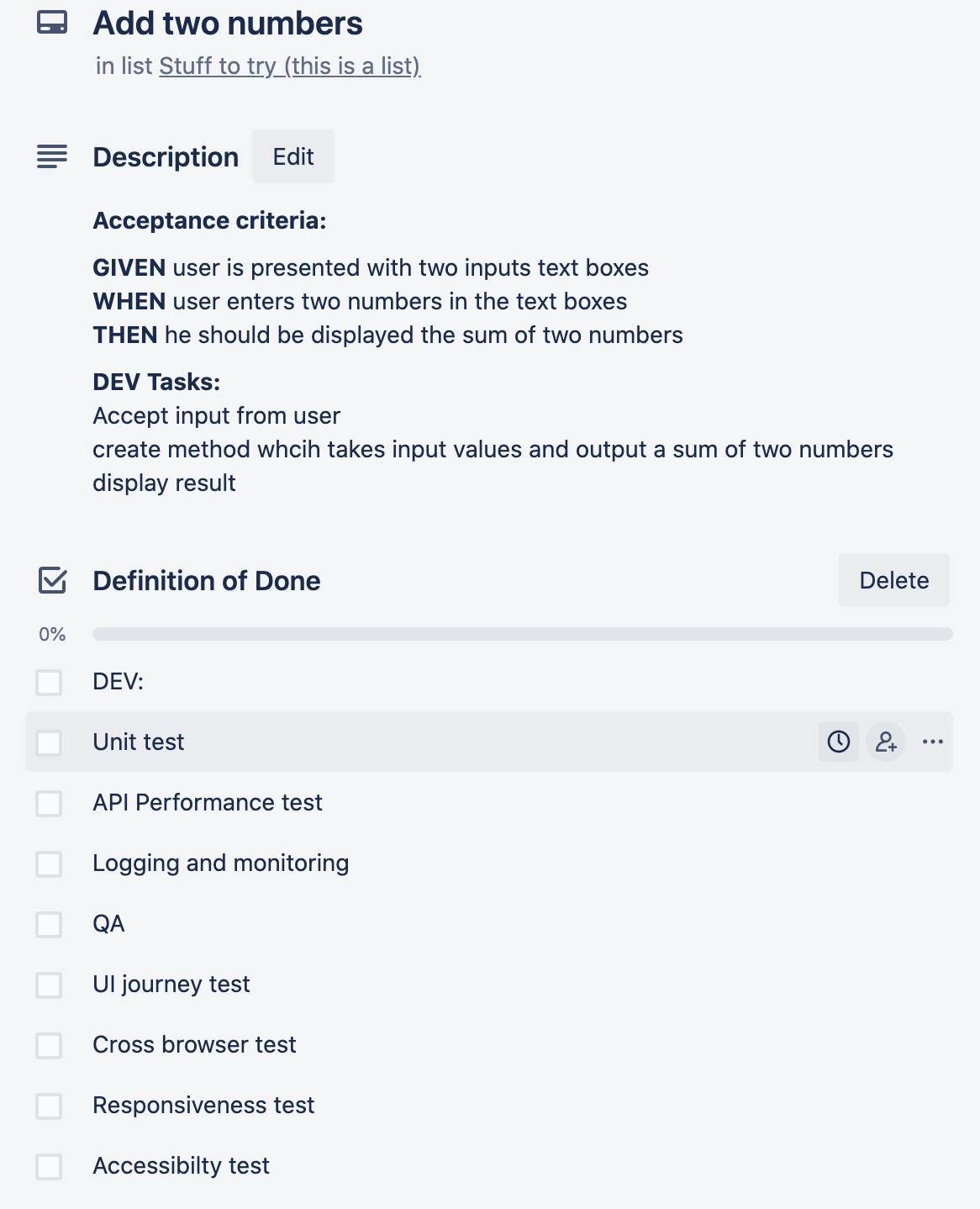

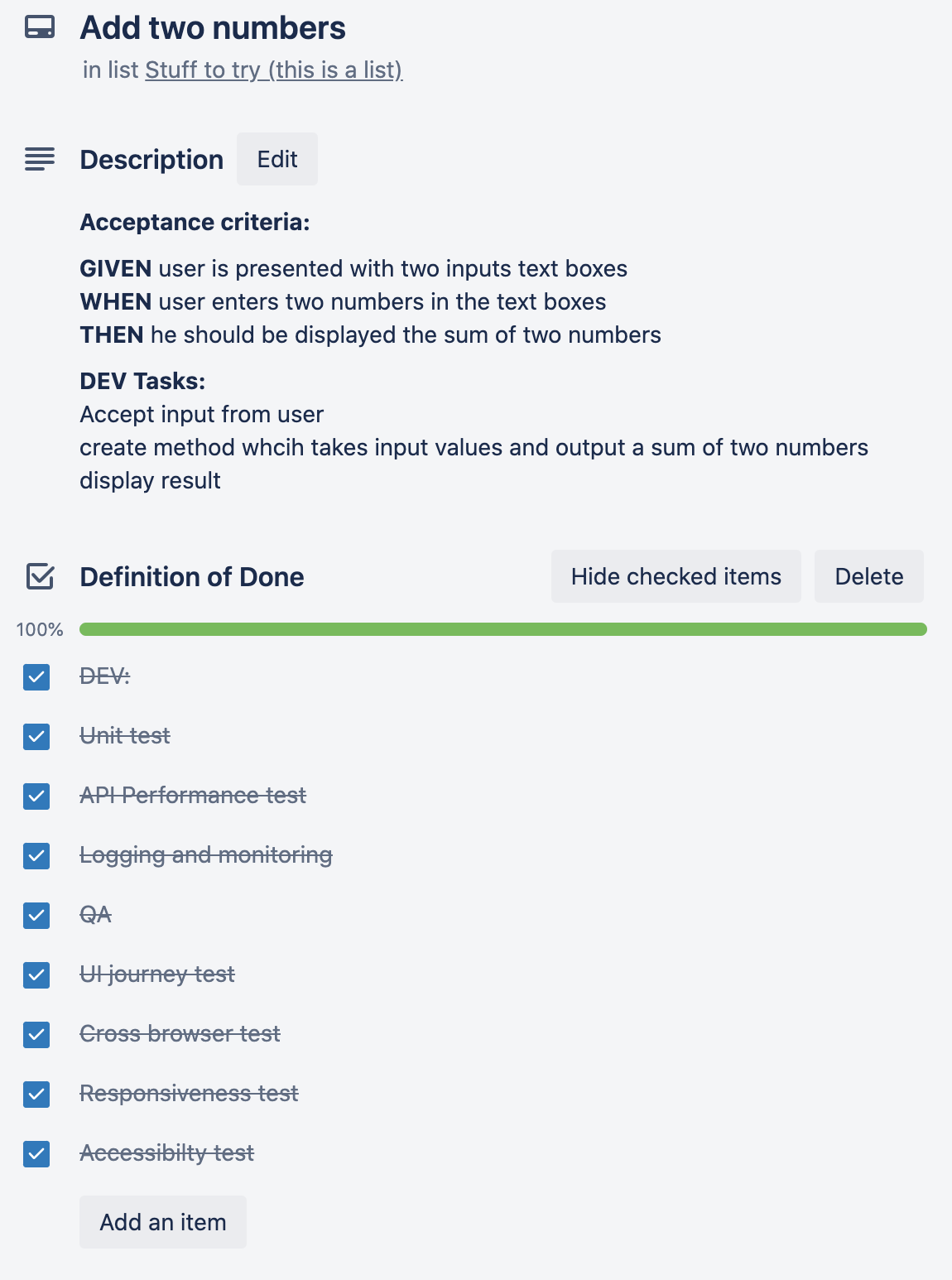

Here’s an example DoD list captured for a story card:

During kickoffs

Once the story is ready for development, cross-functional Acceptance Criteria (AC) should be discussed alongside the functional AC during a kickoff (KO) meeting. Continuing the example above, the team should agree on and note the performance benchmark for the endpoint, and align on the endpoint's contract as well as which tests belong in the contract and API test layers. The endpoint’s expected KPIs should be added to the user story’s acceptance criteria.

The KO gives the team an opportunity to raise and clarify any doubts about each CFR that applies to a story. This lets the team put more thought into the CFRs before work even starts, which in turn should help minimize bugs.
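A KPI agreed at kickoff is most useful when it is precise enough to check automatically. As a sketch, assuming the benchmark is expressed as a p95 response time in milliseconds, the code below compares measured samples against it using the nearest-rank percentile. The endpoint, threshold and sample values are hypothetical.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class LatencyKpi:
    """A performance acceptance criterion agreed at kickoff (values hypothetical)."""
    endpoint: str
    percentile: float   # e.g. 95.0 for p95
    max_ms: float

def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of values <= it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]

def meets_kpi(kpi, samples_ms):
    """Return (passed, observed) for the KPI's percentile."""
    observed = percentile(samples_ms, kpi.percentile)
    return observed <= kpi.max_ms, observed

kpi = LatencyKpi("GET /orders", 95.0, 300.0)
samples = [120, 180, 210, 250, 260, 270, 280, 290, 295, 310]
ok, observed = meets_kpi(kpi, samples)
print(f"{kpi.endpoint} p{kpi.percentile:g} = {observed} ms, pass={ok}")
```

Here the p95 of the ten samples is 310 ms, so the example 300 ms KPI is not met. Nearest-rank is only one percentile definition; load-testing tools may interpolate instead, so it is worth agreeing at kickoff which definition the KPI uses.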

During development

Test automation and integration with the Continuous Integration (CI) pipeline is a major step in the shift-left approach. Along with automated functional tests, the team should identify CFR tests that are good candidates for automation and incorporate them into the build pipelines.

Integrating linters, code checkers, and analyzers into the coding environment gives early feedback on cross-functional issues, such as security and accessibility errors, while the code is being written. This catches errors on the developer's machine before the code is pushed to servers. Adding dependency checkers and scanners to the CI pipeline, alongside monitoring tools, alerts the team to potential issues introduced by the changes being made, so they are caught before they reach higher environments.
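Wiring CFR checks into CI usually comes down to a step that fails the build when any check fails. The sketch below assumes each tool's report has already been reduced to a pass/fail flag and a short detail string; the check names and results are hypothetical, and collecting them from real tools is outside its scope.

```python
import sys

def cfr_gate(results):
    """Return a CI exit code: 0 if every CFR check passed, 1 otherwise.

    `results` maps a check name to a (passed, detail) tuple.
    """
    failures = [(name, detail) for name, (passed, detail) in results.items() if not passed]
    for name, detail in failures:
        print(f"CFR check failed: {name}: {detail}", file=sys.stderr)
    return 1 if failures else 0

# Hypothetical results; a real pipeline step would build these from tool reports.
example = {
    "api-performance": (True, "p95 280 ms within 300 ms budget"),
    "dependency-scan": (False, "1 high-severity vulnerability"),
    "accessibility-lint": (True, "0 violations"),
}
exit_code = cfr_gate(example)
# In a CI step, sys.exit(exit_code) makes a non-zero result fail the build.
```

Returning the exit code rather than exiting inside the function keeps the gate easy to unit test.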

During desk checks

Before a story is marked 'Dev Done', all the functional and cross-functional tests listed in the Dev DoD section of the story should be complete.

During desk checks, QAs verify that all the tests owned by developers (the Dev DoD) are complete and that automated tests have been incorporated into the build pipelines. In the endpoint example we talked about earlier, QAs should check that the KPIs defined in the acceptance criteria have been met, that the tests have been integrated into the pipeline, and that the results have been captured with no outstanding feedback.
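The desk-check questions above can be sketched as a checklist over the Dev-owned DoD items. This is a minimal illustration under assumed field names (`done`, `automatable`, `in_pipeline`, `kpi_met`), not a prescribed tool.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class DodItem:
    name: str
    owner: str                      # "Dev" or "QA"
    done: bool = False
    automatable: bool = True        # whether the test is expected to run in CI
    in_pipeline: bool = False       # whether it has actually been wired into CI
    kpi_met: Optional[bool] = None  # None when the item carries no KPI

def desk_check_issues(items):
    """List the reasons a story's Dev DoD is not yet satisfied."""
    issues = []
    for item in items:
        if item.owner != "Dev":
            continue  # QA-owned items are verified later, during QA testing
        if not item.done:
            issues.append(f"{item.name}: not complete")
        if item.automatable and not item.in_pipeline:
            issues.append(f"{item.name}: not integrated into the pipeline")
        if item.kpi_met is False:
            issues.append(f"{item.name}: KPI not met")
    return issues

items = [
    DodItem("Unit tests", "Dev", done=True, in_pipeline=True),
    DodItem("API performance tests", "Dev", done=True, kpi_met=True),
    DodItem("E2E journey tests", "QA"),
]
for issue in desk_check_issues(items):
    print(issue)
```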

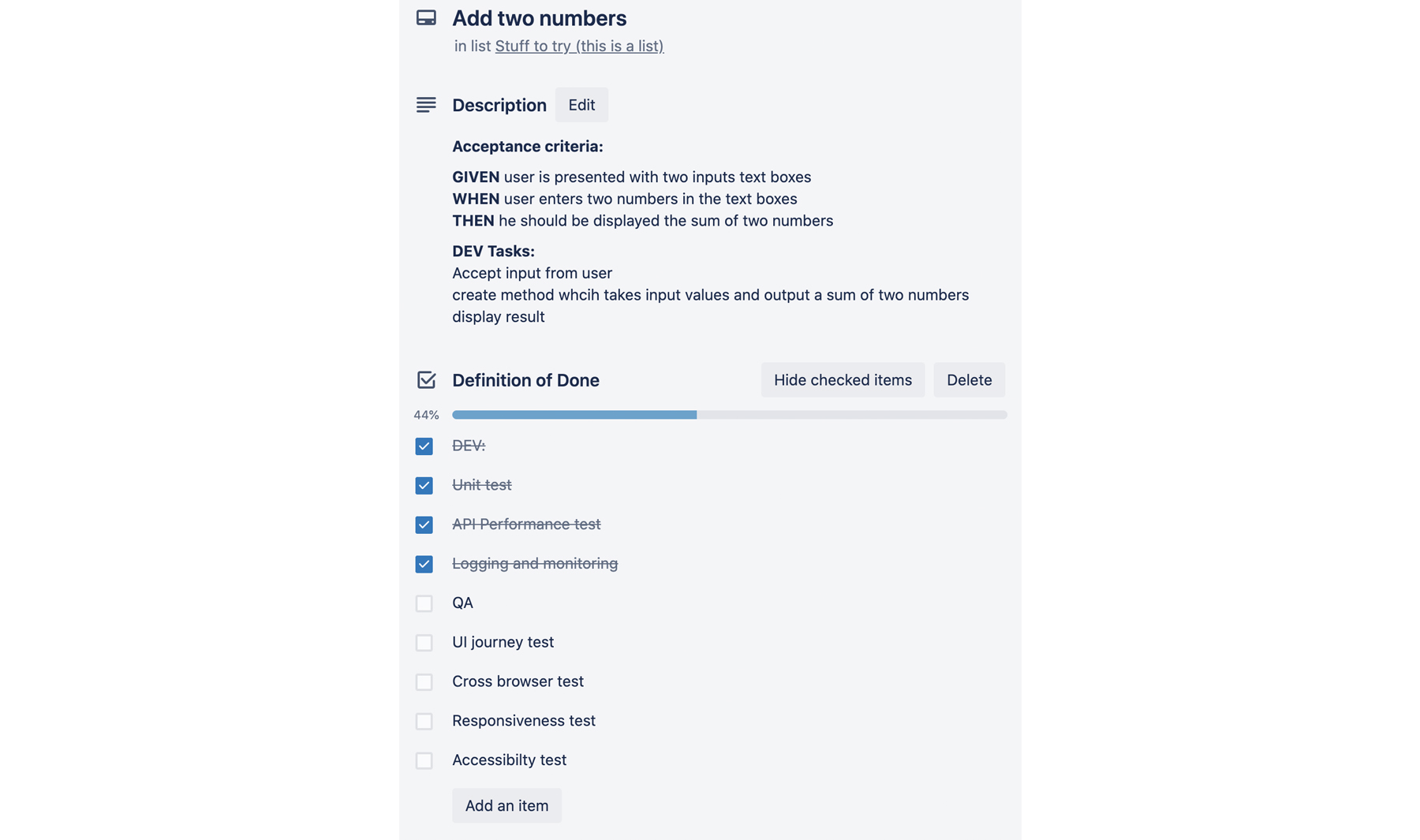

The diagram below shows the status of the card after the desk check is complete.

During QA testing

By this point, two-thirds of the cross-functional tests will already have been covered, so the risk of critical issues reaching production is greatly reduced. QAs then execute the remaining functional and cross-functional tests listed in the QA DoD section. Once all the tests have passed and the ACs have been met from both a functional and a cross-functional standpoint, the story moves to the 'QA Done' lane.
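The 'QA Done' rule can be expressed as a small check over the story's DoD results. As an assumption beyond the article, the sketch also refuses to pass a story whose DoD contains no cross-functional tests at all, so CFRs cannot be silently dropped; the `DodResult` class and the test names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class DodResult:
    name: str
    kind: str                       # "functional" or "cross-functional"
    passed: Optional[bool] = None   # None means the test has not run yet

def qa_done_check(results):
    """Return (ready, pending) for moving a story to the 'QA Done' lane."""
    # Anything that has not explicitly passed is still pending.
    pending = [r.name for r in results if r.passed is not True]
    # Assumed extra rule: a story with no CFR tests in its DoD is not done.
    if not any(r.kind == "cross-functional" for r in results):
        pending.append("no cross-functional tests in DoD")
    return not pending, pending

results = [
    DodResult("Unit tests", "functional", True),
    DodResult("E2E journey tests", "functional", True),
    DodResult("API performance tests", "cross-functional", True),
    DodResult("UI performance tests", "cross-functional"),  # not yet run
    DodResult("Accessibility", "cross-functional", True),
]
ready, pending = qa_done_check(results)
print(ready, pending)
```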

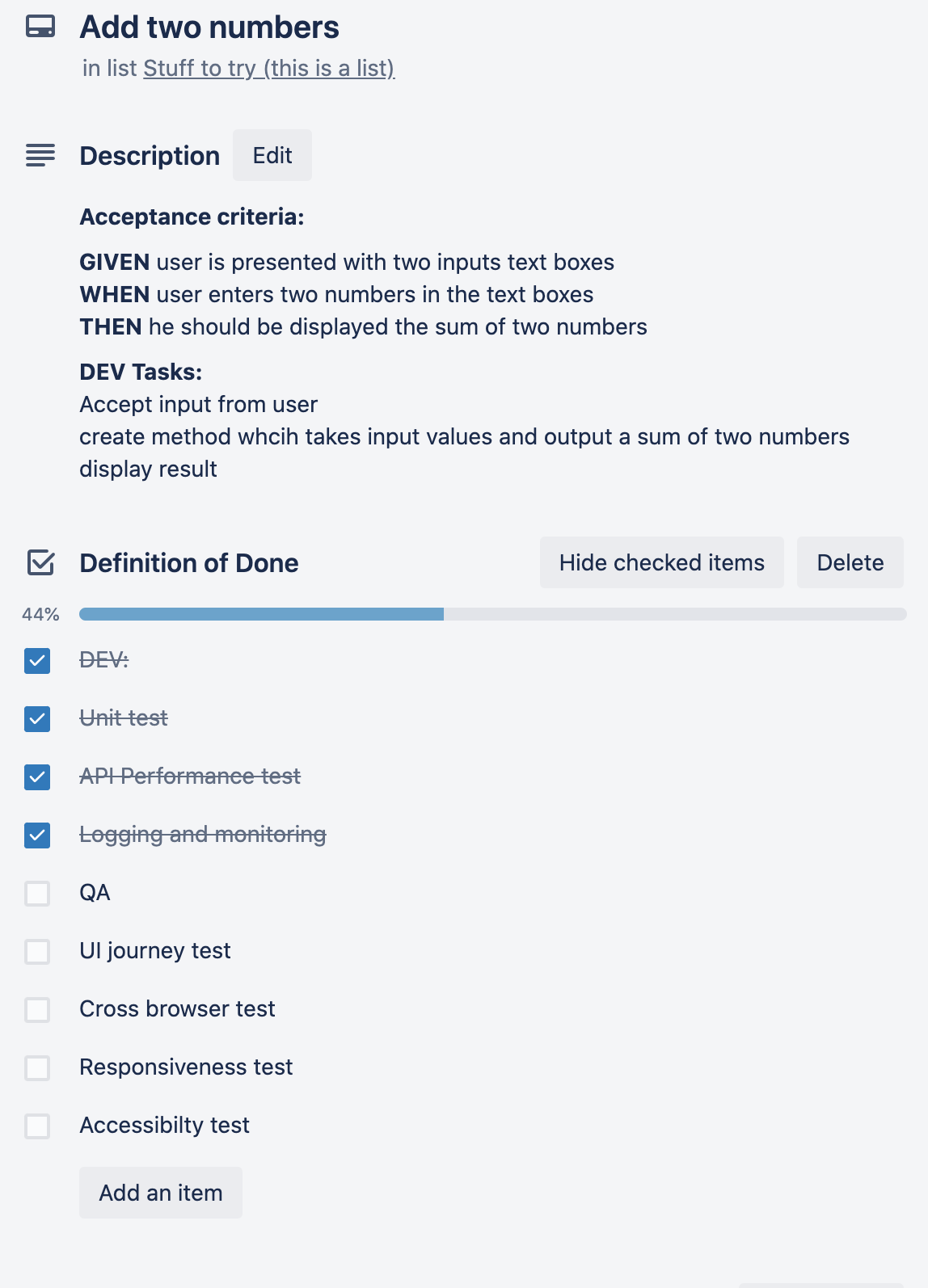

Here’s how the story looks after all DoD tests are complete.

As you can see, CFRs have become part and parcel of story development, so they require no additional time or effort at the end of the release cycle. In short, CFRs are baked into the product from inception to delivery.

Conclusion

This approach helps the team:

Gain early feedback on CFR bugs that could be very expensive to fix in the later stages of the project

Reduce the risk of CFR issues impacting the delivery timeline

Take delivery pressure off the team by minimizing any last minute surprises

Put more time, attention, and focus on cross-functional elements while developing the story (improving the end product's quality)

Avoid the cost of redesigning or reworking the technical solution late in the project because CFR constraints were not met

Make a story production-ready as soon as the story is DONE, since all the functional and cross-functional tests of the story are completed

Having said that, the shift-left approach has its own challenges. It requires a change in the team’s mindset (developers write test cases and testing responsibilities are shared across the team), a change in the way the organization works, and, most importantly, better planning of tests ahead of development. Whatever approach your team decides to follow, the key takeaway is to adopt a shift-left mindset for testing cross-functional requirements and always look for ways to integrate them early in the development cycle.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.