Moving to the Phoenix Server Pattern - Part 2: Things to Consider

This is part 2 of a series. Read part 1 and part 3.

The Phoenix Server Pattern is a practice for managing changes in the infrastructure of a project. In the Thoughtworks digital team we had been running our infrastructure in the cloud for a while, but we faced some issues that forced us to re-evaluate how we managed change on our servers and led us to adopt the Phoenix Server Pattern. In this article we cover some things to consider before adopting this pattern, and some of the challenges we faced when migrating our infrastructure to phoenix servers.

Previous article: Introduction to moving to the Phoenix Server Pattern.

Have a Strategy to Experiment

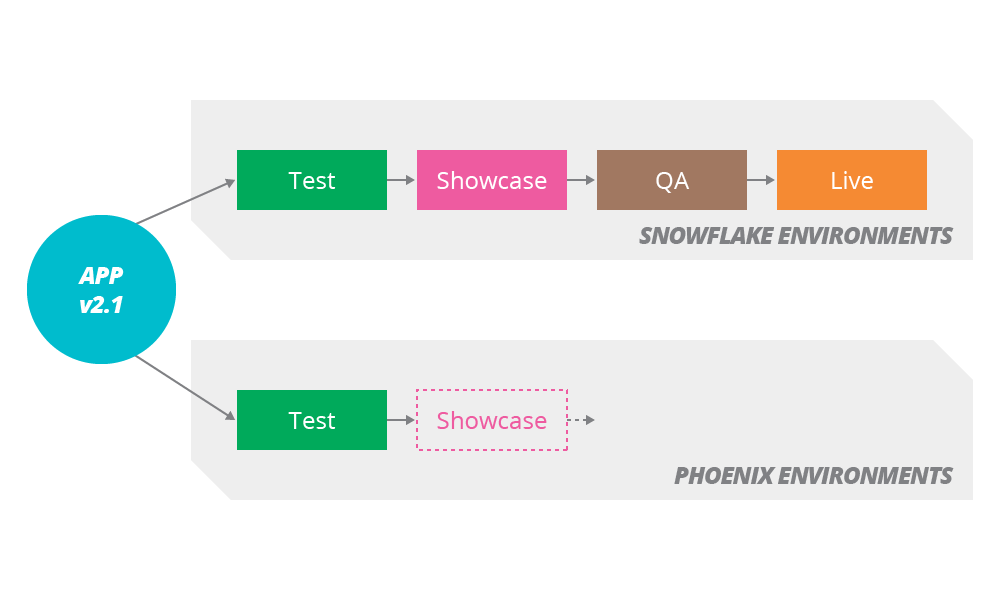

We needed a strategy to experiment without impacting the delivery of new features. One of the first decisions we made was to follow a branch-by-abstraction approach: we created phoenix server replicas of each of our continuous integration (CI) environments, one by one. We have several of these environments, where we test new versions of the application, our build and deployment scripts, and other procedures before they are applied to the production environment.

Each application build was deployed to both the phoenix and the snowflake environments, but only the snowflake ones were used to bring new builds into production. This guaranteed that if something went wrong while building one of our new phoenix environments, we could still deliver features using the snowflake version of that environment.
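The dual-deployment rule can be sketched as follows. This is a minimal illustration, not our actual pipeline code: the environment naming scheme (`qa-snowflake`, `qa-phoenix`), the `deploy` callable and the `DeployReport` type are all hypothetical. The point it shows is that a phoenix failure is recorded but never blocks promotion; only the snowflake result does.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

# Hypothetical: a deploy function takes (target_environment, build_id)
# and raises an exception if the deployment fails.
DeployFn = Callable[[str, str], None]


@dataclass
class DeployReport:
    results: Dict[str, str] = field(default_factory=dict)  # target -> "ok" or error text
    promotable: bool = False


def deploy_build(build_id: str, env: str, deploy: DeployFn) -> DeployReport:
    """Deploy one build to both variants of an environment."""
    report = DeployReport()
    for variant in ("snowflake", "phoenix"):
        target = f"{env}-{variant}"
        try:
            deploy(target, build_id)
            report.results[target] = "ok"
        except Exception as exc:  # a failure here is recorded, not re-raised
            report.results[target] = f"failed: {exc}"
    # Only the snowflake environment decides whether the build moves on.
    report.promotable = report.results[f"{env}-snowflake"] == "ok"
    return report


def flaky_deploy(target: str, build_id: str) -> None:
    """Example deploy step where the experimental phoenix variant breaks."""
    if target.endswith("phoenix"):
        raise RuntimeError("image build timed out")


report = deploy_build("build-42", "qa", flaky_deploy)
print(report.promotable)  # True
```

Once a phoenix environment is trusted, the gating line is the only thing that has to change to make it the one that promotes builds.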

After the first pre-production environment was replicated using phoenix servers, we saw the benefits of this early decision. We were able to try different approaches to adapting our existing build and deployment scripts to phoenix servers without impacting our ability to deliver.

All of the CI pipelines used to orchestrate the phoenix environments share the same build and deployment scripts. Without a branch-by-abstraction approach, a change to these scripts that went wrong could have stopped us from pushing new builds to production, because a bug in one of them would have affected every upstream phoenix pipeline already being used to deliver new features.

Cloud Provider Support

Your cloud provider needs to offer several services and features for your team to implement phoenix servers. A lack of support from your provider can constrain the decisions the team can make later on. You should therefore evaluate beforehand how your cloud provider will support a migration from snowflake to phoenix servers, and which services and features you will need.

Server Image Creation

One example of these services is the creation and management of server images. Most cloud providers offer this kind of service, but the time it takes to create a server image, or to deploy a new server from an existing image, can vary a lot between providers. This affects the time to live of new features, i.e. the time it takes for a feature to go from development to providing value to users. For example, our time to live increased when we moved to phoenix servers: creating a phoenix server from an image takes us 5 minutes on average, whereas applying configuration changes to one of our snowflake servers using Puppet takes around 2 minutes.
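To see how that difference adds up, here is a back-of-the-envelope model using the two figures above. The rollout model is an assumption for illustration only: it ignores every other pipeline step and assumes servers are either replaced one after another or all at once, with the snowflake changes following the same pattern.

```python
# Figures from the text: ~5 minutes to create a phoenix server from an image,
# ~2 minutes to apply a Puppet change to an existing snowflake server.
PHOENIX_MINUTES = 5.0
SNOWFLAKE_MINUTES = 2.0


def extra_lead_time(servers: int, parallel: bool) -> float:
    """Extra minutes a rollout takes with phoenix servers instead of snowflakes.

    Hypothetical model: sequential rollouts pay the per-server difference once
    per server; parallel rollouts pay it only once.
    """
    per_server = PHOENIX_MINUTES - SNOWFLAKE_MINUTES
    return per_server if parallel else per_server * servers


print(extra_lead_time(4, parallel=False))  # 12.0
print(extra_lead_time(4, parallel=True))   # 3.0
```

Each server takes 2.5 times as long (5 / 2), so whether servers can be replaced in parallel largely decides how much the time to live grows.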

Service Discovery

Another example of these services is the ability to discover newly created phoenix servers. The phoenix pattern assumes that your infrastructure has a way to do this. Some cloud providers offer floating IPs, a feature that lets you retain an IP address after a server is deleted and assign that same IP to a new server. This works well with the phoenix pattern, because your phoenix servers can take over the IPs of the old servers and the rest of your infrastructure does not need to be aware of any change.

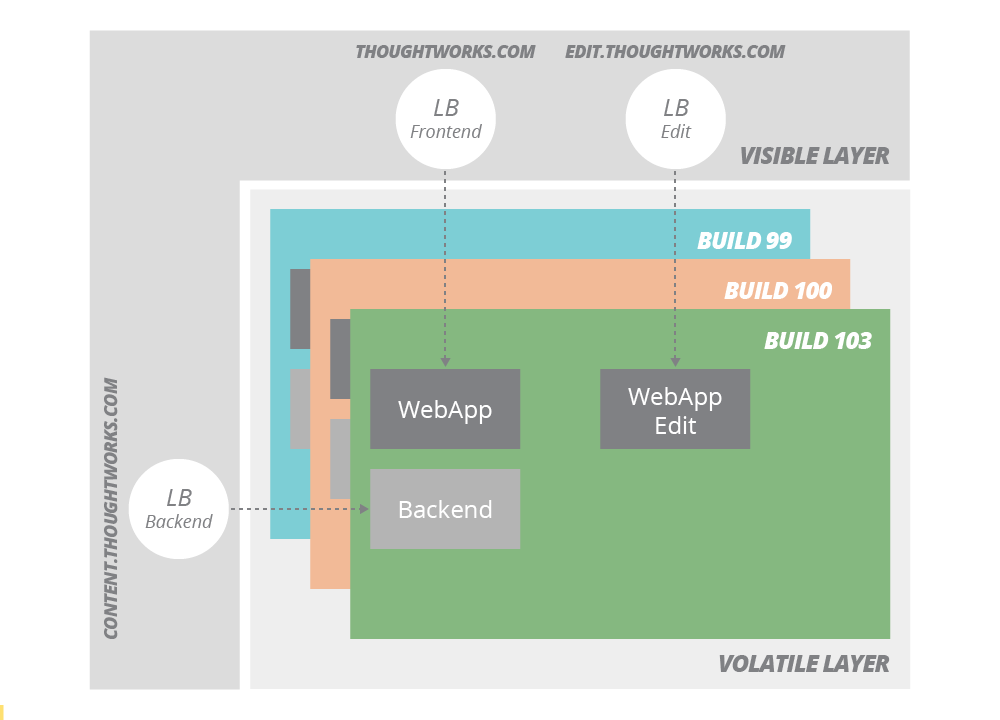

We liked the simplicity of working with floating IPs and decided this approach should be the way forward. Unfortunately, we quickly realized that our cloud provider, Rackspace, did not yet offer this kind of service. Looking for alternatives, we explored service discovery tools such as Consul, but concluded that the effort of introducing this type of tool was too large for the size of our infrastructure. Finally, we decided we could use load balancers as a way to discover new servers.

Our cloud provider offered an API that allowed us to write scripts to swap servers in and out of a load balancer. Following this approach, we had our first CI environment up and running quite quickly by creating at least one load balancer for each server role (frontend, backend and edit servers) and pointing the DNS records at the load balancers' IPs. As this shows, most of the decisions we made were constrained by the services our cloud provider offered.
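A swap script of this kind can be sketched as below. It deliberately does not reproduce the Rackspace API: `LoadBalancer` is a hypothetical interface that a real script would implement by wrapping the provider's calls, and `InMemoryLB` is a stand-in used only to exercise the logic. The ordering is the important part: new servers go in and must pass a health check before old servers are taken out, so the DNS records pointing at the load balancer never see an empty pool.

```python
from typing import Optional, Protocol, Set


class LoadBalancer(Protocol):
    """Hypothetical interface; a real script would wrap the provider's API."""
    def nodes(self) -> Set[str]: ...
    def add_node(self, address: str) -> None: ...
    def remove_node(self, address: str) -> None: ...
    def is_healthy(self, address: str) -> bool: ...


def swap_servers(lb: LoadBalancer, new: Set[str], old: Set[str]) -> None:
    """Bring phoenix servers in, then take the old servers out.

    Assumes `new` and `old` are disjoint. A real script would poll health
    checks with a timeout instead of checking once.
    """
    for address in new:
        lb.add_node(address)
    unhealthy = {a for a in new if not lb.is_healthy(a)}
    if unhealthy:
        # Roll back: keep serving traffic from the old servers.
        for address in new:
            lb.remove_node(address)
        raise RuntimeError(f"new servers failed health checks: {sorted(unhealthy)}")
    for address in old:
        lb.remove_node(address)


class InMemoryLB:
    """Stand-in load balancer for trying out swap_servers locally."""
    def __init__(self, nodes: Set[str], healthy: Optional[Set[str]] = None) -> None:
        self._nodes = set(nodes)
        self._healthy = healthy  # None means every node passes its health check

    def nodes(self) -> Set[str]:
        return set(self._nodes)

    def add_node(self, address: str) -> None:
        self._nodes.add(address)

    def remove_node(self, address: str) -> None:
        self._nodes.discard(address)

    def is_healthy(self, address: str) -> bool:
        return self._healthy is None or address in self._healthy


lb = InMemoryLB({"10.0.0.1"})
swap_servers(lb, new={"10.0.0.2"}, old={"10.0.0.1"})
print(lb.nodes())  # {'10.0.0.2'}

bad = InMemoryLB({"10.0.0.1"}, healthy=set())
try:
    swap_servers(bad, new={"10.0.0.3"}, old={"10.0.0.1"})
except RuntimeError as err:
    print(err)
print(bad.nodes())  # {'10.0.0.1'}
```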

Environment and Role Specific Configuration

Phoenix servers are created from a base image that contains the configuration shared by all servers, and this configuration is the same for all environments. In some cases, however, you will need to apply configuration that is specific to an environment or to the role a server will perform.

SSL certificates are one example of configuration that varies between servers. You do not want to deploy your production SSL certificates to your pre-production applications, as this increases the risk of a data breach. Another important thing to consider before adopting phoenix servers, then, is how you will apply different configuration for each environment and server role.

Tools such as Cloud-init, together with the user data that many cloud providers let you attach to a server when it is created, allow you to configure servers dynamically at boot time. With Cloud-init, the configuration specific to an environment or role is passed to the server as data, and Cloud-init applies it when the server boots. Before relying on these solutions, check that your cloud provider supports them.

In our case, our cloud provider supported Cloud-init, but we decided not to rely on that capability. Instead, we implemented our own automated scripts with just what we needed. These scripts are executed after a phoenix server is created, and their only responsibility is to apply environment and role specific configuration to the new server. We encourage you to explore different solutions to this problem early on, so that your team can make later decisions knowing how new servers will be configured.
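The core of such a post-creation script is deciding which settings a new server gets. The sketch below is an assumption about how that could look, not our actual scripts: the setting names, file paths and the `resolve_config` / `render` helpers are hypothetical. Base configuration is left out because it is already baked into the image; only the environment and role overlays are resolved.

```python
from typing import Dict

# Hypothetical overlay data. Secrets such as certificate private keys would
# come from a secure store, not from source code.
BY_ENVIRONMENT: Dict[str, Dict[str, str]] = {
    "production": {"ssl_cert": "/etc/ssl/production.pem"},
    "staging": {"ssl_cert": "/etc/ssl/staging.pem"},
}
BY_ROLE: Dict[str, Dict[str, str]] = {
    "frontend": {"http_cache": "enabled"},
    "backend": {"http_cache": "disabled"},
    "edit": {"http_cache": "disabled"},
}


def resolve_config(environment: str, role: str) -> Dict[str, str]:
    """Combine environment and role settings; role settings win on conflicts."""
    if environment not in BY_ENVIRONMENT:
        raise KeyError(f"unknown environment: {environment}")
    if role not in BY_ROLE:
        raise KeyError(f"unknown role: {role}")
    return {**BY_ENVIRONMENT[environment], **BY_ROLE[role]}


def render(settings: Dict[str, str]) -> str:
    """Render settings as sorted key=value lines for a config file."""
    return "\n".join(f"{key}={value}" for key, value in sorted(settings.items()))


print(render(resolve_config("staging", "frontend")))
```

Failing loudly on an unknown environment or role is a deliberate choice: a new server that silently receives no certificate is harder to diagnose than one whose configuration step fails.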

Stateful Servers

A stateful server is one that contains state that needs to be persisted. This state could be application logs, databases, cached content, user sessions, etc. To implement the phoenix server pattern, this state must be pushed out of the server, so that it persists, can be accessed by new servers, and is not lost when old servers are destroyed, which can then happen at any time.

One solution is to organize your cloud in layers, where your servers live in a volatile layer and their state is pushed out to a persistent layer. In our case, the state on our servers consists of application logs and cached HTTP responses. We were already shipping logs to a central log aggregator in the persistent layer, and our cached content does not need to be carried over from one server to another. Instead, we populate the cache after the application is deployed to a new server by running a script that crawls the website, so the responses are already cached before the server is used in production.
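A cache-warming crawler along those lines might look like the sketch below. It is not the script we run; it assumes a breadth-first crawl restricted to the site's own host, and `warm_cache` and its injectable `fetch` callable are hypothetical names. In practice `fetch` would send each request through the new server's cache (for example with `urllib.request.urlopen`) and handle errors and non-HTML responses, which this sketch omits.

```python
from collections import deque
from html.parser import HTMLParser
from typing import Callable, List, Set
from urllib.parse import urldefrag, urljoin, urlparse


class LinkParser(HTMLParser):
    """Collects href values from <a> tags."""
    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def warm_cache(start_url: str, fetch: Callable[[str], str], max_pages: int = 500) -> Set[str]:
    """Request every same-host page once so its response lands in the cache."""
    host = urlparse(start_url).netloc
    queue = deque([start_url])
    seen: Set[str] = set()
    while queue and len(seen) < max_pages:
        url = queue.popleft()
        if url in seen:
            continue
        seen.add(url)
        parser = LinkParser()
        parser.feed(fetch(url))  # fetching is what populates the cache
        for href in parser.links:
            absolute, _fragment = urldefrag(urljoin(url, href))
            if urlparse(absolute).netloc == host and absolute not in seen:
                queue.append(absolute)
    return seen


# A fake two-page site standing in for real HTTP requests.
pages = {
    "http://site.test/": '<a href="/a">A</a> <a href="https://other.test/">x</a>',
    "http://site.test/a": '<a href="/#top">home</a>',
}
visited = warm_cache("http://site.test/", lambda url: pages.get(url, ""))
print(sorted(visited))
```

The `max_pages` cap keeps a misbehaving site (for example one generating endless query-string links) from turning the warm-up step into an unbounded crawl.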

Other sources of state, such as databases or local content, can be handled in the same way: push any kind of state out of the volatile layer and into the persistent layer of your cloud. To build that persistent layer and make your servers stateless before they can become phoenix servers, consider services such as log aggregation, block and file storage, and relational database services like Amazon RDS.

Conclusion

Implementing the phoenix server pattern can be challenging. As we have seen in this article, there are several things a team moving from snowflake to phoenix servers should consider before starting. Some are linked to the volatile nature of phoenix servers, while others relate to the different approaches a team can follow to implement the pattern. These are some things to remember:

- Have a strategy to experiment.

- Make sure your cloud provider allows you to implement the new pattern.

- Know how you will apply environment and role specific configuration.

- Push state out of your servers.

Acknowledgements: Thanks to Laura Ionescu, Ama Asare, Mircea Moise, Matt Chamberlain, and Tom Heyes for contributions to the article. Our next article talks about evolving a disaster recovery strategy using the Phoenix Server Pattern.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.