This article invites organisations to do to their Risk and Governance functions what DevOps has done to traditional IT Operations: Transform those areas from being necessary operational expenditures and stage-gates to being competitive advantages.

Two failure modes

In organisations we work with, we often see two different failure modes. The first is hierarchical command and control through dedicated groups — architecture, security or service management being the most common — that have a massive impact on speed of delivery. These groups often maintain strict lists of approved tools and rigorous control gates for reviews at various stages. The second is at the opposite end of the spectrum: delivery teams doing whatever they want with close to zero governance imposed on them, often resulting in chaos, and a sprawl of tools and processes used across the organisation.

We find these two failure modes often reflect organisational culture and growth patterns.

Start-up and scale-up organisations tend more towards zero governance; sometimes because it hasn’t made it to their priority list yet, and sometimes in a deliberate effort to differentiate themselves from big corporate environments that tend more towards tight control and rigorous implementation of governance frameworks.

As those smaller organisations mature and grow, they often find themselves moving from zero governance to implementing “too much” governance with little regard for trying to find a middle ground. We also see the opposite trend of traditional organisations throwing out all governance as they attempt to modernise and accelerate, focusing purely on speed rather than on how to bring speed and quality into virtuous alignment.

We advocate moving away from those extremes and finding a new middle ground. Ideally we're looking for the combination of techniques that ensures organisational risks and opportunities are handled while still giving teams as much autonomy as possible within those constraints.

The need for responsive governance

Modern software delivery paradigms

The way we're designing, crafting and delivering software is constantly evolving. As of today, we know some things to be working better than others:

- Building technology platforms that support incremental, constant change over time

- Delivering through cross-functional teams that are aligned to business outcomes

- Giving those teams end-to-end ownership and accountability for code from delivery to operations in production

- Favouring architectures that are appropriately coupled through modular design

- Optimising for both high delivery velocity and quality.

Many of these preferences are based on a subtle but powerful observation: we’ve recognised that increasing the speed of delivery and improving quality aren’t conflicting goals, but rather mutually supportive. While this is not something that’s particularly intuitive for many people, the team behind DORA has collected a lot of supporting evidence over the years that this is the case.

Traditional governance

Organisations need to satisfy many different stakeholders. They employ governance as a means of achieving their strategic goals and staying within an appropriate risk appetite. IT governance, as a subset, specifically aims to provide a structured approach to aligning business strategy with IT strategy, identifying and addressing risks, and ensuring an organisation’s compliance with laws and regulations.

All of those goals are fundamentally aspects of quality, the axis that traditional models of IT governance tend to optimise for. Organisations often employ technology governance via frameworks and certifying standards like ISO 27001, COBIT, TOGAF or ITIL/ISO/IEC 20000 in some or all of the following areas:

- Audit and compliance (Including regulatory compliance and legal risks);

- Risk and information security;

- Software architecture: alignment with strategy, coordinated action (cloud migration, monolith splitting), longer-term roadmaps, scaling, performance, resilience etc.;

- Cost (funding models, budgets);

- Business continuity and disaster recovery;

- Quality, change and release management;

- Project and programme management.

There are a lot of positives about those frameworks — after all they’re the collective memory of all things that have ever gone wrong in a large number of organisations. The way they’re implemented, however, tends to be inappropriate for modern, responsive organisations:

- They’re very infrequently updated (ITIL got its first update in eight years in February 2019)

- They tend to be fundamentally designed for traditional delivery and operating models, reinforcing silos

- Rollouts tend to happen big-bang for entire organisations

- They’re non-responsive to change (static or changeable only by committee).

Getting it right: governance as a competitive advantage

While most standard IT governance frameworks acknowledge that their implementation should apply the least amount of friction possible, they don’t identify the great potential of responsive governance.

Moving at the highest possible speed of delivery while maintaining the highest amount of stability allows us to “steer the ship — but avoid the rocks”, making the application of such a governance framework a major market differentiator. It would give organisations the confidence to move at the right speed while not exposing themselves to undue risk.

The following sections describe effective strategies for creating this dynamic approach to technology governance, summarised in six principles:

- Move from mandate to vision and principles (and guardrails)

- Automate and delegate compliance

- Enlist gatekeepers as collaborators

- Provide paved roads

- Radiate information/rethink your communication patterns

- Get comfortable with evolution.

Governance Principles

P1: From mandate to vision and principles (and guardrails)

Governance is largely concerned with setting the direction an organisation intends to move in, and then verifying that it’s actually doing so. Most modern technology organisations have realised that they get the fastest delivery throughput by organising themselves into reasonably autonomous units, both in terms of teams and architecture. The challenge is how to specify the desired direction in a way that doesn’t nullify the speed gains of allowing teams high levels of autonomy.

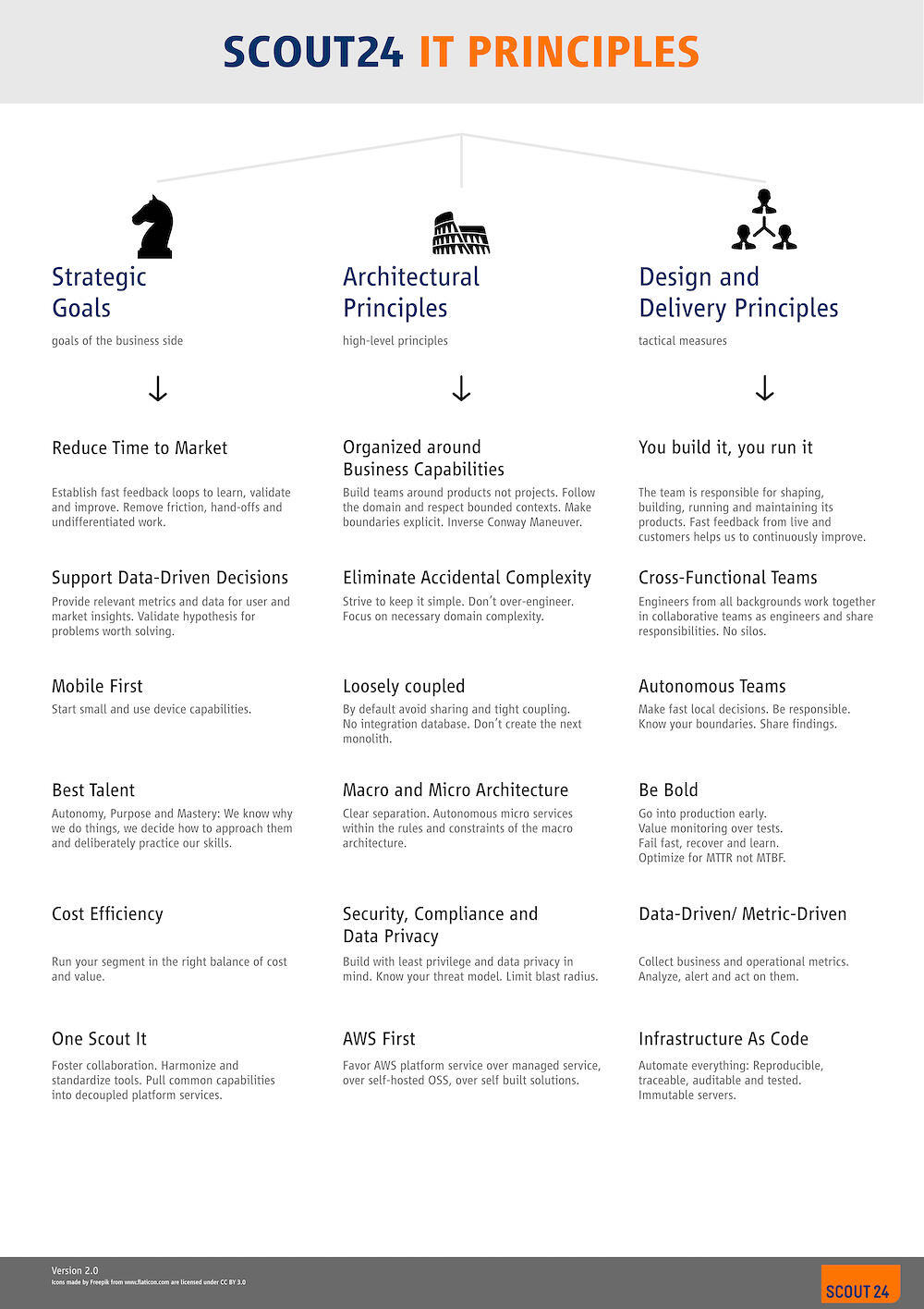

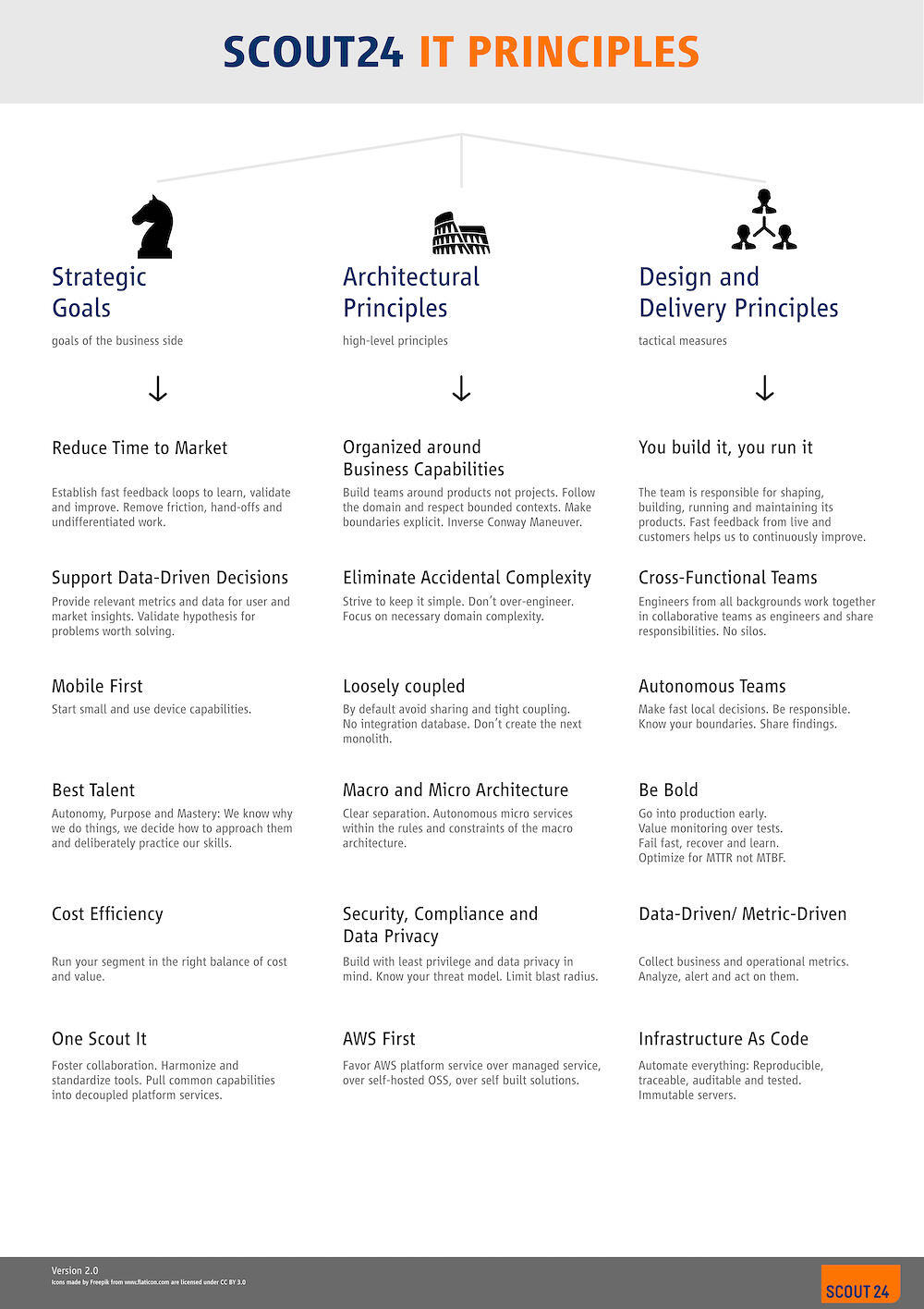

Our favoured approach is moving from mandating specific tools, protocols and solutions to framing a higher level vision with a set of principles and constraints, which still leaves a good degree of autonomy and creativity on how to fulfil them.

There are some good examples of architectural or engineering vision statements:

Co-create principles as a living artefact

While there’s still benefit in these alignment artefacts when created by a small leadership group, we find organisations get better buy-in and adoption (and better artefacts) when using collaborative approaches to creating and agreeing what the principles should be.

Once an initial version of principles has been agreed on, a very effective strategy to keep them "alive" can be to treat them like other software delivery artefacts and store them in source control, using a machine-readable format like markdown. This enables teams and organisations to keep history and track changes via standard tools and processes.

P2: Automate and delegate compliance

Since Larry Smith coined the term “Shift-left testing” in 2001 for early integration of QA into development teams, the scope of activities to be incorporated in the delivery process as early as possible has kept increasing. Front-loading activities to happen earlier and more frequently reduces risk while having a positive impact on velocity.

Extending that shift to incorporate moving governance activities into the delivery process has a number of major upsides — it means that we can now start to treat governance and compliance activities as elements of software delivery and their outputs as software artefacts. We can also make use of established techniques, processes and tools of technology platforms. Humans can switch to spending their efforts on defining what compliant software and infrastructure are, essentially what “good” looks like, and the ability to measure it. Rather than manually verifying that these criteria are met, they can let machines decide.

At a high level, this automation can and should be implemented at both build-time and run-time of a software platform.

Build-time and deploy-time automation of compliance

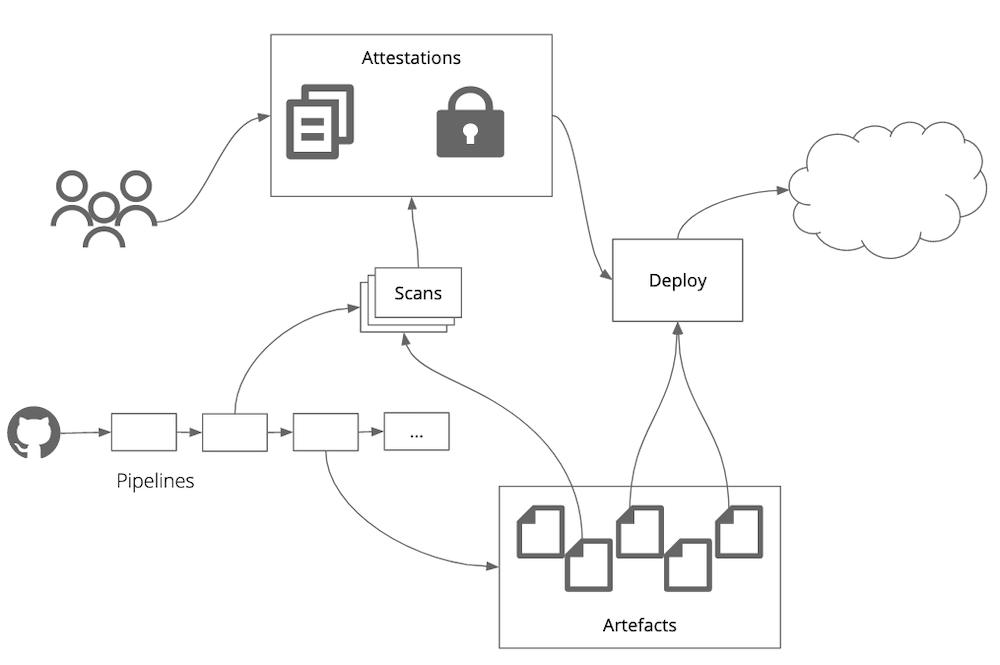

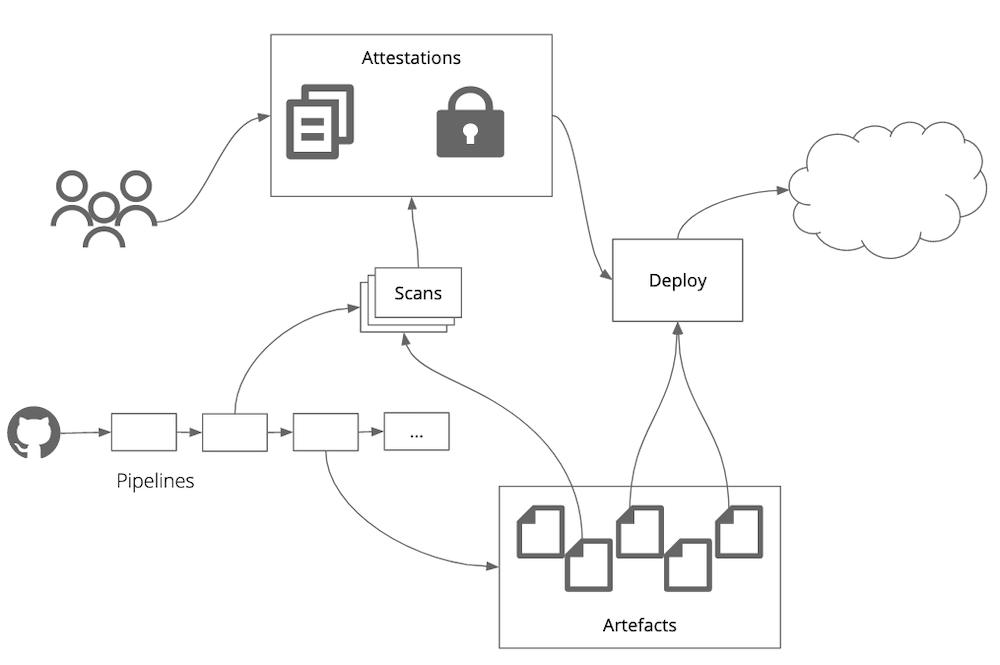

At build time we have a large arsenal of compliance tools at our disposal that most teams already use for their day-to-day software delivery activities. Using source control management we can automatically and comprehensively track all changes made to critical infrastructure. Artefact repositories give us the ability to store architectural decision records or change control artefacts. With little process and tool overhead we can also ensure artefacts relevant to audit are accurate and haven’t been tampered with through digital signatures.
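As a rough illustration of the tamper-evidence idea, the sketch below tags an audit artefact at build time and later checks that it is unchanged. It uses an HMAC from the Python standard library as a simple stand-in for a digital signature; a real pipeline would more likely use asymmetric signing so that verifiers never hold the signing key. The key, the record content and the function names are all hypothetical.

```python
import hashlib
import hmac


def sign_artefact(content: bytes, key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag for the artefact content."""
    return hmac.new(key, content, hashlib.sha256).hexdigest()


def verify_artefact(content: bytes, key: bytes, tag: str) -> bool:
    """True only if the content still matches the tag recorded at build time."""
    expected = sign_artefact(content, key)
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(expected, tag)


# Hypothetical values: in practice the key would live in a secrets manager.
key = b"demo-key-held-by-the-pipeline"
record = b"ADR-0007: adopt managed Postgres"
tag = sign_artefact(record, key)

print(verify_artefact(record, key, tag))                 # True
print(verify_artefact(record + b" (edited)", key, tag))  # False
```

Storing the tag alongside the artefact in the repository gives an auditor a cheap way to confirm that what they are reading is what the pipeline produced.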

We can also employ tools like static code analysis, enforce code quality metrics or mandatory, automated security testing of any change. Even better than providing a one-time static code scan or approval mechanism for dependencies, modern tooling provides the ability to continuously scan the codebase and dependencies for compliance or security issues. Platforms like GitHub provide ongoing code and dependency scanning capabilities for free. They even provide email alerts to their users should one of the libraries their code depends on become known to be compromised.

Most of all, we can ensure all processes that teams follow are repeatable and can’t be bypassed through the use of tools like continuous delivery pipelines. This is music to any auditor’s ear!
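One way to picture a pipeline that can't be bypassed is as a set of named controls that every change must pass before deployment is allowed. The following is a minimal sketch under that assumption; the `Change` fields and the three controls are invented for illustration and are not taken from any particular CI/CD product.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Change:
    reviewed: bool
    tests_passed: bool
    critical_vulnerabilities: int


# Each control is a name plus a predicate over the change (all hypothetical).
CONTROLS: dict[str, Callable[[Change], bool]] = {
    "peer review recorded": lambda c: c.reviewed,
    "automated tests green": lambda c: c.tests_passed,
    "no critical vulnerabilities": lambda c: c.critical_vulnerabilities == 0,
}


def failed_controls(change: Change) -> list[str]:
    """Names of the controls this change does not satisfy, in definition order."""
    return [name for name, check in CONTROLS.items() if not check(change)]


def may_deploy(change: Change) -> bool:
    """A change may be deployed only when every control passes."""
    return not failed_controls(change)
```

Because the controls are plain data in source control, a change to the rules is itself reviewed, versioned and auditable in the same way as any other change.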

Compliance as code

A number of tools have emerged to enable hands-off compliance as code, recording and cryptographically verifying the entire supply chain and lineage of an application. This ensures that teams know exactly what has been deployed to — and running in — production at any given point in time, ensuring it is compliant with guidelines and controls. Effectively, manual processes like sign-offs and human inspection are superseded by automation and cryptographic verification. The expert manual efforts move to setting and reviewing policy rather than enforcing it.
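To make the lineage idea more concrete, here is a deliberately simplified sketch: each delivery step records an attestation against the digest of the artefact it handled, and a deployment is considered compliant only if every required step has been attested for exactly that artefact. The step names and the `Attestation` type are assumptions for illustration. Real supply chain tools also sign the attestations themselves, which this sketch leaves out.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Attestation:
    step: str             # e.g. "build" or "security-scan"
    artefact_digest: str  # SHA-256 of the artefact the step acted on


# Hypothetical policy: the steps that must be attested before deployment.
REQUIRED_STEPS = {"build", "test", "security-scan"}


def digest(artefact: bytes) -> str:
    return hashlib.sha256(artefact).hexdigest()


def is_compliant(artefact: bytes, attestations: list[Attestation]) -> bool:
    """True if every required step was attested for this exact artefact."""
    d = digest(artefact)
    completed = {a.step for a in attestations if a.artefact_digest == d}
    return REQUIRED_STEPS <= completed
```

An artefact that was rebuilt or altered after scanning has a different digest, so its earlier attestations no longer count and the check fails without any human inspection.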

Run-time automation of compliance

At run-time we can make use of programmable and observable infrastructure to automatically verify compliance. This includes adherence to security and access controls as well as governance around cost or resource utilisation through cloud vendor native tools or third party infrastructure configuration scanners. Building in the right level of observability enables us to define architectural fitness functions for a platform’s security and risk posture.

Programmable infrastructure gives teams the ability to monitor and define thresholds, configure alerts and escalation and maintain these configurations as software assets. It also allows for easy and comprehensive access for independent assurance activities through internal or external audit functions and teams.
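A fitness function of this kind can be as small as a list of thresholds kept in source control and evaluated against a snapshot of observed metrics. The sketch below assumes the snapshot is a plain dictionary supplied by some monitoring source; the metric names and limits are made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    metric: str
    maximum: float


# Hypothetical thresholds, versioned alongside the platform code.
THRESHOLDS = [
    Threshold("publicly_readable_buckets", 0),
    Threshold("days_since_last_key_rotation", 90),
    Threshold("monthly_spend_usd", 20_000),
]


def breaches(snapshot: dict[str, float]) -> list[str]:
    """Describe every threshold that is exceeded or has no data."""
    found = []
    for t in THRESHOLDS:
        value = snapshot.get(t.metric)
        if value is None:
            # Missing data is reported rather than silently treated as a pass.
            found.append(f"{t.metric}: no data")
        elif value > t.maximum:
            found.append(f"{t.metric}: {value} exceeds {t.maximum}")
    return found
```

Running this on a schedule and alerting on a non-empty result is one simple way to turn a written control into something continuously verified.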

Meeting audit and regulatory requirements

Moving to a more automated and delegated compliance model can be challenging when dealing with requirements for independent audit and verification. Often, internal audit teams will need to be convinced that a new, automated way of implementing controls and tooling is effective.

It’s advisable to include the risk and security functions in the efforts to automate compliance as early as possible to mitigate this. An effective strategy is to assign product ownership for the automation effort to one of those two functions. The focus should be on demonstrating how new tools continue to mitigate existing risks rather than trying to implement existing tools and controls.

Satisfying external auditors in order to meet government or industry regulations and achieve certifications can be approached by having the new tooling produce the same outputs and reports the auditors are used to verifying, slowly replacing them over time as confidence increases.

P3: Enlist gatekeepers as collaborators

Instead of structuring an architecture team as a group of gatekeepers who issue opaque mandates, a better approach is to use that group as consultants, coaches and advisors for teams that get engaged early on in the process. They can be articulating the organisational principles and constraints, but should also be helping teams figure out how to meet them. This leads to better two-way learning, and also can be a good connection point for spotting good ideas or tools to reuse elsewhere. The architecture group can help with evangelising these harvested items.

Automation frees up capacity

Using automation frees up capacity. Scarce, specialised skills like architecture, infrastructure or security can be put to use solving the hard problems rather than policing or ticking off checklists, as approval activities move from evaluating individual instances towards evaluating overall approaches or algorithms. It also makes it easier for functions like architecture to be seen as enablers of top-line growth rather than as cost centres, which is how most compliance functions are traditionally viewed.

Assurance as capability building

It’s unlikely that the oversight role of your gatekeepers completely goes away over time, though one of their main objectives should be to decrease the amount of assurance required as automation and expert assistance increases. You can use the cycle of oversight and assurance interactions as a path for building skills in teams as they are introduced to new concepts, and are provided the opportunity to pair with experts to resolve issues.

P4: Provide paved roads (and the pit of success)

Netflix is credited with coining the term “paved road”: a set of centrally supported tools that are offered as a platform-as-a-service to their internal delivery teams. These tools may include helper libraries, service templates, build and deployment tooling, pipeline configurations or even a managed container orchestration service based on standard tooling like Kubernetes. At Thoughtworks, we refer to the same concept as a digital platform:

“A digital platform is a set of APIs, tools, services, organisational structures and operating model that are arranged as a compelling internal product and allow autonomous teams to deliver digital solutions quickly and at scale”

The idea of these platforms is to make doing the right thing easy. The environment for delivery teams is constructed to make it trivial to operate within risk tolerance, comply with architectural principles and regulatory guidelines or standards.

It does that while giving the team the ability to move as quickly and autonomously as possible. Another term used to describe the same concept is the “pit of success”, making it easy to fall into doing things the “right” way.

Architecture reference and starter kits at TELUS

TELUS Digital has organised itself into outcome teams and enablement teams. Outcome teams are outward focused and tasked with improving the customer experience. Enablement teams are focused inwards and seek to make things easier for TELUS employees. Most of the architects sit in enablement teams supporting the outcome teams.

TELUS documents its architectural reference on a public wiki. Its goal is to document principles in a lightweight, simple format for technical resources, tools, platforms and decisions. Its members can quickly and easily get context on "Why, What & How" for every part of their platform.

The TELUS reference architecture has quickly evolved and expanded throughout the organisation. However, there are a lot of concepts and tools to juggle, each with their own practices, with few domain experts available to support each concern.

TELUS uses Starter Kits as part of their paved road, which help keep its standards in check as they reduce the time to kick off new applications. TELUS Starter Kits are reference implementations of the TELUS reference architecture. A living style-guide. A production-ready, fool-proof "Hello World". They’re easily cloned to start new applications with the current TELUS practices implemented from the start. There's also a means of merging in new changes from the starter kits, to ensure they stay current.

TELUS Starter Kits are autonomous GitHub repositories, with all of the functional implementation for a full continuous integration and continuous delivery build pipeline. They implement their best practices for: Node.js, React, Redux, Express, Jenkins, Docker, Kubernetes, OpenShift, secrets management, logging, code formatting, BFFs, and much more.

Common pitfalls

Often, organisations also extend their definition of “paved road” or digital platform services to include SaaS services like issue tracking, project management and other auxiliary software delivery tools like artefact repositories or static code analysis facilities. Adding more and more shared services in a single, centralised team is a slippery slope to creating a separate DevOps team, an antipattern that creates yet another silo rather than removing friction. Organisations frequently end up creating these teams as they start combining the “build” and “run” capabilities of a larger number of shared tools and infrastructure services.

Teams that run tooling focused on security or compliance capabilities can also sometimes devolve into inadvertently creating yet another stage-gate on the way to production, a gate owned by that team.

Finally, digital platform capabilities are sometimes built without having identified a concrete consumer or use case. This can lead to orphaned paved roads that are too generic to be useful. Making it a rule to prefer extracting platform capabilities from feature delivery over building generic capabilities is a good way to protect against this.

Product mentality and product thinking

An effective strategy to avoid creating new silos is to make using the paved road non-mandatory for delivery teams. If a team chooses to stay on the paved road the platform provides, life is easier and many responsibilities are managed for them. However, teams also have the option to pick a different path, requiring them to take on the additional responsibility that comes with meeting concerns that are otherwise handled by the paved road tooling.

This simple change, of treating the developers who use your tools as internal customers, has a profound impact on how an internal platform team operates. Being required to offer a compelling, internal product enables those teams to employ strategies and tools that other external product teams already use.

This switch towards a more product-oriented mentality includes tracking of customer centric metrics (also sometimes called “developer experience” metrics), conducting customer research, creating service blueprints and the practice of creating hypotheses for potentially useful features that can be validated prior to investing in their full development. It also often leads to creating the role of an internal product manager for the platform.

Ideally, the internal product team will be in the best possible position to deliver platform solutions as it’s the core of their expertise and often involves technologies that feature delivery teams don’t get to touch frequently enough to truly get good at implementing (networking, identity management, etc.).

P5: Radiate information / rethink your communication patterns

In many traditional organisations, the flow of information is largely up and down the hierarchy — objectives and budgets flow down, status reports flow up. Dynamic governance challenges this model. Thinking of two dimensions of communication flows in organisations is helpful: north-south is how strategy and direction flows down to teams, and how the realities on the ground flow back up to direction-setters; and east-west is how teams share their lessons, discoveries and innovations to other teams and groups in the organisation. There are various techniques for making sure that both north-south and east-west communications are flowing well.

Sharing (or co-creation) of vision, principles and guardrails is ordinarily a clear north-south communication, as is a formalised design review. Architectural Decision Records (ADRs), while useful inside a team, can also be a north-south communication channel keeping people tasked with architecture governance abreast of changes at about the right level of granularity. Gregor Hohpe also has great advice on how architects can “ride the elevator” between different levels of an organisation. East-west communication may be as simple as brown-bags or internal demos, but can also be facilitated by an architecture guild / community of practice. Thoughtworks Technology Radar — as described in the next section — can operate as both east-west sharing and as a north-south opportunity to get visibility and impose direction.

Tech Radar as a lightweight governance tool

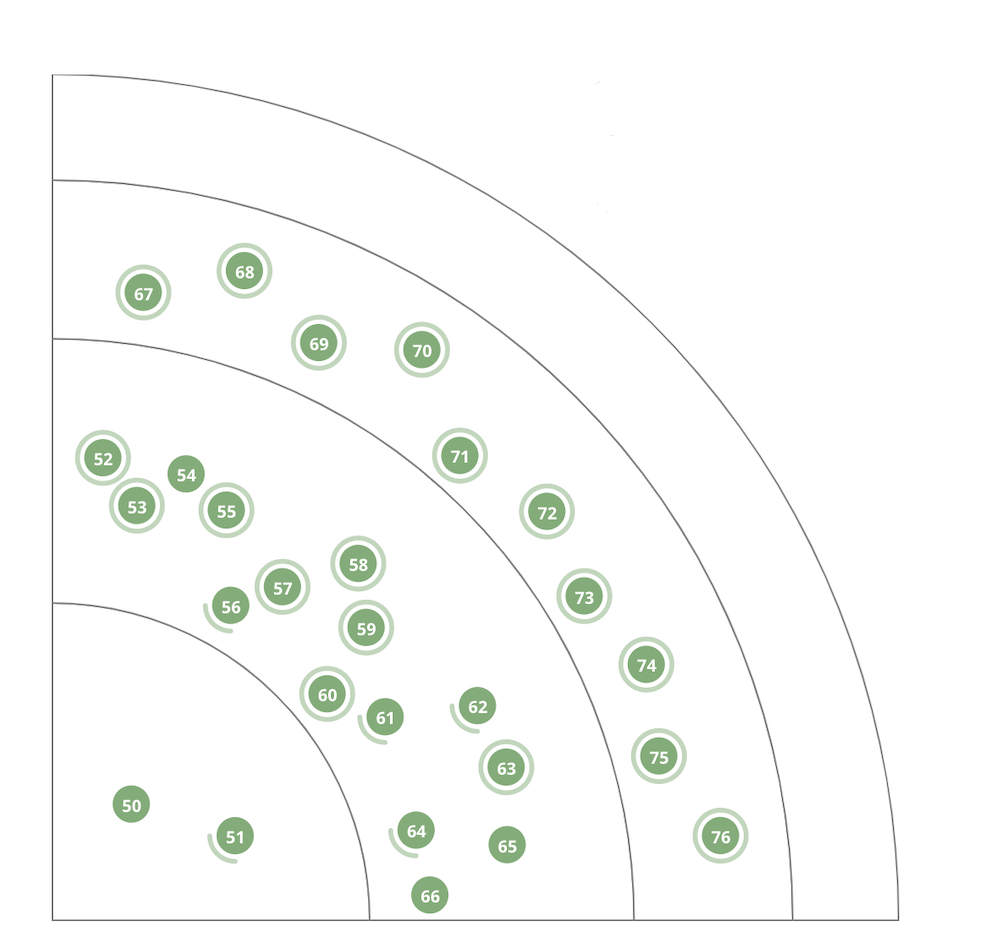

We have had success using an organisation-internal Tech Radar as a catalyst for architectural conversations, decision making and as an information radiator. Neal Ford’s article goes into more detail, but the core idea is that building and updating this artefact on a regular cadence gives visibility across teams about what tools and techniques are currently being trialled, assessed or discontinued.

Adding some discipline and rigour around when something moves from assess to trial or adopt can be a very effective tool to introduce lightweight governance.

We find that framing anything in the “Assess” ring as an experiment allows teams to share what they are trying to learn. The results can then be used to gauge whether to recommend it to other teams (e.g. moving into "Trial"). In some cases we have also found it useful to apply WIP limits for how many items can be in Assess or Trial. This forces organisations to finish the assessment of one JavaScript framework before they can move onto the review of another one.
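The WIP limit idea can be sketched as a small guard around a radar's data. The limits of three items in Assess and two in Trial are invented for the example, as are the class and method names; the point is only that placing an item in a full ring is refused until something else moves on.

```python
# Hypothetical limits; rings without an entry are unlimited.
WIP_LIMITS = {"Assess": 3, "Trial": 2}


class Radar:
    def __init__(self) -> None:
        self.rings: dict[str, str] = {}  # item name -> ring name

    def count(self, ring: str) -> int:
        return sum(1 for r in self.rings.values() if r == ring)

    def place(self, item: str, ring: str) -> None:
        """Put an item in a ring, refusing if that ring is at its WIP limit."""
        limit = WIP_LIMITS.get(ring)
        already_there = self.rings.get(item) == ring
        if limit is not None and not already_there and self.count(ring) >= limit:
            raise ValueError(f"{ring} is at its WIP limit of {limit}")
        self.rings[item] = ring
```

With these limits, a fourth framework cannot enter Assess until one of the three already there has been moved to another ring or dropped.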

Internal tech radar at REA

The REA Tech Radar is a curated collection of technology items that make up their technology landscape.

It is an authoritative instrument for aligning decisions on what to use and what not to use in their systems and products, and for removing or replacing old technology. It is updated on a quarterly cadence. Radar entries follow well-defined transition rules to move from one “ring” to another.

Adopt ring. These are technologies that are considered defaults for REA. They have agreed these are good choices and that they have the skills and resources to achieve excellence with them. Choosing an “adopt” technology is a no-brainer.

Experiment ring. The experiment ring is for technologies they are actively trialling, by building something. They have not committed to this technology, and if it is not successful they are committed to removing it.

Hold ring. The hold ring says “don’t start anything new with this technology”. They might maintain existing systems, but should not use this technology for new development. Generally items will move from Hold to Retire over time.

Retire ring. The retire ring is for technologies that are under active, funded retirement. They should definitely not be using this technology for any new development.

Decision records for visibility

Using architectural decision records (ADRs) is a great way to give external visibility into key decisions that are being made on individual teams. This can be a way to communicate with external groups who may want to understand decisions, their assumptions and their implications. It's also a great way for communicating with "future you" or new team members.

It can also be useful to extend the concept of ADRs to the decision making processes for larger organisational constructs, introducing an equivalent to the ADR that’s valid across multiple teams and, finally, decision records that are representative of decisions made across the entire organisation. Different decision making instruments can be treated with different levels of “care”, allowing for appropriate risk tolerance and speed of decision making.
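As a sketch of what such a record might look like when kept as a software artefact, the example below models a decision record and renders it to Markdown. The context, decision and consequences sections follow a commonly used ADR layout; the `Scope` field, with its team, multi-team and organisation levels, is our own assumption to reflect the different levels described above, and all names are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Scope(Enum):
    TEAM = "team"
    MULTI_TEAM = "multi-team"
    ORGANISATION = "organisation"


@dataclass(frozen=True)
class DecisionRecord:
    number: int
    title: str
    scope: Scope
    status: str
    context: str
    decision: str
    consequences: str

    def to_markdown(self) -> str:
        """Render the record as a Markdown document suitable for source control."""
        return "\n".join([
            f"# {self.number}. {self.title}",
            "",
            f"Scope: {self.scope.value}",
            f"Status: {self.status}",
            "",
            "## Context", self.context, "",
            "## Decision", self.decision, "",
            "## Consequences", self.consequences,
        ])
```

A wider scope could then be tied to a heavier review process, while team-level records stay cheap to write and change.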

P6: Get comfortable with evolution

The Evolutionary Architecture book gives lots of advice on how to steer your architecture as it evolves and verify that it's meeting key organisational concerns. If the organisation you're working in is still getting used to this idea and is used to more formal design reviews, an important piece of coaching is for these review groups to get comfortable with the level of fidelity and completeness of architecture that they're seeing at different points in a system's evolution. Many of these review groups are accustomed to seeing very polished "end state" diagrams (which may or may not bear any relationship to what the end state ends up looking like). Getting them comfortable with how architectures evolve, and what they should be caring about when, is a big learning curve.

Comfort with evolution will be necessary to embrace the new reality of your systems being in a constant state of flux. It will also likely be necessary to navigate the organisational changes required to introduce and migrate to this new governance posture.

How to get started

The best path for migrating to a new governance approach is going to be very dependent on your starting point and your risk appetite, but there are some high level pointers that we can give, depending on the failure mode (too much or too little governance) you want to avoid.

Go to where the action is

If you’re coming from the more traditional, hierarchical governance approach, then an important first step is to work on how to push decision-making closer to where the action is. This traditional governance approach is often typified by what we might call the Department of “No!”, whose job appears to be to block any type of change from progressing. This department may show up as an architecture review board or a change approval board (CAB), but whatever the name, you can almost guarantee that this group is several steps removed from the teams actually doing the work. Interestingly, the data from the 2018 State of DevOps report summarised in Accelerate shows a negative correlation between stability and the existence of a CAB: it’s not just ineffective, it can be actively detrimental.

We found that external approvals were negatively correlated with lead time, deployment frequency, and restore time, and had no correlation with change fail rate. In short, approval by an external body (such as a manager or CAB) simply doesn’t work to increase the stability of production systems, measured by the time to restore service and change fail rate. However, it certainly slows things down. It is, in fact, worse than having no change approval process at all.

If this is your starting point, then you need to ask yourself, and the various custodians of organisational risk represented across the architecture or change approval groups, what would have to be true in order to grant teams more autonomy and decision rights. The answers will likely centre around leaning on automation, providing clear context, and ensuring the right skills are on hand, while maintaining a lighter weight and more responsive level of oversight and support as you transition models.

We’ve found it effective to take a risk-based approach: focusing on the desired outcomes from a risk perspective rather than on the specific implementations or controls that are currently in place (approval processes, CABs, etc.).

Start the conversation

If you’re coming from the other end of the spectrum, from an organisation with a history of low oversight and few restrictions on what teams can do, then your first steps will likely be to begin the conversation about what the limits of team autonomy should be, in which areas teams should be balancing multiple risks, and where the opportunities are for sharing. Rather than the Department of “No!” described earlier, this world is sometimes known as “no department”. While you want to be careful not to accidentally stand up that Department of “No!”, you do want to begin making sure that your organisational risks are being handled in a sensible and informed manner.

A first step in this direction may be to collaboratively create a Tech Radar to share and align where organisational momentum is heading, with the opportunity to adjust that direction. Similarly, an exercise to create a vision and principles statement may be helpful, as can asking teams to emit ADRs to a well-known location.

Incremental roll-out

Changing your risk management approach naturally has some risk attached to it. So it can make sense to work out an incremental or iterative path to introducing the changes. Ideally you will be ratcheting up trust in the new approach as you ratchet down reliance on the older processes. Sometimes you may want to limit the initial remit to one area of the business or one category of applications, such as newer cloud-native services, where it’s easier to lay the foundations of automation required to give teams aligned autonomy in a safe manner.

Wherever you choose to begin, we believe there’s no time like the present to get started.