Guidelines for Structuring Automated Tests

In every project, these questions come up: what kind of automated tests should we write? What is the right level of testing? How much test coverage is “good enough”?

As mentioned by Martin Fowler, it is very important to have Self Testing Code. I would like to start with guidelines that I’ve found to be useful for planning and building test automation suites.

Guidelines for structuring automated tests

#1 Structure

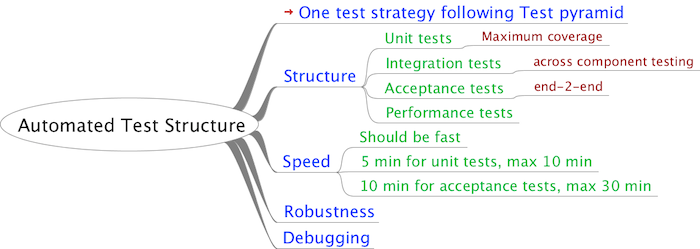

Formulate one test strategy for all your automated tests, modeled closely on the Test Pyramid. Prioritize unit tests over any other type of test, and use them to cover most test scenarios. Keep brittle UI tests that are tough to maintain to a minimum. Avoid repeating tests: build the test suites collectively, and do not retest a scenario that is already covered in another suite. Revisit and adapt the test structure periodically, and refactor tests to keep your Test Pyramid in shape. Calculating overall test coverage, including all kinds of tests, can provide confidence during builds and releases.

Here is a quick walkthrough of the various types of tests with pointers:

- Unit tests: These are fast-running tests that are quick to debug. They should make up the bulk of your testing strategy.

  - Write unit tests for each and every test case, for models, controllers and views

  - The unit test suite should typically run in around 5 minutes, with a maximum of 10 minutes

  - Cover as many scenarios as possible using “fast” test types. For instance, write JavaScript unit tests for client-side validations rather than Selenium/Capybara-based acceptance tests

  - Is hitting the database okay in unit tests? Yes, if the tests stay fast. However, by hitting the database in unit tests, we lose the ability to run them in parallel

- Integration tests: These run slower than unit tests and test component integration points.

  - These tests cover interactions and contracts between the system and external systems

  - Typical run times range from 5 to 15 minutes

- Acceptance tests: These are end-to-end (E2E) test cases. They consist of happy user journeys and critical failure scenarios that cannot be tested by unit tests.

  - Keep the numbers down. Ask yourself: if I can write a unit test for this scenario, should I write an acceptance test?

  - These tests should run in a production-like environment, deployed from the build artifact.

  - They typically run for 10 to 30 minutes.

  - They help to verify that the E2E integration flow is working.

- Performance tests: These are long-running tests. They need to run multiple times a day, and within the acceptable time frame for a critical production fix.

#2 Speed

The definition of fast is debatable, and the team should collectively decide the acceptable time for the test suite to run and define it for all test types - unit, integration and acceptance. To help decide the timeframe, ask yourselves:

- How frequently does your team check in code (remote push)? With 2-3 check-ins a day, a 10-minute test run is acceptable. With 20 check-ins a day, 10 minutes is not acceptable. Making the test suite run 1 minute faster can save 1000 minutes collectively across the team.

- How much time is acceptable to roll out a critical bug fix to production (including your Continuous Integration pipeline)? Lower is better, but don’t kill yourself speeding up your tests. Be pragmatic: in most projects, up to 30 minutes from check-in to deployment is acceptable.

- Can you run tests in parallel? One of the easiest ways to make a test suite faster is to run its tests in parallel. In particular, if you have unit tests that hit the database, you lose the opportunity to run them in parallel.
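The 1000-minute figure is not derived in the text. As a rough sketch in Python, one way to arrive at it is to multiply the check-ins per day by the minutes saved per run and by the number of working days. The 50-day window and the `minutes_saved` helper are assumptions made for illustration only.

```python
def minutes_saved(checkins_per_day: int, saved_per_run: int, working_days: int) -> int:
    """Total waiting time saved across the team, in minutes."""
    return checkins_per_day * saved_per_run * working_days


# 20 check-ins a day comes from the text; 50 working days is an assumed window.
print(minutes_saved(checkins_per_day=20, saved_per_run=1, working_days=50))  # 1000
```

With only 3 check-ins a day, the same one-minute improvement saves far less over the same window, which is why the acceptable run time depends on check-in frequency.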

#3 Robustness

Tests should NOT be fragile. A test should not fail without a reason, and a failing test should not start passing merely because it was re-run. Watch the following kinds of automated tests closely:

- Time-dependent tests. These pass when they are written, then fail randomly depending on the time of day, or start failing after some time. Here is some help on how to write such tests

- Browser-based acceptance tests. These tests often fail randomly because of browser state, OS-specific behavior and browser performance. A headless browser is preferred, since it provides stability and predictable behavior. Avoid testing JavaScript behavior and scenarios heavily with Selenium-based acceptance tests. Instead, use Jasmine-based pure JavaScript unit tests, which are more robust and faster

- Tests depending on external systems or services. If any of your tests depends on a service or system that is outside the test’s control, that dependency should be mocked
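One common way to make a time-dependent test deterministic is to pass the current time in, rather than reading the system clock inside the code under test. The sketch below is in Python and uses a hypothetical `is_business_hours` function with assumed 09:00 to 17:00 opening hours; it illustrates the idea and is not a prescribed implementation.

```python
from datetime import datetime, time
from typing import Optional


def is_business_hours(now: Optional[datetime] = None) -> bool:
    """Hypothetical code under test: open from 09:00 up to, but not including, 17:00."""
    # Production callers omit `now`; tests pass a fixed value.
    current = now if now is not None else datetime.now()
    return time(9, 0) <= current.time() < time(17, 0)


def test_open_at_noon():
    # A fixed timestamp gives the same result at any time of day.
    assert is_business_hours(datetime(2024, 1, 15, 12, 0))


def test_closed_at_midnight():
    assert not is_business_hours(datetime(2024, 1, 15, 0, 0))
```

Had the tests called `is_business_hours()` without an argument, their result would depend on when the build happens to run.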

#4 Debugging

When a test fails, it should point to the broken code. If it takes a long time to find out why a test failed, then something is wrong with the test. Also use tests as a debugging tool, since an automated test is the quickest way to run a specific scenario. Typical causes of a hard-to-diagnose failure are:

- The test is coarse-grained and tries to test too many things. Define the unit under test and use a TestDouble to isolate unwanted dependencies

- Bad test data that doesn’t help to identify the root cause. While writing tests, avoid test data like a,b,c or 1,2,3. With such data it is very difficult to understand the failing use case
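To illustrate the point about test data, here is a small Python sketch. `is_valid_email` and its deliberately simplistic pattern are hypothetical stand-ins for the code under test. The point is that the test name and the data together say which case is being exercised.

```python
import re

# Simplistic pattern, assumed for illustration only: something@something.something
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def is_valid_email(value: str) -> bool:
    """Hypothetical validation under test."""
    return EMAIL_PATTERN.match(value) is not None


def test_rejects_email_without_domain():
    # "jane.doe@" shows at a glance what is wrong with the input; "a" would not.
    assert not is_valid_email("jane.doe@")


def test_accepts_well_formed_email():
    assert is_valid_email("jane.doe@example.com")
```

When `test_rejects_email_without_domain` fails, the failing use case is readable from the report without opening the test body.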

In Practice

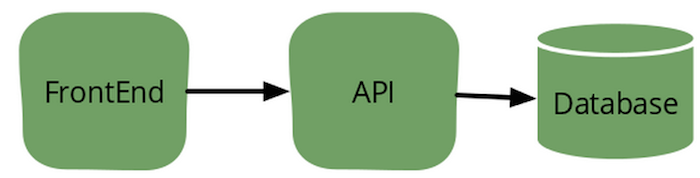

Let’s take a classical 3-tier application architecture and define automated tests for the “Sign-up” feature.

FrontEnd

- Write controller-level unit tests to cover all scenarios, using a TestDouble for service calls to API components

- View-level unit tests are NOT required, since the sign-up view has few variations and will be covered by the acceptance test. View-level unit tests are valuable when the view changes based on the data set

- Write JavaScript unit tests for all client-side validation cases, e.g. email, phone, etc.

API

- Write service-level unit tests (API controller tests) with mocked repository classes. If hitting the database is fast and makes the tests easy to write, choose your path. However, do not try to unit test model behavior in a controller test; write a model-level unit test for that. It’s okay to use a real collaborator if you like, but the objective should be to test only controller logic

- Write model-level unit tests for all the validation cases, e.g. email, phone, password hashing, checks for mandatory fields, etc.

- Write repository-level unit tests that hit the database. Some extremists might classify these as integration tests, which is fair. Since these tests run fast and provide exact details of what’s wrong when they fail, I am okay to put them as unit tests
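A controller test with a mocked repository might look like the Python sketch below. `SignUpController`, its `exists`/`save` repository methods and the status codes are all hypothetical names assumed for illustration; only `unittest.mock.Mock` is a real library class.

```python
from unittest.mock import Mock


class SignUpController:
    """Hypothetical API controller: decides what to do, delegates storage."""

    def __init__(self, repository):
        self.repository = repository

    def sign_up(self, email: str, password: str) -> dict:
        if self.repository.exists(email):
            return {"status": 409, "error": "email already registered"}
        self.repository.save(email, password)
        return {"status": 201}


def test_sign_up_saves_new_user():
    repository = Mock()
    repository.exists.return_value = False

    response = SignUpController(repository).sign_up("jane.doe@example.com", "s3cret-pass")

    assert response == {"status": 201}
    repository.save.assert_called_once_with("jane.doe@example.com", "s3cret-pass")


def test_sign_up_rejects_duplicate_email():
    repository = Mock()
    repository.exists.return_value = True

    response = SignUpController(repository).sign_up("jane.doe@example.com", "s3cret-pass")

    assert response["status"] == 409
    repository.save.assert_not_called()
```

Neither test touches validation rules or the database, so a failure here points at controller logic only.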

End 2 End

- Write E2E acceptance tests with one happy path and one failure scenario only. Avoid testing validation in acceptance tests. Also avoid asserting on the validation error message; the test should just verify the error case by virtue of the redirect to the same page. All validation scenarios, including their messages, are already covered in unit tests

- A performance test may NOT be required, since a user goes through this use case only once

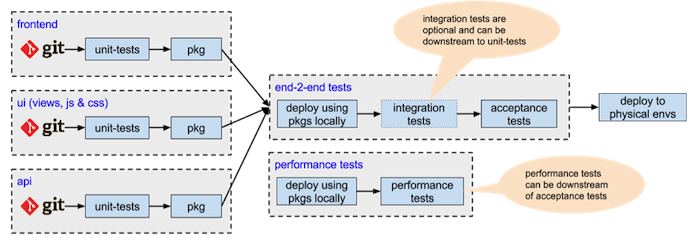

How my CI pipeline will look:

FAQs

Q When should I mock?

A By default I do not mock or stub, unless I have reasons to do so. I follow these guidelines for deciding on mocking.

- The test depends on external components that are outside the test’s control, e.g. web service calls. If my test depends on external services whose responses I can’t control, unit tests become fragile and fail for reasons like unexpected responses or network issues. Use a library like vcr to record and replay web service calls

- The failing test doesn’t point to the broken code. This happens more often when tests are coarse-grained and try to test too many things at once. A Test Double can help here by isolating code that is not under test

- The test suite takes a long time to run. If my tests take too long to finish because of a remote call, I might mock it and keep a single test that covers the real call, rather than pay the cost in every test. Isolate time-consuming code with a TestDouble

Be careful of overdoing mocking. Use different strategies of Test Double to achieve isolation.
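A mocking library is only one Test Double strategy. As a sketch of another, a hand-written stub can stand in for a remote service whose responses the test cannot control. In the Python example below, the domain-checking service, `StubDomainChecker` and `can_register` are all hypothetical names assumed for illustration.

```python
class StubDomainChecker:
    """Hand-written stub for a hypothetical remote service that flags throwaway email domains."""

    def __init__(self, disposable_domains):
        self.disposable_domains = set(disposable_domains)

    def is_disposable(self, domain: str) -> bool:
        # Canned answer: no network call, so the response is fully under test control.
        return domain in self.disposable_domains


def can_register(email: str, checker) -> bool:
    """Hypothetical code under test: refuse sign-ups from disposable domains."""
    domain = email.rsplit("@", 1)[-1]
    return not checker.is_disposable(domain)


def test_rejects_disposable_domain():
    checker = StubDomainChecker({"throwaway.test"})
    assert not can_register("jane.doe@throwaway.test", checker)


def test_accepts_regular_domain():
    checker = StubDomainChecker({"throwaway.test"})
    assert can_register("jane.doe@example.com", checker)
```

The stub keeps these tests fast and deterministic; a single separate test can still exercise the real service.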

Q Which Test Double strategy to go for?

A In a single project, it is okay to have multiple ways to achieve a Test Double. Choose whichever is best suited to the context. Often, mocking makes tests easy to write and maintain. For models, fake objects created with the builder pattern are best suited (libraries like factory-girl can be handy).
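As a rough sketch of the builder approach for model objects, the Python example below fills in sensible defaults and lets each test override only the field it cares about. `User`, `UserBuilder` and the default values are hypothetical; this is not how factory-girl itself is implemented.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class User:
    """Hypothetical sign-up model."""
    email: str
    phone: str
    password: str


class UserBuilder:
    """Builds a valid User by default; tests override single fields."""

    def __init__(self):
        self._user = User(
            email="jane.doe@example.com",
            phone="+1-202-555-0143",
            password="correct-horse-battery",
        )

    def with_email(self, email: str) -> "UserBuilder":
        self._user = replace(self._user, email=email)
        return self

    def with_phone(self, phone: str) -> "UserBuilder":
        self._user = replace(self._user, phone=phone)
        return self

    def build(self) -> User:
        return self._user


def test_builder_overrides_only_the_requested_field():
    user = UserBuilder().with_email("not-an-email").build()
    assert user.email == "not-an-email"
    assert user.phone == "+1-202-555-0143"  # untouched default
```

A validation test can then state just the invalid field, which keeps the intent of the test visible.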

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.