Testing goes hand in hand with every piece of modern software development work. If something is important enough to build, it’s important enough for us to know that it works. In application software development, people have been thinking of how to properly test systems for ages, thus the practices are quite solid. Yet, when we start a data engineering project, things seem uncertain. In this article, I want to discuss the iterations of the testing strategy we went through in one of the projects I worked on. Our process started out with many manual steps, which became bottlenecks for us. Though when we started automating some of the manual steps, we realized that we might be going too far with it. The pendulum had swung to the other end and all of the tooling we had constructed started slowing us down. In the end, we arrived at a strategy that didn't differ that much from a one that more typical enterprise software projects tend to use.

System

The goal of that particular project was to provide a healthcare company insights into how their products are being used. The company was quite new to making decisions based on data and the development environment was quite constrained due to privacy and legal considerations. Thus, we had to go for quite a traditional approach of creating an analytics platform that relies on batch processes that aggregate data and store results in a warehouse.

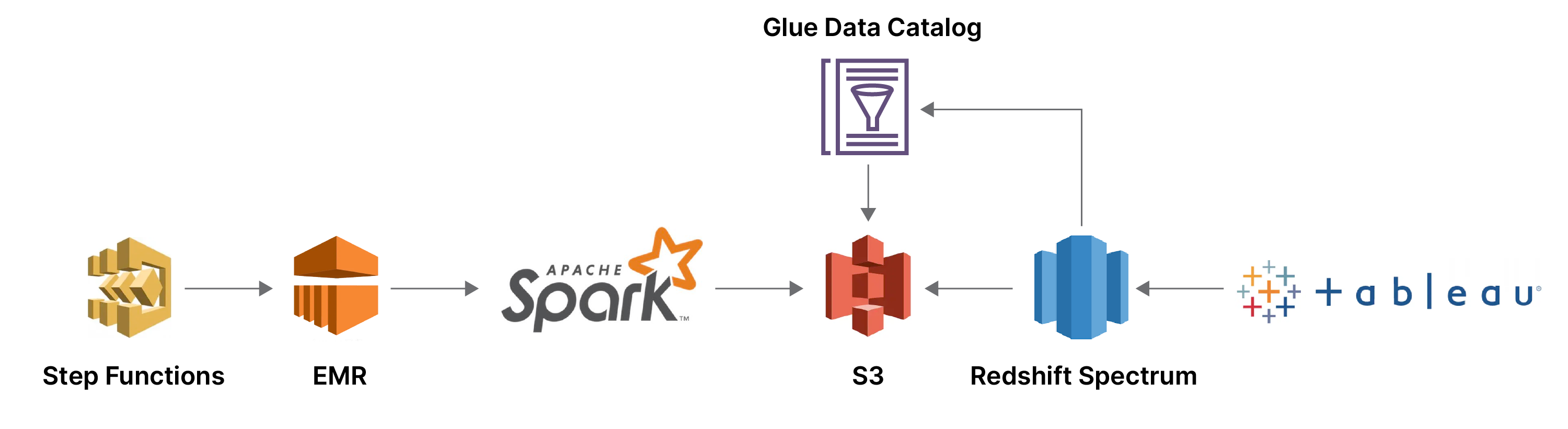

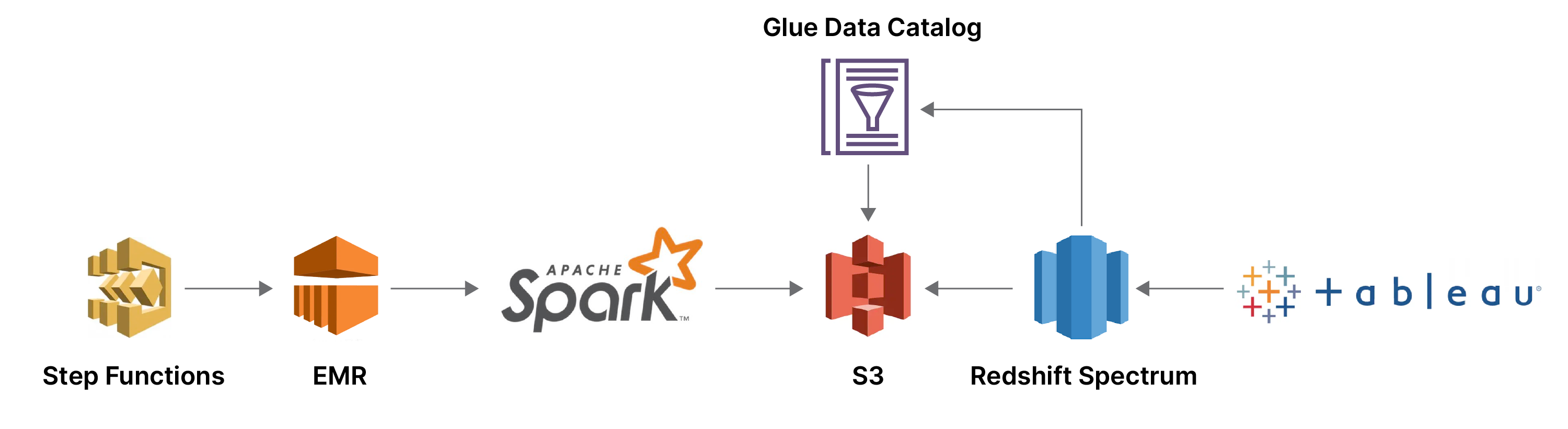

We were working on a data platform consisting of a number of data pipelines picking up data from some AWS S3 buckets, aggregating it, and storing it in another bucket. The high-level architecture of the system was the following:

The pipelines were orchestrated using AWS Step Functions which spun up an EMR cluster.

Spark applications were run on the EMR. The applications calculated the metrics, combined them, and stored them as parquet files on S3 buckets.

The files were queryable by Redshift Spectrum. Due to the use of Spectrum, there was also a Glue Data Catalog connected to the results bucket. The Data Catalog stored all the metadata.

The tables in Redshift were accessible through Tableau for analysts who wished to visualise the data.

The resulting data was stored as denormalized wide tables, making it easy for analysts to access data without needing to perform extra joins. An example of this is a table shown below, with the metrics on the right and the dimensions on the left.

date |

age |

gender |

museum |

city |

country |

visits |

unique visitors |

new memberships |

| 2021-08-23 | 30 |

Female |

Joan Miró Foundation |

Barcelona |

Spain |

4500 |

2000 |

15 |

| 2021-08-23 | 25 |

Male |

Louvre Museum |

Paris |

France |

39000 |

31000 |

68 |

The part of the system responsible for aggregating the data consisted of a number of standalone Spark applications orchestrated by AWS Step Functions. Each application has three layers - an entry point, a job that cleans data and writes the results, and a metric which aggregates cleaned data.

Evolution of the testing strategy

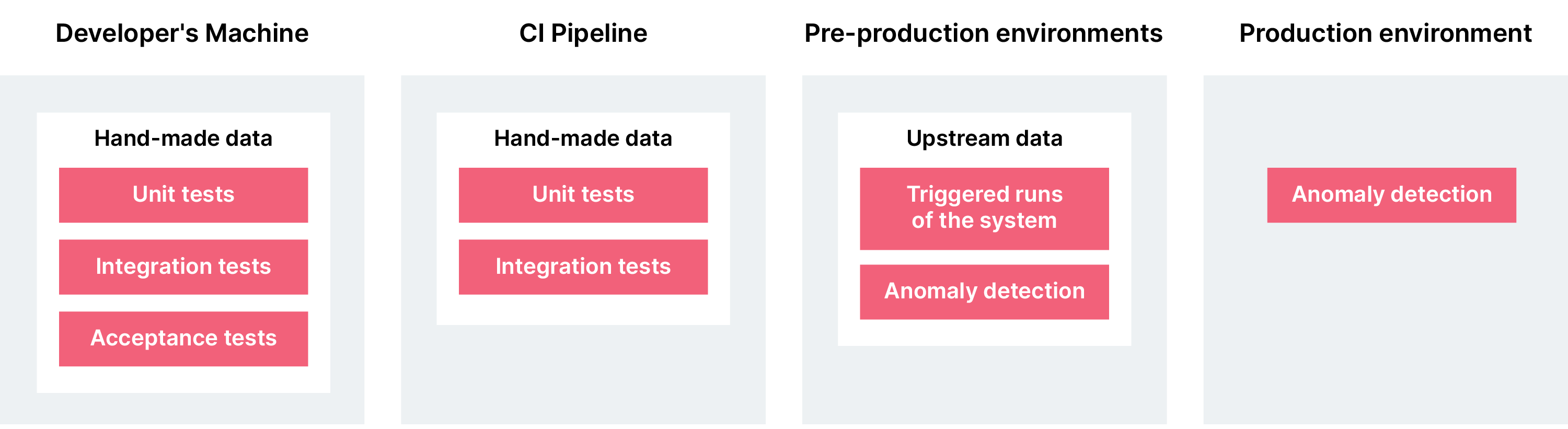

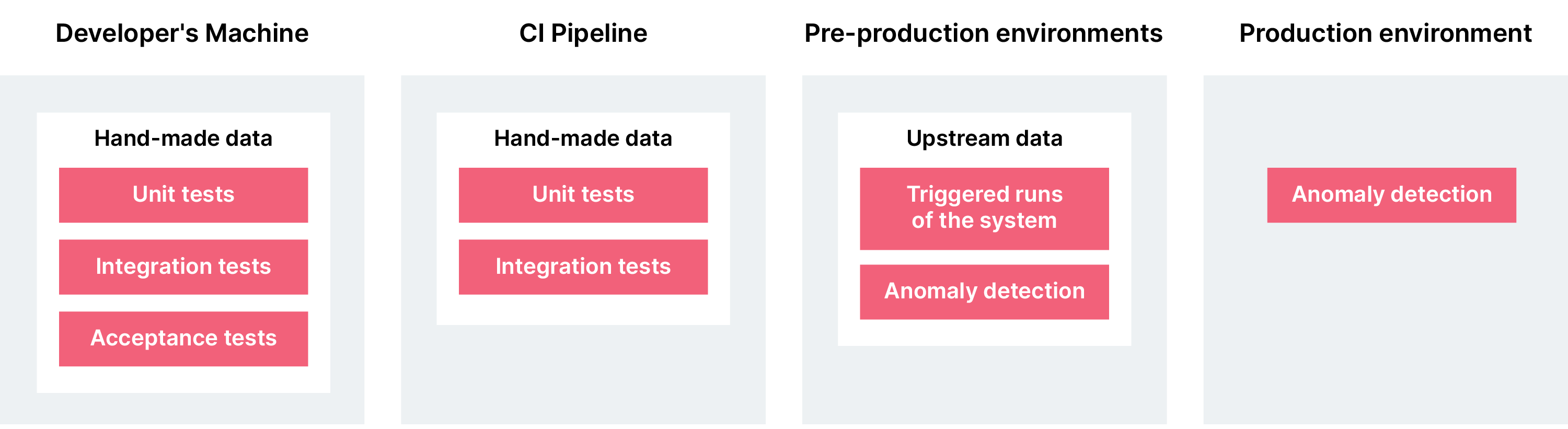

During the time I was in the project, we went through three testing phases. They were:

Test everything - we wrote extensive unit, integration, and acceptance tests with hand-crafted files and DataFrames.

The rise of DSL - we started generating files and DataFrames with a custom-built DSL.

The stumble of the DSL - we scaled down our use of the DSL because of how complicated the DSL started becoming.

I will explain each of the phases in more detail below.

Test everything

At the beginning of the project, we were developing each metric separately. Usually, we wrote the metric first, then the job layer, and finally, we plugged it into the pipeline. This meant that every metric had its own thorough test suite that had been written from scratch.

As an example, suppose we were writing a daily metric for unique visits. As a data source of "facts", we would have a csv file with records of all the visits of that particular day. To enrich this data we would use two additional sources, which would be the visitors’ profile containing optionally their demographic information, such as age and gender, and a snapshot of museum information, such as its name and location.

This lead to a number of unit test scenarios, such as:

The happy path where all the data sources had data

One of the reference data sources missing

Second reference data source missing

Fact table missing

Data sources containing partial information

For the unit tests, we manually created DataFrames with input data and compared the results to the expectations. The expected DataFrames were also created manually. Each of the metrics had about five to eight unit tests testing mostly similar things. Developing these tests followed a very similar pattern making it very prone to errors, since we were copy pasting the same tests and changing only some details.

The next layer of the system consisted of jobs that coordinate and clean data sources, and call the metric. Every metric belongs to one job, but it can be invoked multiple times with different parameters. In this layer we were testing the integration between metrics and jobs. These integration tests took files as input and compared the results of the metric with manually created files with expected results.

While there exist positive aspects to using manually created files, such as being able to quickly simulate various scenarios of missing data and data type incompatibilities, there are a lot of drawbacks as well. The main ones are:

It takes more effort to create the files and even more to modify if something changes in the business logic.

Tests using manually created files don't capture domain knowledge.

Tests run slower.

The main issue with using files becomes the cost of maintenance and keeping tests independent as the test suite grows. It may easily happen that two tests run using the same days and the files start overlapping.

The final piece of our initial testing strategy was to have tests covering the end to end flow of the system. In our case that was calculating all the metrics we needed and combining them into final tables. We created tests that would upload files to S3, trigger the job under test, download the results from S3 and compare them with expected results. We called them acceptance tests because they were coupled to a verification document that we had to produce for the clients QA process. Here we encountered the same problems as in the previous tests, they were difficult to maintain due to the existence of a lot of files. Another issue we faced was that since we were using real infrastructure in these tests, the feedback loop was very long and since we didn't know how to add them to a CI pipeline, they became quite brittle.

We had a pre-production environment that tried to mimic the production environment. Thus, our upstream dependencies were producing data for us and our system was scheduled to run periodically. This setup was meant to help us identify whether our dependencies were following the agreed data contracts.

As this strategy was settling, we realized that there is a lot of code duplication in the functionality and testing. We recognised that the development of the metrics followed the same pattern of taking fact tables from different sources and adding dimensions to them, and then performing aggregations on those enriched tables. The common parts of enriching with dimensions and performing aggregations were extracted into separate functions that could be reused across all metrics. This allowed us to speed up the development process and write less test code.

In summary, at the end of the first phase, we had three layers of testing in the form of unit, integration, and acceptance tests. All of them required manual work of setting up test data, with the integration layer being the most time consuming since there were many tests that required the creation of multiple test files.

The rise of the DSL

With the struggles of managing manually created files slowing down the development, we realised that a better option would be to generate the files when we need them, so at runtime. Thus was born the idea of creating a DSL for generating the files we needed. We explored using some existing data generation tools, such as faker, but none of them quite fit our domain.

Some time earlier, when faced with the need to run performance tests for our system, we created a small application to generate a massive amount of synthetic data. We used generators available in the ScalaCheck library. Most of the data was meaningless, but we did create specific generators for columns that had to contain meaningful data for the validation rules of our pipelines to pass.

Our use of ScalaCheck generators closely resembles property-based testing. This approach would have allowed us to test for corner cases that are harder to anticipate. With the DSL though, we were more interested in a more typical example-based testing approach with developers picking meaningful test scenarios.

We considered extending our ScalaCheck generator application, but decided to keep the two testing approaches separate. We ended up writing our DSL from scratch using a simple Builder pattern.

The DSL for generating files for the above example would look like this:

val museumScenario = new Scenario()

museumScenario

.visitor("José", LocalDate.parse("1996-01-09"), Gender.MALE, "Estonia")

.atMuseum("José", "Joan Miró Foundation", "Barcelona", "Spain")

.visitsMuseum("José", "Joan Miró Foundation", LocalDate.parse("2021-08-23"))

.and

.visitor("Maria", LocalDate.parse("1991-01-09"), Gender.FEMALE, "Belgium")

.atMuseum("Maria", "Louvre Museum", "Paris", "France")

.visitsMuseum("Maria", "Louvre Museum", LocalDate.parse("2021-08-23"))

.build()

The DSL can generate files for the fact table and the reference tables. We started using it across all of our testing layers - unit, integration, and acceptance tests. We were able to remove almost all of the manually created test files and make tests more descriptive. This helped us reduce the cognitive load associated with the development of the metrics.

Once the initial elation of not needing to create files passed we started feeling the overhead that came with the extendability of the DSL.

We had created an anti-corruption layer between the data sources and our metrics, meaning we had special loaders that were cleaning data and the job classes were mostly coordinating data sources and calling the metric with the right parameters. The introduction of the loaders meant that our source data had now multiple shapes, raw and cleaned, and the DSL had to support all of them. Writing any new tests and functionality required changes to the DSL. We didn't treat the DSL as production code and didn't design it as well as we could have.

The stumble of the DSL

With the implementation of the DSL, we started taking a look at our testing strategy in general. We realized that though we were testing a lot, but not necessarily testing the right things. For example, the integration test layer was essentially testing the same things as the unit test layer with the addition of file reading and writing. Additionally, we realized that we had gone overboard with the use of the DSL. Unit tests, which should be testing a very isolated piece of code, had become too complicated. Integration tests were also way too heavy considering they should have only tested the coordination of the data sources and metrics.

We took a decision of largely rolling back the use of the DSL and keeping it only for the acceptance tests. In the unit tests, we started using DataFrames again, this time with cleaned data, and focusing only on columns that are needed for the functionality at test.

For the integration tests, we stopped using real data all together and transitioned to using mocks. With mocks, we could focus on just the interactions between the data sources and the metrics. We could do that because all of the business logic was already being tested on the loader and the metric level. Since we started testing only the interactions between components, we were able to stop thinking about what kind of data needs to be used in those tests. Instead of generating meaningful or even meaningless data, we were able to use Scala's case classes with default values in the tests and still get the same results.

For testing loaders, we returned to the use of manually created files. They were not files from production rather they tried to mimic the properties of the files we expected to see in production. This strategy made sense to us since it was in line with what should be tested for the loaders. While for the unit tests we constructed DataFrames with just enough data, for the loaders we wanted to use files that reflected reality as much as possible. Yet, due to the loaders having a predefined set of functionalities, namely loading in the file, testing them with real files didn't become the hassle that testing metrics with real files used to be.

Originally, we had two main considerations for introducing the DSL: test maintainability and capturing domain knowledge. We didn't want the developers to be fiddling with real files and we wanted the tests to reflect their intentions. Developers shouldn't have had to go into real test files to see what the test was testing. After rolling back the DSL, we realized that our domain wasn't complicated enough to warrant the use of a DSL. A DSL would have suited better if we had to keep track of complicated edge cases and our tests had to serve as real documentation. This wasn't the case for us, since most of our aggregations were quite simple and followed similar patterns.

Summary

In the end, we ended up with a strategy that isn't necessarily that surprising. The unit tests still use DataFrame to test an isolated piece of functionality, the integration tests focus on the interactions between components, the acceptance tests capture the end to end flow. It reminds us of a typical testing strategy inspired by the testing pyramid and years of testing enterprise software systems.

We realised that manually creating test files increased the cognitive load of the team beyond its ability to deal with it. The introduction of the DSL to automate creating files seemed like a good idea but it had the same effect on the cognitive load. It was a mistake to rely on automated data generation on every level of testing. Instead, the answer for us was to rethink our testing strategy and be more intentional about what and how we test. We got rid of most of the tests using I/O, condensed DataFrames with test inputs, and started using mocks to test interfaces. The clarity we achieved by rethinking our strategy helped us make our test suite more robust and gave us the confidence that we can keep extending it in an efficient manner. Rewriting most of the test suite has been painful, but worth it in the end.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.