There are many well-documented benefits of continuous delivery, such as lower-risk releases, lower costs, higher quality and faster time to market.

With streaming data pipelines, there are additional benefits. If we are consuming data from an upstream source, taking the pipeline offline to update it can mean (depending on our architecture and how long we've been offline) that we miss data or, at a minimum, have additional load to process when we come back online. The load spikes that follow downtime can put data latency SLAs at risk, and downtime can also strain downstream applications and consumers of the data. Continuous delivery allows us to make safe, incremental changes without having to take the pipeline offline.

For teams moving to a continuous delivery model for the first time, this can be a little daunting.

Some of the practices, like trunk-based development, require a shift in how developers write code. Everything we check in will now end up in production, so we have to adapt our developer workflow and path to production accordingly.

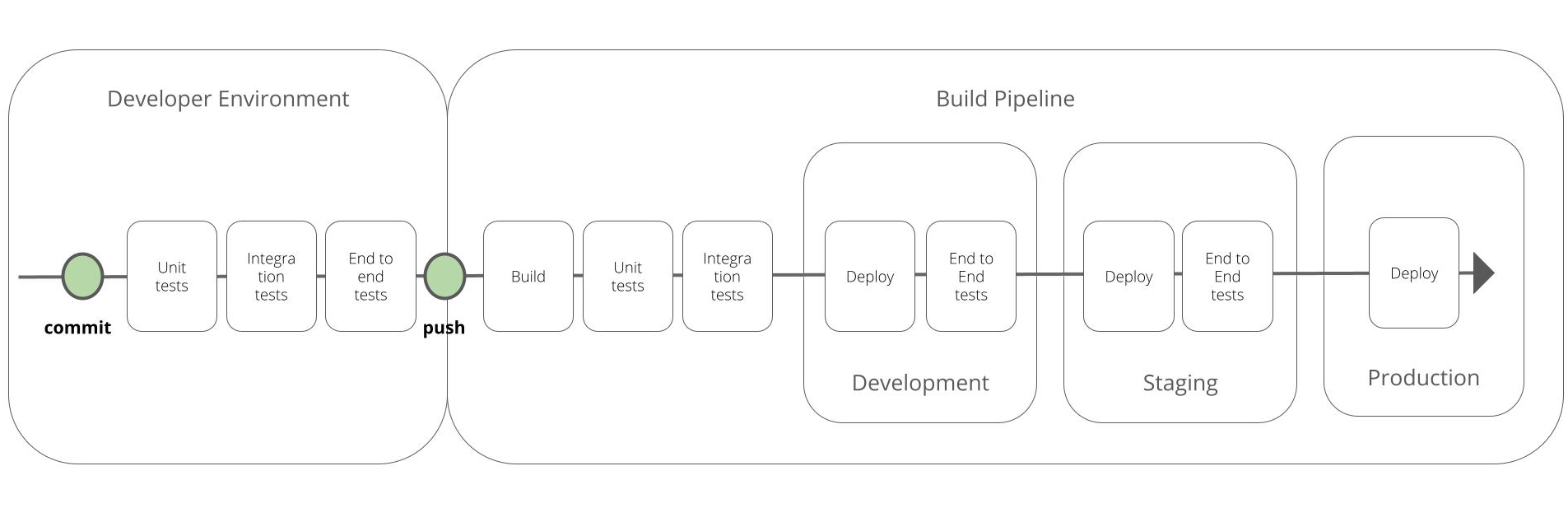

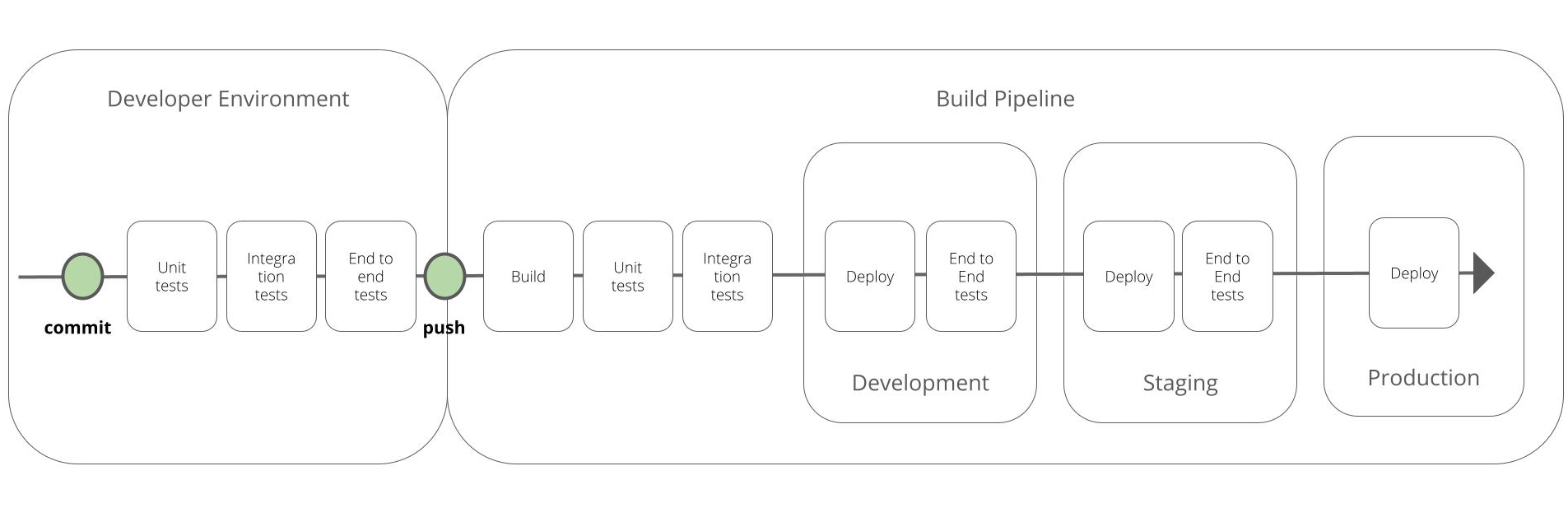

Here is a sample developer workflow and build pipeline for continuous delivery/deployment:

Key components for making this work:

- Automated build pipelines

- Build the artefacts once, then deploy those same artefacts to each environment

- "As code" all the things: infrastructure, configuration, observability and datastore setup

- Continuous integration: adopting a practice like trunk-based development, with developers committing at least daily, removes the complexity and time sink that can come from merging long-running branches before code can be released

- If the build is broken, either fix it quickly (within ~10 minutes) or roll the change back

- A comprehensive test suite comprising unit, integration and end-to-end tests that run automatically; a failed test is the only thing that prevents code from deploying to an environment

- Pre-commit and pre-push hooks that lint code, check for secrets, run the test suites, etc.

- The ability for each developer to run all the test suites locally for fast feedback, e.g. running integration and end-to-end tests in LocalStack or Docker containers, or giving every developer their own Terraform workspace

- Data samples for testing that are representative of the data we expect in production

- An agreed versioning strategy for datasets

- For more complex changes, feature toggles, data toggles or blue-green deployments
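As a rough illustration of the secret-checking hook mentioned in the list above, here is a minimal Python sketch, assuming a hook that scans only the lines added in the staged diff. The two patterns (the shape of an AWS access key ID and a PEM private key header) and the function names `find_secrets` and `main` are hypothetical and far from exhaustive; in practice a dedicated, maintained secret scanner is likely to be a safer choice.

```python
import re
import subprocess
import sys
from typing import List

# Illustrative patterns only (hypothetical, not an exhaustive rule set).
SECRET_PATTERNS = {
    "AWS access key ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private key header": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
}


def find_secrets(diff_text: str) -> List[str]:
    """Return the name of each pattern matched by a line added in the diff."""
    findings = []
    for line in diff_text.splitlines():
        # Only inspect added lines, skipping the "+++ b/<file>" header lines.
        if not line.startswith("+") or line.startswith("+++"):
            continue
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append(name)
    return findings


def main() -> int:
    # Diff of the changes staged for this commit, without context lines.
    diff = subprocess.run(
        ["git", "diff", "--cached", "--unified=0"],
        capture_output=True, text=True, check=True,
    ).stdout
    findings = find_secrets(diff)
    for name in findings:
        # Report the kind of secret without echoing the value itself.
        print(f"Possible secret in staged changes: {name}", file=sys.stderr)
    # A non-zero exit status makes git abort the commit.
    return 1 if findings else 0
```

To wire it up, one option is to save it as an executable `.git/hooks/pre-commit` script (with a Python shebang) that ends with `sys.exit(main())`; git aborts the commit whenever a pre-commit hook exits with a non-zero status.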
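The data toggle and dataset versioning items above can work together. The sketch below shows one possible approach, assuming a change is rolled out by writing the new output to a new dataset version alongside the existing one. All names (`Pipeline`, `transform_v1`, `transform_v2`, `orders_v2_enabled`, `orders_v1`, `orders_v2`) are hypothetical, and in-memory lists stand in for real sinks such as topics or tables.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical record shape: a dict of field name -> value.
Record = Dict[str, object]


def transform_v1(record: Record) -> Record:
    # Existing logic: amount passed through as a decimal value.
    return {"order_id": record["order_id"], "amount": record["amount"]}


def transform_v2(record: Record) -> Record:
    # New logic being rolled out: amount stored as integer cents.
    return {"order_id": record["order_id"], "amount_cents": round(float(record["amount"]) * 100)}


@dataclass
class Pipeline:
    # Toggle state; a real pipeline would read this from configuration at
    # runtime so it can be flipped without redeploying.
    toggles: Dict[str, bool]
    # In-memory stand-in for output datasets, keyed by versioned dataset name.
    sinks: Dict[str, List[Record]] = field(default_factory=dict)

    def process(self, record: Record) -> None:
        # v1 is always written, so existing consumers are unaffected.
        self._write("orders_v1", transform_v1(record))
        # v2 goes to its own dataset version only when the data toggle is on.
        if self.toggles.get("orders_v2_enabled", False):
            self._write("orders_v2", transform_v2(record))

    def _write(self, dataset: str, record: Record) -> None:
        self.sinks.setdefault(dataset, []).append(record)
```

With `orders_v2_enabled` off, only `orders_v1` receives output; with it on, each record is also written to `orders_v2`. Because `orders_v1` keeps being written throughout, the new version can be validated and consumers migrated gradually before v1 is retired, and switching the toggle off stops the new output without rolling back the deployment.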

We have used similar setups to continuously deploy changes to streaming data pipelines running under heavy load with no downtime or impact on other systems.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.