xDD Unplugged

All variants of Driven Development (henceforth the ‘xDDs’) strive to attain focused, minimalistic coding. The premise of lean development is that we should write the minimal amount of code that satisfies our goals. One way to ensure that code solves the given problem, and keeps solving it as it changes over time, is to articulate the problem in machine-readable form, which allows us to validate the code’s correctness programmatically. For this reason, xDD is mainly practiced through testing frameworks, which set the context: a framework runs the goals, expressed as a series of tests, against the code written to fulfill them. In turn, these tests may be used in collaboration with other tools, such as continuous integration tools, as part of the software development cycle.

But defining goals in machine-readable form does not, by itself, guarantee minimalistic development. To solve this, someone had a stroke of genius: the goals, now viewed as tests, should be written prior to writing their solutions. Lean and minimalistic development is attained as we write just enough code to satisfy a failing test. As a developer, I know from past experience that anything I write will ultimately be held against me. It will be criticized by countless people in different roles over a long period of time, until it is ultimately discarded and rewritten. Hence, I strive to write as little code as possible: Vanitas vanitatum et omnia vanitas.
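The test-first loop described above can be sketched in plain Ruby, without any testing framework. This is only an illustration: the `Keyword` class, its `valid?` rule and the `keyword_goal_met?` helper are assumptions made for the sketch, not code from a real project.

```ruby
# Step 1 (red): state the goal as an executable check before any
# implementation exists.
def keyword_goal_met?
  !Keyword.new("").valid? && Keyword.new("election").valid?
end

# Running the goal now fails, because Keyword is not defined yet.
begin
  keyword_goal_met?
  first_run = :passed
rescue NameError
  first_run = :failed
end

# Step 2 (green): write just enough code to satisfy the failing check.
class Keyword
  def initialize(value)
    @value = value
  end

  # A keyword is valid only when it has a non-blank value.
  def valid?
    !@value.to_s.strip.empty?
  end
end

second_run = keyword_goal_met? ? :passed : :failed
puts "before implementation: #{first_run}, after: #{second_run}"
```

Nothing beyond what the check demands was written: there is no persistence, no normalization and no extra API on `Keyword` until a new failing check asks for it.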

However, the shortcomings of this methodology are that we need a broad test suite to cover all the goals of the product, along with a way to ensure that we have implemented the strict minimum that the tests require. I’ll revisit these two points later, but would first like to describe the testing pyramid and enumerate the variants of xDD and their application to the different layers.

Having established when to write the tests (prior to writing code), we now turn to discuss “which” tests we should write, and “how” we should write them.

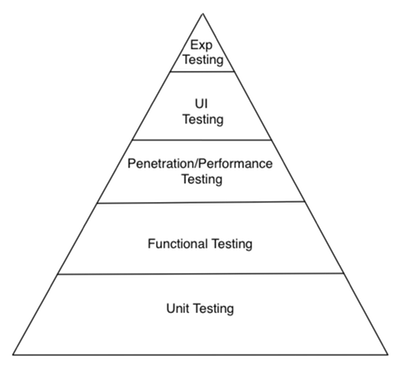

The Testing Pyramid

The testing pyramid depicts the different kinds of tests that are used when developing software. A graphically wider tier depicts a quantitatively larger set of tests than the tier above it, although some projects may be depicted as rectangles when there is high complexity and the testing technology allows for them.

Unit Tests

Although people use the term loosely to denote tests in general, Unit Tests are narrowly focused, isolated and scoped to single functions or methods within an object. Dependencies on external resources are replaced with mocks and stubs.

Example

Using RSpec, a testing framework available for Ruby, the test below ensures that a keyword object must have a value (a keyword created with an empty value is not valid):

    it "should not be null" do
      k1 = Keyword.new(:value => '')
      k1.should_not be_valid
    end

This example shows the use of mocks, which are programmed to return arbitrary values when their methods are called:

    it "returns a newssource" do
      NewsSource.should_receive(:find_by_active).and_return(false)
      news_source = NewsSource.get_news_source
      news_source.should_not == nil
    end

NewsSource.find_by_active is mocked to return false, meaning that no active news source exists, yet the test ensures that one will be created in this scenario.
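To make the mechanics of the mock above concrete, here is a self-contained sketch that does not depend on RSpec. The article does not show the implementation of `NewsSource`, so the bodies of `find_by_active` and `get_news_source`, the `"default"` name and the `with_stubbed_find_by_active` helper are all assumptions for illustration.

```ruby
# Hypothetical stand-in for the article's NewsSource class.
class NewsSource
  attr_reader :name

  def initialize(name)
    @name = name
  end

  # In a real application this would query a database.
  def self.find_by_active
    raise NotImplementedError, "would hit the database"
  end

  # Returns the active source, or creates a default one if none exists.
  def self.get_news_source
    find_by_active || new("default")
  end
end

# Hand-rolled stub: replace the database call with a canned answer,
# run the block, then restore the original method.
def with_stubbed_find_by_active(result)
  original = NewsSource.method(:find_by_active)
  NewsSource.define_singleton_method(:find_by_active) { result }
  yield
ensure
  NewsSource.define_singleton_method(:find_by_active, original)
end

with_stubbed_find_by_active(false) do
  source = NewsSource.get_news_source
  puts source.nil? ? "FAIL: no news source" : "PASS: got #{source.name}"
end
```

The unit under test is `get_news_source` alone; the stub keeps the database out of the picture, which is what keeps the test fast and isolated.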

By virtue of being at the lowest level of the pyramid, Unit Tests serve as a gatekeeper to the source control management system used by the project: these tests run on the developer’s local machine and should prevent code that causes tests to fail from being committed to source control. A countermeasure for cases where developers have committed such code anyway is to have a continuous integration service revert those commits when the tests fail in its environment. When practicing TDD (as a generic term), developers write Unit Tests prior to implementing any function or method.

Functional or Integration Tests

Functional or integration tests span a single end-to-end functional thread. These represent the total interaction of internal and external objects expected to achieve a portion of the application’s desired functionality.

These tests too serve as gatekeepers, but of the promotion model. By definition, passing tests represent allegedly functioning software; hence failures represent software that does not deliver working functionality.

Example

Here we are ensuring that Subscribers, Articles and Notifications work as expected. Real objects are used, not mocks.

    it "should notify even out of hours if times are not enabled" do
      @sub.times_enabled = 0
      @notification = Notification.create!(:subscriber_id => @sub.id, :article_id => @article.id)
      @notification.subscriber.should_notify(Time.parse(@sub.time2).getutc + 1.hour).should be_true
    end

A “BDD” example is:

    Background:
      Given I have subscriptions such as "Obama" and "Putin"
      And "Obama" and "Putin" have notifications
      And I navigate to the NewsAlert web site
      And I choose to log in and enter my RID and the correct password

    Scenario: Seeing notifications
      When I see the "Obama" subscription page
      Then I see the notifications for "Obama"

This is language that a Business Analyst (BA) or Product Owner (PO) can understand and write. If the BAs or POs on your project cannot write these scenarios, or if you find the above too chatty, you can “drop down” to RSpec instead.

Performance and Penetration Tests

Performance and penetration tests are cross-functional and without context. They test performance and security across different scopes of the code by applying expected thresholds to unit and functional threads. At the unit level, they will surface problems with poorly performing methods. At the functional level, poorly performing system interfaces will be highlighted. At the application level, load/stress tests will be applied to selected user flows.

Example

A “BDD” example is:

    Scenario: Measuring notification deletion
      When I decide to remove all "1000" notifications for "Obama"
      Then it takes no longer than 10 milliseconds
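A threshold like the one in the scenario above could be checked with Ruby's standard `Benchmark` module. The sketch below is an assumption-laden illustration: `NotificationStore` is a hypothetical in-memory stand-in for the real notifications, and `within_budget?` is a helper invented here. Wall-clock thresholds also depend on the machine running them, so real suites usually leave some headroom or average over several runs.

```ruby
require "benchmark"

# Hypothetical in-memory store standing in for the article's notifications.
class NotificationStore
  def initialize
    @by_subscription = Hash.new { |hash, key| hash[key] = [] }
  end

  def add(subscription, text)
    @by_subscription[subscription] << text
  end

  def count(subscription)
    @by_subscription[subscription].size
  end

  def remove_all(subscription)
    @by_subscription.delete(subscription)
  end
end

# Returns true when the block finishes within the given budget (in seconds).
def within_budget?(seconds)
  elapsed = Benchmark.realtime { yield }
  elapsed <= seconds
end

store = NotificationStore.new
1000.times { |i| store.add("Obama", "notification #{i}") }

fast_enough = within_budget?(0.010) { store.remove_all("Obama") }
puts "removed all notifications within 10 ms: #{fast_enough}"
```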

User Interface and User Experience Tests

UI/UX tests validate the user’s experience as she uses the system to achieve the business goals for which the application was originally written.

These tests may also validate grammar, layout, style and other standards.

Of all the tests in the pyramid, these are the most fragile. One reason is that the essence is often not separated from the implementation. The UI will likely have the greatest rate of change in a given project as product owners are exposed to working software iteratively. UI tests that rely heavily on the UI’s physical layout will need rework as the system undergoes change. Being able to express the essence, or desired behaviour, of the thread under test is key to writing maintainable UI tests.

Example

    Feature: NewsAlert user is able to log in

      Background:
        Given I am a Mobile NewsAlert customer
        And I navigate to the NewsAlert web site
        Then I am taken to the home page which has "Log in" and "Activate" buttons

      Scenario: Login
        When I am signed up
        When I choose to log in and enter my ID and the correct password
        Then I am logged in to my account settings page
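One common way to keep the essence apart from the physical layout is a page object: the test states the behaviour ("log in") while a single class owns the selectors. The following is a minimal sketch under stated assumptions; `FakeBrowser`, `LoginPage` and the CSS selectors are hypothetical and stand in for whatever browser driver and markup a real project uses.

```ruby
# Hypothetical fake browser: records filled fields and clicked elements.
class FakeBrowser
  attr_reader :fields, :clicked

  def initialize
    @fields = {}
    @clicked = []
  end

  def fill(selector, value)
    @fields[selector] = value
  end

  def click(selector)
    @clicked << selector
  end
end

# Page object: the only place that knows the physical layout of the page.
class LoginPage
  ID_FIELD = "#login-form input.id"
  PASSWORD_FIELD = "#login-form input.password"
  SUBMIT_BUTTON = "#login-form button.submit"

  def initialize(browser)
    @browser = browser
  end

  # Expresses the behaviour ("log in") rather than the clicks behind it.
  def log_in(id, password)
    @browser.fill(ID_FIELD, id)
    @browser.fill(PASSWORD_FIELD, password)
    @browser.click(SUBMIT_BUTTON)
  end
end

browser = FakeBrowser.new
LoginPage.new(browser).log_in("customer-42", "secret")
puts browser.clicked.inspect
```

When the layout changes, only the constants in `LoginPage` need to change; every scenario that says "I choose to log in" stays as it is.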

Exploratory Testing

Above “UI Tests”, at the apex of the pyramid, we find “Exploratory Testing”, where team members perform unscripted tests to find corners of functionality not covered by other tests. Successful ones are then redistributed down to the lower tiers as formally scripted tests. Since these are unscripted, we’ll not cover them further here.

Flavors of Test Driven Development

In my opinion, xDDs are basically the same, deriving from the generic term of “Test Driven Development”, or TDD. When thinking of TDD and all other xDDs, please bear in mind the introductory section above: we develop the goals (tests) prior to developing the code that will satisfy them. Hence, the “driven” suffix: nothing but the tests drives our development efforts. Given a testing framework and a methodology, we can implement a system purely by writing code that satisfies the sum of its tests.

The dichotomy of the different xDDs can be explained by their function and target audience. Generically, and falsely, TDD would most probably denote the writing of Unit Tests by developers as they implement objects and need to justify methods therein and their implementation. Applied to non-object oriented development, Unit Tests would be written to test single functions.

The reader may contest the idea that this is the first step in a “driven” system. To have methods under test, one must have their encapsulating objects, themselves born of an analysis as yet unexpressed. Subscribing to this logic, I usually recommend development using BDD. Behavior-driven development documents the system’s specification by example (Specification by Example is also a must-read book), regardless of implementation details. This allows us to distinguish and isolate the specification of the application by describing its value to its consumer, with the goal of ignoring implementation and user interactions.

This has great consequences in software development. As one writes BDD scripts, one shows commitment and rationale to their inherent business value. Nonsensical requirements may be promptly pruned from the test suite and thus from the product, establishing a way to develop lean products, not only their lean implementation.

BDD may be used for all these layers, as in some instances it is more convenient to use the User Story format for integration tests than low-level Unit Test syntax. A more technical term is Acceptance Test Driven Development (ATDD). This flavor is the same as BDD, but implies that the tests on Agile story cards are being expressed programmatically. Here, the acceptance criteria for stories are translated into machine-readable acceptance tests. ATDD is put to full use if the project is staffed with Product Owners who are comfortable using the English-like syntax of Gherkin (see the example below). If they are absent or unwilling, BAs may take on this task. If neither are available or willing, developers usually “drop down” to a more technical syntax such as that used in RSpec, in order to remove what they refer to as “fluff”. I would recommend writing Gherkin, as it serves as a functional specification that can be readily communicated to non-technical people as a reminder of how they intended the system to function.
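How English-like acceptance criteria become machine-readable can be sketched with a toy step registry. This is not how any particular Gherkin runner is implemented; `STEPS`, `step`, `run_step` and the `world` hash are names invented for this illustration, and the step wording is simplified from the scenarios in this article.

```ruby
# Toy step registry: pairs sentence patterns with the code that runs them.
STEPS = []

def step(pattern, &block)
  STEPS << [pattern, block]
end

# Strips the leading keyword, finds the first matching step and runs it
# with the shared state ("world") and the values captured from the sentence.
def run_step(sentence, world)
  text = sentence.sub(/\A(Given|When|Then|And|But)\s+/, "")
  STEPS.each do |pattern, block|
    match = pattern.match(text)
    return block.call(world, *match.captures) if match
  end
  raise ArgumentError, "undefined step: #{sentence}"
end

step(/\AI have a subscription for "(.+)"\z/) do |world, name|
  world[:subscriptions] << name
end

step(/\AI see the "(.+)" subscription page\z/) do |world, name|
  world[:page] = name
end

step(/\AI see the notifications for "(.+)"\z/) do |world, name|
  raise "expected page #{name}, got #{world[:page]}" unless world[:page] == name
end

world = { subscriptions: [], page: nil }
[
  'Given I have a subscription for "Obama"',
  'When I see the "Obama" subscription page',
  'Then I see the notifications for "Obama"'
].each { |sentence| run_step(sentence, world) }
puts "scenario passed"
```

The sentences remain readable by Product Owners and BAs, while the blocks behind them are free to change as the implementation evolves.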

As software development grows to encompass Infrastructure as Code (IaC), there are now ways to express hardware expectations using Monitor-driven Development (MDD). MDD applies the same principles of Lean development to code that represents machines (virtual or otherwise).

Example

This example will actually provision a VM, configure it to install MySQL and drop the VM at the end of the test.

    Feature: App deploys to a VM

      Background:
        Given I have a vm with ip "33.33.33.33"

      Scenario: Installing mySQL
        When I provision users on it
        When I run the "dbserver" ansible playbook
        Then I log on as "deployer", then "mysql" is installed
        And I can log on as "deployer" to mysql
        Then I remove the VM

The full example can be viewed here.

ServerSpec gives us bliss:

    describe service('apache2') do
      it { should be_enabled }
      it { should be_running }
    end

    describe port(80) do
      it { should be_listening }
    end
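To illustrate what an expectation such as "port 80 should be listening" boils down to, here is a rough sketch that uses only Ruby's standard `socket` library. It is not how ServerSpec is implemented; the `listening?` helper is an assumption for this example, and it probes a throwaway local server on a port chosen by the operating system rather than a real web server.

```ruby
require "socket"

# Returns true if something accepts TCP connections on host:port.
def listening?(host, port)
  Socket.tcp(host, port, connect_timeout: 1) { true }
rescue SystemCallError, SocketError, IOError
  false
end

host = "127.0.0.1"
server = TCPServer.new(host, 0) # port 0 asks the OS for any free port
port = server.addr[1]

up = listening?(host, port)
server.close
down = listening?(host, port)

puts "listening while the server is up: #{up}"
puts "listening after shutdown: #{down}"
```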

For a more extreme example of xDD, please refer to my blog entry regarding Returns-driven Development (RDD) for writing tests from a business-goal perspective.

Caution

The quality of the tests is measured by how precisely they test the code at their respective levels, by how much code or responsibility each of them spans, and by the quality of their assertions.

Unit tests that do not use stubs or mocks when accessing external services of any kind are probably testing too much and will be slow to execute. Slow test suites eventually become a bottleneck and are likely to be abandoned by the team. Conversely, testing basic compiler functions will lead to a huge test suite that gives a false indication of the breadth of the safety net it provides.

Similarly, tests that lack correct assertions are testing nothing at all, while tests that have too many of them are testing too much.
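The difference is easy to demonstrate. In the sketch below, `total_with_tax` is a hypothetical function with a deliberate bug, and `check` is a tiny runner invented for this example in which a check passes whenever its block does not raise.

```ruby
# Hypothetical function under test, with a deliberate bug:
# it ignores the tax rate.
def total_with_tax(amount, rate)
  amount
end

# Tiny runner: a check passes when its block does not raise.
def check(name)
  yield
  "PASS #{name}"
rescue StandardError => e
  "FAIL #{name}: #{e.message}"
end

# Exercises the code but asserts nothing, so it cannot fail.
weak = check("no assertion") { total_with_tax(100, 0.2) }

# One focused assertion exposes the bug.
focused = check("adds tax") do
  result = total_with_tax(100, 0.2)
  raise "expected 120.0, got #{result}" unless result == 120.0
end

puts weak
puts focused
```

The first check adds to the test count while protecting nothing; the second fails, as it should, until the function actually applies the rate.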

Yet there is a paradox: the tests’ importance and impact are inversely proportional to their robustness in the pyramid. In other words, as we climb the tiers, the tests become more important, yet less robust and trustworthy at the same time. A major pitfall at the upper levels is the lack of application or business-logic coverage. I was on a project that had hundreds of passing tests, yet the product failed in production, as external interfaces were neither mocked, simulated nor tested adequately. Our pyramid’s peak was bare, and the product’s shortcomings were immediately visible in production. Such may be the fate of any system that interacts with external systems across different companies; lacking dedicated environments, one must resort to simulating their interfaces, something that comes with its own risks.
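Simulating an external interface can look like the sketch below, which also simulates the interface failing, since that is often the behaviour that goes untested. Everything here is hypothetical: the SMS gateway, the `GatewayTimeout` error and the retry policy in `Notifier` are assumptions for illustration and do not describe the project mentioned above. The risk remains that the simulator drifts from the behaviour of the real system.

```ruby
# Hypothetical error raised when the partner system does not answer.
class GatewayTimeout < StandardError; end

# Simulator for an external gateway owned by another company.
# It fails the first `fail_first` deliveries, then succeeds.
class FakeSmsGateway
  attr_reader :sent

  def initialize(fail_first: 0)
    @failures_left = fail_first
    @sent = []
  end

  def deliver(number, text)
    if @failures_left > 0
      @failures_left -= 1
      raise GatewayTimeout, "simulated timeout"
    end
    @sent << [number, text]
    true
  end
end

# Code under test: retries delivery a limited number of times.
class Notifier
  def initialize(gateway, max_attempts: 3)
    @gateway = gateway
    @max_attempts = max_attempts
  end

  # Returns true on delivery, false once all attempts have timed out.
  def notify(number, text)
    attempts = 0
    begin
      attempts += 1
      @gateway.deliver(number, text)
    rescue GatewayTimeout
      retry if attempts < @max_attempts
      false
    end
  end
end

flaky = FakeSmsGateway.new(fail_first: 2)
puts "delivered despite two timeouts: #{Notifier.new(flaky).notify('555-0100', 'New article')}"

down = FakeSmsGateway.new(fail_first: 5)
puts "delivered while gateway is down: #{Notifier.new(down).notify('555-0100', 'New article')}"
```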

We quickly found that the art and science of software development is no different than the art and science of contriving its tests. It is for this reason that I rely on the “driven” methodologies to save me from my own misdoings.

In Summary

TDD in its different guises is an activity whereby a feature is implemented by describing its behavior from its user’s perspective.

Describing the intended behavior as acceptance criteria drives us to adopt the habit of writing only as much code as is needed to fulfill the test.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.