XR (extended reality) is one of the most exciting and most-hyped emerging technologies. It’s important to note that XR comes in a number of different forms and implementations. This can often create confusion, so it’s worth clarifying. The key ways in which XR is implemented in real-world products are:

Augmented reality (AR) overlays digital content on the live environment.

Virtual reality (VR) delivers content through wearable devices. Here, the whole environment is virtual and computer generated.

Mixed reality (MR) combines both, blending virtual content with the physical environment.

Mobile XR delivers content through smartphones or tablets.

WebXR delivers content through AR/VR-enabled web products. (Today, you can build XR properties with something as simple as JavaScript libraries.)

Heads-up display (HUD) is content projected using specific devices; the most common consumer versions are for cars.

There is already a wealth of examples of XR being used in different industries. Smart mirrors, for example, are gaining popularity in both retail, where they're used to explore potential purchasing options, and leisure, where personal trainers can use them to guide clients through new exercises.

However and wherever XR is implemented, a key challenge is content creation. Nothing, after all, can be extended without new content. XR depends on dynamism — we need to be able to create content that supports and facilitates such dynamism.

Content challenges in XR adoption

The journey toward creating 3D content for XR is filled with speed bumps. At the content generation level, there are several silos. These can lead to content being created that isn't shareable or usable. Even if we do manage to create content for XR applications, questions about management, access control and versioning still remain.

Consumption is difficult too. There are a number of reasons for this, but the main issue is that most devices today are bulky and heavy, which makes consumption difficult for end users. Moreover, publishing protocols like HTTP, streaming or WebRTC just aren't sufficient.

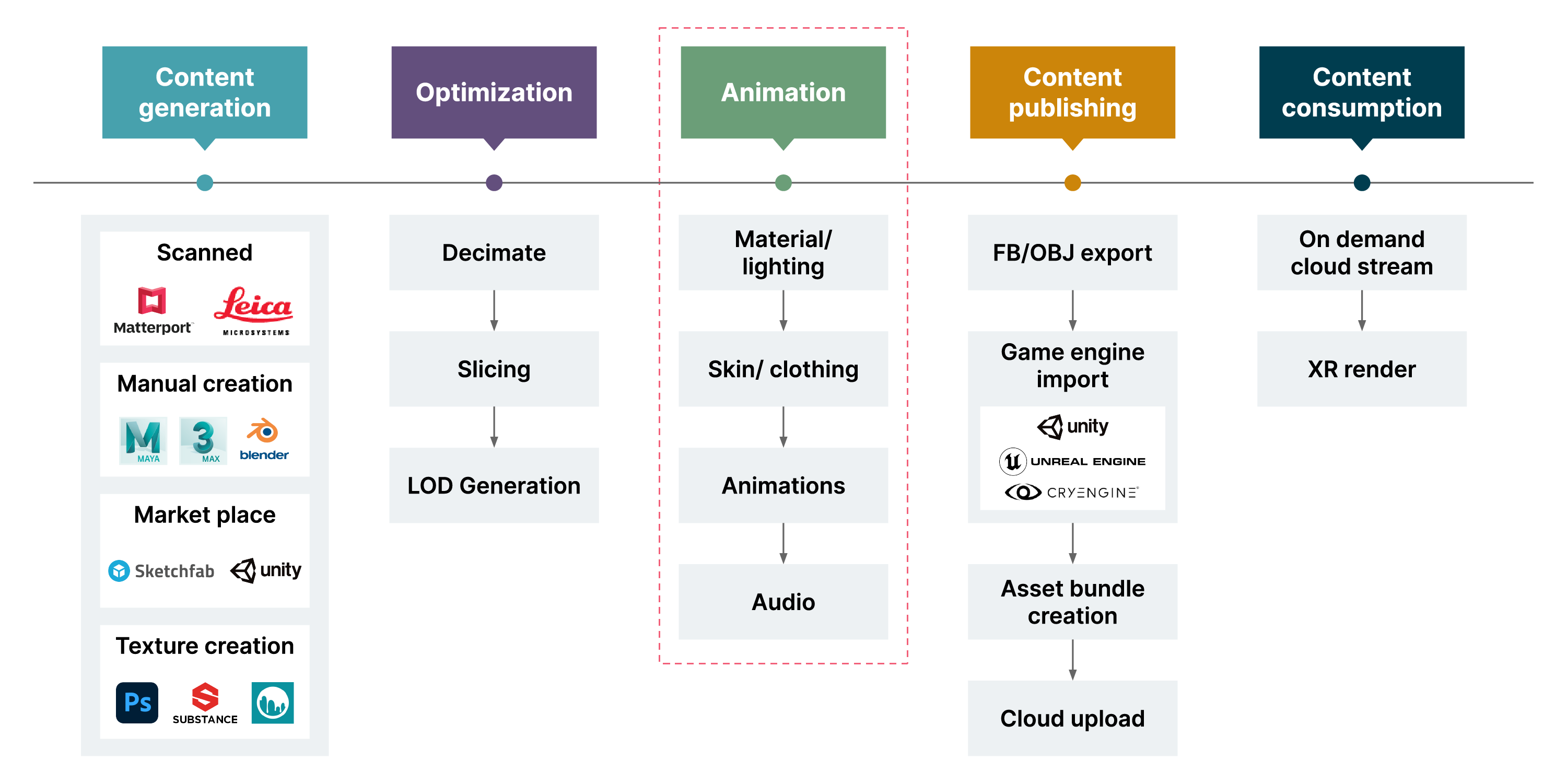

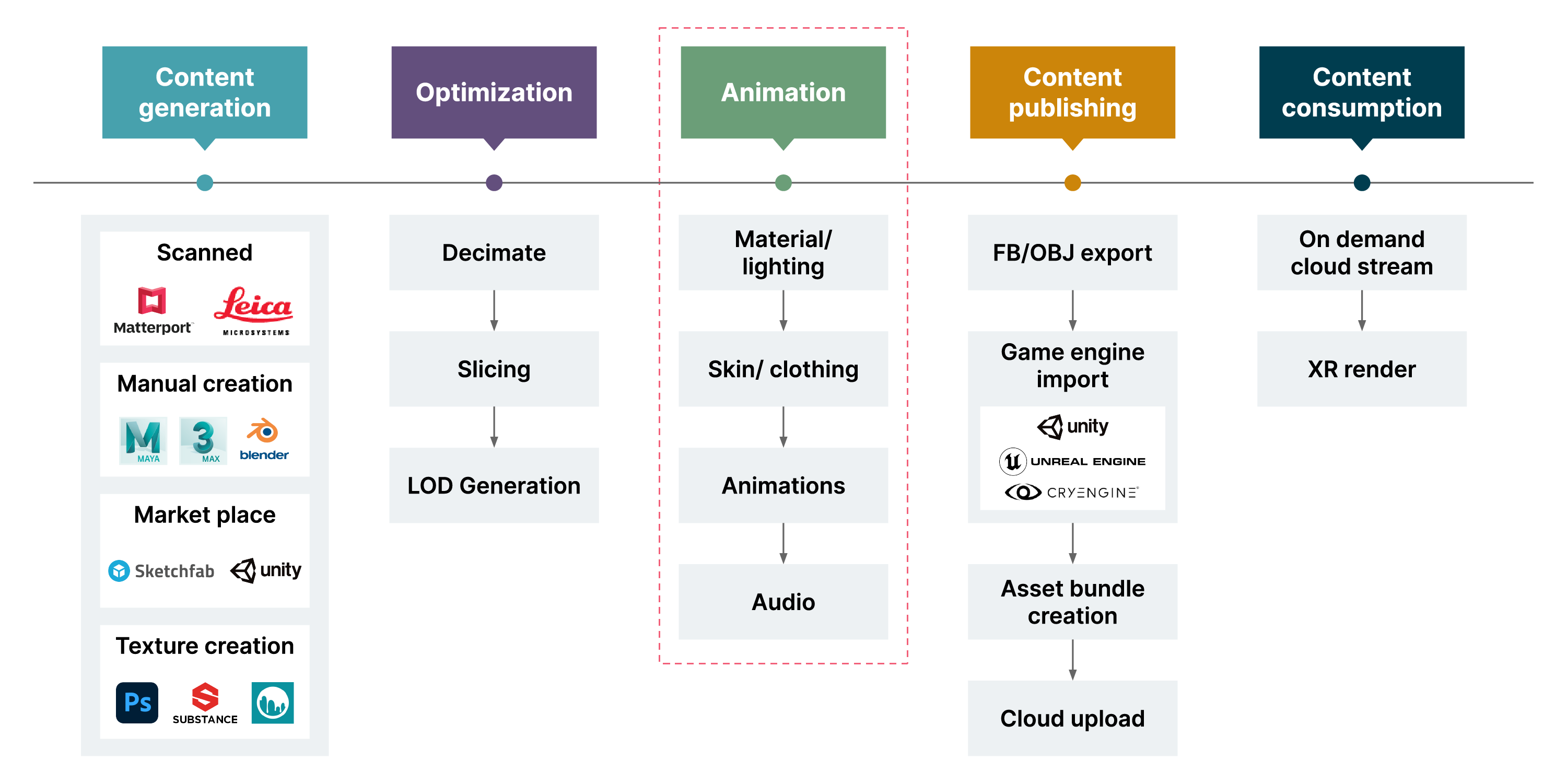

The diagram below demonstrates the extent of the complexity of an XR content development pipeline:

XR content management: a typical flow

Let’s explore each of these steps in a little bit more detail.

Content generation

The basic unit of a 3D model's construction is a point, defined by three coordinates: x, y and z. Hundreds or thousands of points brought together make a "point cloud." These points are then connected into a mesh using triangulation or another mesh generation algorithm. A typical model has millions of points and polygons, which makes it too heavy for consumer XR devices. This is why models need to be optimized.
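To make the step from points to a mesh concrete, here is a minimal sketch in plain Python. It assumes the points sit on a regular grid (a simple height field), so each grid cell can be split into two triangles. Real scans are unstructured and need algorithms such as Delaunay triangulation or surface reconstruction; the function names here are illustrative, not taken from any particular tool.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]
Triangle = Tuple[int, int, int]  # indices into the point list


def make_grid_points(rows: int, cols: int) -> List[Point]:
    """Sample an illustrative height field z = 0.1 * x * y on a rows x cols grid."""
    return [(float(c), float(r), 0.1 * c * r) for r in range(rows) for c in range(cols)]


def triangulate_grid(rows: int, cols: int) -> List[Triangle]:
    """Connect a row-major grid of points into triangles, two per grid cell."""
    triangles: List[Triangle] = []
    for r in range(rows - 1):
        for c in range(cols - 1):
            top_left = r * cols + c
            top_right = top_left + 1
            bottom_left = top_left + cols
            bottom_right = bottom_left + 1
            triangles.append((top_left, top_right, bottom_left))
            triangles.append((top_right, bottom_right, bottom_left))
    return triangles


points = make_grid_points(rows=4, cols=5)
triangles = triangulate_grid(rows=4, cols=5)
print(len(points), "points ->", len(triangles), "triangles")
```

A 4 x 5 grid has 20 points and 12 cells, so the sketch produces 24 triangles. The same ratio at scan resolution is what pushes real models into millions of polygons.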

Optimization

With XR content editing tools such as Blender, 3ds Max or Maya, developers reduce the size of the content, slicing it based on the level of detail a given application needs.
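As a rough illustration of what reducing the size of a model means, the sketch below uses vertex clustering: vertices that fall into the same cubic cell are merged into their average, and triangles that collapse are dropped. This is a deliberately simple stand-in, not what the tools above do internally (they typically offer more sophisticated methods such as edge collapse), and the `cell_size` parameter is an assumption that plays the role of the level of detail.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]
Triangle = Tuple[int, int, int]


def simplify_by_clustering(
    vertices: List[Point], triangles: List[Triangle], cell_size: float
) -> Tuple[List[Point], List[Triangle]]:
    """Merge vertices sharing a cubic cell, then drop collapsed or duplicate triangles."""
    cell_to_new: Dict[Tuple[int, int, int], int] = {}
    sums: List[List[float]] = []  # running coordinate sums per merged vertex
    counts: List[int] = []        # number of original vertices per merged vertex
    remap: List[int] = []         # old vertex index -> merged vertex index

    for x, y, z in vertices:
        cell = (int(x // cell_size), int(y // cell_size), int(z // cell_size))
        if cell not in cell_to_new:
            cell_to_new[cell] = len(sums)
            sums.append([0.0, 0.0, 0.0])
            counts.append(0)
        new_index = cell_to_new[cell]
        sums[new_index][0] += x
        sums[new_index][1] += y
        sums[new_index][2] += z
        counts[new_index] += 1
        remap.append(new_index)

    new_vertices = [(s[0] / n, s[1] / n, s[2] / n) for s, n in zip(sums, counts)]

    new_triangles: List[Triangle] = []
    seen = set()
    for a, b, c in triangles:
        a, b, c = remap[a], remap[b], remap[c]
        if a == b or b == c or a == c:
            continue  # the triangle collapsed to a line or a point
        key = frozenset((a, b, c))
        if key in seen:
            continue  # the same triangle was already emitted
        seen.add(key)
        new_triangles.append((a, b, c))
    return new_vertices, new_triangles


# Illustrative input: a flat 5 x 5 grid of vertices with two triangles per cell.
size = 5
vertices = [(float(c), float(r), 0.0) for r in range(size) for c in range(size)]
triangles = []
for r in range(size - 1):
    for c in range(size - 1):
        i = r * size + c
        triangles.append((i, i + 1, i + size))
        triangles.append((i + 1, i + size + 1, i + size))

new_vertices, new_triangles = simplify_by_clustering(vertices, triangles, cell_size=2.0)
print(len(vertices), len(triangles), "->", len(new_vertices), len(new_triangles))
```

With a cell size of 2.0, the 25 vertices and 32 triangles shrink to 9 vertices and 8 triangles. Running the same function with several cell sizes would give a crude set of levels of detail for one asset.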

Animation

For a virtual world to feel real, it needs to engage our senses. XR can now address sight and sound through lighting, audio, color, clothing and material. Haptic devices for touch are also gaining popularity.

Publishing

XR content is published by exporting it to a format that game engines can consume, and by making reusable asset bundles available to XR developers. Developers typically export to FBX, OBJ, GLB or glTF, use tools such as Unity, Unreal or Blender for import and asset bundling, and then upload or share the result through the cloud or asset stores.
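Of the formats mentioned, OBJ is the simplest to show by hand because it is plain text: one `v` line per vertex and one `f` line per face, with face indices starting at 1. The sketch below is a minimal writer for triangle meshes only, assuming no normals, texture coordinates or materials; in practice the export would come from the modeling tool or engine rather than hand-written code.

```python
import io
from typing import List, TextIO, Tuple

Point = Tuple[float, float, float]
Triangle = Tuple[int, int, int]  # zero-based vertex indices


def write_obj(out: TextIO, vertices: List[Point], triangles: List[Triangle]) -> None:
    """Write a triangle mesh as Wavefront OBJ text (geometry only)."""
    out.write("# minimal OBJ export\n")
    for x, y, z in vertices:
        out.write(f"v {x:.6f} {y:.6f} {z:.6f}\n")
    for a, b, c in triangles:
        # OBJ face indices are 1-based, so shift each index by one.
        out.write(f"f {a + 1} {b + 1} {c + 1}\n")


# Illustrative mesh: a unit square split into two triangles.
quad_vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
quad_triangles = [(0, 1, 2), (0, 2, 3)]

buffer = io.StringIO()
write_obj(buffer, quad_vertices, quad_triangles)
obj_text = buffer.getvalue()
print(obj_text)
```

Writing to a text stream keeps the function usable with a file on disk or with an in-memory buffer that is then uploaded elsewhere.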

Consumption

Cloud stream and XR Render enable remote content consumption. Rendering XR content on a head-mounted or mobile device makes heavy demands on compute, network and power. Cloud and edge computing, combined with next-generation networking (5G/6G), make it possible to render XR content remotely on the edge or in the cloud instead. This will enable more real-life use cases to run on XR devices.

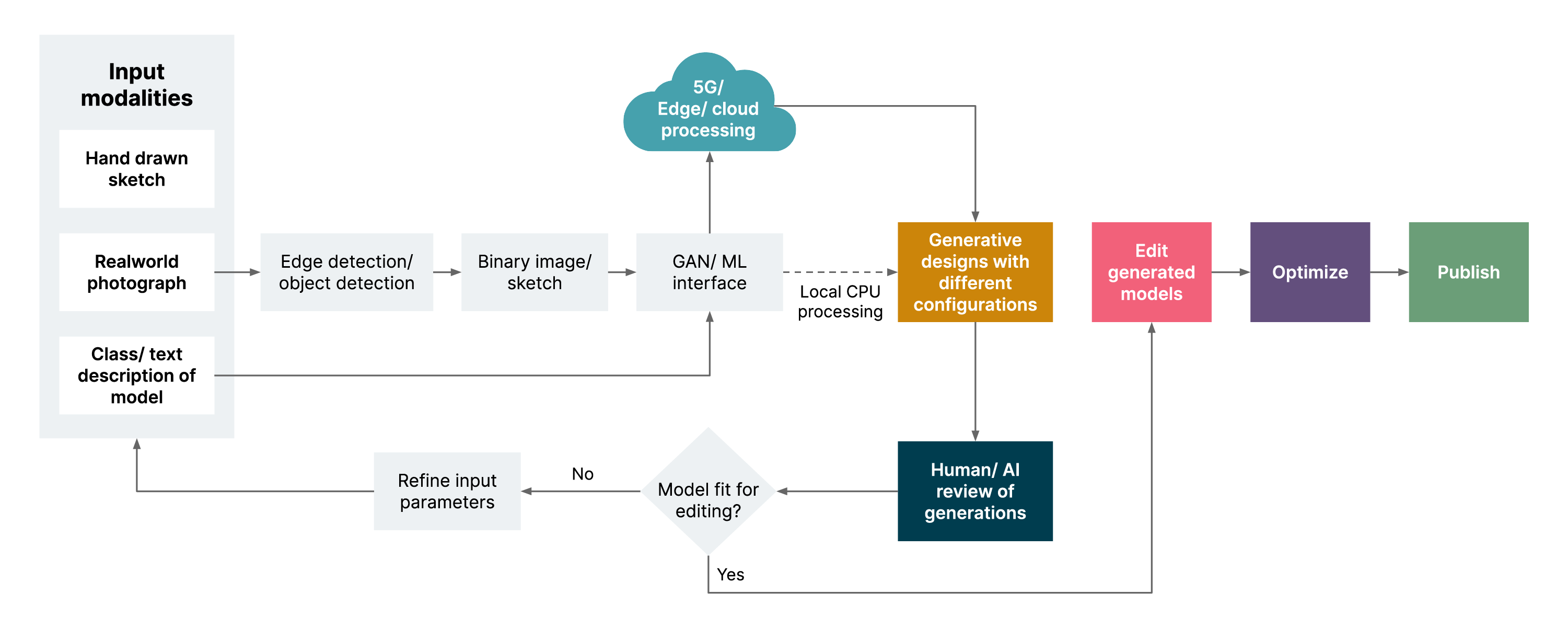

The biggest challenge with all these processes today is that they are manual and, therefore, not scalable. That’s why AI can add significant value — new content can be produced algorithmically.

Artificial intelligence for XR content

There are a number of ways AI can support us when it comes to XR content — even beyond creation. It can also help us manage and publish content.

Here are a few of the ways AI is being used for XR content in practice.

Content pipeline automation

One of our clients had a huge volume of XR content that wasn't ready for consumption because their content pipeline was highly manual. To address this, we built a pre-processing API for all their 3D assets. The API distributes pipeline operations across a cluster of nodes.

We set up the pre-processing pipeline using the Blender CLI in the cloud and performed model optimization and format conversion using Python scripts. We then Dockerized the Blender scripts and deployed them on Kubernetes clusters. After that, the XR-ready output was cached in a file store. This significantly reduced the manual burden of processing 3D models and accelerated the journey to consumption.
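A pipeline like this usually comes down to running Blender headlessly, once per asset. The sketch below only builds the command line a worker might run; it is not the client implementation. The `--background` and `--python` options and the `--` separator are standard Blender command-line arguments, while the script name `optimize_asset.py`, its `--input`, `--output` and `--ratio` arguments, and the cache-key scheme are hypothetical names chosen for illustration.

```python
import hashlib
from typing import List


def build_blender_command(script: str, source: str, target: str, ratio: float) -> List[str]:
    """Build a headless Blender invocation for one asset (the command is not executed here)."""
    return [
        "blender",
        "--background",      # run without opening the UI
        "--python", script,  # Python script that Blender executes
        "--",                # everything after this is passed through to the script
        "--input", source,   # hypothetical script arguments
        "--output", target,
        "--ratio", str(ratio),
    ]


def cache_key(source: str, ratio: float, fmt: str) -> str:
    """Derive a stable file-store key from the inputs that affect the output (hypothetical scheme)."""
    digest = hashlib.sha256(f"{source}|{ratio}|{fmt}".encode("utf-8")).hexdigest()
    return f"{digest[:16]}.{fmt}"


# Illustrative asset names; a real service would read these from a queue or an API request.
for source in ["chair.fbx", "table.obj"]:
    target = "out/" + cache_key(source, ratio=0.5, fmt="glb")
    command = build_blender_command("optimize_asset.py", source, target, ratio=0.5)
    print(" ".join(command))
```

Each worker in the cluster could pass such a list to `subprocess.run` inside the Blender container. Keying the output on its inputs means a repeated request for the same asset and settings can be served from the file store instead of being processed again.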

AI for XR content generation

To make XR a reality, we need data at scale. One way of getting it is to replicate the physical environment, creating a digital twin of the real world. Apple's RoomPlan API, photogrammetry scans and hardware-assisted 3D scans have all been used successfully for this. Elsewhere, facial reconstruction from monocular photos can help us generate avatars.

Google has also been working on creating 3D models from a textual description of the visual. You can write something simple, like “I need a chair with two armrests,” and the algorithm will create a 3D model from your request. The Unity Art Engine also uses AI for texturing, pattern unwrapping and denoising.

ArchiGAN can generate models for architecture without the help of an architect/engineer. Users can feed their descriptions as sketches or text, and it’ll automatically generate models on the fly. This is not just for rooms or interiors but also for large buildings, roads, flyovers etc., including configurations for fake cities/malls and adaptive floor plans.

Training deep learning on 3D model data

To do all of this, machine learning and deep learning models need to be trained on 3D data. This isn't completely different from using images to train an image recognition algorithm; it is, however, much more complex. An image is a regular grid of pixels, each represented by three values (R, G and B). 3D data has no such fixed grid, so the datasets and their representations are much more sophisticated.

The structural definitions of 3D data for deep learning use cases are still evolving. 3D point clouds (such as LiDAR data) are commonly available to represent 3D objects. However, before deep learning models can process point clouds, the data needs a suitable structural representation. PointNet and VoxelNet both provide possible structural definitions of 3D models for machine learning (ML) consumption. PointNet provides a unified architecture for classification and segmentation directly on 3D point clouds, whereas VoxelNet divides the point cloud into equally spaced 3D voxels.
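The difference between the two representations can be sketched in a few lines of plain Python. The first function groups points into equally spaced voxels, which is the partitioning idea behind VoxelNet. The second computes an order-independent summary by taking a maximum over all points, which mirrors the max-pooling step PointNet uses to cope with unordered input. Both are simplified illustrations under that assumption: the learned layers of the real networks are omitted, and the function names are made up for this example.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]
Voxel = Tuple[int, int, int]


def voxelize(points: List[Point], voxel_size: float) -> Dict[Voxel, List[Point]]:
    """Group points into equally spaced cubic voxels, keyed by integer grid coordinates."""
    grid: Dict[Voxel, List[Point]] = defaultdict(list)
    for x, y, z in points:
        key = (int(x // voxel_size), int(y // voxel_size), int(z // voxel_size))
        grid[key].append((x, y, z))
    return dict(grid)


def global_max_feature(points: List[Point]) -> Point:
    """Per-axis maximum over all points; the result does not depend on point order."""
    return (
        max(p[0] for p in points),
        max(p[1] for p in points),
        max(p[2] for p in points),
    )


# Illustrative point cloud with five points.
points = [
    (0.1, 0.2, 0.3),
    (0.4, 0.1, 0.2),
    (1.2, 0.3, 0.1),
    (1.4, 1.6, 0.2),
    (0.2, 0.3, 0.4),
]

voxels = voxelize(points, voxel_size=1.0)
for key in sorted(voxels):
    print(key, len(voxels[key]), "point(s)")

# Shuffling the input must not change the pooled summary.
print(global_max_feature(points) == global_max_feature(list(reversed(points))))
```

Voxelizing gives the data a grid structure similar to an image, at the cost of resolution, while the pooling approach keeps the raw points and relies on an operation that ignores their order.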

ShapeNet offers a large-scale library of richly annotated 3D shapes for training ML models. Its ShapeNetCore subset contains roughly 51,300 unique 3D models across 55 common object categories.

AI-assisted 3D modeling

If we bring AI into the XR content pipeline, the possible steps might include handling various inputs (sketches, photographs etc.) and using advanced processing capabilities (driven by edge/5G/cloud) to generate content. Humans or an AI system could then review that content and feed any further optimizations back into the process. Once the design is deemed acceptable, it can be published.

If XR is to become truly mainstream and deliver significant business value, artificial intelligence will play a vital role. As we have demonstrated, this is already happening; as research and applications progress, we will see new opportunities and possibilities emerge. It will be up to us all in the industry to seize them and build extraordinary experiences.

Disclaimer: The statements and opinions expressed in this article are those of the author(s) and do not necessarily reflect the positions of Thoughtworks.